Jul 23, 2013

The Size of Electronic Consumer Devices Affects Our Behavior

Re-blogged from Textually.org

What are our devices doing to us? We already know they're snuffing out our creativity, but new research suggests they're also stifling our drive. How so? Because fussing with them for an average of 58 minutes a day leads to bad posture, FastCompany reports.

The body posture inherent in operating everyday gadgets affects not only your back but also your demeanor, according to a new experimental study entitled iPosture: The Size of Electronic Consumer Devices Affects Our Behavior. It turns out that working on a relatively large machine (like a desktop computer) causes users to act more assertively than working on a small one (like an iPad). The poorer posture that small devices impose, Harvard Business School researchers Maarten Bos and Amy Cuddy find, undermines our assertiveness.

Read more.

22:23 Posted in Research tools, Wearable & mobile

SENSUS Transcutaneous Pain Management System Approved for Use During Sleep

Via Medgadget

NeuroMetrix, based in Waltham, MA, has received FDA clearance for its SENSUS Pain Management System to be used by patients during sleep. This is the first transcutaneous electrical nerve stimulation (TENS) system to receive a sleep indication from the FDA for pain control.

The device is designed for use by diabetics and others with chronic pain in the legs and feet. It’s worn around one or both legs and delivers an electrical current to disrupt pain signals being sent up to the brain.

22:18 Posted in Biofeedback & neurofeedback, Wearable & mobile

Digital Assistance for Sign-Language Users

Via MedGadget

From Microsoft:

"In this project, which is being shown during the DemoFest portion of Faculty Summit 2013, which brings more than 400 academic researchers to Microsoft headquarters to share insight into impactful research, the hand tracking leads to a process of 3-D motion-trajectory alignment and matching for individual words in sign language. The words are generated via hand tracking by the Kinect for Windows software and then normalized, and matching scores are computed to identify the most relevant candidates when a signed word is analyzed.

The algorithm for this 3-D trajectory matching, in turn, has enabled the construction of a system for sign-language recognition and translation, consisting of two modes. The first, Translation Mode, translates sign language into text or speech. The technology currently supports American Sign Language but has potential for all varieties of sign language."
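The Microsoft description does not say which alignment algorithm is used. As an illustration only, the sketch below swaps in dynamic time warping (DTW), a standard way to align and score trajectories performed at different speeds. The names `normalize`, `dtw_distance` and `rank_candidates`, and the toy vocabulary in the demo, are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) hand position from a depth sensor


def normalize(traj: Sequence[Point]) -> List[Point]:
    """Centre a trajectory on its centroid and scale it so the farthest point is at distance 1."""
    n = len(traj)
    cx = sum(p[0] for p in traj) / n
    cy = sum(p[1] for p in traj) / n
    cz = sum(p[2] for p in traj) / n
    centred = [(x - cx, y - cy, z - cz) for x, y, z in traj]
    scale = max(math.sqrt(x * x + y * y + z * z) for x, y, z in centred) or 1.0
    return [(x / scale, y / scale, z / scale) for x, y, z in centred]


def dtw_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Dynamic time warping cost: aligns two trajectories signed at different speeds."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])  # Euclidean distance between 3-D points
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def rank_candidates(query: Sequence[Point], vocabulary: Dict[str, Sequence[Point]],
                    top_k: int = 3) -> List[str]:
    """Return the top_k words whose stored trajectories best match the query (lowest DTW cost)."""
    q = normalize(query)
    scores = {word: dtw_distance(q, normalize(t)) for word, t in vocabulary.items()}
    return sorted(scores, key=scores.get)[:top_k]


if __name__ == "__main__":
    line = [(t / 10, 0.0, 0.0) for t in range(11)]
    circle = [(math.cos(2 * math.pi * t / 20), math.sin(2 * math.pi * t / 20), 0.0) for t in range(21)]
    # The same straight stroke, signed more slowly, larger, and at a different position.
    query = [(5 + 2 * t / 15, 1.0, 1.0) for t in range(16)]
    print(rank_candidates(query, {"LINE": line, "CIRCLE": circle}, top_k=2))
```

Normalizing first makes the match insensitive to where and how large the sign is performed; DTW then absorbs differences in signing speed.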

12:39 Posted in Cognitive Informatics

Jul 18, 2013

How to see with your ears

Via: KurzweilAI.net

A participant wearing camera glasses and listening to the soundscape (credit: Alastair Haigh/Frontiers in Psychology)

A device that trains the brain to turn sounds into images could be used as an alternative to invasive treatment for blind and partially-sighted people, researchers at the University of Bath have found.

“The vOICe” is a visual-to-auditory sensory substitution device that encodes images taken by a camera worn by the user into “soundscapes” from which experienced users can extract information about their surroundings.

It helps blind people use sounds to build an image in their minds of the things around them.
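The vOICe's encoding scans the camera image from left to right, mapping a pixel's height to pitch (higher in the image means higher-pitched) and its brightness to loudness. The sketch below reproduces that idea in plain Python. The function name, the one-second scan, the 500 to 5000 Hz range and the exponential pitch spacing are assumptions, not the device's actual settings.

```python
import math
from typing import List, Sequence


def image_to_soundscape(image: Sequence[Sequence[float]], duration_s: float = 1.0,
                        sample_rate: int = 22050, f_low: float = 500.0,
                        f_high: float = 5000.0) -> List[float]:
    """Turn a grayscale image (rows of brightness values in 0..1) into audio samples.

    Columns are played left to right; each row is a sine tone whose loudness
    is the pixel brightness. Row 0 (top of the image) gets the highest pitch.
    """
    rows, cols = len(image), len(image[0])
    samples_per_col = int(duration_s * sample_rate / cols)
    # Exponential spacing so equal steps in height sound like equal pitch steps.
    if rows > 1:
        freqs = [f_high * (f_low / f_high) ** (r / (rows - 1)) for r in range(rows)]
    else:
        freqs = [f_high]
    out: List[float] = []
    for c in range(cols):
        for n in range(samples_per_col):
            t = (c * samples_per_col + n) / sample_rate  # global time keeps phase continuous
            s = sum(image[r][c] * math.sin(2 * math.pi * freqs[r] * t) for r in range(rows))
            out.append(s / rows)  # average so the output stays within [-1, 1]
    return out
```

A bright object near the top left of the scene is therefore heard as a high-pitched sound at the start of each scan.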

A research team, led by Dr Michael Proulx, from the University’s Department of Psychology, looked at how blindfolded sighted participants would do on an eye test using the device.

Read full story

18:17 Posted in Brain training & cognitive enhancement, Neurotechnology & neuroinformatics

Advanced ‘artificial skin’ senses touch, humidity, and temperature

Artificial skin (credit: Technion)

Technion-Israel Institute of Technology scientists have discovered how to make a new kind of flexible sensor that could one day be integrated into “electronic skin” (e-skin), a covering for prosthetic limbs.

Read full story

18:14 Posted in Pervasive computing, Research tools, Wearable & mobile

US Army avatar role-play Experiment #3 now open for public registration

Military Open Simulator Enterprise Strategy (MOSES) is secure virtual world software designed to evaluate the ability of OpenSimulator to provide independent access to a persistent virtual world. MOSES is a research project of the United States Army Simulation and Training Technology Center (STTC). STTC’s Virtual World Strategic Applications team uses OpenSimulator to add capability and flexibility to virtual training scenarios.

18:12 Posted in Research tools, Telepresence & virtual presence

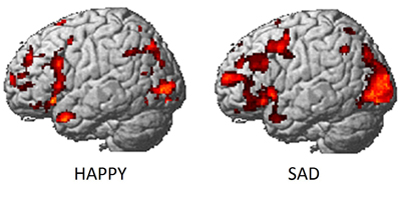

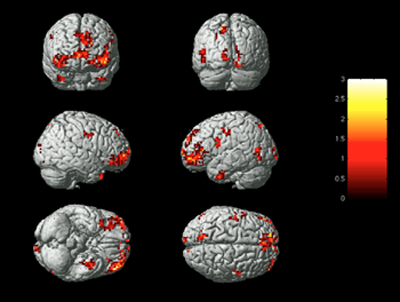

Identifying human emotions based on brain activity

For the first time, scientists at Carnegie Mellon University have identified which emotion a person is experiencing based on brain activity.

The study, published in the June 19 issue of PLOS ONE, combines functional magnetic resonance imaging (fMRI) and machine learning to measure brain signals and accurately read emotions in individuals. Led by researchers in CMU’s Dietrich College of Humanities and Social Sciences, the findings illustrate how the brain categorizes feelings, giving researchers the first reliable process to analyze emotions. Until now, research on emotions has long been stymied by the lack of reliable methods to evaluate them, mostly because people are often reluctant to honestly report their feelings. Further complicating matters, many emotional responses may not be consciously experienced.

Identifying emotions based on neural activity builds on previous discoveries by CMU’s Marcel Just and Tom M. Mitchell, which used similar techniques to create a computational model that identifies individuals’ thoughts of concrete objects, often dubbed “mind reading.”

“This research introduces a new method with potential to identify emotions without relying on people’s ability to self-report,” said Karim Kassam, assistant professor of social and decision sciences and lead author of the study. “It could be used to assess an individual’s emotional response to almost any kind of stimulus, for example, a flag, a brand name or a political candidate.”

One challenge for the research team was to find a way to repeatedly and reliably evoke different emotional states from the participants. Traditional approaches, such as showing subjects emotion-inducing film clips, would likely have been unsuccessful because the impact of film clips diminishes with repeated display. The researchers solved the problem by recruiting actors from CMU’s School of Drama.

“Our big breakthrough was my colleague Karim Kassam’s idea of testing actors, who are experienced at cycling through emotional states. We were fortunate, in that respect, that CMU has a superb drama school,” said George Loewenstein, the Herbert A. Simon University Professor of Economics and Psychology.

For the study, 10 actors were scanned at CMU’s Scientific Imaging & Brain Research Center while viewing the words of nine emotions: anger, disgust, envy, fear, happiness, lust, pride, sadness and shame. While inside the fMRI scanner, the actors were instructed to enter each of these emotional states multiple times, in random order.

Another challenge was to ensure that the technique was measuring emotions per se, and not the act of trying to induce an emotion in oneself. To meet this challenge, a second phase of the study presented participants with neutral and disgusting photos that they had not seen before. The computer model, built by statistically analyzing the fMRI activation patterns gathered for 18 emotional words, had learned the emotion patterns from self-induced emotions. It was nevertheless able to correctly identify the emotional content of the photos being viewed from the viewers’ brain activity.

To identify emotions within the brain, the researchers first used the participants’ neural activation patterns in early scans to identify the emotions experienced by the same participants in later scans. The computer model achieved a rank accuracy of 0.84. Rank accuracy refers to the percentile rank of the correct emotion in an ordered list of the computer model guesses; random guessing would result in a rank accuracy of 0.50.
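One common way to compute rank accuracy from a model's scores is a normalized rank: with nine emotions, ranking the correct one first gives 1.0, second gives 0.875 and last gives 0.0, so random guessing averages 0.50. The sketch below assumes that definition; the function name is hypothetical.

```python
from typing import Sequence


def rank_accuracy(scores: Sequence[float], correct: int) -> float:
    """Normalized rank of the correct label: 1.0 = ranked first, 0.0 = ranked last.

    `scores` holds the model's score for each candidate emotion (higher = more likely);
    `correct` is the index of the emotion actually experienced.
    """
    n = len(scores)
    # How many candidates the model preferred over the correct one (ties count in its favour).
    better = sum(1 for s in scores if s > scores[correct])
    return (n - 1 - better) / (n - 1)
```

Averaging this value over all trials gives a single figure such as the 0.84 reported above.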

Next, the team used the model trained on the self-induced emotions to guess which emotion the subjects were experiencing when they were exposed to the disgusting photographs. The computer model achieved a rank accuracy of 0.91. With nine emotions to choose from, the model listed disgust as the most likely emotion 60 percent of the time and as one of its top two guesses 80 percent of the time.

Finally, they applied machine learning analysis of neural activation patterns from all but one of the participants to predict the emotions experienced by the hold-out participant. This answers an important question: If we took a new individual, put them in the scanner and exposed them to an emotional stimulus, how accurately could we identify their emotional reaction? Here, the model achieved a rank accuracy of 0.71, once again well above the chance guessing level of 0.50.
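The leave-one-participant-out test can be sketched as follows. The study's actual classifier and fMRI features are not described here, so this uses a simple nearest-centroid classifier as a stand-in and scores each held-out trial with a normalized rank accuracy; all names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[str, str, List[float]]  # (participant id, emotion label, activation vector)


def centroids(samples: Sequence[Sample]) -> Dict[str, List[float]]:
    """Mean activation vector per emotion over the training participants."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for _, emotion, x in samples:
        if emotion not in sums:
            sums[emotion] = [0.0] * len(x)
            counts[emotion] = 0
        sums[emotion] = [a + b for a, b in zip(sums[emotion], x)]
        counts[emotion] += 1
    return {e: [v / counts[e] for v in s] for e, s in sums.items()}


def rank_accuracy_for(x: Sequence[float], label: str, cents: Dict[str, List[float]]) -> float:
    """Score each emotion by negative distance to its centroid, then normalize the rank of `label`."""
    scores = {e: -math.dist(x, c) for e, c in cents.items()}
    better = sum(1 for s in scores.values() if s > scores[label])
    return (len(scores) - 1 - better) / (len(scores) - 1)


def leave_one_participant_out(samples: Sequence[Sample]) -> Dict[str, float]:
    """Train on all but one participant, test on the one left out; repeat for everyone."""
    participants = sorted({p for p, _, _ in samples})
    results: Dict[str, float] = {}
    for held_out in participants:
        train = [s for s in samples if s[0] != held_out]
        test = [s for s in samples if s[0] == held_out]
        cents = centroids(train)
        accs = [rank_accuracy_for(x, e, cents) for _, e, x in test]
        results[held_out] = sum(accs) / len(accs)
    return results
```

Because the held-out person never contributes to the centroids, the result estimates how well the model would work for someone new entering the scanner.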

“Despite manifest differences between people’s psychology, different people tend to neurally encode emotions in remarkably similar ways,” noted Amanda Markey, a graduate student in the Department of Social and Decision Sciences.

A surprising finding from the research was that almost equivalent accuracy levels could be achieved even when the computer model made use of activation patterns in only one of a number of different subsections of the human brain.

“This suggests that emotion signatures aren’t limited to specific brain regions, such as the amygdala, but produce characteristic patterns throughout a number of brain regions,” said Vladimir Cherkassky, senior research programmer in the Psychology Department.

The research team also found that while on average the model ranked the correct emotion highest among its guesses, it was best at identifying happiness and least accurate in identifying envy. It rarely confused positive and negative emotions, suggesting that these have distinct neural signatures. And it was least likely to misidentify lust as any other emotion, suggesting that lust produces a pattern of neural activity that is distinct from all other emotional experiences.

Just, the D.O. Hebb University Professor of Psychology, director of the university’s Center for Cognitive Brain Imaging and leading neuroscientist, explained, “We found that three main organizing factors underpinned the emotion neural signatures, namely the positive or negative valence of the emotion, its intensity — mild or strong, and its sociality — involvement or non-involvement of another person. This is how emotions are organized in the brain.”

In the future, the researchers plan to apply this new identification method to a number of challenging problems in emotion research, including identifying emotions that individuals are actively attempting to suppress and multiple emotions experienced simultaneously, such as the combination of joy and envy one might experience upon hearing about a friend’s good fortune.

12:30 Posted in Emotional computing, Research tools

Jun 05, 2013

Omni brings full-body VR gaming to Kickstarter

I am really excited about this new interest in Virtual Reality, since I have been a fan of this technology for quite some time.

The latest news is that the virtual reality gaming device Omni launched a funding campaign on Kickstarter on Tuesday and, within a few hours, more than doubled its goal of $150,000!

Used in tandem with the Oculus Rift and motion controller Razer Hydra, it potentially offers an unprecedented degree of immersion.

It's also good news that VR hardware has been dropping dramatically in price over the last couple of years. I am thinking of the incredible value for money of the Oculus Rift (dev kit: $300; consumer version: unknown, rumored to be under $300).

I had the privilege of trying out the Oculus (thanks to my friend Giuseppe, who got one in advance by supporting the project on Kickstarter) and I must say, it's just AMAZING.

The last time I had this feeling was when I tested a CAVE system for the first time, during my internship at the CCVR. Now, 10 years after my first encounter with immersive VR, I am just as excited as I was then, and I look forward to trying all the novelties that are coming very soon.

21:16

Help Dravet's Syndrome children

Dravet's syndrome is a rare genetic dysfunction of the brain with onset during the first year in an otherwise healthy infant. Despite the devastating nature of this condition, its rarity and relatively new distinction as its own syndrome mean that there is little formal research available.

I was introduced to this disease by a friend of mine and wanted to help in some way. If you also want to contribute, you can get in touch with the Dravet Syndrome Foundation or, if you are in Italy, with the Italian one.

Your donation will help fight this disease. I hope you can help; even a visit to these associations will do.

20:21

Jun 03, 2013

Positivetechnology.it

15:43

May 29, 2013

Symposium on Positive Technology @ Third World Congress on Positive Psychology

We hope you will join us in Los Angeles, California, on Saturday, June 29th, to attend the Symposium on Positive Technology.

The special session features interventions by key PT researchers and is a great opportunity to meet, share ideas and build the future of this exciting research field!

Download the conference program here (PDF)

18:51 Posted in Positive Technology events

May 26, 2013

Cross-Brain Neurofeedback: Scientific Concept and Experimental Platform

PLoS One. 2013;8(5):e64590

Authors: Duan L, Liu WJ, Dai RN, Li R, Lu CM, Huang YX, Zhu CZ

Abstract. The present study described a new type of multi-person neurofeedback with the neural synchronization between two participants as the direct regulating target, termed as "cross-brain neurofeedback." As a first step to implement this concept, an experimental platform was built on the basis of functional near-infrared spectroscopy, and was validated with a two-person neurofeedback experiment. This novel concept as well as the experimental platform established a framework for investigation of the relationship between multiple participants' cross-brain neural synchronization and their social behaviors, which could provide new insight into the neural substrate of human social interactions.
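The abstract does not specify how cross-brain synchronization is computed. As a hypothetical sketch, one simple index is the Pearson correlation between the two participants' fNIRS signals over a sliding window, recomputed as new samples arrive and shown to both people as the feedback signal. The window and step sizes, and the function names, are assumptions.

```python
import math
from typing import List, Sequence


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two equal-length windows (0.0 if either is flat)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / math.sqrt(va * vb)


def synchrony_feedback(sig_a: Sequence[float], sig_b: Sequence[float],
                       window: int, step: int) -> List[float]:
    """One synchrony value per window: the quantity both participants would try to raise."""
    out: List[float] = []
    for start in range(0, len(sig_a) - window + 1, step):
        out.append(pearson(sig_a[start:start + window], sig_b[start:start + window]))
    return out
```

The key difference from ordinary neurofeedback is that the feedback value depends on both brains at once, so neither participant can drive it alone.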

19:36 Posted in Biofeedback & neurofeedback, Cognitive Informatics, Creativity and computers

A Hybrid Brain-Computer Interface-Based Mail Client

Comput Math Methods Med. 2013;2013:750934

Authors: Yu T, Li Y, Long J, Li F

Abstract. Brain-computer interface-based communication plays an important role in brain-computer interface (BCI) applications; electronic mail is one of the most common communication tools. In this study, we propose a hybrid BCI-based mail client that implements electronic mail communication by means of real-time classification of multimodal features extracted from scalp electroencephalography (EEG). With this BCI mail client, users can receive, read, write, and attach files to their mail. Using a BCI mouse that utilizes hybrid brain signals, that is, motor imagery and P300 potential, the user can select and activate the function keys and links on the mail client graphical user interface (GUI). An adaptive P300 speller is employed for text input. The system has been tested with 6 subjects, and the experimental results validate the efficacy of the proposed method.
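For text input, a classic P300 speller flashes the rows and columns of a character matrix and picks the row and column whose flashes evoked the strongest P300 response. The sketch below assumes a 6x6 matrix and per-flash classifier scores summed across repetitions. It does not model the adaptive element or the motor-imagery mouse described in the abstract, and every name is hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical 6x6 speller matrix ("_" stands for space).
MATRIX = [
    "ABCDEF",
    "GHIJKL",
    "MNOPQR",
    "STUVWX",
    "YZ1234",
    "56789_",
]

Flash = Tuple[str, int, float]  # ("row" or "col", index, P300 classifier score for that flash)


def select_character(flashes: Sequence[Flash], matrix: List[str] = MATRIX) -> str:
    """Sum the classifier scores per row and per column; the target is at their intersection."""
    row_scores = [0.0] * len(matrix)
    col_scores = [0.0] * len(matrix[0])
    for kind, index, score in flashes:
        if kind == "row":
            row_scores[index] += score
        else:
            col_scores[index] += score
    r = max(range(len(row_scores)), key=row_scores.__getitem__)
    c = max(range(len(col_scores)), key=col_scores.__getitem__)
    return matrix[r][c]
```

Summing over repeated flashes averages out EEG noise; an adaptive speller would typically stop repeating once one row and one column clearly dominate.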

19:33 Posted in Brain-computer interface

Using Music as a Signal for Biofeedback

Int J Psychophysiol. 2013 Apr 23;

Authors: Bergstrom I, Seinfeld S, Arroyo-Palacios J, Slater M, Sanchez-Vives MV

Abstract. Studies on the potential benefits of conveying biofeedback stimulus using a musical signal have appeared in recent years with the intent of harnessing the strong effects that music listening may have on subjects. While results are encouraging, the fundamental question has yet to be addressed, of how combined music and biofeedback compares to the already established use of either of these elements separately. This experiment, involving young adults (N=24), compared the effectiveness at modulating participants' states of physiological arousal of each of the following conditions: A) listening to pre-recorded music, B) sonification biofeedback of the heart rate, and C) an algorithmically modulated musical feedback signal conveying the subject's heart rate. Our hypothesis was that each of the conditions (A), (B) and (C) would differ from the other two in the extent to which it enables participants to increase and decrease their state of physiological arousal, with (C) being more effective than (B), and both more than (A). Several physiological measures and qualitative responses were recorded and analyzed. Results show that using musical biofeedback allowed participants to modulate their state of physiological arousal at least equally well as sonification biofeedback, and much better than just listening to music, as reflected in their heart rate measurements, controlling for respiration-rate. Our findings indicate that the known effects of music in modulating arousal can therefore be beneficially harnessed when designing a biofeedback protocol.
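The abstract does not describe the mappings used in conditions (B) and (C). Purely as an illustration, the sketch below contrasts a sonification that maps heart rate to the pitch of a tone with a musical mapping that maps it to the tempo of a piece. The heart-rate, pitch and tempo ranges, and the function names, are assumptions.

```python
def _unit(hr_bpm: float, hr_min: float, hr_max: float) -> float:
    """Clamp heart rate to [hr_min, hr_max] and rescale it to 0..1."""
    hr = max(hr_min, min(hr_max, hr_bpm))
    return (hr - hr_min) / (hr_max - hr_min)


def hr_to_pitch_hz(hr_bpm: float, hr_min: float = 50, hr_max: float = 120,
                   f_min: float = 220.0, f_max: float = 880.0) -> float:
    """Sonification: faster heart -> higher tone (exponential, so equal HR steps sound equal)."""
    return f_min * (f_max / f_min) ** _unit(hr_bpm, hr_min, hr_max)


def hr_to_music_tempo(hr_bpm: float, hr_min: float = 50, hr_max: float = 120,
                      tempo_min: float = 60.0, tempo_max: float = 140.0) -> float:
    """Musical feedback: faster heart -> faster playback tempo (beats per minute)."""
    return tempo_min + _unit(hr_bpm, hr_min, hr_max) * (tempo_max - tempo_min)
```

Either output would be updated continuously as new heart-rate readings arrive, so participants hear their arousal rising or falling.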

19:32 Posted in Biofeedback & neurofeedback

Application of alpha/theta neurofeedback and heart rate variability training to young contemporary dancers: State anxiety and creativity.

Int J Psychophysiol. 2013 May 15;

Authors: Gruzelier JH, Thompson T, Redding E, Brandt R, Steffert T

Abstract. As one in a series on the impact of EEG-neurofeedback in the performing arts, we set out to replicate a previous dance study in which alpha/theta (A/T) neurofeedback and heart rate variability (HRV) biofeedback enhanced performance in competitive ballroom dancers compared with controls. First year contemporary dance conservatoire students were randomised to the same two psychophysiological interventions or a choreology instruction comparison group or a no-training control group. While there was demonstrable neurofeedback learning, there was no impact of the three interventions on dance performance as assessed by four experts. However, HRV training reduced anxiety and the reduction correlated with improved technique and artistry in performance; the anxiety scale items focussed on autonomic functions, especially cardiovascular activity. In line with the putative impact of hypnogogic training on creativity A/T training increased cognitive creativity with the test of unusual uses, but not insight problems. Methodological and theoretical implications are considered.
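Alpha/theta training feeds back the relative power of the EEG theta and alpha bands. A minimal sketch of one such feedback quantity, the theta/alpha power ratio, computed with a plain discrete Fourier transform. The 4 to 8 Hz and 8 to 12 Hz band edges are conventional values assumed here, not taken from the study, and the function names are hypothetical.

```python
import math
from typing import Sequence


def band_power(signal: Sequence[float], fs: float, f_lo: float, f_hi: float) -> float:
    """Sum of squared DFT magnitudes for frequency bins in [f_lo, f_hi)."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    power = 0.0
    for k in range(1, n // 2 + 1):
        f = k * fs / n
        if f_lo <= f < f_hi:
            re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
            im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
            power += re * re + im * im
    return power


def theta_alpha_ratio(signal: Sequence[float], fs: float) -> float:
    """Ratio above 1 means theta dominates alpha (the 'crossover' state the training rewards)."""
    theta = band_power(signal, fs, 4.0, 8.0)
    alpha = band_power(signal, fs, 8.0, 12.0)
    return theta / alpha if alpha > 0 else float("inf")
```

In practice an FFT library and a windowed spectral estimate would replace the naive DFT; the plain version is used here only to keep the sketch dependency-free.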

19:30 Posted in Biofeedback & neurofeedback

May 24, 2013

New LinkedIn group on Positive Technology

Are you interested in Positive Technology? Then come and join us on LinkedIn!

Our new group is the place to share expertise and brilliant ideas on positive applications of technology!

20:15 Posted in Positive Technology events

May 06, 2013

An app for the mind

With the rapid adoption of mobile technologies and the proliferation of smartphones, new opportunities are emerging for the delivery of mental health services. Indeed, psychologists are starting to realize this potential: a recent survey by Luxton and colleagues (2011) identified over 200 smartphone apps focused on behavioral health, covering a wide range of disorders, including developmental, cognitive and substance-related disorders, as well as psychotic and mood disorders. These applications are used in behavioral health for several purposes, the most common of which are health education, assessment, homework and monitoring of treatment progress.

For example, T2 MoodTracker is an application that allows users to self-monitor, track and reference their emotional experience over a period of days, weeks and months using a visual analogue rating scale. Using this application, patients can self-monitor emotional experiences associated with common deployment-related behavioral health issues such as post-traumatic stress, brain injury, life stress, depression and anxiety. Self-monitoring results can be used as a self-help tool or shared with a therapist or health care professional, providing a record of the patient’s emotional experience over a selected time frame.
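As a hypothetical illustration of how visual analogue ratings can be aggregated for review with a therapist, the sketch below stores daily 0 to 100 ratings per scale and averages them by ISO week. The `Rating` data model and `weekly_means` function are assumptions, not T2 MoodTracker's actual format.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import Dict, Iterable, List, Tuple


@dataclass
class Rating:
    day: date
    scale: str   # e.g. "anxiety", "depression", "life stress"
    value: int   # position on the visual analogue scale, 0-100

    def __post_init__(self) -> None:
        if not 0 <= self.value <= 100:
            raise ValueError("VAS rating must be between 0 and 100")


def weekly_means(ratings: Iterable[Rating]) -> Dict[Tuple[str, int, int], float]:
    """Average rating per (scale, ISO year, ISO week), in chronological order per scale."""
    buckets: Dict[Tuple[str, int, int], List[int]] = defaultdict(list)
    for r in ratings:
        year, week, _ = r.day.isocalendar()
        buckets[(r.scale, year, week)].append(r.value)
    return {k: mean(v) for k, v in sorted(buckets.items())}
```

Weekly averages smooth out day-to-day noise, which makes trends easier to discuss in a session than raw daily entries.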

Measuring objective correlatives of subjectively-reported emotional states is an important concern in research and clinical applications. Physiological and physical activity information provide mental health professionals with integrative measures, which can be used to improve understanding of patients’ self-reported feelings and emotions.

The combined use of wearable biosensors and smartphones offers unprecedented opportunities to collect, process and transmit real-time body signals to a remote therapist. This approach also allows patients to collect real-time information about their own health conditions and to identify specific trends. Insights gained through this feedback can empower users to engage in managing their own health status, minimizing the need for interaction with other health care actors. One such tool is MyExperience, an open-source mobile platform that combines sensing and self-report to collect both quantitative and qualitative data on user experience and activity.
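Combining sensing with self-report often means prompting the user only when the sensor data indicate something worth asking about. The sketch below is a hypothetical context-triggered prompt: it fires when heart rate stays above a threshold for a sustained period, then waits out a cooldown. It is not MyExperience's configuration format or API, and the class name and thresholds are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ContextTrigger:
    threshold_bpm: float = 100.0   # heart rate considered "elevated"
    sustain_s: int = 60            # how long it must stay elevated before prompting
    cooldown_s: int = 1800         # minimum time between two prompts
    _above_since: Optional[int] = None
    _last_prompt: Optional[int] = None
    prompts: List[int] = field(default_factory=list)

    def on_sample(self, t_s: int, hr_bpm: float) -> bool:
        """Feed one heart-rate sample; return True if a self-report prompt should be shown now."""
        if hr_bpm < self.threshold_bpm:
            self._above_since = None   # episode ended; reset the timer
            return False
        if self._above_since is None:
            self._above_since = t_s
        sustained = t_s - self._above_since >= self.sustain_s
        cooled = self._last_prompt is None or t_s - self._last_prompt >= self.cooldown_s
        if sustained and cooled:
            self._last_prompt = t_s
            self.prompts.append(t_s)
            return True
        return False
```

Pairing the prompt with the sensor episode that triggered it lets a researcher link what the user reports to what the body was doing at that moment.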

Other applications are designed to empower users with information for making better decisions, preventing life-style related conditions and preserving/enhancing cognitive performance. For example, BeWell monitors different user activities (sleep, physical activity, social interaction) and provides feedback to promote healthier lifestyle decisions.

Besides applications in mental health and wellbeing, smartphones are increasingly used in psychological research. The potential of this approach has recently been discussed by Geoffrey Miller in a review entitled “The Smartphone Psychology Manifesto”. According to Miller, smartphones can be used to collect large quantities of ecologically valid data more easily and quickly than with other available research methodologies. Since the smartphone is becoming one of the most pervasive devices in our lives, it provides access to domains of behavioral data not previously available without either constant observation or reliance on self-reports alone.

For example, the INTERSTRESS project, which I am coordinating, developed PsychLog, a psycho-physiological mobile data collection platform for mental health research. This free, open-source experience sampling platform for Windows Mobile allows researchers to collect self-reported psychological data as well as ECG data via a Bluetooth ECG sensor unit worn by the user. Although PsychLog provides fewer features than more advanced experience sampling platforms, it can easily be configured even by researchers with no programming skills.
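Experience sampling platforms typically prompt participants at random moments during the day. A minimal sketch of such a signal-contingent schedule, assuming a fixed waking window and a minimum gap between prompts; PsychLog's own scheduling options are not described here, and the function name is hypothetical.

```python
import random
from datetime import datetime, timedelta
from typing import List, Optional


def esm_schedule(day_start: datetime, day_end: datetime, n_prompts: int,
                 min_gap_min: int, seed: Optional[int] = None) -> List[datetime]:
    """Draw n_prompts random prompt times in [day_start, day_end) at least min_gap_min apart.

    Uses rejection sampling: draw random minutes, keep the draw only if every gap is wide enough.
    """
    rng = random.Random(seed)
    window = int((day_end - day_start).total_seconds() // 60)
    for _ in range(1000):
        minutes = sorted(rng.sample(range(window), n_prompts))
        if all(b - a >= min_gap_min for a, b in zip(minutes, minutes[1:])):
            return [day_start + timedelta(minutes=m) for m in minutes]
    raise ValueError("could not satisfy the minimum gap; relax the constraints")
```

Randomizing the times keeps participants from anticipating prompts, while the minimum gap avoids clusters that would oversample one part of the day.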

In summary, the use of smartphones can have a significant impact on both psychological research and practice. However, there is still limited evidence of the effectiveness of this approach. As with other mHealth applications, few controlled trials have tested the potential of mobile technology interventions in improving mental health care delivery processes. Therefore, further research is needed to determine the real cost-effectiveness of mobile cybertherapy applications.

16:18 Posted in Cybertherapy, Self-Tracking, Wearable & mobile

Apr 05, 2013

Humanoid Robots Being Studied for Autism Therapy

Via MedGadget

Researchers at Vanderbilt University are studying the potential benefits of using human-looking robots as tools to help kids with autism spectrum disorder (ASD) improve their communication skills. The programmable NAO robot used in the study was developed by Aldebaran Robotics out of Paris, France, and offers the ability to be part of a larger, smarter system.

Though a child might feel that the pink-eyed humanoid is an autonomous being, the NAO robot the team is using is actually connected to computers and external cameras that track the child’s movements. Using the newly developed ARIA (Adaptive Robot-Mediated Intervention Architecture) protocol, they found that children paid more attention to NAO and followed along in exercises almost as well as they did with a human adult therapist.

15:21 Posted in AI & robotics, Cybertherapy

Mar 11, 2013

Is virtual reality always an effective stressors for exposure treatments? Some insights from a controlled trial

BMC psychiatry, 13(1) p. 52, 2013

Federica Pallavicini, Pietro Cipresso, Simona Raspelli, Alessandra Grassi, Silvia Serino, Cinzia Vigna, Stefano Triberti, Marco Villamira, Andrea Gaggioli, Giuseppe Riva

Abstract. Several research studies investigating the effectiveness of the different treatments have demonstrated that exposure-based therapies are more suitable and effective than others for the treatment of anxiety disorders. Traditionally, exposure may be achieved in two manners: in vivo, with direct contact to the stimulus, or by imagery, in the person’s imagination. However, despite its effectiveness, both types of exposure present some limitations that supported the use of Virtual Reality (VR). But is VR always an effective stressor? Are the technological breakdowns that may appear during such an experience a possible risk for its effectiveness? (...)

Full paper available here (open access)

14:11 Posted in Cybertherapy, Research tools, Virtual worlds

Mar 03, 2013

Permanently implanted neuromuscular electrodes allow natural control of a robotic prosthesis

Source: Chalmers University of Technology

“The new technology is a major breakthrough that has many advantages over current technology, which provides very limited functionality to patients with missing limbs,” says Rickard Brånemark.

Presently, robotic prostheses rely on electrodes placed over the skin to pick up the muscles’ electrical activity and drive a few actions of the prosthesis. The problem with this approach is that normally only two functions are regained out of the tens of different movements an able body is capable of. By using implanted electrodes, more signals can be retrieved, and therefore more movements can be controlled. Furthermore, it is also possible to provide the patient with natural perception, or “feeling”, through neural stimulation.
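A simple way to see this limit: in a conventional threshold-based myoelectric scheme, each electrode site drives one function when its signal crosses a threshold, so two usable sites give two functions. The sketch below illustrates that scheme under assumed channel names and thresholds; it is not the control method of the Chalmers system, and the function names are hypothetical.

```python
import math
from typing import Dict, Optional, Sequence


def rms(window: Sequence[float]) -> float:
    """Root-mean-square amplitude of one window of EMG samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def decode(channels: Dict[str, Sequence[float]], functions: Dict[str, str],
           threshold: float) -> Optional[str]:
    """Return the function mapped to the most active channel, or None if all are below threshold."""
    levels = {name: rms(w) for name, w in channels.items()}
    best = max(levels, key=levels.get)
    if levels[best] < threshold:
        return None  # rest: no command sent to the prosthesis
    return functions[best]
```

With two surface sites the mapping might be {"flexor": "close hand", "extensor": "open hand"}; each additional independent signal, such as those from implanted electrodes, adds another entry and another controllable movement.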

“We believe that implanted electrodes, together with a long-term stable human-machine interface provided by the osseointegrated implant, is a breakthrough that will pave the way for a new era in limb replacement,” says Rickard Brånemark.

Read full story

14:39 Posted in AI & robotics, Neurotechnology & neuroinformatics