Jan 02, 2017

Babies exposed to stimulation get brain boost

Source: The Norwegian University of Science and Technology (NTNU)

Many new parents still think that babies should develop at their own pace, and that they shouldn't be challenged to do things that they're not yet ready for. Infants should learn to roll around under their own power, without any "helpful" nudges, and they shouldn't support their weight before they can stand or walk on their own. They mustn't be potty trained before they are ready for it.

According to neuroscientist Audrey van der Meer, a professor at the Norwegian University of Science and Technology (NTNU), this mindset can be traced back to the early 1900s, when professionals were convinced that our genes determine who we are and that child development occurred independently of the stimulation a baby is exposed to. They believed it was harmful to hasten development, because development would and should happen naturally.

Early stimulation in the form of baby gym activities and early potty training plays a central role in Asia and Africa. The old developmental theory also contrasts with modern brain research showing that early stimulation contributes to gains in brain development, even in the wee ones among us.

Using the body and senses

Van der Meer is a professor of neuropsychology and has used advanced EEG technology for many years to study the brain activity of hundreds of babies.

The results show that the neurons in the brains of young children quickly increase in both number and specialization as the baby learns new skills and becomes more mobile. Neurons in very young children form up to a thousand new connections per second.

Van der Meer's research also shows that the development of our brain, sensory perception and motor skills happens in sync. She believes that even the smallest babies must be challenged and stimulated at their level from birth onward. They need to engage their entire body and senses by exploring their world and different materials, both indoors and out and in all types of weather. She emphasizes that the experiences must be self-produced; it is not enough for children merely to be carried or pushed in a stroller.

Unused brain synapses disappear

"Many people believe that children up to three years old only need cuddles and nappy changes, but studies show that rats raised in plain cages have less dendritic branching in the brain than rats raised in an environment with climbing and hiding places and tunnels. Research also shows that children born into cultures where early stimulation is considered important develop earlier than Western children do," van der Meer says.

She adds that the brains of young children are very malleable, and can therefore adapt to what is happening around them. If the new synapses that are formed in the brain are not being used, they disappear as the child grows up and the brain loses some of its plasticity.

Van der Meer notes that Chinese babies can hear the difference between the R and L sounds when they are four months old, but not when they are older. Since Chinese children do not need to distinguish between these sounds to learn their mother tongue, the brain synapses that carry this knowledge disappear when they are not used.

Loses the ability to distinguish between sounds

Babies actually manage to distinguish between the sounds of any language in the world when they are four months old, but by the time they are eight months old they have lost this ability, according to van der Meer.

In the 1970s, it was believed that children could only learn one language properly. Foreign parents were advised not to speak their native language to their children, because it could impede the child's language development. Today we think completely differently, and there are examples of children who speak three, four or five languages fluently without suffering language confusion or delays.

Brain research suggests that in these cases the native-language area of the brain is activated whichever of their languages the children speak. If we study a foreign language after the age of seven, other areas of the brain are used when we speak it, explains van der Meer.

She adds that it is important that children learn languages by interacting with real people.

"Research shows that children don't learn language by watching someone talk on a screen; it has to be real people who expose them to the language," says van der Meer.

Early intervention with the very young

Since a lot is happening in the brain during the first years of life, van der Meer says that it is easier to promote learning and prevent problems when children are very young.

The term "early intervention" keeps popping up in discussions of kindergartens and schools, teaching and learning. Early intervention is about helping children as early as possible to ensure that as many children as possible succeed in their education and on into adulthood, precisely because the brain's ability to change under the influence of environmental conditions is greatest early in life.

"When I talk about early intervention, I'm not thinking of six-year-olds, but of even younger children, from newborns to age three. Today, 98 per cent of Norwegian children attend kindergarten, so the quality of the time that children spend there is especially important. I believe that kindergarten should be more than just a holding place; it should be a learning arena, and by that I mean that play is learning," says van der Meer.

Too many untrained staff

She adds that a two-year-old can easily learn to read or swim, as long as the child has access to letters or water. However, she does not want kindergarten to be a preschool, but rather a place where children can have varied experiences through play.

"This applies to both healthy children and those with different challenges. When it comes to children with motor challenges or children with impaired vision and hearing, we have to really work to bring the world to them," says van der Meer.

"One-year-olds can't be responsible for their own learning, so it's up to the adults to see to it. Today untrained temporary staff tend to be assigned to the infant and toddler rooms, because it's 'less dangerous' with the youngest ones since they only need cuddles and nappy changes. I believe that all children deserve teachers who understand how the brains of young children work. Today, Norway is the only one of 25 surveyed OECD countries where kindergarten teachers do not constitute 50 per cent of kindergarten staffing," she said.

More children with special needs

Lars Adde is a specialist in paediatric physical therapy at St. Olavs Hospital and a researcher at NTNU's Department of Laboratory Medicine, Children's and Women's Health. He works with young children who have special needs, in both his clinical practice and research.

He believes it is important that all children are stimulated and get to explore the world, but this is especially important for children who have special challenges. He points out that a growing proportion of the children now being born in Norway have special needs.

"This is due to the rapid development of medical technology, which enables us to save many more children, like extremely premature babies and infants who get cancer. These children would have died 50 years ago; today they survive, but often with a number of subsequent difficulties," says Adde.

New knowledge offers better treatment

Adde says that the new understanding of brain development that has been established since the 1970s has given these children far better treatment and care options.

For example, the knowledge that some synapses in the brain are strengthened while others disappear has led to the understanding that we have to work specifically at what we want to be good at, such as walking. According to the old mindset, any general movement would provide good general motor function.

Babies born very prematurely at St. Olavs Hospital are followed up in their early years by an interdisciplinary team at the hospital and by a municipal physiotherapist. Staff at the child's kindergarten receive training in exactly how this child should be stimulated and challenged at the appropriate level. The follow-up means that developmental delays are detected quickly, so that measures can be implemented early, while the child's brain is still very plastic.

A child may, for example, have a small brain injury that causes him to use one arm less than the other. We now know that the brain connections governing that arm grow weaker when it is used less, which reinforces the reduced function.

"Parents may then be asked to put a sock on the 'good' hand when their child plays with his hands. Then the child is stimulated and the brain is challenged to start using the other arm," says Adde.

Shouldn't always rush development

Adde stresses that it is not always advisable to speed up the development of children with special needs who initially struggle with their motor skills.

A one-year-old learning to walk first has to learn to find her balance. If the child is helped into a standing position, she will eventually learn to stand, but before she has learned how to sit down again. If she then loses her balance, she'll fall like a stiff cane, which can be both scary and counterproductive.

In that situation, "we might instead ask the parents to help their child up into a kneeling position while it holds onto something. Then the child will learn to stand up on its own. If the child falls, it will bend its legs and tumble onto its bum. Healthy children figure this out on their own, but children with special challenges don't necessarily do so," says Adde.

Story Source:

Materials provided by The Norwegian University of Science and Technology (NTNU).

22:37 Posted in Brain stimulation, Brain training & cognitive enhancement

Mind-controlled toys: The next generation of Christmas presents?

Source: University of Warwick

The next generation of toys could be controlled by the power of the mind, thanks to research by the University of Warwick.

A team led by Professor Christopher James, Director of Warwick Engineering in Biomedicine at the School of Engineering, has developed technology that allows electronic devices to be activated by the electrical impulses of brain waves, connecting our thoughts to computerised systems. Some of the most popular toys on children's lists to Santa, such as remote-controlled cars and helicopters, toy robots and Scalextric racing sets, could all be controlled via a headset, using 'the power of thought'.

Control could be based on levels of concentration, for example thinking of your favourite colour or stroking your dog. Instead of a hand-held controller, a headset is used to create a brain-computer interface: a communication link between the human brain and the computerised device.

Sensors in the headset measure the electrical impulses from the brain at various frequencies, and under special circumstances each frequency can be controlled to some extent. This activity is then processed by a computer, amplified and fed into the electrical circuit of the electronic toy. Professor James comments on the future potential for this technology: "Whilst brain-computer interfaces already exist (there are already a few gaming headsets on the market), their functionality has been quite limited.

"New research is making headsets read cleaner and stronger signals than ever before, which means stronger links to the toy, game or action, making it a very immersive experience. The exciting bit is what comes next: how long before we start unlocking the front door or answering the phone through brain-computer interfaces?"
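The pipeline described above (measure activity in frequency bands, process it, drive the toy) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Warwick team's method: a single EEG channel sampled at 256 Hz, relative beta-band power (13-30 Hz) used as a stand-in "concentration" index, and a hypothetical toy that accepts a speed command from 0 to 100. The function names are illustrative only.

```python
import math

# Assumed acquisition setup (not from the source): one EEG channel at 256 Hz.
FS = 256


def band_power(samples, fs, f_lo, f_hi):
    """Power in [f_lo, f_hi) Hz, summed over DFT bins (naive O(n^2) DFT)."""
    n = len(samples)
    power = 0.0
    for k in range(1, n // 2):  # positive-frequency bins, DC excluded
        freq = k * fs / n
        if not (f_lo <= freq < f_hi):
            continue
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(samples))
        im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(samples))
        power += re * re + im * im
    return power


def toy_speed(samples, fs=FS, max_speed=100):
    """Map relative beta power to a speed command for a hypothetical toy.

    Assumption: beta (13-30 Hz) power relative to 1-40 Hz power is treated
    as a 'concentration' index; real headsets use their own, often
    proprietary, metrics.
    """
    beta = band_power(samples, fs, 13, 30)
    total = band_power(samples, fs, 1, 40)
    if total == 0:
        return 0  # flat signal: nothing to act on
    return round(max_speed * beta / total)


# Usage: one second of a synthetic 20 Hz (beta) oscillation drives the toy at full speed.
signal = [math.sin(2 * math.pi * 20 * t / FS) for t in range(FS)]
print(toy_speed(signal))  # 100
```

A real system would also need filtering, artefact rejection and per-user calibration; the naive DFT is used here only to keep the example dependency-free.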

May 26, 2016

From User Experience (UX) to Transformative User Experience (T-UX)

In 1999, Joseph Pine and James Gilmore wrote a seminal book titled “The Experience Economy” (Harvard Business School Press, Boston, MA) that theorized the shift from a service-based economy to an experience-based economy.

According to these authors, in the new experience economy the goal of a purchase is no longer to own a product (be it a good or a service), but to use it in order to enjoy a compelling experience. An experience is thus a whole new type of offering: in contrast to commodities, goods and services, it is designed to be as personal and memorable as possible. Just as in a theatrical performance, companies stage meaningful events to engage customers in a memorable and personal way, offering activities that provide engaging and rewarding experiences.

Indeed, over the past ten years the concept of experience has become increasingly central to several fields, including tourism, architecture and, perhaps most relevant for this column, human-computer interaction, with the rise of “User Experience” (UX).

The concept of UX was introduced by Donald Norman in a 1995 article published in the CHI proceedings (D. Norman, J. Miller, A. Henderson: What You See, Some of What's in the Future, And How We Go About Doing It: HI at Apple Computer. Proceedings of CHI 1995, Denver, Colorado, USA). Norman argued that focusing exclusively on usability attributes (e.g., ease of use, efficiency, effectiveness) when designing an interactive product is not enough; one should take into account the user's whole experience with the system, including emotional and contextual needs. Since then, the UX concept has become increasingly important in HCI. As McCarthy and Wright emphasized in their book “Technology as Experience” (MIT Press, 2004):

“In order to do justice to the wide range of influences that technology has in our lives, we should try to interpret the relationship between people and technology in terms of the felt life and the felt or emotional quality of action and interaction.” (p. 12).

However, according to Pine and Gilmore, experience may not be the last step of what they call the “Progression of Economic Value”. Speculating further into the future, they identified the “Transformation Economy” as the likely next phase. In their view, while experiences are essentially memorable events that stimulate the sensorial and emotional levels, transformations go much further: they are the result of a series of experiences staged by companies to guide customers in learning, taking action and eventually achieving their aspirations and goals.

In Pine and Gilmore's terms, an aspirant is the individual who seeks advice for personal change (e.g., a better figure, a new career, and so forth), while the provider of this change (a dietitian, a university) is an elicitor. The elicitor guides the aspirant through a series of experiences designed with certain purposes and goals. According to Pine and Gilmore, the main difference between an experience and a transformation is that the latter occurs when an experience is customized:

“When you customize an experience to make it just right for an individual - providing exactly what he needs right now - you cannot help changing that individual. When you customize an experience, you automatically turn it into a transformation, which companies create on top of experiences (recall that phrase: “a life-transforming experience”), just as they create experiences on top of services and so forth” (p. 244).

A further key difference between experiences and transformations concerns their effects: because an experience is inherently personal, no two people can have the same one. Likewise, no individual can undergo the same transformation twice: the second time it is attempted, the individual is no longer the same person (pp. 254-255).

But what will be the impact of this upcoming “transformation economy” on how people relate to technology? If in the experience economy the buzzword is “User Experience”, in the next stage it might be “User Transformation”.

Indeed, we can already see some initial signs of this shift. For example, Fitbit and similar self-tracking gadgets are starting to offer personalized advice to foster enduring changes in users' lifestyles. Another example comes from the fields of ambient intelligence and domotics, where there is an increasing focus on designing systems that learn from the user's behaviour (e.g., by tracking the movements of an elderly person in their home) to provide context-aware adaptive services (e.g., sending an alert when the user is at risk of falling).

But the most important ICT step towards the transformation economy could well come with the introduction of next-generation immersive virtual reality systems. Since these new systems are based on mobile devices (an example is the recent partnership between Oculus and Samsung), they can deliver VR experiences that incorporate information on the user's external and internal context (e.g., time, location, temperature, mood) by using the sensors embedded in the mobile phone.

By personalizing the immersive experience with context-based information, it might be possible to induce higher levels of involvement and presence in the virtual environment. In the case of cyber-therapeutic applications, this could translate into more effective, transformative virtual healing experiences.

Furthermore, the emergence of "symbiotic technologies", such as neuroprosthetic devices and neuro-biofeedback, is enabling a direct connection between the computer and the brain. Increasingly, these neural interfaces are moving from the biomedical domain to become consumer products. But unlike existing digital experiential products, symbiotic technologies have the potential to transform basic human experiences more radically.

Brain-computer interfaces, immersive virtual reality and augmented reality and their various combinations will allow users to create “personalized alterations” of experience. Just as nowadays we can download and install a number of “plug-ins”, i.e. apps to personalize our experience with hardware and software products, so very soon we may download and install new “extensions of the self”, or “experiential plug-ins” which will provide us with a number of options for altering/replacing/simulating our sensorial, emotional and cognitive processes.

Such mediated recombinations of human experience will result from the application of existing neurotechnologies in completely new domains. Although virtual reality and brain-computer interfaces were originally developed for specific domains (e.g., military simulation, neurorehabilitation), today their use has been extended to other fields, ranging from entertainment to education.

In the field of biology, Stephen Jay Gould and Elizabeth Vrba (Paleobiology, 8, 4-15, 1982) defined “exaptation” as the process by which a feature comes to serve a function for which it was not built by natural selection. Likewise, the exaptation of neurotechnologies to the digital consumer market may lead to the rise of a novel “neuro-experience economy”, in which technology-mediated transformation of experience is the main product.

Just as a Genetically Modified Organism (GMO) is an organism whose genetic material is altered using genetic-engineering techniques, so we could define a Technologically-Modified Experience (ETM) as a re-engineered experience resulting from the artificial manipulation of the neurobiological bases of sensorial, affective and cognitive processes.

Clearly, the emergence of the transformative neuro-experience economy will take not weeks or months but years. It will be some time before people find brain-computer devices on the shelves of electronics stores: most of these tools are at best in the pre-commercial phase, and some exist only in laboratories.

Nevertheless, the mere possibility that such a scenario will sooner or later come to pass raises important questions that should be addressed before symbiotic technologies enter our lives: does the technological alteration of human experience threaten the autonomy of individuals, or the authenticity of their lives? How can we help individuals decide which transformations are good or bad for them?

Addressing these questions will require the collaboration of many disciplines, including philosophy, computer ethics and, of course, cyberpsychology.

Oct 06, 2014

First direct brain-to-brain communication between human subjects

Via KurzweilAI.net

An international team of neuroscientists and robotics engineers have demonstrated the first direct remote brain-to-brain communication between two humans located 5,000 miles away from each other and communicating via the Internet, as reported in a paper recently published in PLOS ONE (open access).

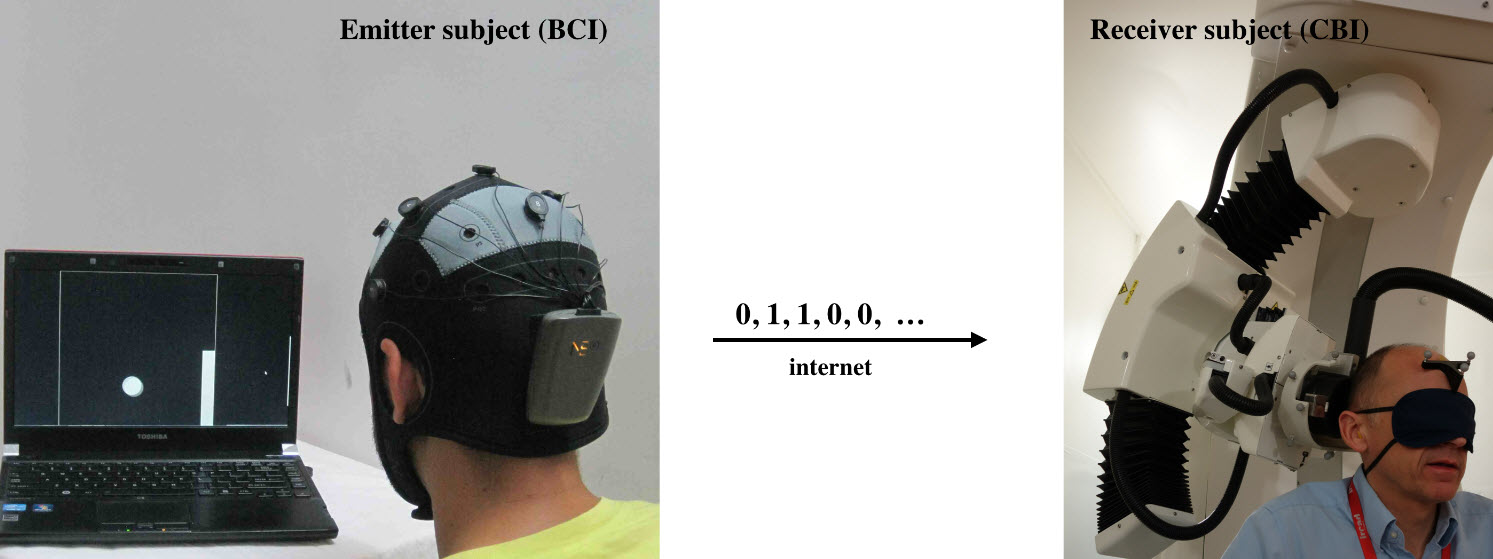

Emitter and receiver subjects with non-invasive devices supporting, respectively, a brain-computer interface (BCI), based on EEG changes, driven by motor imagery (left) and a computer-brain interface (CBI) based on the reception of phosphenes elicited by neuro-navigated TMS (right) (credit: Carles Grau et al./PLoS ONE)

In India, researchers encoded two words (“hola” and “ciao”) as binary strings and presented them as a series of cues on a computer monitor. They recorded the subject’s EEG signals as the subject was instructed to think about moving his feet (binary 0) or hands (binary 1). They then sent the recorded series of binary values in an email message to researchers in France, 5,000 miles away.

There, the binary strings were converted into a series of transcranial magnetic stimulation (TMS) pulses applied to a hotspot location in the right visual occipital cortex that either produced a phosphene (perceived flash of light) or not.
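The emitter/receiver protocol can be illustrated with a short Python sketch. The motor-imagery mapping (feet = 0, hands = 1) comes from the description above; everything else is an assumption for illustration: a hypothetical 5-bit code giving each letter's position in the alphabet, and a receiver convention in which a 1 is delivered with the coil orientation that evokes a phosphene and a 0 with one that does not. The paper's actual encoding and error handling may differ.

```python
# Hypothetical 5-bit code: each letter becomes its alphabet index (a=0 ... z=25).
BITS_PER_LETTER = 5


def encode_word(word):
    """Turn a word into a flat list of bits, five per letter."""
    bits = []
    for ch in word.lower():
        idx = ord(ch) - ord("a")
        if not 0 <= idx < 26:
            raise ValueError(f"unsupported character: {ch!r}")
        bits.extend(int(b) for b in format(idx, f"0{BITS_PER_LETTER}b"))
    return bits


def emitter_cues(bits):
    """Motor-imagery cue for each bit (from the source: feet = 0, hands = 1)."""
    return ["hands" if b == 1 else "feet" for b in bits]


def receiver_pulses(bits):
    """Assumed convention: True = phosphene-evoking coil orientation, False = one that does not."""
    return [b == 1 for b in bits]


def decode_perceptions(phosphenes):
    """Rebuild the word from the receiver's reports of seeing (True) or not seeing (False) a flash."""
    bits = [1 if seen else 0 for seen in phosphenes]
    letters = []
    for i in range(0, len(bits), BITS_PER_LETTER):
        chunk = "".join(str(b) for b in bits[i:i + BITS_PER_LETTER])
        letters.append(chr(ord("a") + int(chunk, 2)))
    return "".join(letters)


# Usage: in the experiment the bits travelled by e-mail; here they are just a list.
bits = encode_word("hola")
print(emitter_cues(bits[:5]))  # ['feet', 'feet', 'hands', 'hands', 'hands'] for "h"
print(decode_perceptions(receiver_pulses(bits)))  # hola
```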

“We wanted to find out if one could communicate directly between two people by reading out the brain activity from one person and injecting brain activity into the second person, and do so across great physical distances by leveraging existing communication pathways,” explains coauthor Alvaro Pascual-Leone, MD, PhD, Director of the Berenson-Allen Center for Noninvasive Brain Stimulation at Beth Israel Deaconess Medical Center (BIDMC) and Professor of Neurology at Harvard Medical School.

A team of researchers from Starlab Barcelona, Spain, and Axilum Robotics, Strasbourg, France, conducted the experiment. A second, similar experiment was conducted between individuals in Spain and France.

“We believe these experiments represent an important first step in exploring the feasibility of complementing or bypassing traditional language-based or other motor/PNS mediated means in interpersonal communication,” the researchers say in the paper.

“Although certainly limited in nature (e.g., the bit rates achieved in our experiments were modest even by current BCI (brain-computer interface) standards, mostly due to the dynamics of the precise CBI (computer-brain interface) implementation), these initial results suggest new research directions, including the non-invasive direct transmission of emotions and feelings or the possibility of sense synthesis in humans, that is, the direct interface of arbitrary sensors with the human brain using brain stimulation, as previously demonstrated in animals with invasive methods.

Brain-to-brain (B2B) communication system overview. On the left, the BCI subsystem is shown schematically, including electrodes over the motor cortex and the EEG amplifier/transmitter wireless box in the cap. Motor imagery of the feet codes the bit value 0, of the hands codes bit value 1. On the right, the CBI system is illustrated, highlighting the role of coil orientation for encoding the two bit values. Communication between the BCI and CBI components is mediated by the Internet. (Credit: Carles Grau et al./PLoS ONE)

“The proposed technology could be extended to support a bi-directional dialogue between two or more mind/brains (namely, by the integration of EEG and TMS systems in each subject). In addition, we speculate that future research could explore the use of closed mind-loops in which information associated to voluntary activity from a brain area or network is captured and, after adequate external processing, used to control other brain elements in the same subject. This approach could lead to conscious synthetically mediated modulation of phenomena best detected subjectively by the subject, including emotions, pain and psychotic, depressive or obsessive-compulsive thoughts.

“Finally, we anticipate that computers in the not-so-distant future will interact directly with the human brain in a fluent manner, supporting both computer- and brain-to-brain communication routinely. The widespread use of human brain-to-brain technologically mediated communication will create novel possibilities for human interrelation with broad social implications that will require new ethical and legislative responses.”

This work was partly supported by the EU FP7 FET Open HIVE project, the Starlab Kolmogorov project, and the Neurology Department of the Hospital de Bellvitge.

Aug 31, 2014

Improving memory with transcranial magnetic stimulation

A new Northwestern Medicine study reports that stimulating a particular region of the brain with transcranial magnetic stimulation (TMS), which uses magnetic pulses to induce electrical currents non-invasively, improves memory.

Feb 09, 2014

Effects of the addition of transcranial direct current stimulation to virtual reality therapy after stroke: A pilot randomized controlled trial


NeuroRehabilitation. 2014 Jan 28;

Authors: Viana RT, Laurentino GE, Souza RJ, Fonseca JB, Silva Filho EM, Dias SN, Teixeira-Salmela LF, Monte-Silva KK

Abstract. BACKGROUND: Upper limb (UL) impairment is the most common disabling deficit following a stroke. Previous studies have suggested that transcranial direct current stimulation (tDCS) enhances the effect of conventional therapies.

OBJECTIVE: This pilot double-blind randomized controlled trial aimed to determine whether tDCS combined with Wii virtual reality therapy (VRT) would be superior to Wii therapy alone in improving upper limb function and quality of life in individuals with chronic stroke.

METHODS: Twenty participants were randomly assigned either to an experimental group that received VRT and tDCS, or to a control group that received VRT and sham tDCS. The therapy was delivered over 15 sessions, each with 13 minutes of active or sham anodal tDCS and one hour of virtual reality therapy. Outcomes were assessed using the Fugl-Meyer scale, the Wolf motor function test, the modified Ashworth scale (MAS), grip strength, and the stroke specific quality of life scale (SSQOL). Minimal clinically important differences (MCID) were taken into account when assessing the outcome data.

RESULTS: Both groups demonstrated gains in all evaluated areas except the SSQOL-UL domain. Between-group differences were observed only in wrist spasticity, where more than 50% of participants in the experimental group achieved the MCID.
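To make the MCID result concrete, the proportion of participants reaching the MCID can be computed from each person's change score. The sketch below uses invented scores for a hypothetical group of ten and an assumed MCID of one point; the abstract reports neither individual data nor the threshold used. Because lower modified Ashworth scores mean less spasticity, improvement on that scale is measured as a decrease.

```python
def proportion_reaching_mcid(baseline, post, mcid, lower_is_better=False):
    """Fraction of participants whose improvement is at least the MCID."""
    if len(baseline) != len(post) or not baseline:
        raise ValueError("need matching, non-empty baseline and post-treatment scores")
    hits = 0
    for before, after in zip(baseline, post):
        # For scales where lower scores are better (e.g. MAS), improvement is a decrease.
        change = before - after if lower_is_better else after - before
        if change >= mcid:
            hits += 1
    return hits / len(baseline)


# Invented wrist MAS scores for a hypothetical group of ten (not the trial's data).
baseline = [2, 3, 2, 1, 3, 2, 2, 1, 3, 2]
post = [1, 2, 2, 1, 2, 1, 2, 0, 2, 2]
share = proportion_reaching_mcid(baseline, post, mcid=1, lower_is_better=True)
print(f"{share:.0%} reached the assumed MCID")  # 60% reached the assumed MCID
```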

CONCLUSIONS: These findings suggest that tDCS combined with VRT should be investigated and clarified further.

22:21 Posted in Brain stimulation, Cybertherapy, Virtual worlds