Jun 21, 2016

New book on Human Computer Confluence - FREE PDF!

Two pieces of good news for Positive Technology followers.

1) Our new book on Human Computer Confluence is out!

2) It can be downloaded for free here

Human-computer confluence refers to an invisible, implicit, embodied or even implanted interaction between humans and system components. New classes of user interfaces are emerging that make use of several sensors and are able to adapt their physical properties to the current situational context of users.

A key aspect of human-computer confluence is its potential for transforming human experience in the sense of bending, breaking and blending the barriers between the real, the virtual and the augmented, to allow users to experience their body and their world in new ways. Research on Presence, Embodiment and Brain-Computer Interface is already exploring these boundaries and asking questions such as: Can we seamlessly move between the virtual and the real? Can we assimilate fundamentally new senses through confluence?

The aim of this book is to explore the boundaries and intersections of the multidisciplinary field of HCC and discuss its potential applications in different domains, including healthcare, education, training and even arts.

DOWNLOAD THE FULL BOOK HERE AS OPEN ACCESS

Please cite as follows:

Andrea Gaggioli, Alois Ferscha, Giuseppe Riva, Stephen Dunne, Isabelle Viaud-Delmon (2016). Human computer confluence: transforming human experience through symbiotic technologies. Warsaw: De Gruyter. ISBN 9783110471120.

09:53 Posted in AI & robotics, Augmented/mixed reality, Biofeedback & neurofeedback, Blue sky, Brain training & cognitive enhancement, Brain-computer interface, Cognitive Informatics, Cyberart, Cybertherapy, Emotional computing, Enactive interfaces, Future interfaces, ICT and complexity, Neurotechnology & neuroinformatics, Positive Technology events, Research tools, Self-Tracking, Serious games, Technology & spirituality, Telepresence & virtual presence, Virtual worlds, Wearable & mobile | Permalink

Apr 27, 2016

Predictive Technologies: Can Smart Tools Augment the Brain's Predictive Abilities?

Mar 19, 2015

Ultrasound treats Alzheimer’s disease, restoring memory in mice

Scanning ultrasound treatment of Alzheimer’s disease in mouse model (credit: Gerhard Leinenga and Jürgen Götz/Science Translational Medicine)

University of Queensland researchers have discovered that non-invasive scanning ultrasound (SUS) technology* can be used to treat Alzheimer’s disease in mice and restore memory by breaking apart the neurotoxic Amyloid-β (Aβ) peptide plaques that result in memory loss and cognitive decline.

The method can temporarily open the blood-brain barrier (BBB), activating microglial cells that digest and remove the amyloid plaques that destroy brain synapses.

Treated AD mice displayed improved performance on three memory tasks: the Y-maze, the novel object recognition test, and the active place avoidance task.

The next step is to scale the research in higher animal models ahead of human clinical trials, which are at least two years away. In their paper in the journal Science Translational Medicine, the researchers note possible hurdles. For example, the human brain is much larger, and it’s also thicker than that of a mouse, which may require stronger energy that could cause tissue damage. And it will be necessary to avoid excessive immune activation.

The researchers also plan to see whether this method clears toxic protein aggregates in other neurodegenerative diseases and restores executive functions, including decision-making and motor control. It could also be used as a vehicle for drug or gene delivery, since the BBB remains the major obstacle for the uptake by brain tissue of therapeutic agents.

Previous research in treating Alzheimer’s with ultrasound used magnetic resonance imaging (MRI) to focus the ultrasonic energy to open the BBB for more effective delivery of drugs to the brain.

23:27 Posted in Neurotechnology & neuroinformatics | Permalink | Comments (0)

Jan 25, 2015

Blind mom is able to see her newborn baby son for the very first time using high-tech glasses

Kathy Beitz, 29, is legally blind - she lost her vision as a child and, for a long time, adapted to living in a world she couldn't see (Kathy has Stargardt disease, a condition that causes macular degeneration). Technology called eSight glasses allowed Kathy to see her son on the day he was born. The glasses cost $15,000 and work by capturing real-time video and enhancing it.

20:12 Posted in Brain training & cognitive enhancement, Neurotechnology & neuroinformatics | Permalink | Comments (0)

Dec 16, 2014

Neuroprosthetics

Neuroprosthetics is a relatively new discipline at the boundaries of neuroscience and biomedical engineering, which aims at developing implantable devices to restore neural function. The most popular and clinically successful neuroprosthesis to date is the cochlear implant, a device that can restore hearing by directly stimulating the human auditory nerve, bypassing damaged hair cells in the cochlea.

Visual prostheses, on the other hand, are still in a preliminary phase of development, although substantial progress has been made in the last few years. These implantable devices are designed to micro-electrically stimulate nerves in the visual system, based on an image from an external camera. These impulses are then propagated to the visual cortex, which is able to process the information and generate a “pixelated” image. The resulting impression does not have the same quality as natural vision, but it is still useful for performing basic perceptual and motor tasks, such as identifying an object or navigating a room. An example of this approach is the Boston Retinal Implant Project, a large collaborative effort that includes, among others, the Harvard Medical School and MIT.

Another area of neuroprosthetics is concerned with the development of implantable devices that help patients with conditions such as spinal cord injury, limb loss, stroke and neuromuscular disorders improve their ability to interact with their environment and communicate. These motor neuroprosthetics are also known as “brain computer interfaces” (BCI), which in essence are devices that decode brain signals representing motor intentions and convert this information into overt device control. This process allows the patient to perform different motor tasks, from writing text on a virtual keyboard to driving a wheelchair or controlling a prosthetic limb. An impressive evolution of motor neuroprosthetics is the combination of BCI and robotics. For example, Leigh R. Hochberg and colleagues (Nature 485, 372–375; 2012) reported that, using a robotic arm connected to a neural interface called “BrainGate”, two people with long-standing paralysis could control reaching and grasping actions, such as drinking from a bottle.

Cognitive neuroprosthetics is a further research direction of neuroprosthetics. A cognitive prosthesis is an implantable device which aims at restoring cognitive function to brain-injured individuals by performing the function of the damaged tissue. One of the world’s most advanced efforts in this area is being led by Theodore Berger, a biomedical engineer and neuroscientist at the University of Southern California in Los Angeles. Berger and his colleagues are attempting to develop a microchip-based neural prosthesis for the hippocampus, a region of the brain responsible for long-term memory (IEEE Trans Neural Syst Rehabil Eng 20/2, 198–211; 2012). More specifically, the team is developing a biomimetic model of hippocampal dynamics, which should serve as a neural prosthesis by allowing bi-directional communication with the other neural tissue that normally provides the inputs to, and receives the outputs from, a damaged hippocampal area.

22:44 Posted in Neurotechnology & neuroinformatics | Permalink | Comments (0)

Oct 06, 2014

Bionic Vision Australia’s Bionic Eye Gives New Sight to People Blinded by Retinitis Pigmentosa

Via Medgadget

Bionic Vision Australia, a collaboration of researchers working on a bionic eye, has announced that its prototype implant has completed a two-year trial in patients with advanced retinitis pigmentosa. Three patients with profound vision loss received 24-channel suprachoroidal electrode implants that caused no noticeable serious side effects. Moreover, though this was not formally part of the study, the patients were able to see more light and to distinguish shapes that were invisible to them prior to implantation. The newly gained vision allowed them to improve how they navigated around objects and how well they were able to spot items on a tabletop.

The next step is to try out the latest 44-channel device in a clinical trial slated for next year and then move on to a 98-channel system that is currently in development.

“This study is critically important to the continuation of our research efforts and the results exceeded all our expectations,” Professor Mark Hargreaves, Chair of the BVA board, said in a statement. “We have demonstrated clearly that our suprachoroidal implants are safe to insert surgically and cause no adverse events once in place. Significantly, we have also been able to observe that our device prototype was able to evoke meaningful visual perception in patients with profound visual loss.”

Here’s one of the study participants using the bionic eye:

First direct brain-to-brain communication between human subjects

Via KurzweilAI.net

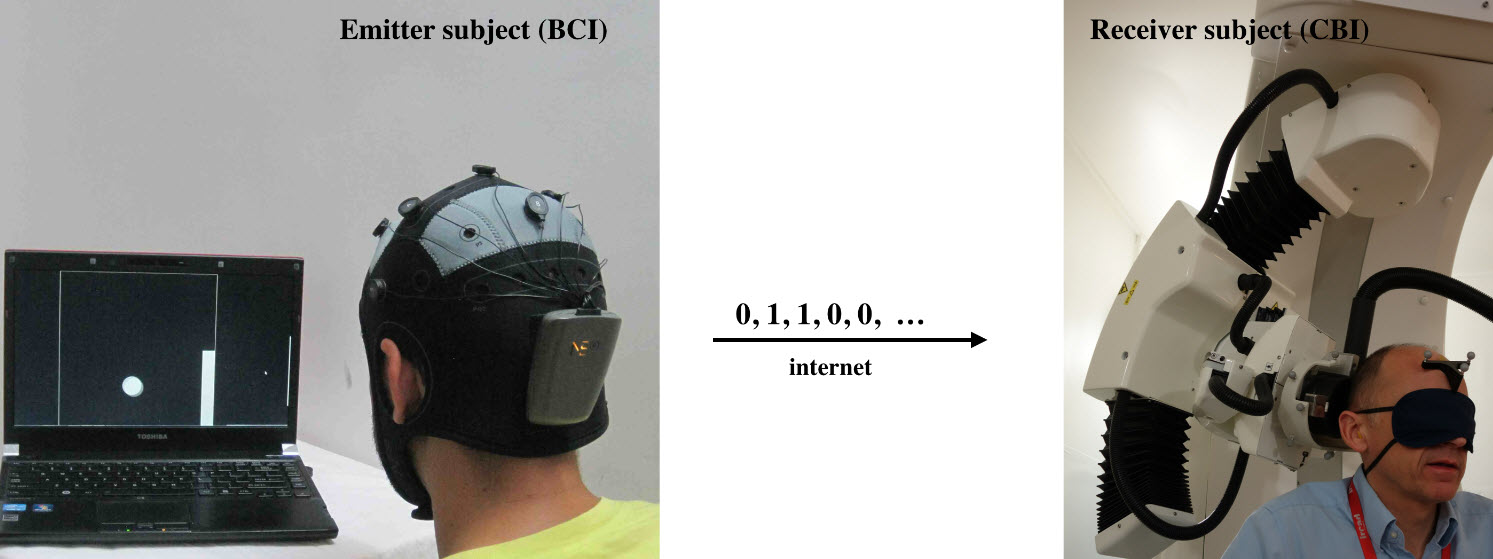

An international team of neuroscientists and robotics engineers has demonstrated the first direct remote brain-to-brain communication between two humans located 5,000 miles away from each other and communicating via the Internet, as reported in a paper recently published in PLOS ONE (open access).

Emitter and receiver subjects with non-invasive devices supporting, respectively, a brain-computer interface (BCI), based on EEG changes, driven by motor imagery (left) and a computer-brain interface (CBI) based on the reception of phosphenes elicited by neuro-navigated TMS (right) (credit: Carles Grau et al./PLoS ONE)

In India, researchers encoded two words (“hola” and “ciao”) as binary strings and presented them as a series of cues on a computer monitor. They recorded the subject’s EEG signals as the subject was instructed to think about moving his feet (binary 0) or hands (binary 1). They then sent the recorded series of binary values in an email message to researchers in France, 5,000 miles away.

There, the binary strings were converted into a series of transcranial magnetic stimulation (TMS) pulses applied to a hotspot location in the right visual occipital cortex that either produced a phosphene (perceived flash of light) or not.
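The encode-and-relay step described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the post does not say which cipher turned the words into bits, so plain 8-bit ASCII is used here as a stand-in, and the rule that a reported phosphene decodes as 1 is likewise assumed. Only the feet = 0 / hands = 1 mapping comes from the text. All function names are hypothetical.

```python
# Minimal sketch of the word -> bits -> cues pipeline.
# ASSUMPTION: 8-bit ASCII encoding; the post does not state the actual cipher.

# Emitter side (from the post): motor imagery of feet codes 0, hands codes 1.
IMAGERY_CUE = {0: "feet", 1: "hands"}


def word_to_bits(word: str) -> list[int]:
    """Turn a word into a flat list of bits, most significant bit first."""
    bits = []
    for byte in word.encode("ascii"):
        bits.extend((byte >> shift) & 1 for shift in range(7, -1, -1))
    return bits


def bits_to_word(bits: list[int]) -> str:
    """Inverse of word_to_bits: regroup bits into bytes and decode them."""
    chars = []
    for start in range(0, len(bits), 8):
        value = 0
        for bit in bits[start:start + 8]:
            value = (value << 1) | bit
        chars.append(chr(value))
    return "".join(chars)


def imagery_cues(bits: list[int]) -> list[str]:
    """Cues shown to the emitter: which body part to imagine moving."""
    return [IMAGERY_CUE[bit] for bit in bits]


def decode_phosphene_reports(reports: list[bool]) -> list[int]:
    """Receiver side. ASSUMPTION: a perceived phosphene is read as bit 1."""
    return [1 if seen else 0 for seen in reports]


if __name__ == "__main__":
    bits = word_to_bits("hola")
    print(imagery_cues(bits)[:8])
    reports = [bool(bit) for bit in bits]  # an error-free receiver
    print(bits_to_word(decode_phosphene_reports(reports)))
```

In the real experiment each bit is noisy on both sides, so some redundancy or error checking would be needed on top of a scheme like this.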

“We wanted to find out if one could communicate directly between two people by reading out the brain activity from one person and injecting brain activity into the second person, and do so across great physical distances by leveraging existing communication pathways,” explains coauthor Alvaro Pascual-Leone, MD, PhD, Director of the Berenson-Allen Center for Noninvasive Brain Stimulation at Beth Israel Deaconess Medical Center (BIDMC) and Professor of Neurology at Harvard Medical School.

A team of researchers from Starlab Barcelona, Spain and Axilum Robotics, Strasbourg, France conducted the experiment. A second similar experiment was conducted between individuals in Spain and France.

“We believe these experiments represent an important first step in exploring the feasibility of complementing or bypassing traditional language-based or other motor/PNS mediated means in interpersonal communication,” the researchers say in the paper.

“Although certainly limited in nature (e.g., the bit rates achieved in our experiments were modest even by current BCI (brain-computer interface) standards, mostly due to the dynamics of the precise CBI (computer-brain interface) implementation), these initial results suggest new research directions, including the non-invasive direct transmission of emotions and feelings or the possibility of sense synthesis in humans — that is, the direct interface of arbitrary sensors with the human brain using brain stimulation, as previously demonstrated in animals with invasive methods.

Brain-to-brain (B2B) communication system overview. On the left, the BCI subsystem is shown schematically, including electrodes over the motor cortex and the EEG amplifier/transmitter wireless box in the cap. Motor imagery of the feet codes the bit value 0, of the hands codes bit value 1. On the right, the CBI system is illustrated, highlighting the role of coil orientation for encoding the two bit values. Communication between the BCI and CBI components is mediated by the Internet. (Credit: Carles Grau et al./PLoS ONE)

“The proposed technology could be extended to support a bi-directional dialogue between two or more mind/brains (namely, by the integration of EEG and TMS systems in each subject). In addition, we speculate that future research could explore the use of closed mind-loops in which information associated to voluntary activity from a brain area or network is captured and, after adequate external processing, used to control other brain elements in the same subject. This approach could lead to conscious synthetically mediated modulation of phenomena best detected subjectively by the subject, including emotions, pain and psychotic, depressive or obsessive-compulsive thoughts.

“Finally, we anticipate that computers in the not-so-distant future will interact directly with the human brain in a fluent manner, supporting both computer- and brain-to-brain communication routinely. The widespread use of human brain-to-brain technologically mediated communication will create novel possibilities for human interrelation with broad social implications that will require new ethical and legislative responses.”

This work was partly supported by the EU FP7 FET Open HIVE project, the Starlab Kolmogorov project, and the Neurology Department of the Hospital de Bellvitge.

Google Glass can now display captions for hard-of-hearing users

Georgia Institute of Technology researchers have created a speech-to-text Android app for Google Glass that displays captions for hard-of-hearing persons when someone is talking to them in person.

“This system allows wearers like me to focus on the speaker’s lips and facial gestures,” said School of Interactive Computing Professor Jim Foley.

“If hard-of-hearing people understand the speech, the conversation can continue immediately without waiting for the caption. However, if I miss a word, I can glance at the transcription, get the word or two I need and get back into the conversation.”

Captioning on Glass displays captions for the hard-of-hearing (credit: Georgia Tech)

The “Captioning on Glass” app is now available to install from MyGlass. More information here.

Foley and the students are working with the Association of Late Deafened Adults in Atlanta to improve the program. An iPhone app is planned.

Aug 31, 2014

Improving memory with transcranial magnetic stimulation

A new Northwestern Medicine study reports that stimulating a particular region of the brain with Transcranial Magnetic Stimulation, a non-invasive technique that delivers electrical current using magnetic pulses, improves memory.

Jul 29, 2014

Real-time functional MRI neurofeedback: a tool for psychiatry

Real-time functional MRI neurofeedback: a tool for psychiatry.

Curr Opin Psychiatry. 2014 Jul 14;

Authors: Kim S, Birbaumer N

Abstract. PURPOSE OF REVIEW: The aim of this review is to provide a critical overview of recent research in the field of neuroscientific and clinical application of real-time functional MRI neurofeedback (rtfMRI-nf).

RECENT FINDINGS: RtfMRI-nf allows self-regulating activity in circumscribed brain areas and brain systems. Furthermore, the learned regulation of brain activity has an influence on specific behaviors organized by the regulated brain regions. Patients with mental disorders show abnormal activity in certain regions, and simultaneous control of these regions using rtfMRI-nf may affect the symptoms of related behavioral disorders.

SUMMARY: The promising results in clinical application indicate that rtfMRI-nf and other metabolic neurofeedback, such as near-infrared spectroscopy, might become a potential therapeutic tool. Further research is still required to examine whether rtfMRI-nf is a useful tool for psychiatry because there is still a lack of knowledge about the neural function of certain brain systems and about neuronal markers for specific mental illnesses.

22:47 Posted in Biofeedback & neurofeedback, Neurotechnology & neuroinformatics | Permalink | Comments (0)

Combining Google Glass with consumer oriented BCI headsets

MindRDR connects Google Glass with a device to monitor brain activity, allowing users to take pictures and socialise them on Twitter or Facebook.

Once a user has decided to share an image, we analyse their brain data and provide an evaluation of their ability to control the interface with their mind. This information is attached to every shared image.

The current version of MindRDR uses commercially available brain monitor Neurosky MindWave Mobile to extract core metrics from the mind.

22:38 Posted in Neurotechnology & neuroinformatics, Wearable & mobile | Permalink | Comments (0)

Apr 15, 2014

A Hybrid Brain Computer Interface System Based on the Neurophysiological Protocol and Brain-actuated Switch for Wheelchair Control

A Hybrid Brain Computer Interface System Based on the Neurophysiological Protocol and Brain-actuated Switch for Wheelchair Control.

J Neurosci Methods. 2014 Apr 5;

Authors: Cao L, Li J, Ji H, Jiang C

BACKGROUND: Brain Computer Interfaces (BCIs) are developed to translate brain waves into machine instructions for external devices control. Recently, hybrid BCI systems are proposed for the multi-degree control of a real wheelchair to improve the systematical efficiency of traditional BCIs. However, it is difficult for existing hybrid BCIs to implement the multi-dimensional control in one command cycle.

NEW METHOD: This paper proposes a novel hybrid BCI system that combines motor imagery (MI)-based bio-signals and steady-state visual evoked potentials (SSVEPs) to control the speed and direction of a real wheelchair synchronously. Furthermore, a hybrid modalities-based switch is firstly designed to turn on/off the control system of the wheelchair.

RESULTS: Two experiments were performed to assess the proposed BCI system. One was implemented for training and the other one conducted a wheelchair control task in the real environment. All subjects completed these tasks successfully and no collisions occurred in the real wheelchair control experiment.

COMPARISON WITH EXISTING METHOD(S): The protocol of our BCI gave much more control commands than those of previous MI and SSVEP-based BCIs. Comparing with other BCI wheelchair systems, the superiority reflected by the index of path length optimality ratio validated the high efficiency of our control strategy.

CONCLUSIONS: The results validated the efficiency of our hybrid BCI system to control the direction and speed of a real wheelchair as well as the reliability of hybrid signals-based switch control.

23:03 Posted in Brain-computer interface, Neurotechnology & neuroinformatics | Permalink | Comments (0)

Mar 02, 2014

3D Thought controlled environment via Interaxon

In this demo video, artist Alex McLeod shows an environment he designed for Interaxon to use at CES in 2011 interaxon.ca/CES#.

The glasses display the scene in 3D, and attached sensors read the user's brain states, which control elements of the scene.

3D Thought controlled environment via Interaxon from Alex McLeod on Vimeo.

Feb 11, 2014

Neurocam

Via KurzweilAI.net

Keio University scientists have developed a “neurocam” — a wearable camera system that detects emotions, based on an analysis of the user’s brainwaves.

The hardware is a combination of Neurosky’s MindWave Mobile and a customized brainwave sensor.

The algorithm is based on measures of “interest” and “like” developed by Professor Mitsukura and the neurowear team.

The user’s interest is quantified on a scale of 0 to 100. When the interest value exceeds 60, the camera automatically records a five-second clip of the scene, tagged with timestamp and location. The clips can be replayed later and shared socially on Facebook.
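The recording rule is simple enough to sketch. The snippet below is a hypothetical illustration, not the neurowear team's algorithm: only the 0 to 100 scale, the threshold of 60 and the five-second clip length come from the post. The sample format, the `Clip` type and the choice not to start a new clip while one is still recording are assumptions.

```python
from dataclasses import dataclass

INTEREST_THRESHOLD = 60  # from the post: record when interest exceeds 60
CLIP_SECONDS = 5.0       # from the post: five-second clips


@dataclass
class Clip:
    start: float     # timestamp (seconds) at which recording begins
    duration: float  # clip length in seconds
    interest: int    # interest value that triggered the clip


def clips_to_record(samples, threshold=INTEREST_THRESHOLD, clip_seconds=CLIP_SECONDS):
    """Decide which clips to record from (timestamp, interest) samples.

    ASSUMPTION: samples arrive in time order, and no new clip is started
    while a previous one is still being recorded.
    """
    clips = []
    busy_until = float("-inf")
    for timestamp, interest in samples:
        if not 0 <= interest <= 100:
            raise ValueError(f"interest must be within 0..100, got {interest}")
        if interest > threshold and timestamp >= busy_until:
            clips.append(Clip(timestamp, clip_seconds, interest))
            busy_until = timestamp + clip_seconds
    return clips


if __name__ == "__main__":
    stream = [(0.0, 40), (1.0, 60), (2.0, 75), (3.0, 90), (8.0, 61)]
    for clip in clips_to_record(stream):
        print(clip)
```

Note that a value of exactly 60 does not trigger a recording here, since the post says the value must exceed 60.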

The researchers plan to make the device smaller, more comfortable, and fashionable to wear.

19:31 Posted in Emotional computing, Neurotechnology & neuroinformatics, Wearable & mobile | Permalink | Comments (0)

Feb 02, 2014

A low-cost sonification system to assist the blind

Via KurzweilAI.net

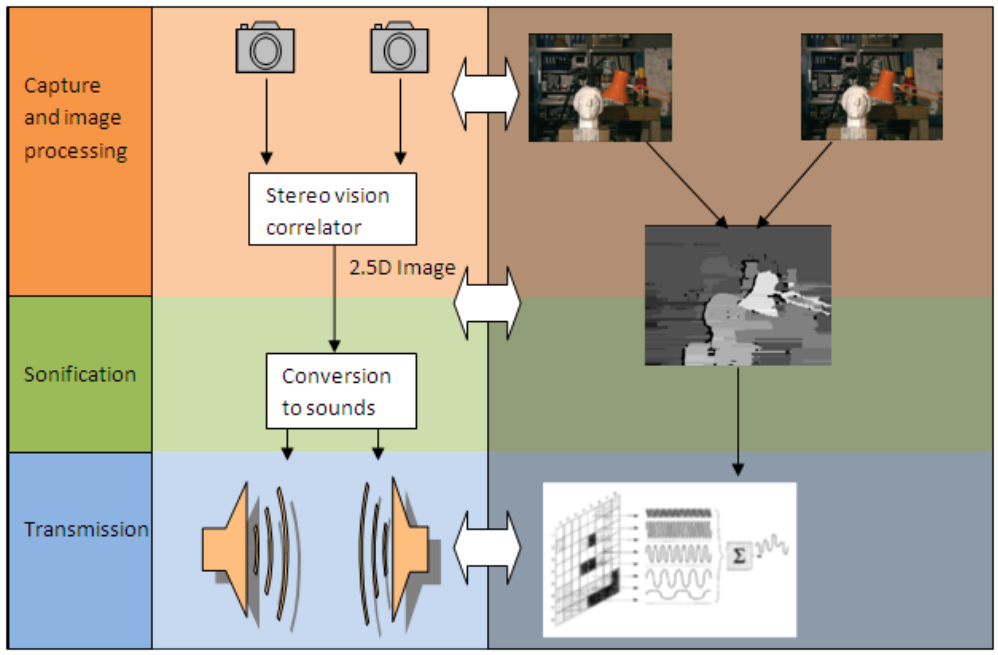

An improved assistive technology system for the blind that uses sonification (visualization using sounds) has been developed by Universidad Carlos III de Madrid (UC3M) researchers, with the goal of replacing costly, bulky current systems.

Called Assistive Technology for Autonomous Displacement (ATAD), the system includes a stereo vision processor that measures the difference between images captured by two cameras placed slightly apart (for image depth data) and calculates the distance to each point in the scene.

Then it transmits the information to the user by means of a sound code that gives information regarding the position and distance to the different obstacles, using a small audio stereo amplifier and bone-conduction headphones.
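For readers curious how two cameras yield a distance, here is a minimal sketch of the textbook pinhole stereo relation, depth = focal length × baseline / disparity, followed by a toy mapping from position and distance to a stereo sound cue. None of this is taken from the ATAD system itself: the function names, the 5 m range and the pan/loudness mapping are all assumptions made for illustration.

```python
def depth_from_disparity(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Distance to a scene point from the pixel shift between the two views.

    Standard pinhole stereo relation: Z = f * B / d.
    A disparity of zero (or less) means the point is effectively at infinity.
    """
    if disparity_px <= 0:
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


def sound_cue(x_px: float, image_width_px: int, depth_m: float, max_range_m: float = 5.0):
    """Map an obstacle to a (pan, loudness) pair.

    ASSUMPTION: horizontal position becomes stereo pan (-1 left, +1 right)
    and nearer obstacles sound louder (0 silent, 1 loudest). The real
    system's sound code is not described in the post.
    """
    pan = 2.0 * x_px / (image_width_px - 1) - 1.0
    loudness = max(0.0, 1.0 - depth_m / max_range_m)
    return pan, loudness


if __name__ == "__main__":
    # Hypothetical camera: 700 px focal length, cameras 0.5 m apart.
    distance = depth_from_disparity(disparity_px=35, focal_length_px=700, baseline_m=0.5)
    print(distance, sound_cue(x_px=0, image_width_px=641, depth_m=2.5))
```

The key property is that disparity shrinks as distance grows, so far-away obstacles are measured less precisely than near ones.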

19:48 Posted in Neurotechnology & neuroinformatics, Wearable & mobile | Permalink | Comments (0)

Jan 25, 2014

Chris Eliasmith – How to Build a Brain

Via Futuristic news

He’s the creator of “Spaun” the world’s largest brain simulation. Can he really make headway into mimicking the human brain?

Chris Eliasmith has cognitive flexibility on the brain. How do people manage to walk, chew gum and listen to music all at the same time? What is our brain doing as it switches between these tasks and how do we use the same components in our head to do all those different things? These are questions that Chris and his team’s Semantic Pointer Architecture Unified Network (Spaun) are determined to answer. Spaun is currently the world’s largest functional brain simulation, and is unique because it’s the first model that can actually emulate behaviours while also modeling the physiology that underlies them.

This groundbreaking work was published in Science, and has been featured by CNN, BBC, Der Spiegel, Popular Science, The Economist and CBC. He is co-author of Neural Engineering, which describes a framework for building biologically realistic neural models, and his new book, How to Build a Brain, applies those methods to large-scale cognitive brain models.

Chris holds a Canada Research Chair in Theoretical Neuroscience at the University of Waterloo. He is also Director of Waterloo’s Centre for Theoretical Neuroscience, and is jointly appointed in the Philosophy and Systems Design Engineering departments, as well as being cross-appointed to Computer Science.

For more on Chris, visit http://arts.uwaterloo.ca/~celiasmi/

Source: TEDxTalks

21:57 Posted in Cognitive Informatics, Neurotechnology & neuroinformatics | Permalink | Comments (0)

Dec 24, 2013

Speaking and cognitive distractions during EEG-based brain control of a virtual neuroprosthesis-arm

Speaking and cognitive distractions during EEG-based brain control of a virtual neuroprosthesis-arm.

J Neuroeng Rehabil. 2013 Dec 21;10(1):116

Authors: Foldes ST, Taylor DM

BACKGROUND: Brain-computer interface (BCI) systems have been developed to provide paralyzed individuals the ability to command the movements of an assistive device using only their brain activity. BCI systems are typically tested in a controlled laboratory environment where the user is focused solely on the brain-control task. However, for practical use in everyday life people must be able to use their brain-controlled device while mentally engaged with the cognitive responsibilities of daily activities and while compensating for any inherent dynamics of the device itself. BCIs that use electroencephalography (EEG) for movement control are often assumed to require significant mental effort, thus preventing users from thinking about anything else while using their BCI. This study tested the impact of cognitive load as well as speaking on the ability to use an EEG-based BCI.

FINDINGS: Six participants controlled the two-dimensional (2D) movements of a simulated neuroprosthesis-arm under three different levels of cognitive distraction. The two higher cognitive load conditions also required simultaneously speaking during BCI use. On average, movement performance declined during higher levels of cognitive distraction, but only by a limited amount. Movement completion time increased by 7.2%, the percentage of targets successfully acquired declined by 11%, and path efficiency declined by 8.6%. Only the decline in percentage of targets acquired and path efficiency were statistically significant (p < 0.05).

CONCLUSION: People who have relatively good movement control of an EEG-based BCI may be able to speak and perform other cognitively engaging activities with only a minor drop in BCI-control performance.

21:03 Posted in Brain-computer interface, Neurotechnology & neuroinformatics | Permalink | Comments (0)

Case hand prosthesis with sense of touch allows amputees to feel

Via Medgadget

There have been a few attempts at simulating a sense of touch in prosthetic hands, but a recently released video from Case Western Reserve University demonstrates newly developed haptic technology that looks convincingly impressive. Here’s a video of an amputee wearing a prosthetic hand with a sensor on the forefinger, while blindfolded and wearing headphones that block any hearing, pulling stems off of cherries. The first part of the video shows him doing it with the sensor turned off and then when it’s activated.

For a picture of the electrode technology, please visit: http://www.flickr.com/photos/tylerlab/10075384624/

20:56 Posted in Neurotechnology & neuroinformatics | Permalink | Comments (0)

NeuroOn mask improves sleep and helps manage jet lag

Via Medgadget

A group of Polish engineers is working on a smart sleeping mask that they hope will allow people to get more out of their resting time, as well as allow for unusual sleeping schedules that would particularly benefit those who are often on-call. The NeuroOn mask will have an embedded EEG for brain wave monitoring, EMG for detecting muscle motion on the face, and sensors that can track whether your pupils are moving and whether you are going through REM. The team is currently raising money on Kickstarter where you can pre-order your own NeuroOn once it’s developed into a final product.

Dec 21, 2013

New Scientist: Mind-reading light helps you stay in the zone

Re-blogged from New Scientist

WITH a click of a mouse, I set a path through the mountains for drone #4. It's one of five fliers under my control, all now heading to different destinations. Routes set, their automation takes over and my mind eases, bringing a moment of calm. But the machine watching my brain notices the lull, decides I can handle more, and drops a new drone in the south-east corner of the map.

The software is keeping my brain in a state of full focus known as flow, or being "in the zone". Too little work, and the program notices my attention start to flag and gives me more drones to handle. If I start to become a frazzled air traffic controller, the computer takes one of the drones off my plate, usually without me even noticing.

The system monitors the workload by pulsing light into my prefrontal cortex 12 times a second. The amount of light that oxygenated and deoxygenated haemoglobin in the blood there absorbs and reflects gives an indication of how mentally engaged I am. Harder brain work calls for more oxygenated blood, and changes how the light is absorbed. Software interprets the signal from this functional near infrared spectroscopy (fNIRS) and uses it to assign me the right level of work.
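The feedback loop described here can be reduced to a tiny controller. The sketch below is a guess at the general shape, not the Tufts software: it assumes the fNIRS classifier outputs a workload estimate between 0 and 1, and the thresholds, drone limits and function name are all hypothetical.

```python
def adjust_drone_count(current: int, workload: float,
                       low: float = 0.3, high: float = 0.7,
                       min_drones: int = 1, max_drones: int = 8) -> int:
    """Return the new number of drones for the operator.

    ASSUMPTION: `workload` is a normalized estimate in [0, 1] derived from
    the fNIRS signal; `low` and `high` are illustrative thresholds.
    """
    if not 0.0 <= workload <= 1.0:
        raise ValueError(f"workload must be within 0..1, got {workload}")
    if workload < low:
        # Attention is flagging: hand the operator one more drone.
        return min(current + 1, max_drones)
    if workload > high:
        # Operator is overloaded: quietly take one drone away.
        return max(current - 1, min_drones)
    # Inside the target band ("in the zone"): leave things alone.
    return current


if __name__ == "__main__":
    drones = 5
    for estimate in [0.2, 0.5, 0.9, 0.9]:
        drones = adjust_drone_count(drones, estimate)
        print(estimate, drones)
```

Leaving a gap between the two thresholds keeps the system from adding and removing drones on every small fluctuation of the signal.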

Dan Afergan, who is running the study at Tufts University in Medford, Massachusetts, points to an on-screen readout as I play. "It's predicting high workload with very high certainty, and, yup, number three just dropped off," he says over my shoulder. Sure enough, I'm now controlling just five drones again.

To achieve this mind-monitoring, I'm hooked up to a bulky rig of fibre-optic cables and have an array of LEDs stuck to my forehead. The cables stream off my head into a box that converts light signals to electrical ones. These fNIRS systems don't have to be this big, though. A team led by Sophie Piper at Charité University of Medicine in Berlin, Germany, tested a portable device on cyclists in Berlin earlier this year – the first time fNIRS has been done during an outdoor activity.

Afergan doesn't plan to be confined to the lab for long either. He's studying ways to integrate brain-activity measuring into the Google Glass wearable computer. A lab down the hall already has a prototype fNIRS system on a chip that could, with a few improvements, be built into a Glass headset. "Glass is already on your forehead. It's really not much of a stretch to imagine building fNIRS into the headband," he says.

Afergan is working on a Glass navigation system for use in cars that responds to a driver's level of focus. When they are concentrating hard, Glass will show only basic instructions, or perhaps just give audio directions. When the driver is focusing less, on a straight stretch of road perhaps, Glass will provide more details of the route. The team also plans to adapt Google Now – the company's digital assistant software – for Glass so that it only gives you notifications when your mind has room for them.

Peering into drivers' minds will become increasingly important, says Erin Solovey, a computer scientist at Drexel University in Philadelphia, Pennsylvania. Many cars have automatic systems for adaptive cruise control, keeping in the right lane and parking. These can help, but they also bring the risk that drivers may not stay focused on the task at hand, because they are relying on the automation.

Systems using fNIRS could monitor a driver's focus and adjust the level of automation to keep drivers safely engaged with what the car is doing, she says.

This article appeared in print under the headline "Stay in the zone"