Apr 06, 2017

Crowdsourcing VR research

If 2016 was a golden year for virtual reality, there is reason to believe that the coming year may be even better. According to a recent market forecast by International Data Corporation (IDC), worldwide revenues for the augmented reality and virtual reality market are projected to grow from $5.2 billion in 2016 to more than $162 billion in 2020.

As virtual reality becomes a mass-market product, it is crucial to understand its psychological effects on users.

Over the last decade, a growing body of research has addressed the positive and negative implications of virtual experience for the human mind. Yet many questions remain unanswered.

Some of these questions concern the defining features of virtual experience, i.e., what it means to be “present” in a computer-simulated reality. Others concern the drawbacks of virtual environments, such as cybersickness, addiction and other psychological disorders caused by prolonged exposure to immersive virtual worlds.

For example, in a recent article in The Atlantic, Rebecca Searles wrote that after exploring a virtual environment, some users have reported a feeling of detachment that can last days or even weeks. This effect had already been documented some years ago by Frederick Aardema and colleagues in the journal Cyberpsychology, Behavior, and Social Networking. The team administered questionnaires measuring dissociation, sense of presence, and immersion to a nonclinical sample before and after exposure to a virtual environment. Findings showed that after exposure to virtual reality, participants reported an increase in dissociative experience (depersonalization and derealization), including a lessened sense of presence in objective reality.
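A before-and-after design like this one is usually summarized through each participant's change score. The minimal Python sketch below computes the mean change and a paired t statistic from matched pre/post scores. The helper name `paired_t` and the five score pairs are hypothetical, invented purely for illustration; they are not data or methods from the Aardema study.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (mean change, paired t statistic) for matched pre/post scores.

    Hypothetical helper for illustration; a positive change means a higher
    score after exposure.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two matched pre/post pairs")
    diffs = [after - before for before, after in zip(pre, post)]
    d_bar = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("t is undefined when every change score is identical")
    t = d_bar / (sd / math.sqrt(len(diffs)))
    return d_bar, t


# Invented dissociation scores for five participants (illustration only).
pre = [10, 12, 9, 14, 11]
post = [13, 15, 10, 16, 14]
change, t = paired_t(pre, post)
print(f"mean change = {change:.2f}, t = {t:.2f}")  # mean change = 2.40, t = 6.00
```

A p-value would additionally require the t distribution with n - 1 degrees of freedom (for example via SciPy's `scipy.stats.ttest_rel`); that step is left out to keep the sketch dependency-free.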

However, more research is needed to understand this phenomenon, and other aspects of virtual experience that are still to be uncovered.

To date, most studies on virtual reality have been conducted in scientific laboratories, because of the relatively high cost of virtual reality hardware and the need for specialist expertise in system setup and maintenance.

However, the growing diffusion of commercial virtual reality headsets and software could make it possible to move research from the laboratory into private homes. For example, researchers could create online experiments and ask people to participate using their own virtual reality equipment, possibly offering some kind of reward for their involvement.

An online collaboration platform could be developed to plan studies, create research protocols, and collect and share participant data. This open research strategy may offer several advantages. For example, the platform would give researchers the opportunity to rapidly gather input from large numbers of virtual reality users. Furthermore, users themselves could be involved in formulating research questions and co-creating experiments with researchers.

In the medical field, this approach has been successfully pioneered by online patient communities such as PatientsLikeMe and CureTogether. These social health sites provide a real-time research platform that allows clinical researchers and patients to partner to improve health outcomes. Other examples of internet-based citizen science projects include applications in astronomy, environmental protection and neuroscience, to name a few (more examples can be found on Zooniverse, the world’s largest citizen science web portal).

But virtual reality could extend the potential of citizen science even further. For example, virtual reality applications could be designed specifically for research purposes, e.g., virtual reality games that “manipulate” variables of interest to researchers, or virtual reality versions of classic experimental paradigms such as the “Stroop test”. It might even be possible to create virtual reality simulations of entire research laboratories, allowing participants to take part in online experiments through their avatars.
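To give a sense of what porting a classic paradigm involves, here is a minimal Python sketch of the task logic behind a Stroop test, independent of any VR engine: each trial pairs a color word with an ink color, and the interference effect is the mean reaction time on correct incongruent trials minus that on correct congruent trials. The function names, the four-color set and the 50% congruent ratio are assumptions chosen for illustration; a VR version would only change how the stimulus is rendered and how the response is captured.

```python
import random
from statistics import mean

# Hypothetical color set; a VR version might render these as 3D text.
COLORS = ["red", "green", "blue", "yellow"]


def make_trials(n, congruent_ratio=0.5, seed=None):
    """Generate n Stroop trials as (word, ink_color) pairs."""
    rng = random.Random(seed)  # seeded generator for reproducible sessions
    trials = []
    for _ in range(n):
        word = rng.choice(COLORS)
        if rng.random() < congruent_ratio:
            ink = word  # congruent: the word names its own ink color
        else:
            # incongruent: pick any ink color other than the named one
            ink = rng.choice([c for c in COLORS if c != word])
        trials.append((word, ink))
    return trials


def stroop_interference(results):
    """Mean RT of correct incongruent trials minus mean RT of correct congruent trials.

    results: list of (word, ink, reaction_time_ms, correct) tuples.
    Raises statistics.StatisticsError if either trial type has no correct responses.
    """
    congruent = [rt for word, ink, rt, ok in results if ok and word == ink]
    incongruent = [rt for word, ink, rt, ok in results if ok and word != ink]
    return mean(incongruent) - mean(congruent)


# Toy responses (invented) to show the calculation.
sample = [
    ("red", "red", 520, True),
    ("blue", "green", 610, True),
    ("green", "green", 540, True),
    ("yellow", "red", 650, True),
    ("blue", "red", 900, False),  # error trial, excluded from the RT means
]
print(stroop_interference(sample))  # 100
```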

09:41 Posted in Research tools, Telepresence & virtual presence, Virtual worlds

Oct 15, 2016

Transforming Experience: The Potential of Augmented Reality and Virtual Reality for Enhancing Personal and Clinical Change

Front. Psychiatry, 30 September 2016 http://dx.doi.org/10.3389/fpsyt.2016.00164

Giuseppe Riva, Rosa M. Baños, Cristina Botella, Fabrizia Mantovani and Andrea Gaggioli

During life, many personal changes occur. These include changing house, school, work, and even friends and partners. However, daily experience clearly shows that, in some situations, subjects are unable to change even if they want to. Recent advances in psychology and neuroscience are now providing a better view of personal change, the change affecting our assumptive world: (a) the focus of personal change is reducing the distance between self and reality (conflict); (b) this reduction is achieved through (1) an intense focus on the particular experience creating the conflict or (2) an internal or external reorganization of this experience; (c) personal change requires a progression through a series of different stages that, however, happen in discontinuous and non-linear ways; and (d) clinical psychology is often used to facilitate personal change when subjects are unable to move forward. Starting from these premises, the aim of this paper is to review the potential of virtuality for enhancing the processes of personal and clinical change. First, the paper focuses on the two leading virtual technologies – augmented reality (AR) and virtual reality (VR) – exploring their current uses in behavioral health and the outcomes of the 28 available systematic reviews and meta-analyses. Then the paper discusses the added value provided by VR and AR in transforming our external experience by focusing on the high level of personal efficacy and self-reflectiveness generated by their sense of presence and emotional engagement. Finally, it outlines the potential future use of virtuality for transforming our inner experience by structuring, altering, and/or replacing our bodily self-consciousness. The final outcome may be a new generation of transformative experiences that provide knowledge that is epistemically inaccessible to the individual until he or she has that experience, while at the same time transforming the individual's worldview.

Jun 21, 2016

New book on Human Computer Confluence - FREE PDF!

Two pieces of good news for Positive Technology followers:

1) Our new book on Human Computer Confluence is out!

2) It can be downloaded for free as open access.

Human-computer confluence refers to an invisible, implicit, embodied or even implanted interaction between humans and system components. New classes of user interfaces are emerging that use multiple sensors and can adapt their physical properties to the user's current situational context.

A key aspect of human-computer confluence is its potential for transforming human experience in the sense of bending, breaking and blending the barriers between the real, the virtual and the augmented, to allow users to experience their body and their world in new ways. Research on Presence, Embodiment and Brain-Computer Interfaces is already exploring these boundaries and asking questions such as: Can we seamlessly move between the virtual and the real? Can we assimilate fundamentally new senses through confluence?

The aim of this book is to explore the boundaries and intersections of the multidisciplinary field of HCC and discuss its potential applications in different domains, including healthcare, education, training and even the arts.


Please cite as follows:

Andrea Gaggioli, Alois Ferscha, Giuseppe Riva, Stephen Dunne, Isabell Viaud-Delmon (2016). Human computer confluence: transforming human experience through symbiotic technologies. Warsaw: De Gruyter. ISBN 9783110471120.

09:53 Posted in AI & robotics, Augmented/mixed reality, Biofeedback & neurofeedback, Blue sky, Brain training & cognitive enhancement, Brain-computer interface, Cognitive Informatics, Cyberart, Cybertherapy, Emotional computing, Enactive interfaces, Future interfaces, ICT and complexity, Neurotechnology & neuroinformatics, Positive Technology events, Research tools, Self-Tracking, Serious games, Technology & spirituality, Telepresence & virtual presence, Virtual worlds, Wearable & mobile

May 26, 2016

From User Experience (UX) to Transformative User Experience (T-UX)

In 1999, Joseph Pine and James Gilmore wrote a seminal book titled “The Experience Economy” (Harvard Business School Press, Boston, MA) that theorized the shift from a service-based economy to an experience-based economy.

According to these authors, in the new experience economy the goal of a purchase is no longer to own a product (be it a good or a service), but to use it in order to enjoy a compelling experience. An experience, thus, is a whole new type of offering: in contrast to commodities, goods and services, it is designed to be as personal and memorable as possible. Just as in a theatrical performance, companies stage meaningful events to engage customers in a memorable and personal way, by offering activities that provide engaging and rewarding experiences.

Indeed, if one looks back at the past ten years, the concept of experience has become more central to several fields, including tourism, architecture, and – perhaps more relevant for this column – to human-computer interaction, with the rise of “User Experience” (UX).

The concept of UX was introduced by Donald Norman in a 1995 article published in the CHI proceedings (D. Norman, J. Miller, A. Henderson: What You See, Some of What's in the Future, And How We Go About Doing It: HI at Apple Computer. Proceedings of CHI 1995, Denver, Colorado, USA). Norman argued that focusing exclusively on usability attributes (e.g., ease of use, efficiency, effectiveness) when designing an interactive product is not enough; one should take into account the user's whole experience with the system, including emotional and contextual needs. Since then, the UX concept has assumed increasing importance in HCI. As McCarthy and Wright emphasized in their book “Technology as Experience” (MIT Press, 2004):

“In order to do justice to the wide range of influences that technology has in our lives, we should try to interpret the relationship between people and technology in terms of the felt life and the felt or emotional quality of action and interaction.” (p. 12).

However, according to Pine and Gilmore, experience may not be the last step of what they call the “Progression of Economic Value”. They speculated further into the future, identifying the “Transformation Economy” as the likely next phase. In their view, while experiences are essentially memorable events that stimulate the sensorial and emotional levels, transformations go much further: they are the result of a series of experiences staged by companies to guide customers in learning, taking action and eventually achieving their aspirations and goals.

In Pine and Gilmore's terms, an aspirant is an individual who seeks advice for personal change (e.g., a better figure, a new career), while the provider of this change (e.g., a dietitian, a university) is an elicitor. The elicitor guides the aspirant through a series of experiences designed with specific purposes and goals. According to Pine and Gilmore, the main difference between an experience and a transformation is that the latter occurs when an experience is customized:

“When you customize an experience to make it just right for an individual - providing exactly what he needs right now - you cannot help changing that individual. When you customize an experience, you automatically turn it into a transformation, which companies create on top of experiences (recall that phrase: “a life-transforming experience”), just as they create experiences on top of services and so forth” (p. 244).

A further key difference between experiences and transformations concerns their effects: because an experience is inherently personal, no two people can have the same one. Likewise, no individual can undergo the same transformation twice: the second time it is attempted, the individual is no longer the same person (pp. 254-255).

But what will be the impact of this upcoming “transformation economy” on how people relate to technology? If in the experience economy the buzzword is “User Experience”, in the next stage the new buzzword might be “User Transformation”.

Indeed, we can see some initial signs of this shift. For example, FitBit and similar self-tracking gadgets are starting to offer personalized advice to foster enduring changes in users’ lifestyles. Another example comes from the fields of ambient intelligence and domotics, where there is an increasing focus on designing systems that learn from the user’s behaviour (e.g., by tracking the movements of an elderly person at home) to provide context-aware adaptive services (e.g., sending an alert when the user is at risk of falling).

But the most important ICT step towards the transformation economy may come with the introduction of next-generation immersive virtual reality systems. Since these new systems are based on mobile devices (an example is the recent partnership between Oculus and Samsung), they can deliver VR experiences that incorporate information on the user's external and internal context (e.g., time, location, temperature, mood) by using the sensors built into the mobile phone.

By personalizing the immersive experience with context-based information, it might be possible to induce higher levels of involvement and presence in the virtual environment. In the case of cybertherapeutic applications, this could translate into more effective, transformative virtual healing experiences.
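As a rough illustration of context-based personalization, the sketch below maps a few phone-derived readings to scene parameters. The `Context` fields, the thresholds and the mapping rules are all hypothetical; a real application would read these values from the phone's sensor APIs (or, for mood, from a self-report prompt) and would need to tune and validate the rules empirically.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """Hypothetical snapshot of phone-derived context."""
    hour: int             # local time of day, 0-23
    temperature_c: float  # ambient temperature in degrees Celsius
    mood: int             # self-reported mood, 1 (low) to 5 (high)


def personalize_scene(ctx: Context) -> dict:
    """Map context to illustrative scene parameters (rules are invented)."""
    if not 0 <= ctx.hour <= 23:
        raise ValueError("hour must be in 0-23")
    if not 1 <= ctx.mood <= 5:
        raise ValueError("mood must be in 1-5")
    # Match the virtual lighting to the user's actual time of day.
    lighting = "daylight" if 7 <= ctx.hour < 19 else "dusk"
    # Warm palette below 15 degrees Celsius, cooler palette otherwise.
    palette = "warm" if ctx.temperature_c < 15 else "cool"
    # Slower, calmer pacing for users reporting low mood.
    pace = "slow" if ctx.mood <= 2 else "normal"
    return {"lighting": lighting, "palette": palette, "pace": pace}


print(personalize_scene(Context(hour=21, temperature_c=8.0, mood=2)))
# {'lighting': 'dusk', 'palette': 'warm', 'pace': 'slow'}
```

Keeping the mapping as a pure function of a context snapshot makes each rule easy to inspect and test separately from the rendering code.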

Furthermore, the emergence of "symbiotic technologies", such as neuroprosthetic devices and neuro-biofeedback, is enabling a direct connection between the computer and the brain. Increasingly, these neural interfaces are moving from the biomedical domain to become consumer products. But unlike existing digital experiential products, symbiotic technologies have the potential to transform basic human experiences more radically.

Brain-computer interfaces, immersive virtual reality, augmented reality and their various combinations will allow users to create “personalized alterations” of experience. Just as today we can download and install “plug-ins” (apps that personalize our experience with hardware and software products), very soon we may be able to download and install new “extensions of the self”, or “experiential plug-ins”, which will provide us with a number of options for altering, replacing or simulating our sensorial, emotional and cognitive processes.

Such mediated recombinations of human experience will result from the application of existing neurotechnologies in completely new domains. Although virtual reality and brain-computer interfaces were originally developed for applications in specific domains (e.g., military simulations, neurorehabilitation), today their use has been extended to other fields, ranging from entertainment to education.

In biology, Stephen Jay Gould and Elizabeth Vrba (Paleobiology, 8, 4-15, 1982) defined “exaptation” as the process by which a feature comes to serve a function for which it was not originally selected. Likewise, the exaptation of neurotechnologies to the digital consumer market may lead to the rise of a novel “neuro-experience economy”, in which technology-mediated transformation of experience is the main product.

Just as a Genetically-Modified Organism (GMO) is an organism whose genetic material has been altered using genetic-engineering techniques, we could define a Technologically-Modified Experience (ETM) as a re-engineered experience resulting from the artificial manipulation of the neurobiological bases of sensorial, affective, and cognitive processes.

Clearly, the emergence of the transformative neuro-experience economy will not happen in weeks or months but rather over years. It will take some time before people find brain-computer devices on the shelves of electronics stores: most of these tools are still in the pre-commercial phase at best, and some exist only in laboratories.

Nevertheless, the mere possibility that such a scenario will sooner or later come to pass raises important questions that should be addressed before symbiotic technologies enter our lives: does technological alteration of human experience threaten the autonomy of individuals, or the authenticity of their lives? How can we help individuals decide which transformations are good or bad for them?

Addressing these issues will require the collaboration of many disciplines, including philosophy, computer ethics and, of course, cyberpsychology.

Dec 26, 2015

Manus VR Experiment with Valve’s Lighthouse to Track VR Gloves

Via Road to VR

The Manus VR team demonstrate their latest experiment, utilising Valve’s laser-based Lighthouse system to track their in-development VR glove.

Manus VR (previously Manus Machina), the company from Eindhoven, Netherlands, dedicated to building VR input devices, seems to have gained momentum in 2015. They secured their first round of seed funding, have shipped early units to developers, and have now extended their R&D efforts to Valve’s laser-based tracking solution, Lighthouse, as used in the forthcoming HTC Vive headset and SteamVR controllers.

The Manus VR team seem to have cannibalised a set of SteamVR controllers, leveraging the positional tracking of wrist-mounted units to augment Manus VR’s existing glove-mounted IMUs. Last time I tried the system, the finger joint detection was pretty good, but the Samsung Gear VR camera-based positional tracking understandably struggled with latency and accuracy. The experience on show seems immeasurably better, perhaps unsurprisingly.
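The post does not say how Manus VR combines the two data sources. One common, generic way to blend a fast but drifting relative sensor (such as an IMU) with a slower, drift-free absolute reference (such as an optical tracker like Lighthouse) is a complementary filter. The one-dimensional Python sketch below illustrates that technique under those assumptions; it is not Manus VR's implementation, and the function name, the `alpha` weight and the input format are hypothetical.

```python
def complementary_filter(imu_velocities, optical_positions, dt, alpha=0.98, x0=0.0):
    """Fuse IMU velocity with absolute optical position along one axis.

    Each step predicts the new position by integrating the IMU velocity
    (responsive, but drifts over time), then pulls the estimate toward the
    optical fix (drift-free, but noisier or laggier). An alpha close to 1
    trusts the IMU prediction more; alpha = 0 simply returns the optical fixes.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    x = x0
    estimates = []
    for v, p in zip(imu_velocities, optical_positions):
        predicted = x + v * dt                   # dead reckoning from the IMU
        x = alpha * predicted + (1.0 - alpha) * p  # blend in the absolute reference
        estimates.append(x)
    return estimates


# A stationary hand whose optical position is 1.0 while the filter starts at 0.0:
est = complementary_filter([0.0] * 500, [1.0] * 500, dt=0.01)
print(round(est[-1], 3))  # 1.0
```

The choice of `alpha` trades responsiveness against drift correction; in practice it would be tuned to the update rates and noise levels of the actual sensors.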

11:47 Posted in Telepresence & virtual presence, Virtual worlds

Oct 18, 2014

New Technique Helps Diagnose Consciousness in Locked-in Patients

Via Medgadget

Brain networks in two behaviourally similar vegetative patients (left and middle panels), one of whom imagined playing tennis (middle panel), alongside a healthy adult (right panel). Credit: Srivas Chennu

People locked into a vegetative state due to disease or injury are a major mystery for medical science. Some may be fully unconscious, while others remain aware of what’s going on around them but can’t speak or move to show it. Now scientists at Cambridge have reported in the journal PLOS Computational Biology on a new technique that can help identify locked-in people who can still hear and remain conscious.

Some details from the study abstract:

We devised a novel topographical metric, termed modular span, which showed that the alpha network modules in patients were also spatially circumscribed, lacking the structured long-distance interactions commonly observed in the healthy controls. Importantly however, these differences between graph-theoretic metrics were partially reversed in delta and theta band networks, which were also significantly more similar to each other in patients than controls. Going further, we found that metrics of alpha network efficiency also correlated with the degree of behavioural awareness. Intriguingly, some patients in behaviourally unresponsive vegetative states who demonstrated evidence of covert awareness with functional neuroimaging stood out from this trend: they had alpha networks that were remarkably well preserved and similar to those observed in the controls. Taken together, our findings inform current understanding of disorders of consciousness by highlighting the distinctive brain networks that characterise them. In the significant minority of vegetative patients who follow commands in neuroimaging tests, they point to putative network mechanisms that could support cognitive function and consciousness despite profound behavioural impairment.

Study in PLOS Computational Biology: Spectral Signatures of Reorganised Brain Networks in Disorders of Consciousness
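For readers unfamiliar with graph-theoretic network metrics such as the "network efficiency" mentioned in the abstract, the sketch below computes global efficiency, a standard measure that averages the inverse shortest-path length over all ordered pairs of nodes (pairs with no connecting path contribute zero). It works on an unweighted, undirected graph given as an adjacency dict. The study itself analysed weighted EEG connectivity networks in specific frequency bands and introduced its own "modular span" metric; this simplified sketch reproduces neither, and the function names are hypothetical.

```python
from collections import deque


def shortest_path_lengths(adj, source):
    """Breadth-first search hop counts from source in an unweighted graph."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def global_efficiency(adj):
    """Average of 1/d(i, j) over all ordered pairs i != j; unreachable pairs add 0.

    adj maps each node to its neighbours; list every undirected edge in both directions.
    """
    nodes = list(adj)
    n = len(nodes)
    if n < 2:
        return 0.0
    total = 0.0
    for s in nodes:
        dist = shortest_path_lengths(adj, s)
        total += sum(1.0 / d for t, d in dist.items() if t != s)
    return total / (n * (n - 1))


path = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
print(round(global_efficiency(path), 3))  # 0.833
```

A fully connected network scores 1.0, while networks lacking long-distance links, such as the spatially circumscribed modules described in the abstract, tend to score lower.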

Oct 17, 2014

Leia Display System - promo video HD short version

www.leiadisplay.com

www.facebook.com/LeiaDisplaySystem

21:11 Posted in Telepresence & virtual presence

Oct 06, 2014

Is the metaverse still alive?

In the last decade, online virtual worlds such as Second Life and the like have become enormously popular. Since their appearance on the technology landscape, many analysts have regarded shared 3D virtual spaces as a disruptive innovation that would render the Web itself obsolete.

This high expectation attracted significant investments from large corporations such as IBM, which started building virtual spaces and offices in the metaverse. Then, when it became clear that these promises would not be kept, disillusionment set in and virtual worlds started losing their edge. However, this is not a new phenomenon: in high tech, it happens over and over again.

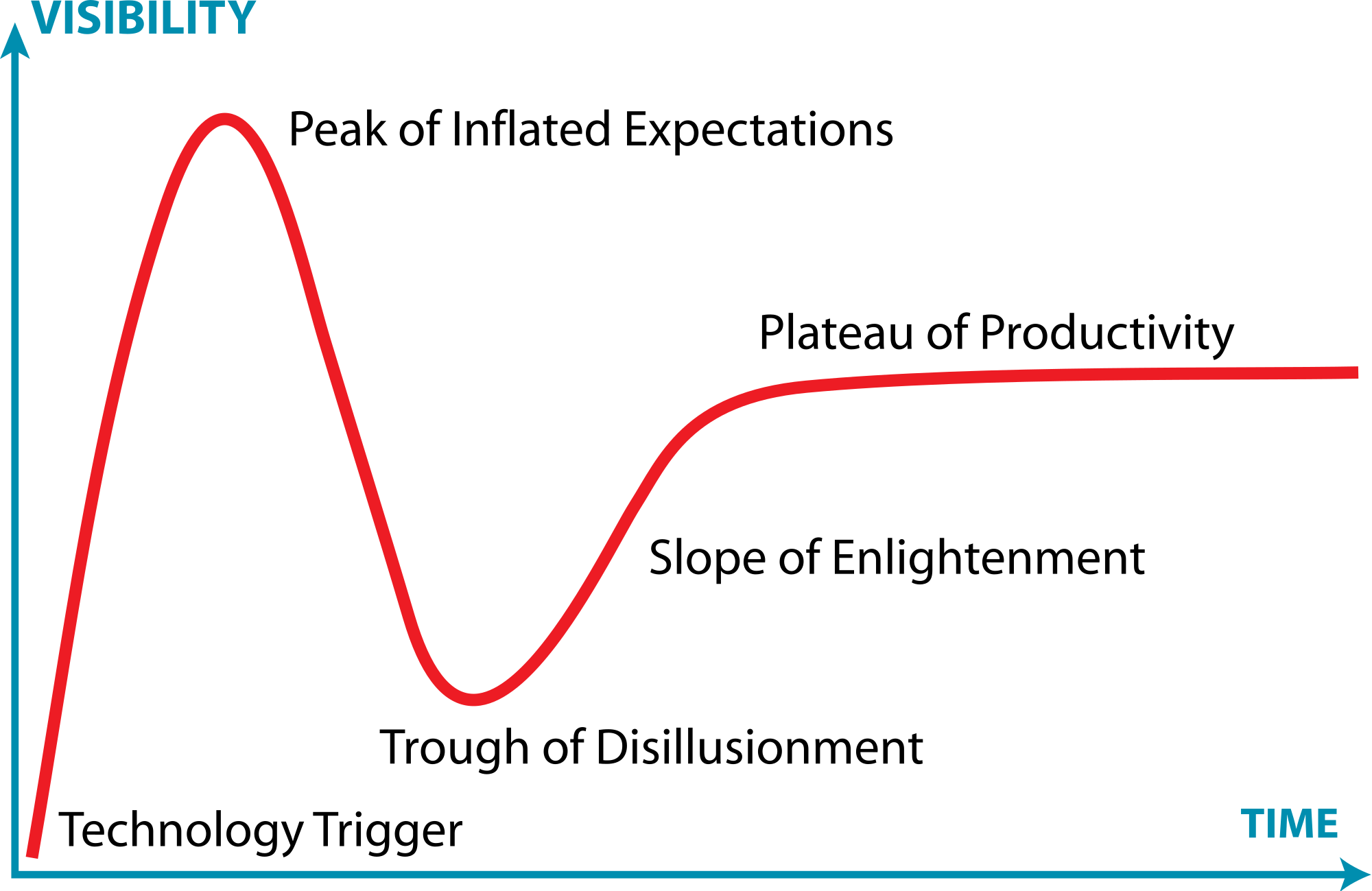

The US consulting company Gartner has developed a very popular model to describe this effect, called the “Hype Cycle”. The Hype Cycle provides a graphic representation of the maturity and adoption of technologies and applications.

It consists of five phases, which describe how emerging technologies typically evolve.

In the first, “technology trigger” phase, a new technology is launched that attracts media interest. This is followed by the “peak of inflated expectations”, characterized by a proliferation of positive articles and comments that generate excessive expectations among users and stakeholders.

In the next, “trough of disillusionment” phase, these exaggerated expectations are not fulfilled, resulting in a growing number of negative comments, generally followed by growing indifference.

In the “slope of enlightenment”, the technology's potential for further applications becomes more broadly understood, and an increasing number of companies start using it.

In the final, “plateau of productivity” stage, the emerging technology establishes itself as an effective tool and mainstream adoption takes off.

So at what stage of the hype cycle are virtual worlds now?

After the 2006-2007 peak, metaverses entered the downward phase of the hype cycle, progressively losing media interest, investments and users. Many high-tech analysts still consider this decline an irreversible process.

However, the negative outlook that pushed shared virtual worlds into the trough of disillusionment may soon be reversed, thanks to the new interest in virtual reality raised by the Oculus Rift (recently acquired by Facebook for $2 billion), Sony’s Project Morpheus and similar immersive displays, which are still at the takeoff stage of the hype cycle.

Oculus Rift's chief scientist Michael Abrash makes no secret of the fact that his main ambition has always been to build a metaverse such as the one described in Neal Stephenson's 1992 cyberpunk novel Snow Crash. As he writes on the Oculus blog:

"Sometime in 1993 or 1994, I read Snow Crash and for the first time thought something like the Metaverse might be possible in my lifetime."

Furthermore, despite the negative comments and disappointed expectations, the metaverse keeps attracting new users: on its 10th anniversary, June 23, 2013, an infographic reported that Second Life had over 1 million monthly visitors from around the world, more than 400,000 new accounts per month, and 36 million registered users.

So will Michael Abrash’s metaverse dream come true? Even if one looks into the crystal ball of the hype cycle, the answer is not easily found.

22:36 Posted in Blue sky, Serious games, Telepresence & virtual presence, Virtual worlds

First direct brain-to-brain communication between human subjects

Via KurzweilAI.net

An international team of neuroscientists and robotics engineers have demonstrated the first direct remote brain-to-brain communication between two humans located 5,000 miles away from each other and communicating via the Internet, as reported in a paper recently published in PLOS ONE (open access).

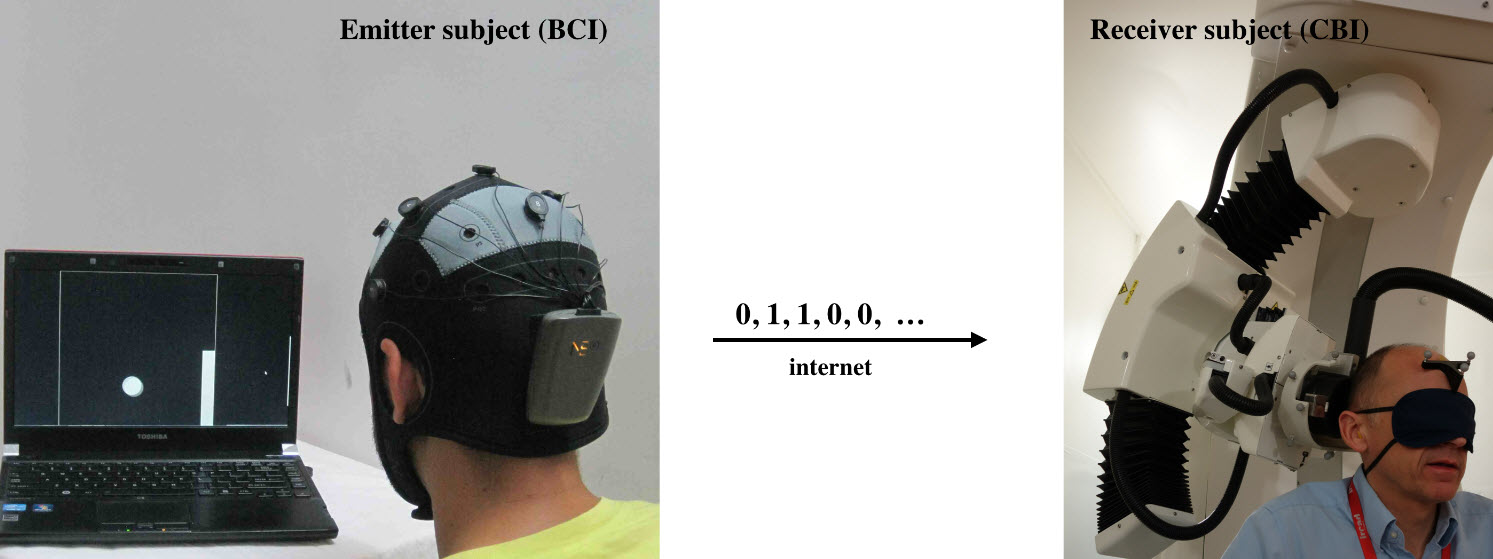

Emitter and receiver subjects with non-invasive devices supporting, respectively, a brain-computer interface (BCI), based on EEG changes, driven by motor imagery (left) and a computer-brain interface (CBI) based on the reception of phosphenes elicited by neuro-navigated TMS (right) (credit: Carles Grau et al./PLoS ONE)

In India, researchers encoded two words (“hola” and “ciao”) as binary strings and presented them as a series of cues on a computer monitor. They recorded the subject’s EEG signals as the subject was instructed to think about moving his feet (binary 0) or hands (binary 1). They then sent the recorded series of binary values in an email message to researchers in France, 5,000 miles away.

There, the binary strings were converted into a series of transcranial magnetic stimulation (TMS) pulses applied to a hotspot location in the right visual occipital cortex that either produced a phosphene (perceived flash of light) or not.
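The encoding pipeline described above can be sketched in a few lines of Python. The post does not specify the binary code the researchers used, so 8-bit ASCII (most significant bit first) is an assumption here, as is the choice that a 1 bit corresponds to the TMS condition that elicits a phosphene. The function names are hypothetical, and the EEG classification and TMS delivery themselves are of course outside the scope of the sketch.

```python
def encode_word(word, bits_per_char=8):
    """Encode a word as a list of bits (assumed: 8-bit ASCII, most significant bit first)."""
    bits = []
    for ch in word:
        code = ord(ch)
        if code >= 2 ** bits_per_char:
            raise ValueError(f"{ch!r} does not fit in {bits_per_char} bits")
        bits.extend((code >> i) & 1 for i in range(bits_per_char - 1, -1, -1))
    return bits


def emitter_cues(bits):
    """Emitter side: cue motor imagery of the feet for 0 and of the hands for 1."""
    return ["hands" if b else "feet" for b in bits]


def receiver_pulses(bits):
    """Receiver side: a 1 calls for the TMS condition that elicits a phosphene (assumed mapping)."""
    return ["phosphene" if b else "none" for b in bits]


def decode_bits(bits, bits_per_char=8):
    """Reassemble the bit list reported by the receiver into text."""
    if len(bits) % bits_per_char:
        raise ValueError("bit count is not a multiple of bits_per_char")
    chars = []
    for i in range(0, len(bits), bits_per_char):
        code = 0
        for b in bits[i:i + bits_per_char]:
            code = (code << 1) | b  # shift in one bit at a time, MSB first
        chars.append(chr(code))
    return "".join(chars)


bits = encode_word("hola")
print(emitter_cues(bits[:8]))  # motor-imagery cues for the letter 'h'
print(decode_bits(bits))       # hola
```

In the real experiment each bit travels through a noisy chain (imagery, EEG classification, email, TMS, phosphene report), so a practical scheme would likely add redundancy or error checking on top of a plain code like this one.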

“We wanted to find out if one could communicate directly between two people by reading out the brain activity from one person and injecting brain activity into the second person, and do so across great physical distances by leveraging existing communication pathways,” explains coauthor Alvaro Pascual-Leone, MD, PhD, Director of the Berenson-Allen Center for Noninvasive Brain Stimulation at Beth Israel Deaconess Medical Center (BIDMC) and Professor of Neurology at Harvard Medical School.

A team of researchers from Starlab Barcelona, Spain and Axilum Robotics, Strasbourg, France conducted the experiment. A second similar experiment was conducted between individuals in Spain and France.

“We believe these experiments represent an important first step in exploring the feasibility of complementing or bypassing traditional language-based or other motor/PNS mediated means in interpersonal communication,” the researchers say in the paper.

“Although certainly limited in nature (e.g., the bit rates achieved in our experiments were modest even by current BCI (brain-computer interface) standards, mostly due to the dynamics of the precise CBI (computer-brain interface) implementation), these initial results suggest new research directions, including the non-invasive direct transmission of emotions and feelings or the possibility of sense synthesis in humans — that is, the direct interface of arbitrary sensors with the human brain using brain stimulation, as previously demonstrated in animals with invasive methods.

Brain-to-brain (B2B) communication system overview. On the left, the BCI subsystem is shown schematically, including electrodes over the motor cortex and the EEG amplifier/transmitter wireless box in the cap. Motor imagery of the feet codes the bit value 0, of the hands codes bit value 1. On the right, the CBI system is illustrated, highlighting the role of coil orientation for encoding the two bit values. Communication between the BCI and CBI components is mediated by the Internet. (Credit: Carles Grau et al./PLoS ONE)

“The proposed technology could be extended to support a bi-directional dialogue between two or more mind/brains (namely, by the integration of EEG and TMS systems in each subject). In addition, we speculate that future research could explore the use of closed mind-loops in which information associated to voluntary activity from a brain area or network is captured and, after adequate external processing, used to control other brain elements in the same subject. This approach could lead to conscious synthetically mediated modulation of phenomena best detected subjectively by the subject, including emotions, pain and psychotic, depressive or obsessive-compulsive thoughts.

“Finally, we anticipate that computers in the not-so-distant future will interact directly with the human brain in a fluent manner, supporting both computer- and brain-to-brain communication routinely. The widespread use of human brain-to-brain technologically mediated communication will create novel possibilities for human interrelation with broad social implications that will require new ethical and legislative responses.”

This work was partly supported by the EU FP7 FET Open HIVE project, the Starlab Kolmogorov project, and the Neurology Department of the Hospital de Bellvitge.

Sep 25, 2014

With Cyberith's Virtualizer, you can run around wearing an Oculus Rift

00:38 Posted in Telepresence & virtual presence, Virtual worlds

Sep 21, 2014

First hands-on: Crescent Bay demo

I just tested the Oculus Crescent Bay prototype at the Oculus Connect event in LA.

I still can't close my mouth.

The demo lasted about 10 minutes, during which several scenes were presented. The resolution and frame rate are astounding, and you can turn completely around. This is the first time in my life I can truly say I was there.

I believe this is really the beginning of a new era for VR, and I am sure I won't sleep tonight thinking about the infinite possibilities and applications of this technology. And I don't think I am exaggerating; if anything, I am underestimating.

04:50 Posted in Telepresence & virtual presence, Virtual worlds, Wearable & mobile

Aug 03, 2014

Fly like a Birdly

Birdly is a full-body, fully immersive virtual reality flight simulator developed at the Zurich University of the Arts (ZHdK). With Birdly, you embody a bird, the Red Kite, visualized through the Oculus Rift, as it soars over a 3D virtual San Francisco, with the experience heightened by sonic, olfactory, and wind feedback.

21:38 Posted in Blue sky, Creativity and computers, Telepresence & virtual presence, Virtual worlds

Jul 09, 2014

Experiential Virtual Scenarios With Real-Time Monitoring (Interreality) for the Management of Psychological Stress: A Block Randomized Controlled Trial

The recent convergence between technology and medicine is offering innovative methods and tools for behavioral health care. Among these, an emerging approach is the use of virtual reality (VR) within exposure-based protocols for anxiety disorders, and in particular posttraumatic stress disorder. However, no systematically tested VR protocols are available for the management of psychological stress.

Objective: Our goal was to evaluate the efficacy of a new technological paradigm, Interreality, for the management and prevention of psychological stress. The main feature of Interreality is a twofold link between the virtual and the real world achieved through experiential virtual scenarios (fully controlled by the therapist, used to learn coping skills and improve self-efficacy) with real-time monitoring and support (identifying critical situations and assessing clinical change) using advanced technologies (virtual worlds, wearable biosensors, and smartphones).

Full text paper available at: http://www.jmir.org/2014/7/e167/
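The abstract does not describe how Interreality's monitoring component identifies critical situations. Purely to illustrate the general idea of real-time biosensor monitoring, the Python sketch below marks the onset of a sustained heart-rate elevation relative to a rolling baseline. The function name, thresholds and window sizes are hypothetical and not clinically validated.

```python
from collections import deque
from statistics import mean


def flag_stress_episodes(heart_rates, baseline_window=30, threshold=1.2, min_run=5):
    """Return the sample indices at which sustained heart-rate elevations begin.

    A sample counts as elevated when it exceeds `threshold` times the mean of
    the rolling baseline; an episode is flagged once `min_run` consecutive
    samples are elevated. All parameter values are hypothetical.
    """
    baseline = deque(maxlen=baseline_window)
    run = 0
    onsets = []
    for i, hr in enumerate(heart_rates):
        ready = len(baseline) == baseline_window
        if ready and hr > threshold * mean(baseline):
            run += 1
            if run == min_run:
                onsets.append(i - min_run + 1)  # index where the run started
        else:
            run = 0
            baseline.append(hr)  # baseline is built only from non-elevated samples
    return onsets


# Synthetic trace: 30 resting samples, 10 elevated samples at 90 bpm, then rest.
trace = [70] * 30 + [90] * 10 + [70] * 10
print(flag_stress_episodes(trace))  # [30]
```

Excluding elevated samples from the baseline keeps an episode from masking itself, at the cost of never adapting to a genuine long-term shift; a deployed system would also need to separate stress from, say, physical exertion.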

Jun 30, 2014

Never do a Tango with an Eskimo

Apr 15, 2014

Avegant - Glyph Kickstarter - Wearable Retinal Display

Via Mashable

Move over Google Glass and Oculus Rift, there's a new kid on the block: Glyph, a mobile, personal theater.

Glyph looks like a normal headset and operates like one, too. That is, until you move the headband down over your eyes and it becomes a fully functional visor that displays movies, television shows, video games or any other media connected via the attached HDMI cable.

Using Virtual Retinal Display (VRD), a technology that mimics the way we see light, the Glyph projects images directly onto your retina using one million micromirrors in each eyepiece. These micromirrors reflect the images onto the retina, reportedly producing crisp and vivid picture quality.

22:56 Posted in Future interfaces, Telepresence & virtual presence, Virtual worlds, Wearable & mobile

Apr 06, 2014

Measuring the effects through time of the influence of visuomotor and visuotactile synchronous stimulation on a virtual body ownership illusion

Perception. 2014;43(1):43-58

Authors: Kokkinara E, Slater M

Abstract. Previous studies have examined the experience of owning a virtual surrogate body or body part through specific combinations of cross-modal multisensory stimulation. Both visuomotor (VM) and visuotactile (VT) synchronous stimulation have been shown to be important for inducing a body ownership illusion, each tested separately or both in combination. In this study we compared the relative importance of these two cross-modal correlations, when both are provided in the same immersive virtual reality setup and the same experiment. We systematically manipulated VT and VM contingencies in order to assess their relative role and mutual interaction. Moreover, we present a new method for measuring the induced body ownership illusion through time, by recording reports of breaks in the illusion of ownership ('breaks') throughout the experimental phase. The balance of the evidence, from both questionnaires and analysis of the breaks, suggests that while VM synchronous stimulation contributes most to the attainment of the illusion, a disruption of either (through asynchronous stimulation) contributes equally to the probability of a break in the illusion.
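The abstract introduces breaks, reported throughout exposure, as a time-resolved measure of the illusion. The sketch below shows two simple summaries one could compute from such reports: the mean break rate per condition, and the fraction of participants who have not yet reported a break at given checkpoints. The `Trial` record layout, the condition labels, the function names, and the data are hypothetical; the paper's actual analysis is not described here and may differ.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence


@dataclass
class Trial:
    """One participant's exposure (hypothetical record layout)."""
    condition: str                                          # e.g. "VM sync, VT async"
    break_times: List[float] = field(default_factory=list)  # seconds at which a break was reported


def breaks_per_minute(trials: Sequence[Trial], duration_s: float) -> Dict[str, float]:
    """Mean number of reported breaks per minute of exposure, per condition."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    counts: Dict[str, List[int]] = {}
    for trial in trials:
        counts.setdefault(trial.condition, []).append(len(trial.break_times))
    minutes = duration_s / 60.0
    return {cond: sum(n) / len(n) / minutes for cond, n in counts.items()}


def proportion_unbroken(trials: Sequence[Trial], condition: str,
                        checkpoints_s: Sequence[float]) -> List[float]:
    """Fraction of participants in `condition` with no break up to each checkpoint."""
    group = [t for t in trials if t.condition == condition]
    if not group:
        raise ValueError(f"no trials for condition {condition!r}")
    return [
        sum(all(b > cp for b in t.break_times) for t in group) / len(group)
        for cp in checkpoints_s
    ]


if __name__ == "__main__":
    data = [
        Trial("sync", []), Trial("sync", [90.0]),
        Trial("async", [10.0, 50.0]), Trial("async", [30.0]),
    ]  # invented data, for illustration only
    print(breaks_per_minute(data, duration_s=120))       # {'sync': 0.25, 'async': 0.75}
    print(proportion_unbroken(data, "sync", [60, 120]))  # [1.0, 0.5]
```

The "unbroken" curve is a crude time-to-first-break summary; a proper analysis would more likely use survival or count models with participant-level effects.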

23:59 Posted in Research tools, Telepresence & virtual presence, Virtual worlds

Mar 02, 2014

Voluntary Out-of-Body Experience: An fMRI Study

Front Hum Neurosci. 2014;8:70

Authors: Smith AM, Messier C

Abstract

The present single-case study examined functional brain imaging patterns in a participant who reported being able to produce, at will, somatosensory sensations experienced as her body moving outside the boundaries of her physical body, all the while remaining aware of her unmoving physical body. We found that the brain functional changes associated with the reported extra-corporeal experience (ECE) differed from those observed in motor imagery. Activations were mainly left-sided and involved the left supplementary motor area and the supramarginal and posterior superior temporal gyri, the last two overlapping with the temporal parietal junction, which has been associated with out-of-body experiences. The cerebellum also showed activation consistent with the participant's report of the impression of movement during the ECE. There was also activity in the left middle and superior orbital frontal gyri, regions often associated with action monitoring. The results suggest that the ECE reported here represents an unusual type of kinesthetic imagery.

22:24 Posted in Telepresence & virtual presence

Humanlike robot hands controlled by brain activity arouse illusion of ownership in operators.

Sci Rep. 2013;3:2396

Authors: Alimardani M, Nishio S, Ishiguro H

Abstract

Operators of a pair of robotic hands report ownership of those hands when they hold an image of a grasp motion and watch the robot perform it. We present a novel body ownership illusion that is induced merely by watching and controlling a robot's motions through a brain-machine interface (BMI). In past studies, body ownership illusions were induced by the correlation of sensory inputs such as vision, touch and proprioception. However, in the presented illusion, none of these sensations except vision are integrated. Our results show that during BMI operation of robotic hands, the interaction between motor commands and visual feedback of the intended motions is adequate to incorporate the non-body limbs into one's own body. Our discussion focuses on the role of proprioceptive information in the mechanism of agency-driven illusions. We believe that our findings will contribute to the improvement of telepresence systems in which operators incorporate BMI-operated robots into their body representations.

22:17 Posted in Telepresence & virtual presence, Virtual worlds

Feb 09, 2014

The importance of synchrony and temporal order of visual and tactile input for illusory limb ownership experiences - an FMRI study applying virtual reality

PLoS One. 2014;9(1):e87013

Authors: Bekrater-Bodmann R, Foell J, Diers M, Kamping S, Rance M, Kirsch P, Trojan J, Fuchs X, Bach F, Cakmak HK, Maaß H, Flor H

Abstract. In the so-called rubber hand illusion, synchronous visuotactile stimulation of a visible rubber hand together with one's own hidden hand elicits ownership experiences for the artificial limb. Recently, advanced virtual reality setups were developed to induce a virtual hand illusion (VHI). Here, we present functional imaging data from a sample of 25 healthy participants using a new device to induce the VHI in the environment of a magnetic resonance imaging (MRI) system. In order to evaluate the neuronal robustness of the illusion, we varied the degree of synchrony between visual and tactile events in five steps: in two conditions, the tactile stimulation was applied prior to visual stimulation (asynchrony of -300 ms or -600 ms), whereas in another two conditions, the tactile stimulation was applied after visual stimulation (asynchrony of +300 ms or +600 ms). In the fifth condition, tactile and visual stimulation were applied synchronously. On a subjective level, the VHI was successfully induced by synchronous visuotactile stimulation. Asynchronies between visual and tactile input of ±300 ms did not significantly diminish the vividness of illusion, whereas asynchronies of ±600 ms did. The temporal order of visual and tactile stimulation had no effect on VHI vividness. Conjunction analyses of functional MRI data across all conditions revealed significant activation in bilateral ventral premotor cortex (PMv). Further characteristic activation patterns included bilateral activity in the motion-sensitive medial superior temporal area as well as in the bilateral Rolandic operculum, suggesting their involvement in the processing of bodily awareness through the integration of visual and tactile events. A comparison of the VHI-inducing conditions with asynchronous control conditions of ±600 ms yielded significant PMv activity only contralateral to the stimulation site. These results underline the temporal limits of the induction of limb ownership related to multisensory body-related input.
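To make the sign convention of the five conditions concrete, the sketch below derives tactile onset times from one shared visual timeline using tactile onset = visual onset + asynchrony, so -600 ms means the touch is delivered 600 ms before it is seen. The condition names, function names, and millisecond timeline are hypothetical; the abstract does not describe how the MRI-compatible device actually scheduled its stimuli.

```python
from typing import Dict, List

# Visuotactile asynchronies from the abstract, in milliseconds.
# Negative: tactile stimulus delivered BEFORE the visual touch; positive: AFTER it.
ASYNCHRONY_MS: Dict[str, int] = {
    "tactile_first_600": -600,
    "tactile_first_300": -300,
    "synchronous": 0,
    "visual_first_300": 300,
    "visual_first_600": 600,
}


def tactile_onsets(visual_onsets_ms: List[int], asynchrony_ms: int) -> List[int]:
    """Shift each visual touch onset by the condition's asynchrony."""
    onsets = [v + asynchrony_ms for v in visual_onsets_ms]
    if any(t < 0 for t in onsets):
        raise ValueError("visual onsets too early for this asynchrony")
    return onsets


def build_schedule(visual_onsets_ms: List[int]) -> Dict[str, List[int]]:
    """Tactile onset times for every condition, given one shared visual timeline."""
    return {name: tactile_onsets(visual_onsets_ms, offset)
            for name, offset in ASYNCHRONY_MS.items()}


if __name__ == "__main__":
    schedule = build_schedule([1000, 3000, 5000])  # hypothetical visual touch times
    for name, onsets in schedule.items():
        print(f"{name:>18}: {onsets}")
```

Keeping the visual timeline fixed and shifting only the touch makes the conditions differ in a single variable, which matches the abstract's description of varying synchrony in five steps.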

22:16 Posted in Telepresence & virtual presence, Virtual worlds

Feb 02, 2014

Activation of the human mirror neuron system during the observation of the manipulation of virtual tools in the absence of a visible effector limb

Neurosci Lett. 2013 Oct 25;555:220-4

Authors: Modroño C, Navarrete G, Rodríguez-Hernández AF, González-Mora JL

Abstract. This work explores the mirror neuron system activity produced by the observation of virtual tool manipulations in the absence of a visible effector limb. Functional MRI data were obtained from healthy right-handed participants who manipulated a virtual paddle in the context of a digital game and watched replays of their actions. The results show how action observation produced extended bilateral activations in the parietofrontal mirror neuron system. At the same time, three regions in the left hemisphere (in the primary motor and the primary somatosensory cortex, the supplementary motor area and the dorsolateral prefrontal cortex) showed a reduced BOLD, possibly related to the prevention of inappropriate motor execution. These results can be of interest for researchers and developers working in the field of action observation neurorehabilitation.

20:07 Posted in Telepresence & virtual presence, Virtual worlds