Jan 25, 2014

MemoryMirror: First Body-Controlled Smart Mirror

The Intel® Core™ i7-based MemoryMirror takes the clothes-shopping experience to a new level. Shoppers can try on multiple outfits and then use intuitive hand gestures to view and compare their previous choices virtually on the mirror itself. Users control all their data and can remain anonymous to the retailer if they choose. The MemoryMirror uses Intel integrated graphics technology to create avatars of the shopper wearing various clothing. These avatars can be shared with friends to solicit feedback, or viewed instantly to make an in-store purchase on the spot. Shoppers can also save their looks in a mobile app if they decide to purchase online at a later time.

21:53 Posted in Telepresence & virtual presence, Virtual worlds, Wearable & mobile

Jan 21, 2014

The Oculus Rift 'Crystal Cove' prototype is 2014's Best of CES winner

21:54 Posted in Telepresence & virtual presence, Virtual worlds

Dec 19, 2013

Tricking the brain with transformative virtual reality

18:40 Posted in Telepresence & virtual presence, Virtual worlds

Nov 20, 2013

inFORM

inFORM is a Dynamic Shape Display developed by the MIT Tangible Media Group. It can render 3D content physically, so users can interact with digital information in a tangible way.

inFORM can also interact with the physical world around it, for example, by moving objects on the table’s surface.

Remote participants in a video conference can be displayed physically, which creates a strong sense of presence and allows participants to interact physically at a distance.

Nov 16, 2013

Monkeys Control Avatar’s Arms Through Brain-Machine Interface

Via Medgadget

Researchers at Duke University have reported in the journal Science Translational Medicine that they were able to train monkeys to control two virtual limbs through a brain-computer interface (BCI). The rhesus monkeys initially used joysticks to become comfortable moving the avatar’s arms. Later, the brain-computer interfaces implanted in their brains were activated, allowing the monkeys to drive the avatar using only their minds. Two years ago the same team was able to train monkeys to control one arm, but the complexity of controlling two arms required the development of a new algorithm for reading and filtering the signals. Moreover, the monkeys' brains themselves showed great adaptation to training with the BCI, building new neural pathways that helped improve how the monkeys moved the virtual arms. As the authors note in the abstract, “These findings should help in the design of more sophisticated BMIs capable of enabling bimanual motor control in human patients.”

Here’s a video of one of the avatars being controlled to tap on the white balls:

15:56 Posted in Brain-computer interface, Telepresence & virtual presence, Virtual worlds

Aug 07, 2013

On Phenomenal Consciousness

A recent introductory talk by Frank C. Jackson on the problem that consciousness and qualia present to physicalism.

14:00 Posted in Research tools, Telepresence & virtual presence

Welcome to wonderland: the influence of the size and shape of a virtual hand on the perceived size and shape of virtual objects

PLoS One. 2013;8(7):e68594

Authors: Linkenauger SA, Leyrer M, Bülthoff HH, Mohler BJ

The notion of body-based scaling suggests that our body and its action capabilities are used to scale the spatial layout of the environment. Here we present four studies supporting this perspective by showing that the hand acts as a metric which individuals use to scale the apparent sizes of objects in the environment. However, to test this, one must be able to manipulate the size and/or dimensions of the perceiver's hand, which is difficult in the real world because hand dimensions cannot easily be altered. To overcome this limitation, we used virtual reality to manipulate the dimensions of participants' fully tracked virtual hands and investigate their influence on the perceived size and shape of virtual objects. In a series of experiments, using several measures, we show that individuals' estimations of the sizes of virtual objects differ depending on the size of their virtual hand in the direction consistent with the body-based scaling hypothesis. Additionally, we found that these effects were specific to participants' virtual hands rather than another avatar's hands or a salient familiar-sized object. While these studies provide support for a body-based approach to the scaling of the spatial layout, they also demonstrate the influence of virtual bodies on perception of virtual environments.

13:56 Posted in Research tools, Telepresence & virtual presence, Virtual worlds

Using avatars to model weight loss behaviors: participant attitudes and technology development.

J Diabetes Sci Technol. 2013;7(4):1057-65

Authors: Napolitano MA, Hayes S, Russo G, Muresu D, Giordano A, Foster GD

BACKGROUND: Virtual reality and other avatar-based technologies are potential methods for demonstrating and modeling weight loss behaviors. This study examined avatar-based technology as a tool for modeling weight loss behaviors.

METHODS: This study consisted of two phases: (1) an online survey to obtain feedback about using avatars for modeling weight loss behaviors and (2) technology development and usability testing to create an avatar-based technology program for modeling weight loss behaviors.

RESULTS: Results of phase 1 (n = 128) revealed that interest was high, with 88.3% stating that they would participate in a program that used an avatar to help practice weight loss skills in a virtual environment. In phase 2, avatars and modules to model weight loss skills were developed. Eight women were recruited to participate in a 4-week usability test, with 100% reporting they would recommend the program and that it influenced their diet/exercise behavior. Most women (87.5%) indicated that the virtual models were helpful. After 4 weeks, average weight loss was 1.6 kg (standard deviation = 1.7).

CONCLUSIONS: This investigation revealed a high level of interest in an avatar-based program, with formative work indicating promise. Given the high costs associated with in vivo exposure and practice, this study demonstrates the potential use of avatar-based technology as a tool for modeling weight loss behaviors.

13:52 Posted in Cybertherapy, Telepresence & virtual presence, Virtual worlds

What Color is My Arm? Changes in Skin Color of an Embodied Virtual Arm Modulates Pain Threshold

Front Hum Neurosci. 2013;7:438

Authors: Martini M, Perez-Marcos D, Sanchez-Vives MV

It has been demonstrated that visual inputs can modulate pain. However, the influence of skin color on pain perception is unknown. Red skin is associated with inflamed, hot and more sensitive skin, while blue is associated with cyanotic, cold skin. We aimed to test whether the color of the skin would alter the heat pain threshold. To this end, we used an immersive virtual environment where we induced embodiment of a virtual arm that was co-located with the real one and seen from a first-person perspective. Virtual reality allowed us to dynamically modify the color of the skin of the virtual arm. To test pain threshold, increasing ramps of heat stimulation were applied to the participants' arm concomitantly with the gradual intensification of different colors on the embodied avatar's arm. We found that a reddened arm significantly decreased the pain threshold compared with normal and bluish skin. This effect was specific to seeing red on the arm: seeing red in a spot outside the arm did not decrease the pain threshold. These results demonstrate an influence of skin color on pain perception. This top-down modulation of pain through visual input suggests a potential use of embodied virtual bodies for pain therapy.
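The abstract measures heat pain threshold with increasing ramps of heat but does not give the ramp parameters. As a rough illustration of how a ramp-based threshold is commonly computed (a method-of-limits approach), the sketch below converts the moment a participant reports pain into a temperature and averages across ramps. The function names, the 32 °C baseline, the 1 °C/s rate and the stop times are all hypothetical, and the study's actual procedure may differ.

```python
from statistics import mean
from typing import Sequence


def ramp_temperature(baseline_c: float, rate_c_per_s: float, elapsed_s: float) -> float:
    """Temperature (deg C) of a linear heat ramp after elapsed_s seconds."""
    return baseline_c + rate_c_per_s * elapsed_s


def heat_pain_threshold(baseline_c: float, rate_c_per_s: float,
                        stop_times_s: Sequence[float]) -> float:
    """Mean temperature at which the participant stopped each ramp (first report of pain)."""
    if len(stop_times_s) == 0:
        raise ValueError("need at least one ramp")
    return mean(ramp_temperature(baseline_c, rate_c_per_s, t) for t in stop_times_s)


# Hypothetical values for illustration only (not data from the study):
# ramps start at 32 deg C, rise at 1 deg C/s, and were stopped after 12, 13 and 14 s.
print(heat_pain_threshold(32.0, 1.0, [12.0, 13.0, 14.0]))  # prints 45.0
```

Under this kind of scheme, a lower threshold in the red-arm condition would show up as ramps being stopped earlier, at lower temperatures.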

13:49 Posted in Biofeedback & neurofeedback, Research tools, Telepresence & virtual presence, Virtual worlds

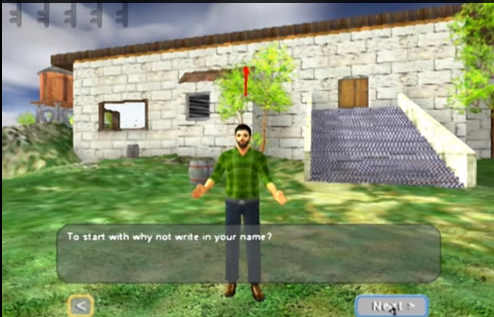

The Computer Game That Helps Therapists Chat to Adolescents With Mental Health Problems

Adolescents with mental health problems are particularly hard for therapists to engage. But a new computer game is providing a healthy conduit for effective communication between them.

Read the full story on MIT Technology Review

13:40 Posted in Cybertherapy, Telepresence & virtual presence, Virtual worlds

Detecting delay in visual feedback of an action as a monitor of self recognition

Exp Brain Res. 2012 Oct;222(4):389-97

Authors: Hoover AE, Harris LR

Abstract. How do we distinguish "self" from "other"? The correlation between willing an action and seeing it occur is an important cue. We exploited the fact that this correlation needs to occur within a restricted temporal window in order to obtain a quantitative assessment of when a body part is identified as "self". We measured the threshold and sensitivity (d') for detecting a delay between movements of the finger (of both the dominant and non-dominant hands) and visual feedback as seen from four visual perspectives (the natural view, and mirror-reversed and/or inverted views). Each trial consisted of one presentation with minimum delay and another with a delay of between 33 and 150 ms. Participants indicated which presentation contained the delayed view. We varied the amount of efference copy available for this task by comparing performances for discrete movements and continuous movements. Discrete movements are associated with a stronger efference copy. Sensitivity to detect asynchrony between visual and proprioceptive information was significantly higher when movements were viewed from a "plausible" self perspective compared with when the view was reversed or inverted. Further, we found differences in performance between dominant and non-dominant hand finger movements across the continuous and single movements. Performance varied with the viewpoint from which the visual feedback was presented and with the efferent component, such that optimal performance was obtained when the presentation was in the normal natural orientation and clear efferent information was available. Variations in sensitivity to visual/non-visual temporal incongruence with the viewpoint in which a movement is seen may help determine the arrangement of the underlying visual representation of the body.
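The abstract reports sensitivity as d' but does not state how it was computed. For a two-interval forced-choice design like the one described (one minimally delayed and one delayed presentation per trial, with the participant picking the delayed one), a standard signal detection conversion is d' = √2 · z(Pc), where Pc is the proportion correct and z is the inverse standard normal CDF, assuming an unbiased observer. The sketch below illustrates that conversion; the function name and the trial counts are hypothetical, and it is not necessarily the computation the authors used.

```python
import math
from statistics import NormalDist


def dprime_2ifc(n_correct: int, n_trials: int) -> float:
    """Sensitivity d' for a two-interval forced-choice (2IFC) task.

    Assumes an unbiased observer, for which d' = sqrt(2) * z(Pc),
    where Pc is the proportion of correct interval choices and z is
    the inverse of the standard normal CDF.
    """
    if n_trials <= 0 or not 0 <= n_correct <= n_trials:
        raise ValueError("need 0 <= n_correct <= n_trials and n_trials > 0")
    pc = n_correct / n_trials
    # Pc of exactly 0 or 1 gives an infinite z; clamp with a common 1/(2N) correction.
    pc = min(max(pc, 1 / (2 * n_trials)), 1 - 1 / (2 * n_trials))
    return math.sqrt(2) * NormalDist().inv_cdf(pc)


# Hypothetical counts for illustration only (not data from the study):
# 30 correct choices out of 40 trials at one delay.
print(round(dprime_2ifc(30, 40), 3))  # prints 0.954
```

Chance performance (Pc = 0.5) maps to d' = 0, so a higher d' for the natural view than for reversed or inverted views corresponds to the sensitivity difference the abstract describes.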

13:18 Posted in Research tools, Telepresence & virtual presence

Jul 23, 2013

Illusory ownership of a virtual child body causes overestimation of object sizes and implicit attitude changes

Proc Natl Acad Sci USA. 2013 Jul 15;

Authors: Banakou D, Groten R, Slater M

Abstract. An illusory sensation of ownership over a surrogate limb or whole body can be induced through specific forms of multisensory stimulation, such as synchronous visuotactile tapping on the hidden real and visible rubber hand in the rubber hand illusion. Such methods have been used to induce ownership over a manikin and a virtual body that substitute the real body, as seen from first-person perspective, through a head-mounted display. However, the perceptual and behavioral consequences of such transformed body ownership have hardly been explored. In Exp. 1, immersive virtual reality was used to embody 30 adults as a 4-y-old child (condition C), and as an adult body scaled to the same height as the child (condition A), experienced from the first-person perspective, and with virtual and real body movements synchronized. The result was a strong body-ownership illusion equally for C and A. Moreover, there was an overestimation of the sizes of objects compared with a nonembodied baseline, which was significantly greater for C compared with A. An implicit association test showed that C resulted in significantly faster reaction times for the classification of self with child-like compared with adult-like attributes. Exp. 2 with an additional 16 participants extinguished the ownership illusion by using visuomotor asynchrony, with all else equal. The size-estimation and implicit association test differences between C and A were also extinguished. We conclude that there are perceptual and probably behavioral correlates of body-ownership illusions that occur as a function of the type of body in which embodiment occurs.

22:28 Posted in Research tools, Telepresence & virtual presence, Virtual worlds

Jul 18, 2013

US Army avatar role-play Experiment #3 now open for public registration

Military Open Simulator Enterprise Strategy (MOSES) is secure virtual world software designed to evaluate the ability of OpenSimulator to provide independent access to a persistent virtual world. MOSES is a research project of the US Army Simulation and Training Technology Center (STTC). STTC’s Virtual World Strategic Applications team uses OpenSimulator to add capability and flexibility to virtual training scenarios.

18:12 Posted in Research tools, Telepresence & virtual presence

Nov 02, 2012

Networked Flow: Towards an understanding of creative networks

Gaggioli A., Riva G., Milani L., Mazzoni E.

19:46 Posted in Creativity and computers, Social Media, Telepresence & virtual presence

Aug 04, 2012

Extending body space in immersive virtual reality: a very long arm illusion

PLoS One. 2012;7(7):e40867

Authors: Kilteni K, Normand JM, Sanchez-Vives MV, Slater M

Abstract. Recent studies have shown that a fake body part can be incorporated into human body representation through synchronous multisensory stimulation on the fake and corresponding real body part, the most famous example being the Rubber Hand Illusion. However, the extent to which gross asymmetries in the fake body can be assimilated remains unknown. Participants experienced, through a head-tracked stereo head-mounted display, a virtual body coincident with their real body. There were 5 conditions in a between-groups experiment, with 10 participants per condition. In all conditions there was visuo-motor congruence between the real and virtual dominant arm. In an Incongruent condition (I), where the virtual arm length was equal to the real length, there was visuo-tactile incongruence. In four Congruent conditions there was visuo-tactile congruence, but the virtual arm lengths were either equal to (C1), double (C2), triple (C3) or quadruple (C4) the real ones. Questionnaire scores and defensive withdrawal movements in response to a threat showed that the overall level of ownership was high in both C1 and I, and there was no significant difference between these conditions. Additionally, participants experienced ownership over the virtual arm up to three times the length of the real one, and less strongly at four times the length. The illusion did decline, however, with the length of the virtual arm. In the C2-C4 conditions, although a measure of proprioceptive drift positively correlated with virtual arm length, there was no correlation between the drift and ownership of the virtual arm, suggesting different underlying mechanisms for ownership and drift. Overall, these findings extend and enrich previous results showing that multisensory and sensorimotor information can reconstruct our perception of the body shape, size and symmetry even when this is not consistent with normal body proportions.

20:03 Posted in Telepresence & virtual presence, Virtual worlds

New HMD "Oculus Rift" launched on Kickstarter

The Oculus Rift, a new HMD which promises to take 3D gaming to the next level, was launched on Kickstarter last week. The funding goal is $250,000.

“With an incredibly wide field of view, high resolution display, and ultra-low latency head tracking, the Rift provides a truly immersive experience that allows you to step inside your favorite game and explore new worlds like never before.”

Technical specs of the Dev Kit (subject to change)

Head tracking: 6 degrees of freedom (DOF), ultra-low latency

Field of view: 110 degrees diagonal / 90 degrees horizontal

Resolution: 1280×800 (640×800 per eye)

Inputs: DVI/HDMI and USB

Platforms: PC and mobile

Weight: ~0.22 kilograms
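The spec lists a single 1280×800 panel shared by both eyes, giving 640×800 per eye. A minimal sketch of that side-by-side split follows, assuming the left half of the panel is routed to the left eye and the right half to the right eye. The `Viewport` type and `split_side_by_side` function are hypothetical illustrations, not part of the Oculus SDK.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Viewport:
    """Pixel rectangle on the display panel (origin at the top-left corner)."""
    x: int
    y: int
    width: int
    height: int


def split_side_by_side(panel_width: int, panel_height: int) -> Tuple[Viewport, Viewport]:
    """Split one panel into equal left-eye and right-eye halves."""
    if panel_width % 2:
        raise ValueError("panel width must be even to split evenly")
    half = panel_width // 2
    left_eye = Viewport(0, 0, half, panel_height)
    right_eye = Viewport(half, 0, half, panel_height)
    return left_eye, right_eye


left_eye, right_eye = split_side_by_side(1280, 800)
print(left_eye)   # prints Viewport(x=0, y=0, width=640, height=800)
print(right_eye)  # prints Viewport(x=640, y=0, width=640, height=800)
```

Each eye's scene would then be rendered into its own half, which is why the per-eye resolution is half the panel's horizontal resolution.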

The developer kits acquired through Kickstarter will include access to the Oculus Developer Center, a community for Oculus developers. The Oculus Rift SDK will include code, samples, and documentation to facilitate integration with any new or existing games, initially on PCs and mobile devices, with consoles to follow.

Oculus says it will be showcasing the Rift at a number of upcoming tradeshows, including QuakeCon, SIGGRAPH, GDC Europe, gamescom and Unite.

May 07, 2012

Mind-controlled robot allows a quadriplegic patient to move virtually in space

Researchers at the Swiss Federal Institute of Technology in Lausanne (EPFL) have successfully demonstrated a robot controlled by the mind of a partially quadriplegic patient in a hospital 62 miles (about 100 km) away. The EPFL brain-computer interface system does not require invasive neural implants in the brain, since it is based on a special EEG cap fitted with electrodes that record the patient’s neural signals. The patient's task is to imagine moving his paralyzed fingers; the BCI system then translates this input into commands for the robot.

16:06 Posted in AI & robotics, Brain-computer interface, Telepresence & virtual presence

Dec 19, 2011

‘Brainlink’ lets you remotely control toy robots and other gadgets

BirdBrain Technologies, a spin-off of Carnegie Mellon University, has developed a device called Brainlink that allows users to remotely control robots and other gadgets (including TVs, cable boxes, and DVD players) with an Android-based smartphone. This is achieved through a small triangular controller that attaches to the gadget and has a Bluetooth range of 30 feet.

19:44 Posted in Future interfaces, Telepresence & virtual presence, Wearable & mobile

Jul 22, 2011

Mirroring avatars: dissociation of action and intention in human motor resonance

17:53 Posted in Telepresence & virtual presence, Virtual worlds

Nov 09, 2010

3D holographic technology: the future of telemedicine?

Scientists at the University of Arizona in Tucson have developed a new form of holographic telepresence that projects a three-dimensional, full-color, moving image without requiring viewers to wear 3-D glasses. While the technology could be used in TV or movies, the report notes, it could also be used in telemedicine and mapping, as well as in everyday corporate meetings. The image is recorded using an array of ordinary cameras, each viewing the object from a different angle. Fast-pulsed laser beams are then used to create holographic, or three-dimensional, pixels. Such technology could be a “game changer” in some industries, including telemedicine, said lead researcher Nasser Peyghambarian. “Holographic telepresence means we can record a three-dimensional image in one location and show it in another location, in real-time, anywhere in the world,” he added. “Surgeons at different locations around the world can observe in 3-D, in real time, and participate in the surgical procedure.”

16:45 Posted in Cybertherapy, Telepresence & virtual presence, Virtual worlds