Nov 17, 2015

The new era of Computational Biomedicine

In recent years, the increasing convergence between nanotechnology, biomedicine and health informatics has generated massive amounts of data, which are changing the way healthcare research, development, and applications are done.

Clinical data integrate physiological data, enabling detailed descriptions of various healthy and diseased states, progression, and responses to therapies. Furthermore, mobile and home-based devices monitor vital signs and activities in real-time and communicate with personal health record services, personal computers, smartphones, caregivers, and health care professionals.

However, our ability to analyze and interpret multiple sources of data lags far behind today’s data generation and storage capacity. Consequently, mathematical and computational models are increasingly used to help interpret massive biomedical data produced by high-throughput genomics and proteomics projects. Advanced applications of computer models that enable the simulation of biological processes are used to generate hypotheses and plan experiments.

The emerging discipline of computational biomedicine is concerned with the application of computer-based techniques, particularly modelling and simulation, to human health. For almost ten years, this vision has been at the core of a European-funded programme called “Virtual Physiological Human”. The goal of this initiative is to develop next-generation computer technologies to integrate all the information available for each patient, and to generate computer models capable of predicting how the health of that patient will evolve under certain conditions.

In particular, this programme is expected, over the next decades, to transform the study and practice of healthcare, moving it towards the priorities known as the ‘4Ps’: predictive, preventative, personalized and participatory medicine. Future developments of computational biomedicine may make it possible to develop not just qualitative but truly quantitative analytical tools, that is, models, on the basis of the data available through the system just described. Information not available today (large cohort studies nowadays include thousands of individuals, whereas here we are talking about millions of records) will be available for free. Large cohorts of data will be available for online consultation and download. Integrative and multi-scale models will benefit from the availability of this large amount of data by using parameter estimation in a statistically meaningful manner. At the same time, distribution maps of important parameters will be generated and continuously updated. Through a dedicated mechanism, users will be able to express their interest in one model or another, so as to set up a consensus model selection process. Moreover, models should be open for consultation and annotation. Flexible and user-friendly services have many potential positive outcomes. Some examples include simulation of case studies, testing and validation of specific assumptions about the nature of related diseases, understanding the world-wide distribution of these parameters and disease patterns, the ability to hypothesize intervention strategies in cases such as the spreading of an infectious disease, and advanced risk modeling.

11:25 Posted in Blue sky, ICT and complexity, Physiological Computing, Positive Technology events

Oct 06, 2014

First direct brain-to-brain communication between human subjects

Via KurzweilAI.net

An international team of neuroscientists and robotics engineers have demonstrated the first direct remote brain-to-brain communication between two humans located 5,000 miles away from each other and communicating via the Internet, as reported in a paper recently published in PLOS ONE (open access).

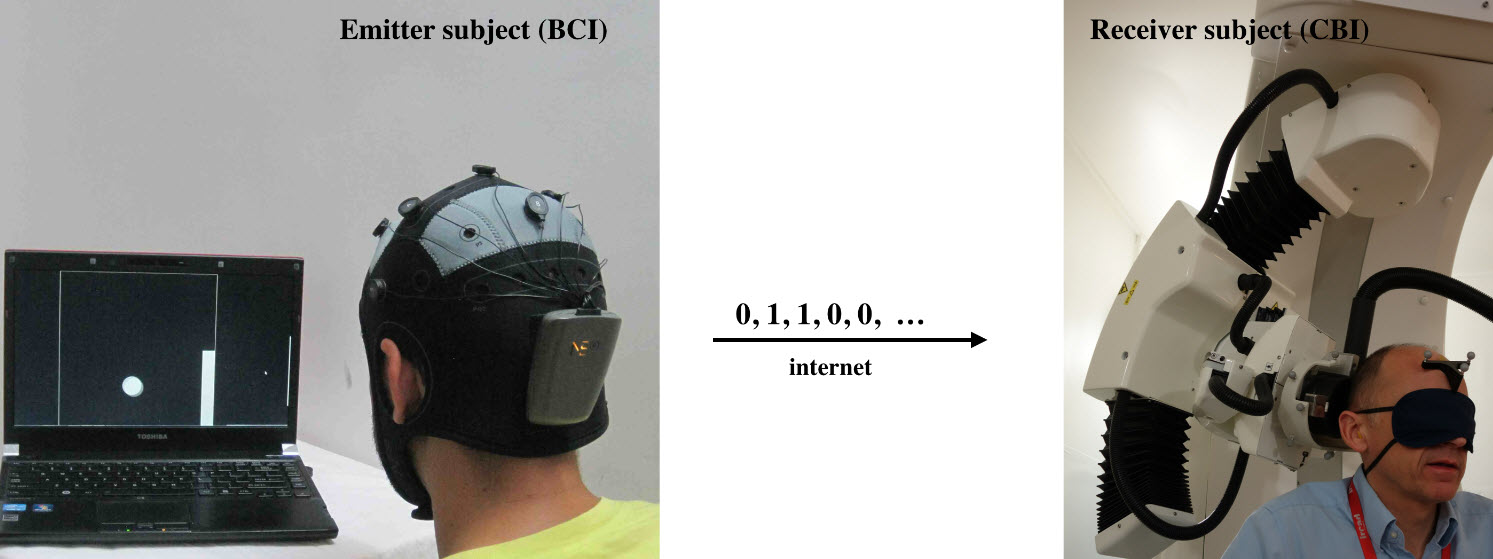

Emitter and receiver subjects with non-invasive devices supporting, respectively, a brain-computer interface (BCI), based on EEG changes, driven by motor imagery (left) and a computer-brain interface (CBI) based on the reception of phosphenes elicited by neuro-navigated TMS (right) (credit: Carles Grau et al./PLoS ONE)

In India, researchers encoded two words (“hola” and “ciao”) as binary strings and presented them as a series of cues on a computer monitor. They recorded the subject’s EEG signals as the subject was instructed to think about moving his feet (binary 0) or hands (binary 1). They then sent the recorded series of binary values in an email message to researchers in France, 5,000 miles away.

There, the binary strings were converted into a series of transcranial magnetic stimulation (TMS) pulses applied to a hotspot location in the right visual occipital cortex that either produced a phosphene (perceived flash of light) or not.
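The encoding and decoding steps described above can be sketched in a few lines. This is only an illustration: the post does not say which cipher the researchers used, so the 5-bit alphabet code below (a=0 ... z=25) is an assumption, as are the function names. The feet/hands mapping follows the post; mapping bit 1 to "phosphene perceived" on the receiving side is also an assumption.

```python
# Hypothetical 5-bit code: a=0 ... z=25. The actual cipher used in the
# experiment is not given in the post, so this mapping is an assumption.
BITS_PER_LETTER = 5


def encode_word(word: str) -> str:
    """Turn a word made of the letters a-z into a string of '0'/'1' characters."""
    chunks = []
    for ch in word.lower():
        index = ord(ch) - ord("a")
        if not 0 <= index < 26:
            raise ValueError(f"unsupported character: {ch!r}")
        chunks.append(format(index, f"0{BITS_PER_LETTER}b"))
    return "".join(chunks)


def decode_bits(bits: str) -> str:
    """Invert encode_word: read the bit string back five bits at a time."""
    if len(bits) % BITS_PER_LETTER != 0:
        raise ValueError("bit string length must be a multiple of 5")
    letters = []
    for start in range(0, len(bits), BITS_PER_LETTER):
        index = int(bits[start:start + BITS_PER_LETTER], 2)
        if index >= 26:
            raise ValueError(f"invalid letter code: {index}")
        letters.append(chr(ord("a") + index))
    return "".join(letters)


def imagery_cues(bits: str) -> list[str]:
    """Emitter side: feet imagery codes bit 0, hands imagery codes bit 1."""
    return ["hands" if bit == "1" else "feet" for bit in bits]


def phosphene_plan(bits: str) -> list[bool]:
    """Receiver side (assumed mapping): True means a pulse meant to elicit a phosphene."""
    return [bit == "1" for bit in bits]
```

With this assumed code, `encode_word("hola")` yields a 20-bit string, `imagery_cues` turns it into the sequence of cues shown to the emitter, and `decode_bits` recovers the word from the bits reported by the receiver.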

“We wanted to find out if one could communicate directly between two people by reading out the brain activity from one person and injecting brain activity into the second person, and do so across great physical distances by leveraging existing communication pathways,” explains coauthor Alvaro Pascual-Leone, MD, PhD, Director of the Berenson-Allen Center for Noninvasive Brain Stimulation at Beth Israel Deaconess Medical Center (BIDMC) and Professor of Neurology at Harvard Medical School.

A team of researchers from Starlab Barcelona, Spain and Axilum Robotics, Strasbourg, France conducted the experiment. A second similar experiment was conducted between individuals in Spain and France.

“We believe these experiments represent an important first step in exploring the feasibility of complementing or bypassing traditional language-based or other motor/PNS mediated means in interpersonal communication,” the researchers say in the paper.

“Although certainly limited in nature (e.g., the bit rates achieved in our experiments were modest even by current BCI (brain-computer interface) standards, mostly due to the dynamics of the precise CBI (computer-brain interface) implementation), these initial results suggest new research directions, including the non-invasive direct transmission of emotions and feelings or the possibility of sense synthesis in humans, that is, the direct interface of arbitrary sensors with the human brain using brain stimulation, as previously demonstrated in animals with invasive methods.

Brain-to-brain (B2B) communication system overview. On the left, the BCI subsystem is shown schematically, including electrodes over the motor cortex and the EEG amplifier/transmitter wireless box in the cap. Motor imagery of the feet codes the bit value 0, of the hands codes bit value 1. On the right, the CBI system is illustrated, highlighting the role of coil orientation for encoding the two bit values. Communication between the BCI and CBI components is mediated by the Internet. (Credit: Carles Grau et al./PLoS ONE)

“The proposed technology could be extended to support a bi-directional dialogue between two or more mind/brains (namely, by the integration of EEG and TMS systems in each subject). In addition, we speculate that future research could explore the use of closed mind-loops in which information associated to voluntary activity from a brain area or network is captured and, after adequate external processing, used to control other brain elements in the same subject. This approach could lead to conscious synthetically mediated modulation of phenomena best detected subjectively by the subject, including emotions, pain and psychotic, depressive or obsessive-compulsive thoughts.

“Finally, we anticipate that computers in the not-so-distant future will interact directly with the human brain in a fluent manner, supporting both computer- and brain-to-brain communication routinely. The widespread use of human brain-to-brain technologically mediated communication will create novel possibilities for human interrelation with broad social implications that will require new ethical and legislative responses.”

This work was partly supported by the EU FP7 FET Open HIVE project, the Starlab Kolmogorov project, and the Neurology Department of the Hospital de Bellvitge.

Apr 29, 2014

Evidence, Enactment, Engagement: The three 'nEEEds' of mental mHealth

In my experience, citizens and public stakeholders are not well informed or educated about mHealth. For example, to many people the idea of using phones to deliver mental health programs still sounds weird.

Yet the number of mental health apps is rapidly growing: a recent survey identified 200 unique mobile tools specifically associated with behavioral health.

These applications now cover a wide array of clinical areas including developmental disorders, cognitive disorders, substance-related disorders, as well as psychotic and mood disorders.

I think that the increasing "applification" of mental health is explained by three potential benefits of this approach:

- First, mobile apps can be integrated in different stages of treatment: from promoting awareness of disease, to increasing treatment compliance, to preventing relapse.

- Furthermore, mobile tools can be used to monitor behavioural and psychological symptoms in everyday life: self-reported data can be complemented with readings from inbuilt or wearable sensors to fine-tune treatment according to the individual patient’s needs.

- Last - but not least - mobile applications can help patients to stay on top of current research, facilitating access to evidence-based care. For example, in the EC-funded INTERSTRESS project, we investigated these potentials in the assessment and management of psychological stress, by developing different mobile applications (including the award-winning Positive Technology app) for helping people to monitor stress levels “on the go” and learn new relaxation skills.

In short, I believe that mental mHealth has the potential to provide the right care, at the right time, at the right place. However, from my personal experience I have identified three key challenges that must be faced in order to realize the potential of this approach.

I call them the three "nEEEds" of mental mHealth: evidence, engagement, enactment.

- Evidence refers to the need for clinical proof of efficacy or effectiveness, provided through randomised trials.

- Engagement is related to the need to ensure usability and accessibility for mobile interfaces: this goes beyond reducing use errors that may generate risks of psychological discomfort for the patient, to include the creation of a compelling and engaging user experience.

- Finally, enactment concerns the need for appropriate regulations, enacted by competent authorities, to catch up with mHealth technology development.

As a beneficiary of EC-funded grants myself, I recognize that the R&D investments in mHealth made by the EC across FP6 and FP7 have helped to position Europe at the forefront of this revolution. And the return on this investment could be strong: it has been predicted that full exploitation of mHealth solutions could lead to nearly 100 billion EUR in savings in total annual EU healthcare spending in 2017.

I believe that a progressively larger portion of these savings may be generated by the adoption of mobile solutions in the mental health sector: in fact, in the WHO European Region, mental ill health accounts for almost 20% of the burden of disease.

For this prediction to be fulfilled, however, many barriers must be overcome: the three "nEEEds" of mental mHealth are probably only the start of the list. Hopefully, the Green Paper consultation will help to identify further opportunities and concerns facing mental mHealth, in order to ensure a successful implementation of this approach.

23:51 Posted in Cybertherapy, Physiological Computing, Wearable & mobile

Apr 06, 2014

Glass brain flythrough: beyond neurofeedback

Via Neurogadget

Researchers have developed a new way to explore the human brain in virtual reality. The system, called Glass Brain, was developed by Philip Rosedale, creator of the famous game Second Life, and Adam Gazzaley, a neuroscientist at the University of California San Francisco. It combines brain scanning, brain recording and virtual reality to allow a user to journey through a person’s brain in real-time.

Read the full story on Neurogadget

23:52 Posted in Biofeedback & neurofeedback, Blue sky, Information visualization, Physiological Computing, Virtual worlds

Stick-on electronic patches for health monitoring

Researchers at John A. Rogers’ lab at the University of Illinois, Urbana-Champaign have incorporated off-the-shelf chips into flexible electronic patches to allow for high-quality ECG and EEG monitoring.


23:45 Posted in Physiological Computing, Research tools, Self-Tracking, Wearable & mobile

Dec 24, 2013

NeuroOn mask improves sleep and helps manage jet lag

Via Medgadget

A group of Polish engineers is working on a smart sleeping mask that they hope will allow people to get more out of their resting time, as well as allow for unusual sleeping schedules that would particularly benefit those who are often on-call. The NeuroOn mask will have an embedded EEG for brain wave monitoring, EMG for detecting muscle motion on the face, and sensors that can track whether your pupils are moving and whether you are going through REM sleep. The team is currently raising money on Kickstarter, where you can pre-order your own NeuroOn once it’s developed into a final product.

Dec 02, 2013

A genetically engineered weight-loss implant

Via KurzweilAI

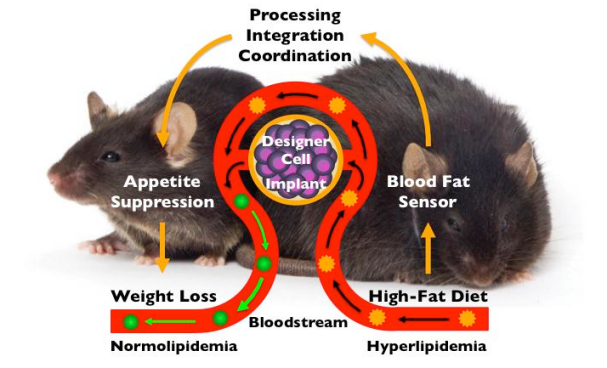

ETH-Zurich biotechnologists have constructed an implantable genetic regulatory circuit that monitors blood-fat levels. In response to excessive levels, it produces a messenger substance that signals satiety (fullness) to the body. Tests on obese mice revealed that this helps them lose weight.

Genetically modified cells implanted in the body monitor the blood-fat level. If it is too high, they produce a satiety hormone. The animal stops eating and loses weight. (Credit: Martin Fussenegger / ETH Zurich / Jackson Lab)

23:25 Posted in Neurotechnology & neuroinformatics, Physiological Computing

Nov 20, 2013

Call for Papers on Physiological Computing for Intelligent Adaptation: A Special Issue of Interacting with Computers

Special issue editors:

• Hugo Gamboa (Universidade Nova de Lisboa, Portugal)

• Hugo Plácido da Silva (IT – Institute of Telecommunications, Portugal)

• Kiel Gilleade (Liverpool John Moores University, United Kingdom)

• Sergi Bermúdez i Badia (Universidade da Madeira, Portugal)

• Stephen Fairclough (Liverpool John Moores University, United Kingdom)

Contact:

s.fairclough@ljmu.ac.uk

Deadline for Submissions:

30 June 2014

Physiological data provide a wealth of information about the behavioural state of the user. These data can provide important contextual information by allowing the system to draw inferences with respect to the affective, cognitive and physical state of a person. In a computerised system this information can be used as an input control to drive system adaptation. For example, a videogame can use psychophysiological inferences of the player’s level of mental workload during play to adjust game difficulty in real-time.

A basic physiological computer system will simply reflect changes in the physiological data in its system adaptations. More advanced systems would use their knowledge of the individual user and the context in which changes are occurring in order to “intelligently” adapt the system at the most appropriate time with the most appropriate intervention.
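The "basic" case described above, a system that simply reflects physiological changes in its adaptations, can be sketched as a small control loop for the videogame example. Everything here is hypothetical: the class name, the workload index scaled to the range 0 to 1, and the two thresholds are illustrative assumptions, not part of any particular system.

```python
from dataclasses import dataclass


@dataclass
class DifficultyController:
    """Basic adaptation: map an inferred workload index to game difficulty.

    The workload scale (0.0 to 1.0) and the thresholds are assumptions
    chosen for illustration only.
    """

    level: int = 5
    low: float = 0.3    # below this, the player is assumed under-challenged
    high: float = 0.7   # above this, the player is assumed overloaded
    min_level: int = 1
    max_level: int = 10

    def update(self, workload: float) -> int:
        """Adjust difficulty by one step and return the new level."""
        if workload > self.high:
            self.level = max(self.min_level, self.level - 1)
        elif workload < self.low:
            self.level = min(self.max_level, self.level + 1)
        # Between the two thresholds the level is left unchanged.
        return self.level
```

A more "intelligent" system in the sense of the call would add knowledge of the individual user and of the context on top of a loop like this one, for example by personalising the thresholds or by delaying an adaptation until a suitable moment in play.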

In this special issue we call for the submission of cutting edge research work relating to the creation, facilitation of and issues involved in intelligent adaptive physiological computing systems (PCS). The focus of this special issue is on Physiological Computing for Intelligent Adaptation, and within this the scope includes but is not limited to:

• Applications of intelligent adaptation in PCS

• Mobile and embedded systems for intelligent adaptation in PCS

• Adaptive user interfaces driven by physiological computing

• Assistive technologies mediated by physiological computing

• Pervasive technologies for physiological computing

• Affective interfaces

• Context aware interfaces

• The user experience of intelligent adaptive PCS

• Ethics of intelligent adaptation in PCS

All contributions will be rigorously peer reviewed to the usual exacting standards of IwC. Further information, including submission procedures and advice on formatting and preparing your manuscript, can be found at: http://iwc.oxfordjournals.org/

11:15 Posted in Call for papers, Physiological Computing, Positive Technology events

Oct 31, 2013

Signal Processing Turns Regular Headphones Into Pulse Sensors

Via Medgadget

A new signal processing algorithm that enables any pair of earphones to detect your pulse was demonstrated recently at the Healthcare Device Exhibition 2013 in Yokohama, Japan. The technology comes from a joint effort of Bifrostec (Tokyo, Japan) and the Kaiteki Institute. It is built on the premise that the eardrum creates pressure waves with each heartbeat, which can be detected in a perfectly enclosed space. However, typically, earphones do not create a perfect seal, which is what gives everyone in a packed elevator the privilege to listen to that guy’s tunes. The new algorithm allows the software to process the pressure signal despite the lack of a perfect seal to determine a user’s pulse.
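The post does not describe how the Bifrostec/Kaiteki algorithm works, so the following is not that algorithm. It is only a minimal sketch of one generic way to estimate a pulse rate from a periodic pressure-like signal, using autocorrelation over a plausible range of heart rates. The function name, the sampling rate and the 40 to 200 bpm search range are assumptions.

```python
import math


def estimate_pulse_bpm(samples, sample_rate_hz, min_bpm=40, max_bpm=200):
    """Estimate pulse rate as the lag with the strongest autocorrelation.

    Generic illustration only; the search range in beats per minute is an
    assumed plausible interval for human heart rates.
    """
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]

    # Convert the bpm search range into a range of lags (in samples).
    min_lag = max(1, int(sample_rate_hz * 60 / max_bpm))
    max_lag = min(int(sample_rate_hz * 60 / min_bpm), n - 1)

    best_lag, best_score = None, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        terms = n - lag
        # Average product of the signal with a copy of itself shifted by `lag`.
        score = sum(centered[i] * centered[i + lag] for i in range(terms)) / terms
        if score > best_score:
            best_lag, best_score = lag, score

    if best_lag is None:
        raise ValueError("signal too short for the requested bpm range")
    return 60.0 * sample_rate_hz / best_lag


def synthetic_pulse(bpm, sample_rate_hz, seconds):
    """Build a clean sine wave standing in for a heartbeat pressure signal."""
    freq_hz = bpm / 60.0
    count = int(sample_rate_hz * seconds)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate_hz) for i in range(count)]
```

Feeding `synthetic_pulse(72, 50, 10)` into `estimate_pulse_bpm` with a 50 Hz sampling rate should give an estimate close to 72 bpm, limited by the integer lag resolution. A real earphone signal with an imperfect seal would be far noisier, and handling that is the hard part the post attributes to the new algorithm.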

23:30 Posted in Physiological Computing, Wearable & mobile

Sep 10, 2013

BITalino: Do More!

BITalino is a low-cost toolkit that allows anyone from students to professional developers to create projects and applications with physiological sensors. Out of the box, BITalino already integrates easy-to-use software & hardware blocks with sensors for electrocardiography (ECG), electromyography (EMG), electrodermal activity (EDA), an accelerometer, & ambient light. Imagination is the limit; each individual block can be snapped off and combined to prototype anything you want. You can connect other sensors, including your own custom designs.

18:22 Posted in Physiological Computing, Research tools, Self-Tracking, Wearable & mobile

Aug 07, 2013

International Conference on Physiological Computing Systems

7-9 January 2014, Lisbon, Portugal

Physiological data in its different dimensions, either bioelectrical, biomechanical, biochemical or biophysical, and collected through specialized biomedical devices, video and image capture or other sources, is opening new boundaries in the field of human-computer interaction into what can be defined as Physiological Computing. PhyCS is the annual meeting of the physiological interaction and computing community, and serves as the main international forum for engineers, computer scientists and health professionals, interested in outstanding research and development that bridges the gap between physiological data handling and human-computer interaction.

Regular Paper Submission Extension: September 15, 2013

Regular Paper Authors Notification: October 23, 2013

Regular Paper Camera Ready and Registration: November 5, 2013