Jun 21, 2016

New book on Human Computer Confluence - FREE PDF!

Two pieces of good news for Positive Technology followers.

1) Our new book on Human Computer Confluence is out!

2) It can be downloaded for free here

Human-computer confluence refers to an invisible, implicit, embodied or even implanted interaction between humans and system components. New classes of user interfaces are emerging that make use of several sensors and are able to adapt their physical properties to the current situational context of users.

A key aspect of human-computer confluence is its potential for transforming human experience in the sense of bending, breaking and blending the barriers between the real, the virtual and the augmented, to allow users to experience their body and their world in new ways. Research on Presence, Embodiment and Brain-Computer Interface is already exploring these boundaries and asking questions such as: Can we seamlessly move between the virtual and the real? Can we assimilate fundamentally new senses through confluence?

The aim of this book is to explore the boundaries and intersections of the multidisciplinary field of HCC and discuss its potential applications in different domains, including healthcare, education, training and even arts.

DOWNLOAD THE FULL BOOK HERE AS OPEN ACCESS

Please cite as follows:

Andrea Gaggioli, Alois Ferscha, Giuseppe Riva, Stephen Dunne, Isabelle Viaud-Delmon (2016). Human computer confluence: transforming human experience through symbiotic technologies. Warsaw: De Gruyter. ISBN 9783110471120.

09:53 Posted in AI & robotics, Augmented/mixed reality, Biofeedback & neurofeedback, Blue sky, Brain training & cognitive enhancement, Brain-computer interface, Cognitive Informatics, Cyberart, Cybertherapy, Emotional computing, Enactive interfaces, Future interfaces, ICT and complexity, Neurotechnology & neuroinformatics, Positive Technology events, Research tools, Self-Tracking, Serious games, Technology & spirituality, Telepresence & virtual presence, Virtual worlds, Wearable & mobile | Permalink

May 26, 2016

From User Experience (UX) to Transformative User Experience (T-UX)

In 1999, Joseph Pine and James Gilmore wrote a seminal book titled “The Experience Economy” (Harvard Business School Press, Boston, MA) that theorized the shift from a service-based economy to an experience-based economy.

According to these authors, in the new experience economy the goal of the purchase is no longer to own a product (be it a good or service), but to use it in order to enjoy a compelling experience. An experience, thus, is a whole-new type of offer: in contrast to commodities, goods and services, it is designed to be as personal and memorable as possible. Just as in a theatrical representation, companies stage meaningful events to engage customers in a memorable and personal way, by offering activities that provide engaging and rewarding experiences.

Indeed, if one looks back at the past ten years, the concept of experience has become more central to several fields, including tourism, architecture, and – perhaps more relevant for this column – to human-computer interaction, with the rise of “User Experience” (UX).

The concept of UX was introduced by Donald Norman in a 1995 article published in the CHI proceedings (D. Norman, J. Miller, A. Henderson: What You See, Some of What's in the Future, And How We Go About Doing It: HI at Apple Computer. Proceedings of CHI 1995, Denver, Colorado, USA). Norman argued that focusing exclusively on usability attributes (i.e. ease of use, efficacy, effectiveness) when designing an interactive product is not enough; one should take into account the whole experience of the user with the system, including users’ emotional and contextual needs. Since then, the UX concept has assumed increasing importance in HCI. As McCarthy and Wright emphasized in their book “Technology as Experience” (MIT Press, 2004):

“In order to do justice to the wide range of influences that technology has in our lives, we should try to interpret the relationship between people and technology in terms of the felt life and the felt or emotional quality of action and interaction.” (p. 12).

However, according to Pine and Gilmore, experience may not be the last step of what they call the “Progression of Economic Value”. They speculated further into the future, identifying the “Transformation Economy” as the likely next phase. In their view, while experiences are essentially memorable events which stimulate the sensorial and emotional levels, transformations go much further in that they are the result of a series of experiences staged by companies to guide customers in learning, taking action and eventually achieving their aspirations and goals.

In Pine and Gilmore's terms, an aspirant is the individual who seeks advice for personal change (e.g. a better figure, a new career, and so forth), while the provider of this change (a dietitian, a university) is an elicitor. The elicitor guides the aspirant through a series of experiences which are designed with a certain purpose and goals. According to Pine and Gilmore, the main difference between an experience and a transformation is that the latter occurs when an experience is customized:

“When you customize an experience to make it just right for an individual - providing exactly what he needs right now - you cannot help changing that individual. When you customize an experience, you automatically turn it into a transformation, which companies create on top of experiences (recall that phrase: “a life-transforming experience”), just as they create experiences on top of services and so forth” (p. 244).

A further key difference between experiences and transformations concerns their effects: because an experience is inherently personal, no two people can have the same one. Likewise, no individual can undergo the same transformation twice: the second time it is attempted, the individual would no longer be the same person (pp. 254-255).

But what will be the impact of this upcoming “transformation economy” on how people relate to technology? If in the experience economy the buzzword is “User Experience”, in the next stage the new buzzword might be “User Transformation”.

Indeed, we can see some initial signs of this shift. For example, FitBit and similar self-tracking gadgets are starting to offer personalized advice to foster enduring changes in users’ lifestyle; another example is from the fields of ambient intelligence and domotics, where there is an increasing focus on designing systems that are able to learn from the user’s behaviour (e.g. by tracking the movement of an elderly person at home) to provide context-aware adaptive services (e.g. sending an alert when the user is at risk of falling).

But the most important ICT step towards the transformation economy is likely to take place with the introduction of next-generation immersive virtual reality systems. Since these new systems are based on mobile devices (an example is the recent partnership between Oculus and Samsung), they are able to deliver VR experiences that incorporate information on the external/internal context of the user (e.g. time, location, temperature, mood etc.) by using the sensors embedded in the mobile phone.

By personalizing the immersive experience with context-based information, it might be possible to induce higher levels of involvement and presence in the virtual environment. In the case of cyber-therapeutic applications, this could translate into the development of more effective, transformative virtual healing experiences.

Furthermore, the emergence of "symbiotic technologies", such as neuroprosthetic devices and neuro-biofeedback, is enabling a direct connection between the computer and the brain. Increasingly, these neural interfaces are moving from the biomedical domain to become consumer products. But unlike existing digital experiential products, symbiotic technologies have the potential to transform basic human experiences more radically.

Brain-computer interfaces, immersive virtual reality and augmented reality and their various combinations will allow users to create “personalized alterations” of experience. Just as nowadays we can download and install a number of “plug-ins”, i.e. apps to personalize our experience with hardware and software products, so very soon we may download and install new “extensions of the self”, or “experiential plug-ins” which will provide us with a number of options for altering/replacing/simulating our sensorial, emotional and cognitive processes.

Such mediated recombinations of human experience will result from the application of existing neuro-technologies in completely new domains. Although virtual reality and brain-computer interfaces were originally developed for applications in specific domains (e.g. military simulations, neurorehabilitation, etc.), today the use of these technologies has been extended to other fields of application, ranging from entertainment to education.

In the field of biology, Stephen Jay Gould and Elizabeth Vrba (Paleobiology, 8, 4-15, 1982) defined “exaptation” as the process in which a feature acquires a function that was not acquired through natural selection. Likewise, the exaptation of neurotechnologies to the digital consumer market may lead to the rise of a novel “neuro-experience economy”, in which technology-mediated transformation of experience is the main product.

Just as a Genetically-Modified Organism (GMO) is an organism whose genetic material is altered using genetic-engineering techniques, so we could define a Technologically-Modified Experience (ETM) as a re-engineered experience resulting from the artificial manipulation of the neurobiological bases of sensorial, affective, and cognitive processes.

Clearly, the emergence of the transformative neuro-experience economy will not happen in weeks or months but rather in years. It will take some time before people find brain-computer devices on the shelves of electronics stores: most of these tools are still in the pre-commercial phase at best, and some are found only in laboratories.

Nevertheless, the mere possibility that such a scenario will sooner or later come to pass raises important questions that should be addressed before symbiotic technologies enter our lives: does technological alteration of human experience threaten the autonomy of individuals, or the authenticity of their lives? How can we help individuals decide which transformations are good or bad for them?

Answering these important questions will require the collaboration of many disciplines, including philosophy, computer ethics and, of course, cyberpsychology.

Apr 27, 2016

Predictive Technologies: Can Smart Tools Augment the Brain's Predictive Abilities?

Nov 17, 2015

The new era of Computational Biomedicine

In recent years, the increasing convergence between nanotechnology, biomedicine and health informatics has generated massive amounts of data, which are changing the way healthcare research, development, and applications are done.

Clinical data integrate physiological data, enabling detailed descriptions of various healthy and diseased states, progression, and responses to therapies. Furthermore, mobile and home-based devices monitor vital signs and activities in real-time and communicate with personal health record services, personal computers, smartphones, caregivers, and health care professionals.

However, our ability to analyze and interpret multiple sources of data lags far behind today’s data generation and storage capacity. Consequently, mathematical and computational models are increasingly used to help interpret massive biomedical data produced by high-throughput genomics and proteomics projects. Advanced applications of computer models that enable the simulation of biological processes are used to generate hypotheses and plan experiments.

The emerging discipline of computational biomedicine is concerned with the application of computer-based techniques, particularly modelling and simulation, to human health. For almost ten years, this vision has been at the core of a European-funded programme called “Virtual Physiological Human”. The goal of this initiative is to develop next-generation computer technologies to integrate all information available for each patient, and to generate computer models capable of predicting how the health of that patient will evolve under certain conditions.

In particular, this programme is expected, over the next decades, to transform the study and practice of healthcare, moving it towards the priorities known as the ‘4Ps’: predictive, preventative, personalized and participatory medicine. Future developments of computational biomedicine may make it possible to develop not just qualitative but truly quantitative analytical tools, that is, models, on the basis of the data available through the system just described. Information not available today (large cohort studies nowadays include thousands of individuals, whereas here we are talking about millions of records) will be available for free. Large cohorts of data will be available for online consultation and download. Integrative and multi-scale models will benefit from the availability of this large amount of data by using parameter estimation in a statistically meaningful manner. At the same time, distribution maps of important parameters will be generated and continuously updated. Through a certain mechanism, users will be given the opportunity to express their interest in this or that model so as to set up a consensus model selection process. Moreover, models should be open for consultation and annotation. Flexible and user-friendly services have many potential positive outcomes. Some examples include simulation of case studies, tests, and validation of specific assumptions on the nature of related diseases, understanding the world-wide distribution of these parameters and disease patterns, ability to hypothesize intervention strategies in cases such as spreading of an infectious disease, and advanced risk modeling.

11:25 Posted in Blue sky, ICT and complexity, Physiological Computing, Positive Technology events | Permalink

Aug 23, 2015

Gartner hype cycle sees digital humanism as emergent trend

The journey to digital business continues as the key theme of Gartner, Inc.'s "Hype Cycle for Emerging Technologies, 2015." New to the Hype Cycle this year is the emergence of technologies that support what Gartner defines as digital humanism — the notion that people are the central focus in the manifestation of digital businesses and digital workplaces.

The Hype Cycle for Emerging Technologies report is the longest-running annual Hype Cycle, providing a cross-industry perspective on the technologies and trends that business strategists, chief innovation officers, R&D leaders, entrepreneurs, global market developers and emerging-technology teams should consider in developing emerging-technology portfolios.

"The Hype Cycle for Emerging Technologies is the broadest aggregate Gartner Hype Cycle, featuring technologies that are the focus of attention because of particularly high levels of interest, and those that Gartner believes have the potential for significant impact," said Betsy Burton, vice president and distinguished analyst at Gartner. "This year, we encourage CIOs and other IT leaders to dedicate time and energy focused on innovation, rather than just incremental business advancement, while also gaining inspiration by scanning beyond the bounds of their industry."

Major changes in the 2015 Hype Cycle for Emerging Technologies include the placement of autonomous vehicles, which have shifted from pre-peak to peak of the Hype Cycle. While autonomous vehicles are still embryonic, this movement still represents a significant advancement, with all major automotive companies putting autonomous vehicles on their near-term roadmaps. Similarly, the growing momentum (from post-trigger to pre-peak) in connected-home solutions has introduced entirely new solutions and platforms enabled by new technology providers and existing manufacturers.

16:32 Posted in ICT and complexity | Permalink | Comments (1)

Apr 05, 2015

Are you concerned about AI?

Recently, a growing number of opinion leaders have started to point out the potential risks associated with the rapid advancement of Artificial Intelligence. This shared concern has led an interdisciplinary group of scientists, technologists and entrepreneurs to sign an open letter (http://futureoflife.org/misc/open_letter/), drafted by the Future of Life Institute, which focuses on priorities to be considered as Artificial Intelligence develops as well as on the potential dangers posed by this paradigm.

The concern that machines may soon dominate humans, however, is not new: in the last thirty years, this topic has been widely represented in movies (e.g. Terminator, the Matrix), novels and various interactive arts. For example, Australian-based performance artist Stelarc has incorporated themes of cyborgization and other human-machine interfaces in his work, by creating a number of installations that confront us with the question of where the human ends and technology begins.

In his well-received 2005 book “The Singularity Is Near: When Humans Transcend Biology” (Viking Penguin: New York), inventor and futurist Ray Kurzweil argued that Artificial Intelligence is one of the interacting forces that, together with genetics, robotics and nanotechnology, may soon converge to overcome our biological limitations and usher in the beginning of the Singularity, during which Kurzweil predicts that human life will be irreversibly transformed. According to Kurzweil, the Singularity will take place around 2045 and will probably represent the most extraordinary event in all of human history.

Ray Kurzweil’s vision of the future of intelligence is at the forefront of the transhumanist movement, which considers scientific and technological advances as a means to augment human physical and cognitive abilities, with the final aim of improving and even extending life. According to transhumanists, however, the choice whether to benefit from such enhancement options should generally reside with the individual. The concept of transhumanism has been criticized, among others, by the influential American philosopher of technology Don Ihde, who pointed out that no technology will ever be completely internalized, since any technological enhancement implies a compromise. Ihde has distinguished four different relations that humans can have with technological artifacts. In particular, in the “embodiment relation” a technology becomes (quasi)transparent, allowing a partial symbiosis of ourselves and the technology. In wearing eyeglasses, as Ihde exemplifies, I do not look “at” them but “through” them at the world: they are already assimilated into my body schema, withdrawing from my perceiving.

According to Ihde, there is a doubled desire which arises from such embodiment relations: “It is the doubled desire that, on one side, is a wish for total transparency, total embodiment, for the technology to truly "become me." (...) But that is only one side of the desire. The other side is the desire to have the power, the transformation that the technology makes available. Only by using the technology is my bodily power enhanced and magnified by speed, through distance, or by any of the other ways in which technologies change my capacities. (...) The desire is, at best, contradictory. I want the transformation that the technology allows, but I want it in such a way that I am basically unaware of its presence. I want it in such a way that it becomes me. Such a desire both secretly rejects what technologies are and overlooks the transformational effects which are necessarily tied to human-technology relations. This illusory desire belongs equally to pro- and anti-technology interpretations of technology.” (Ihde, D. (1990). Technology and the Lifeworld: From Garden to Earth. Bloomington: Indiana, p. 75).

Despite the different philosophical stances and assumptions on what our future relationship with technology will look like, there is little doubt that these questions will become more pressing and acute in the coming years. In my personal view, technology should not be viewed as a means to replace human life, but as an instrument for improving it. As William S. Haney II suggests in his book “Cyberculture, Cyborgs and Science Fiction: Consciousness and the Posthuman” (Rodopi: Amsterdam, 2006), “each person must choose for him or herself between the technological extension of physical experience through mind, body and world on the one hand, and the natural powers of human consciousness on the other as a means to realize their ultimate vision.” (ix, Preface).

23:26 Posted in AI & robotics, Blue sky, ICT and complexity | Permalink | Comments (0)

Oct 16, 2014

TIME: Fear, Misinformation, and Social Media Complicate Ebola Fight

From TIME

Based on Facebook and Twitter chatter, it can seem like Ebola is everywhere. Following the first diagnosis of an Ebola case in the United States on Sept. 30, mentions of the virus on Twitter leapt from about 100 per minute to more than 6,000. Cautious health officials have tested potential cases in Newark, Miami Beach and Washington D.C., sparking more worry. Though the patients all tested negative, some people are still tweeting as if the disease is running rampant in these cities. In Iowa the Department of Public Health was forced to issue a statement dispelling social media rumors that Ebola had arrived in the state. Meanwhile there has been a constant stream of posts saying that Ebola can be spread through the air, water, or food, which are all inaccurate claims.

Research scientists who study how we communicate on social networks have a name for these people: the “infected.”

11:05 Posted in ICT and complexity, Social Media | Permalink | Comments (0)

Oct 06, 2014

First direct brain-to-brain communication between human subjects

Via KurzweilAI.net

An international team of neuroscientists and robotics engineers have demonstrated the first direct remote brain-to-brain communication between two humans located 5,000 miles away from each other and communicating via the Internet, as reported in a paper recently published in PLOS ONE (open access).

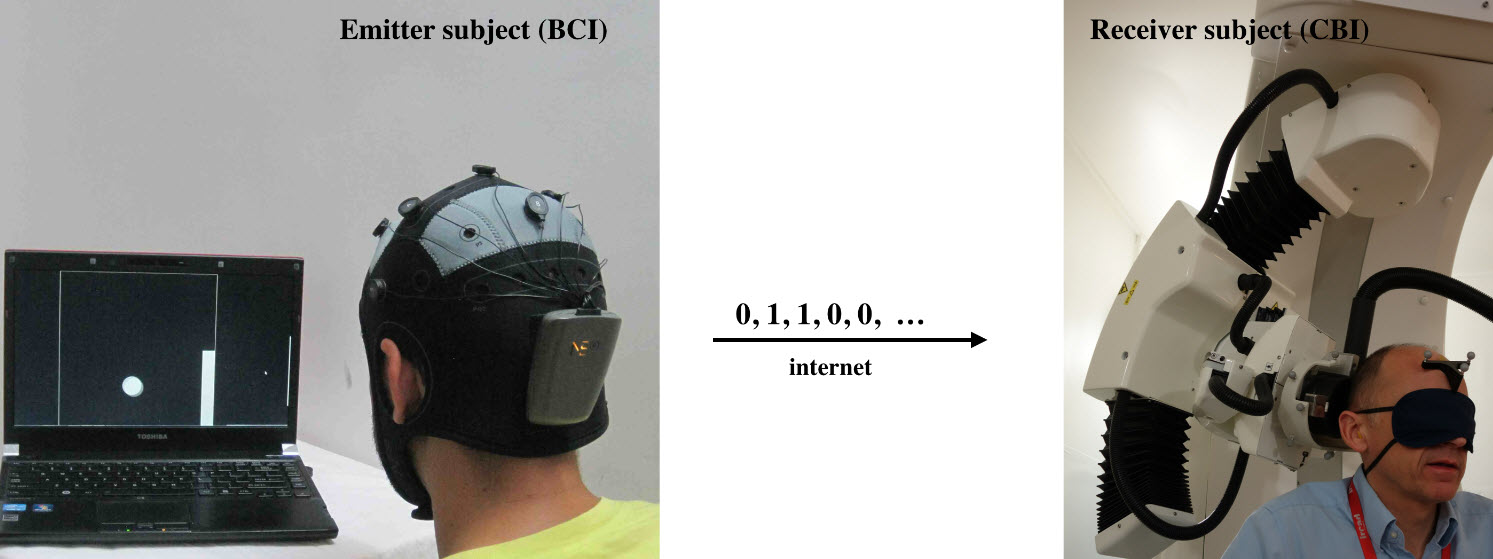

Emitter and receiver subjects with non-invasive devices supporting, respectively, a brain-computer interface (BCI), based on EEG changes, driven by motor imagery (left) and a computer-brain interface (CBI) based on the reception of phosphenes elicited by neuro-navigated TMS (right) (credit: Carles Grau et al./PLoS ONE)

In India, researchers encoded two words (“hola” and “ciao”) as binary strings and presented them as a series of cues on a computer monitor. They recorded the subject’s EEG signals as the subject was instructed to think about moving his feet (binary 0) or hands (binary 1). They then sent the recorded series of binary values in an email message to researchers in France, 5,000 miles away.

There, the binary strings were converted into a series of transcranial magnetic stimulation (TMS) pulses applied to a hotspot location in the right visual occipital cortex that either produced a phosphene (perceived flash of light) or not.
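The encoding chain described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the post does not say which character code the researchers used, so the 8-bit ASCII encoding here is a stand-in, the function names are hypothetical, and the choice that a 1 bit maps to a phosphene (and a 0 bit to none) is assumed rather than taken from the text. Only the feet = 0 / hands = 1 mapping comes from the description above.

```python
# Illustrative sketch only: 8-bit ASCII is an assumption, not the
# encoding reported by the researchers.

def word_to_bits(word: str) -> list[int]:
    """Turn a word into a flat list of bits, most significant bit first."""
    bits = []
    for byte in word.encode("ascii"):
        for shift in range(7, -1, -1):
            bits.append((byte >> shift) & 1)
    return bits


def bits_to_word(bits: list[int]) -> str:
    """Rebuild the word from consecutive groups of 8 bits."""
    chars = []
    for start in range(0, len(bits), 8):
        value = 0
        for bit in bits[start:start + 8]:
            value = (value << 1) | bit
        chars.append(chr(value))
    return "".join(chars)


# Emitter side: each bit becomes a motor-imagery cue (from the post).
IMAGERY_CUE = {0: "feet", 1: "hands"}


def emitter_cues(bits: list[int]) -> list[str]:
    return [IMAGERY_CUE[bit] for bit in bits]


# Receiver side: each bit becomes a TMS outcome.
# Assumption: 1 -> phosphene, 0 -> no phosphene.
def receiver_stimuli(bits: list[int]) -> list[str]:
    return ["phosphene" if bit else "no phosphene" for bit in bits]


if __name__ == "__main__":
    message = word_to_bits("hola")
    print(emitter_cues(message)[:8])
    print(receiver_stimuli(message)[:8])
    print(bits_to_word(message))
```

In this toy version the channel is lossless; in the experiment each bit had to survive EEG classification on one side and subjective phosphene reports on the other.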

“We wanted to find out if one could communicate directly between two people by reading out the brain activity from one person and injecting brain activity into the second person, and do so across great physical distances by leveraging existing communication pathways,” explains coauthor Alvaro Pascual-Leone, MD, PhD, Director of the Berenson-Allen Center for Noninvasive Brain Stimulation at Beth Israel Deaconess Medical Center (BIDMC) and Professor of Neurology at Harvard Medical School.

A team of researchers from Starlab Barcelona, Spain and Axilum Robotics, Strasbourg, France conducted the experiment. A second similar experiment was conducted between individuals in Spain and France.

“We believe these experiments represent an important first step in exploring the feasibility of complementing or bypassing traditional language-based or other motor/PNS mediated means in interpersonal communication,” the researchers say in the paper.

“Although certainly limited in nature (e.g., the bit rates achieved in our experiments were modest even by current BCI (brain-computer interface) standards, mostly due to the dynamics of the precise CBI (computer-brain interface) implementation), these initial results suggest new research directions, including the non-invasive direct transmission of emotions and feelings or the possibility of sense synthesis in humans – that is, the direct interface of arbitrary sensors with the human brain using brain stimulation, as previously demonstrated in animals with invasive methods.

Brain-to-brain (B2B) communication system overview. On the left, the BCI subsystem is shown schematically, including electrodes over the motor cortex and the EEG amplifier/transmitter wireless box in the cap. Motor imagery of the feet codes the bit value 0, of the hands codes bit value 1. On the right, the CBI system is illustrated, highlighting the role of coil orientation for encoding the two bit values. Communication between the BCI and CBI components is mediated by the Internet. (Credit: Carles Grau et al./PLoS ONE)

“The proposed technology could be extended to support a bi-directional dialogue between two or more mind/brains (namely, by the integration of EEG and TMS systems in each subject). In addition, we speculate that future research could explore the use of closed mind-loops in which information associated to voluntary activity from a brain area or network is captured and, after adequate external processing, used to control other brain elements in the same subject. This approach could lead to conscious synthetically mediated modulation of phenomena best detected subjectively by the subject, including emotions, pain and psychotic, depressive or obsessive-compulsive thoughts.

“Finally, we anticipate that computers in the not-so-distant future will interact directly with the human brain in a fluent manner, supporting both computer- and brain-to-brain communication routinely. The widespread use of human brain-to-brain technologically mediated communication will create novel possibilities for human interrelation with broad social implications that will require new ethical and legislative responses.”

This work was partly supported by the EU FP7 FET Open HIVE project, the Starlab Kolmogorov project, and the Neurology Department of the Hospital de Bellvitge.

Aug 31, 2014

Information Entropy

Information – Entropy by Oliver Reichenstein

Will information technology affect our minds the same way the environment was affected by our analogue technology? Designers hold a key position in dealing with ever increasing data pollution. We are mostly focussed on speeding things up, on making sharing easier, faster, more accessible. But speed, usability, accessibility are not the main issue anymore. The main issues are not technological, they are structural, processual. What we lack is clarity, correctness, depth, time. Are there counter-techniques we can employ to turn data into information, information into knowledge, knowledge into wisdom?

Oliver Reichenstein — Information Entropy (SmashingConf NYC 2014) from Smashing Magazine on Vimeo.

Aug 07, 2013

Hive-mind solves tasks using Google Glass ant game

Re-blogged from New Scientist

Glass could soon be used for more than just snapping pics of your lunchtime sandwich. A new game will connect Glass wearers to a virtual ant colony vying for prizes by solving real-world problems that vex traditional crowdsourcing efforts.

Crowdsourcing is most famous for collaborative projects like Wikipedia and "games with a purpose" like FoldIt, which turns the calculations involved in protein folding into an online game. All require users to log in to a specific website on their PC.

Now Daniel Estrada of the University of Illinois in Urbana-Champaign and Jonathan Lawhead of Columbia University in New York are seeking to bring crowdsourcing to Google's wearable computer, Glass.

The pair have designed a game called Swarm! that puts a Glass wearer in the role of an ant in a colony. Similar to the pheromone trails laid down by ants, players leave virtual trails on a map as they move about. These behave like real ant trails, fading away with time unless reinforced by other people travelling the same route. Such augmented reality games already exist – Google's Ingress, for one – but in Swarm! the tasks have real-world applications.
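The trail behaviour described above (marks that fade with time unless other players reinforce them) can be illustrated with a small Python sketch. It is not the Swarm! implementation: the class name `TrailMap`, the exponential half-life decay, and the unit deposit per visit are all assumptions chosen for illustration.

```python
# Hypothetical model of fading, reinforceable trails. Exponential decay
# and the parameter values are assumptions, not details of Swarm!.

class TrailMap:
    def __init__(self, half_life_s: float = 3600.0):
        self.half_life_s = half_life_s
        # Maps a map cell to (strength at last update, time of last update).
        self._cells = {}

    def read(self, cell, now: float) -> float:
        """Return the trail strength at `cell`, decayed up to time `now`."""
        if cell not in self._cells:
            return 0.0
        strength, updated_at = self._cells[cell]
        elapsed = now - updated_at
        return strength * 0.5 ** (elapsed / self.half_life_s)

    def deposit(self, cell, now: float, amount: float = 1.0) -> None:
        """A player passes through `cell`, reinforcing whatever is left."""
        self._cells[cell] = (self.read(cell, now) + amount, now)


if __name__ == "__main__":
    trails = TrailMap(half_life_s=10.0)
    trails.deposit((3, 4), now=0.0)
    print(trails.read((3, 4), now=10.0))   # one half-life later
    trails.deposit((3, 4), now=10.0)       # a second player reinforces it
    print(trails.read((3, 4), now=10.0))
```

Storing the last update time and decaying lazily on read avoids having to sweep every cell on a timer, which is one plausible design for a map with many rarely visited locations.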

Swarm! players seek out virtual resources to benefit their colony, such as food, and must avoid crossing the trails of other colony members. They can also monopolise a resource pool by taking photos of its real-world location.

To gain further resources for their colony, players can carry out real-world tasks. For example, if the developers wanted to create a map of the locations of every power outlet in an airport, they could reward players with virtual food for every photo of a socket they took. The photos and location data recorded by Glass could then be used to generate a map that anyone could use. Such problems can only be solved by people out in the physical world, yet the economic incentives aren't strong enough for, say, the airport owner to provide such a map.

Estrada and Lawhead hope that by turning tasks such as these into games, Swarm! will capture the group intelligence ant colonies exhibit when they find the most efficient paths between food sources and the home nest.

Read full story

13:12 Posted in Augmented/mixed reality, Creativity and computers, ICT and complexity, Wearable & mobile | Permalink | Comments (0)

Jul 22, 2011

4th International Workshop on "From Event-Driven Business Process Management to Ubiquitous Complex Event Processing"

The Workshop

The 4th International Workshop on “Event-Driven Business Process Management” is co-located with the ServiceWave/Future Internet Conference 2011 in Poznan, Poland, October 25-28, 2011.

This workshop focuses on the topics of connecting the Internet of Services and Things with the management of business processes and the Future and Emerging Technologies as addressed by the ISTAG Recommendations of the European FET-F 2020 and Beyond Initiative. Such FET challenges are no longer limited to business processes, but focus on new ideas in order to connect processes on the basis of CEP with the disciplines of Cell Biology, Epigenetics, Brain Research, Robotics, Emergency Management, SocioGeonomics, Bio- and Quantum Computing, summarized under the concept of U-CEP.

Important Dates

Deadline paper or extended abstract submissions: 2 September 2011

Notification of acceptance: 26 September 2011

Workshop: 28 October 2011

Camera-ready papers: 15 December 2011

More information here

15:26 Posted in ICT and complexity | Permalink | Comments (0)

Mar 10, 2008

Nooron: a platform for collaboration on a global scale

18:50 Posted in ICT and complexity | Permalink | Comments (0)