Sep 23, 2018

The development of the Awe Experience Scale

Awe is a complex emotion composed of an appraisal of vastness and a need for accommodation. The purpose of this study was to develop a robust state measure of awe, the Awe Experience Scale (AWE-S), based on the extant experimental literature. In Study 1, participants (N = 501) wrote about an intense moment of awe that they had experienced and then completed a survey about their experience. Exploratory factor analysis revealed a 6-factor structure, including: altered time perception (F1); self-diminishment (F2); connectedness (F3); perceived vastness (F4); physical sensations (F5); need for accommodation (F6). Internal consistency was strong for each factor (α ≥ .80). Study 2 confirmed the 6-factor structure (N = 636) using fit indices (CFI = .905; RMSEA = .054). Each factor of the AWE-S is significantly correlated with the awe items of the modified Differential Emotions Scale (mDES) and Dispositional Positive Emotion Scale (D-PES). Triggers, valence, and themes associated with awe experiences are reported.

To cite this research:

Yaden, D.B., Kaufman, S.B., Hyde, E., Chirico, A., Gaggioli, A., Zhang, J.W., Keltner, D. (2018). The development of the Awe Experience Scale (AWE-S): A multifactorial measure for a complex emotion. The Journal of Positive Psychology. DOI: 10.1080/17439760.2018.1484940

18:57 Posted in Emotional computing | Permalink | Comments (0)

Jan 02, 2017

The Potential of Virtual Reality for the Investigation of Awe

Alice Chirico, David B. Yaden, Giuseppe Riva and Andrea Gaggioli

Front. Psychol., 09 November 2016 | https://doi.org/10.3389/fpsyg.2016.01766

The emotion of awe is characterized by the perception of vastness and a need for accommodation, which can include a positive and/or negative valence. While a number of studies have successfully manipulated this emotion, the issue of how to elicit particularly intense awe experiences in laboratory settings remains. We suggest that virtual reality (VR) is a particularly effective mood induction tool for eliciting awe. VR provides three key assets for improving awe. First, VR provides users with immersive and ecological yet controlled environments that can elicit a sense of “presence,” the subjective experience of “being there” in a simulated reality. Further, VR can be used to generate complex, vast stimuli, which can target specific theoretical facets of awe. Finally, VR allows for convenient tracking of participants’ behavior and physiological responses, allowing for more integrated assessment of emotional experience. We discuss the potential and challenges of the proposed approach with an emphasis on VR’s capacity to raise the signal of reactions to emotions such as awe in laboratory settings.

22:21 Posted in Emotional computing, Research tools, Virtual worlds | Permalink | Comments (0)

Jun 21, 2016

New book on Human Computer Confluence - FREE PDF!

Two pieces of good news for Positive Technology followers.

1) Our new book on Human Computer Confluence is out!

2) It can be downloaded for free here

Human-computer confluence refers to an invisible, implicit, embodied or even implanted interaction between humans and system components. New classes of user interfaces are emerging that make use of several sensors and are able to adapt their physical properties to the current situational context of users.

A key aspect of human-computer confluence is its potential for transforming human experience in the sense of bending, breaking and blending the barriers between the real, the virtual and the augmented, to allow users to experience their body and their world in new ways. Research on Presence, Embodiment and Brain-Computer Interface is already exploring these boundaries and asking questions such as: Can we seamlessly move between the virtual and the real? Can we assimilate fundamentally new senses through confluence?

The aim of this book is to explore the boundaries and intersections of the multidisciplinary field of HCC and discuss its potential applications in different domains, including healthcare, education, training and even arts.

DOWNLOAD THE FULL BOOK HERE AS OPEN ACCESS

Please cite as follows:

Andrea Gaggioli, Alois Ferscha, Giuseppe Riva, Stephen Dunne, Isabell Viaud-Delmon (2016). Human computer confluence: transforming human experience through symbiotic technologies. Warsaw: De Gruyter. ISBN 9783110471120.

09:53 Posted in AI & robotics, Augmented/mixed reality, Biofeedback & neurofeedback, Blue sky, Brain training & cognitive enhancement, Brain-computer interface, Cognitive Informatics, Cyberart, Cybertherapy, Emotional computing, Enactive interfaces, Future interfaces, ICT and complexity, Neurotechnology & neuroinformatics, Positive Technology events, Research tools, Self-Tracking, Serious games, Technology & spirituality, Telepresence & virtual presence, Virtual worlds, Wearable & mobile | Permalink

Feb 11, 2014

Neurocam

Via KurzweilAI.net

Keio University scientists have developed a “neurocam” — a wearable camera system that detects emotions, based on an analysis of the user’s brainwaves.

The hardware is a combination of Neurosky’s Mind Wave Mobile and a customized brainwave sensor.

The algorithm is based on measures of “interest” and “like” developed by Professor Mitsukura and the neurowear team.

The user's interest is quantified on a scale of 0 to 100. When the interest value exceeds 60, the camera automatically records a five-second clip of the scene, tagged with timestamp and location; clips can be replayed later and shared on Facebook.
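The trigger rule described above can be sketched in a few lines of Python. This is only an illustration of the thresholding idea, not the neurocam's actual software: the threshold of 60 and the five-second clip length come from the post, while the `Clip` type, the `clips_to_record` function, the sample format and the rule that a new clip cannot start while one is still recording are assumptions made for the example.

```python
from dataclasses import dataclass

INTEREST_THRESHOLD = 60  # from the post: record when interest exceeds 60
CLIP_SECONDS = 5         # from the post: five-second clips


@dataclass
class Clip:
    """Hypothetical record of one triggered clip."""
    start: float   # time (seconds) at which recording was triggered
    end: float     # start + clip length
    location: str  # location tag stored with the clip


def clips_to_record(samples, threshold=INTEREST_THRESHOLD, clip_seconds=CLIP_SECONDS):
    """Return the clips triggered by a stream of interest readings.

    samples: iterable of (timestamp_seconds, interest_0_to_100, location).
    Assumption: readings that arrive while a clip is still being
    recorded do not start a second, overlapping clip.
    """
    clips = []
    busy_until = float("-inf")
    for timestamp, interest, location in samples:
        if interest > threshold and timestamp >= busy_until:
            busy_until = timestamp + clip_seconds
            clips.append(Clip(timestamp, busy_until, location))
    return clips


# Made-up readings: two bursts of interest, plus one reading of exactly 60,
# which does not "exceed" the threshold and therefore triggers nothing.
samples = [(0, 42, "station"), (1, 61, "station"), (3, 75, "station"),
           (7, 88, "park"), (8, 60, "park")]
clips = clips_to_record(samples)
```

With these made-up readings, the reading at second 3 is ignored because the clip started at second 1 is still recording.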

The researchers plan to make the device smaller, more comfortable, and fashionable to wear.

19:31 Posted in Emotional computing, Neurotechnology & neuroinformatics, Wearable & mobile | Permalink | Comments (0)

Feb 09, 2014

Bodily maps of emotions

Via KurzweilAI.net

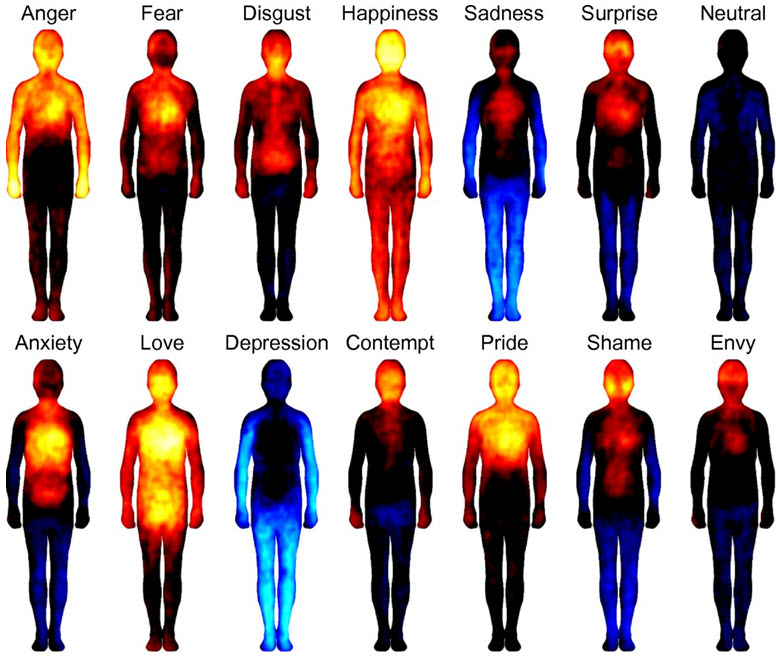

Bodily topography of basic (Upper) and nonbasic (Lower) emotions associated with words. The body maps show regions whose activation increased (warm colors) or decreased (cool colors) when feeling each emotion. (Credit: Lauri Nummenmaa et al./PNAS)

Researchers at Aalto University in Finland have compiled maps of emotional feelings associated with culturally universal bodily sensations, which could be at the core of emotional experience.

The researchers found that the most common emotions trigger strong bodily sensations, and the bodily maps of these sensations were topographically different for different emotions. The sensation patterns were, however, consistent across different West European and East Asian cultures, highlighting that emotions and their corresponding bodily sensation patterns have a biological basis.

The research was carried out online, and over 700 individuals from Finland, Sweden and Taiwan took part in the study. The researchers induced different emotional states in their Finnish and Taiwanese participants. Subsequently the participants were shown pictures of human bodies on a computer, and asked to color the bodily regions whose activity they felt increasing or decreasing.

“Unraveling the subjective bodily sensations associated with human emotions may help us to better understand mood disorders such as depression and anxiety, which are accompanied by altered emotional processing, autonomic nervous system activity, and somatosensation (body sensations),” the researchers said in an open-access paper in Proceedings of the National Academy of Sciences. “These topographical changes in emotion-triggered sensations in the body could provide a novel biomarker for emotional disorders.”

Abstract of PNAS paper

Emotions are often felt in the body, and somatosensory feedback has been proposed to trigger conscious emotional experiences. Here we reveal maps of bodily sensations associated with different emotions using a unique topographical self-report method. In five experiments, participants (n = 701) were shown two silhouettes of bodies alongside emotional words, stories, movies, or facial expressions. They were asked to color the bodily regions whose activity they felt increasing or decreasing while viewing each stimulus. Different emotions were consistently associated with statistically separable bodily sensation maps across experiments. These maps were concordant across West European and East Asian samples. Statistical classifiers distinguished emotion-specific activation maps accurately, confirming independence of topographies across emotions. We propose that emotions are represented in the somatosensory system as culturally universal categorical somatotopic maps. Perception of these emotion-triggered bodily changes may play a key role in generating consciously felt emotions.

21:57 Posted in Emotional computing, Research tools | Permalink | Comments (0)

Nov 03, 2013

Neurocam wearable camera reads your brainwaves and records what interests you

Via KurzweilAI.net

The neurocam is the world’s first wearable camera system that automatically records what interests you, based on brainwaves, DigInfo TV reports.

It consists of a headset with a brain-wave sensor and uses the iPhone’s camera to record a 5-second GIF animation. It could also be useful for life-logging.

The algorithm for quantifying brain waves was co-developed by Associate Professor Mitsukura at Keio University.

The project team plans to create an emotional interface.

22:59 Posted in Brain-computer interface, Emotional computing, Wearable & mobile | Permalink | Comments (0)

Aug 07, 2013

What Color Is Your Night Light? It May Affect Your Mood

When it comes to some of the health hazards of light at night, a new study suggests that the color of the light can make a big difference.

Read full story on Science Daily

13:46 Posted in Emotional computing, Future interfaces, Research tools | Permalink | Comments (0)

International Conference on Physiological Computing Systems

7-9 January 2014, Lisbon, Portugal

Physiological data in its different dimensions, either bioelectrical, biomechanical, biochemical or biophysical, and collected through specialized biomedical devices, video and image capture or other sources, is opening new boundaries in the field of human-computer interaction into what can be defined as Physiological Computing. PhyCS is the annual meeting of the physiological interaction and computing community, and serves as the main international forum for engineers, computer scientists and health professionals, interested in outstanding research and development that bridges the gap between physiological data handling and human-computer interaction.

Regular Paper Submission Extension: September 15, 2013

Regular Paper Authors Notification: October 23, 2013

Regular Paper Camera Ready and Registration: November 5, 2013

Jul 18, 2013

Identifying human emotions based on brain activity

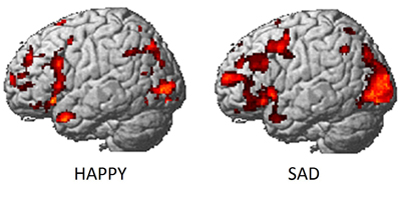

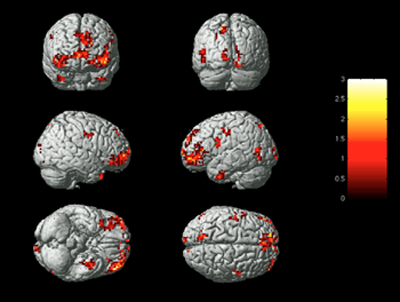

For the first time, scientists at Carnegie Mellon University have identified which emotion a person is experiencing based on brain activity.

The study, published in the June 19 issue of PLOS ONE, combines functional magnetic resonance imaging (fMRI) and machine learning to measure brain signals to accurately read emotions in individuals. Led by researchers in CMU’s Dietrich College of Humanities and Social Sciences, the findings illustrate how the brain categorizes feelings, giving researchers the first reliable process to analyze emotions. Until now, research on emotions has been long stymied by the lack of reliable methods to evaluate them, mostly because people are often reluctant to honestly report their feelings. Further complicating matters is that many emotional responses may not be consciously experienced.

Identifying emotions based on neural activity builds on previous discoveries by CMU’s Marcel Just and Tom M. Mitchell, which used similar techniques to create a computational model that identifies individuals’ thoughts of concrete objects, often dubbed “mind reading.”

“This research introduces a new method with potential to identify emotions without relying on people’s ability to self-report,” said Karim Kassam, assistant professor of social and decision sciences and lead author of the study. “It could be used to assess an individual’s emotional response to almost any kind of stimulus, for example, a flag, a brand name or a political candidate.”

One challenge for the research team was to find a way to repeatedly and reliably evoke different emotional states from the participants. Traditional approaches, such as showing subjects emotion-inducing film clips, would likely have been unsuccessful because the impact of film clips diminishes with repeated display. The researchers solved the problem by recruiting actors from CMU’s School of Drama.

“Our big breakthrough was my colleague Karim Kassam’s idea of testing actors, who are experienced at cycling through emotional states. We were fortunate, in that respect, that CMU has a superb drama school,” said George Loewenstein, the Herbert A. Simon University Professor of Economics and Psychology.

For the study, 10 actors were scanned at CMU’s Scientific Imaging & Brain Research Center while viewing the words of nine emotions: anger, disgust, envy, fear, happiness, lust, pride, sadness and shame. While inside the fMRI scanner, the actors were instructed to enter each of these emotional states multiple times, in random order.

Another challenge was to ensure that the technique was measuring emotions per se, and not the act of trying to induce an emotion in oneself. To meet this challenge, a second phase of the study presented participants with neutral and disgusting photos that they had not seen before. The computer model, built by statistically analyzing the fMRI activation patterns gathered for 18 emotional words, had learned the emotion patterns from self-induced emotions. It was able to correctly identify the emotional content of photos being viewed using the brain activity of the viewers.

To identify emotions within the brain, the researchers first used the participants’ neural activation patterns in early scans to identify the emotions experienced by the same participants in later scans. The computer model achieved a rank accuracy of 0.84. Rank accuracy refers to the percentile rank of the correct emotion in an ordered list of the computer model guesses; random guessing would result in a rank accuracy of 0.50.
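To make the metric concrete, here is a minimal Python sketch of rank accuracy as described above. The normalization used (1.0 when the correct emotion is the first guess, 0.0 when it is the last) is an assumption: it is one common way of computing a percentile rank that gives 0.50 for random guessing, and the study's exact formula may differ. The function names are hypothetical.

```python
EMOTIONS = ["anger", "disgust", "envy", "fear", "happiness",
            "lust", "pride", "sadness", "shame"]


def rank_accuracy(ranked_guesses, correct):
    """Normalized rank of the correct label in the model's ordered guesses.

    Returns 1.0 if the correct label is the first guess and 0.0 if it is
    the last one (assumed normalization, see the note above).
    """
    n = len(ranked_guesses)
    if n < 2:
        raise ValueError("need at least two candidate labels")
    position = ranked_guesses.index(correct)  # 0-based position in the list
    return 1.0 - position / (n - 1)


def mean_rank_accuracy(trials):
    """Average rank accuracy over (ranked_guesses, correct_label) pairs."""
    scores = [rank_accuracy(guesses, correct) for guesses, correct in trials]
    return sum(scores) / len(scores)


# If the correct emotion were equally likely to land at any position,
# which is what random guessing amounts to, the average would be 0.5.
chance_level = mean_rank_accuracy([(EMOTIONS, label) for label in EMOTIONS])
```

Under this normalization, a model that lists the correct emotion second out of nine scores 0.875 on that trial.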

Next, the team took the machine learning analysis of the self-induced emotions to guess which emotion the subjects were experiencing when they were exposed to the disgusting photographs. The computer model achieved a rank accuracy of 0.91. With nine emotions to choose from, the model listed disgust as the most likely emotion 60 percent of the time and as one of its top two guesses 80 percent of the time.

Finally, they applied machine learning analysis of neural activation patterns from all but one of the participants to predict the emotions experienced by the hold-out participant. This answers an important question: If we took a new individual, put them in the scanner and exposed them to an emotional stimulus, how accurately could we identify their emotional reaction? Here, the model achieved a rank accuracy of 0.71, once again well above the chance guessing level of 0.50.
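The hold-out procedure in this last analysis is usually called leave-one-participant-out cross-validation. The sketch below shows only the splitting logic, in plain Python; the function name and the toy participant labels are hypothetical, and the classifier that the researchers trained on each split is not reproduced here.

```python
def leave_one_participant_out(participant_ids):
    """Yield (held_out, train_indices, test_indices) for each participant.

    participant_ids[i] names the participant who produced scan i.
    Every scan of the held-out participant goes into the test set, so
    the model is always evaluated on a person it has never seen.
    """
    for held_out in sorted(set(participant_ids)):
        train = [i for i, p in enumerate(participant_ids) if p != held_out]
        test = [i for i, p in enumerate(participant_ids) if p == held_out]
        yield held_out, train, test


# Toy example: five scans from three hypothetical participants.
scan_owner = ["p1", "p1", "p2", "p2", "p3"]
folds = list(leave_one_participant_out(scan_owner))
```

Each fold trains on the scans of all other participants and tests on the held-out one, which is what lets the result speak to how well the method would work on a new individual.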

“Despite manifest differences between people’s psychology, different people tend to neurally encode emotions in remarkably similar ways,” noted Amanda Markey, a graduate student in the Department of Social and Decision Sciences.

A surprising finding from the research was that almost equivalent accuracy levels could be achieved even when the computer model made use of activation patterns in only one of a number of different subsections of the human brain.

“This suggests that emotion signatures aren’t limited to specific brain regions, such as the amygdala, but produce characteristic patterns throughout a number of brain regions,” said Vladimir Cherkassky, senior research programmer in the Psychology Department.

The research team also found that while on average the model ranked the correct emotion highest among its guesses, it was best at identifying happiness and least accurate in identifying envy. It rarely confused positive and negative emotions, suggesting that these have distinct neural signatures. And, it was least likely to misidentify lust as any other emotion, suggesting that lust produces a pattern of neural activity that is distinct from all other emotional experiences.

Just, the D.O. Hebb University Professor of Psychology, director of the university’s Center for Cognitive Brain Imaging and leading neuroscientist, explained, “We found that three main organizing factors underpinned the emotion neural signatures, namely the positive or negative valence of the emotion, its intensity — mild or strong, and its sociality — involvement or non-involvement of another person. This is how emotions are organized in the brain.”

In the future, the researchers plan to apply this new identification method to a number of challenging problems in emotion research, including identifying emotions that individuals are actively attempting to suppress and multiple emotions experienced simultaneously, such as the combination of joy and envy one might experience upon hearing about a friend’s good fortune.

12:30 Posted in Emotional computing, Research tools | Permalink | Comments (0)

Oct 27, 2012

Using Activity-Related Behavioural Features towards More Effective Automatic Stress Detection

Giakoumis D, Drosou A, Cipresso P, Tzovaras D, Hassapis G, Gaggioli A, Riva G.

PLoS One. 2012;7(9):e43571. doi: 10.1371/journal.pone.0043571. Epub 2012 Sep 19

This paper introduces activity-related behavioural features that can be automatically extracted from a computer system, with the aim to increase the effectiveness of automatic stress detection. The proposed features are based on processing of appropriate video and accelerometer recordings taken from the monitored subjects. For the purposes of the present study, an experiment was conducted that utilized a stress-induction protocol based on the stroop colour word test. Video, accelerometer and biosignal (Electrocardiogram and Galvanic Skin Response) recordings were collected from nineteen participants. Then, an explorative study was conducted by following a methodology mainly based on spatiotemporal descriptors (Motion History Images) that are extracted from video sequences. A large set of activity-related behavioural features, potentially useful for automatic stress detection, were proposed and examined. Experimental evaluation showed that several of these behavioural features significantly correlate to self-reported stress. Moreover, it was found that the use of the proposed features can significantly enhance the performance of typical automatic stress detection systems, commonly based on biosignal processing.

Full paper available here

13:36 Posted in Emotional computing, Research tools, Wearable & mobile | Permalink | Comments (0)

Jul 05, 2012

Games as transpersonal technologies

When we think about video games, we generally think of them as computer programs designed to provide enjoyment and fun. However, the rapid evolution of gaming technologies, which includes advances in 3D graphics accelerators, stereoscopic displays, gesture recognition, wireless peripherals, etc., is providing novel human-computer interaction opportunities that are able to engage players’ minds and bodies in totally new ways. Thanks to these features, games are increasingly used for purposes other than pure entertainment, such as in medical rehabilitation, psychotherapy, education and training.

However, “serious” applications of video games do not represent the most advanced frontier of their evolution. A new trend is emerging, which consists in designing video-games that are able to deliver emotionally-rich, memorable and “transformative” experiences. In this new type of games, there is no shooting, no monsters, no competition, no score accumulation: players are virtually transported into charming and evocative places, where they can make extraordinary encounters, challenge physical laws, or become another form of life. The most representative examples of this emerging trend are the games created by computer scientist Jenova Chen.

J. Chen

Born in 1981 in Shanghai, Chen holds a master's degree from the Interactive Media Program of the School of Cinematic Arts at the University of Southern California. He believes that for video games to become a mature medium like film, it is important to create games that are able to induce different emotional responses in the player than just excitement or fear.

This design philosophy is reflected in his award-winning games "Cloud", "flOw" and "Flower". The first game, Cloud, was developed while Chen was still in college. The game is the story of a boy who dreams of flying through the sky while asleep in a hospital bed. The player takes control of the sleeping boy's avatar and guides him through his dream of a small group of islands with a light gathering of clouds, which can be manipulated by the player in various ways.

The second game, flOw, is about piloting a small, snake-like creature through an aquatic environment where players consume other organisms, evolve, and advance their organisms to the abyss. Despite its apparent simplicity, the game was received very well by the audience, attracting 350,000 downloads within the first two weeks following its release. One of the most innovative features of flOw is its implementation of Dynamic Difficulty Adjustment, which adapts the difficulty of the game to the player’s skill level.
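As a rough illustration of the general idea behind dynamic difficulty adjustment, the Python sketch below raises or lowers a difficulty level according to the player's recent success rate. It is a generic, performance-based scheme written for this example and does not describe how flOw itself implements the process; the class name, the window size and the thresholds are all assumptions.

```python
from collections import deque


class DifficultyAdjuster:
    """Keep difficulty in a band where the player succeeds fairly often,
    but not always (hypothetical thresholds)."""

    def __init__(self, level=5, min_level=1, max_level=10,
                 window=5, low=0.4, high=0.8):
        self.level = level
        self.min_level = min_level
        self.max_level = max_level
        self.low = low    # below this success rate the game gets easier
        self.high = high  # above this success rate the game gets harder
        self.outcomes = deque(maxlen=window)

    def record(self, success):
        """Register one challenge outcome and return the updated level."""
        self.outcomes.append(bool(success))
        if len(self.outcomes) == self.outcomes.maxlen:
            rate = sum(self.outcomes) / len(self.outcomes)
            if rate > self.high and self.level < self.max_level:
                self.level += 1
                self.outcomes.clear()  # judge the new level on fresh data
            elif rate < self.low and self.level > self.min_level:
                self.level -= 1
                self.outcomes.clear()
        return self.level


adjuster = DifficultyAdjuster()
for _ in range(5):
    level_after_wins = adjuster.record(True)     # five straight successes
for _ in range(5):
    level_after_losses = adjuster.record(False)  # then five straight failures
```

In this run the level rises by one after the streak of successes and drops back after the streak of failures, which keeps the challenge close to the player's current skill, the balance that flow theory associates with optimal experience.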

In the third game developed by Chen and his collaborators, Flower, the player controls the wind as it blows a single flower petal through the air; approaching flowers results in the player's petal being followed by other flower petals. Getting closer to flowers affects other features of the virtual world, such as colouring previously dead fields, or activating stationary windmills. The final goal is to blow the breeze that carries color into every part of the gaming world, defeating the dinginess that surrounds the flowers in the city. The game includes no text or dialogue, but is built upon a narrative structure whose basic elements are visual representations and emotional cues.

Chen's latest brainchild is Journey, where the player takes the role of a red-robed figure in a big desert populated by supernatural ruins. On the far horizon is a big mountain with a light beam shooting straight up into the sky, which becomes the natural destination of the adventure. While walking towards the mountain, the avatar can encounter other players, one at a time, if they are online; they cannot speak but can help each other in their journey if they wish. Again, as in the other three games, the aim of Journey is to evoke emotions and feelings that are difficult to put into words, and to produce memorable, inspiring experiences in the player.

Chen’s vision of gaming is rooted in Mihaly Csikszentmihalyi's theory of Flow. According to Csikszentmihalyi, flow is a complex state of consciousness characterized by high levels of concentration and involvement in the task at hand, enjoyment, a positive affective state and intrinsic motivation. This optimal experience is usually associated with activities which involve individuals’ creative abilities. During flow, people typically feel “strong, alert, in effortless control, unselfconscious, and at the peak of their abilities. Both the sense of time and emotional problems seem to disappear, and there is an exhilarating feeling of transcendence…. With such goals, we learn to order the information that enters consciousness and thereby improve the quality of our lives.” (Csikszentmihalyi, M. Flow: The Psychology of Optimal Experience. New York: Harper Perennial, 1991).

The most interesting aspect of Chen’s design philosophy is the fact that his videogames are based on established psychological theories and are purposefully designed to support positive emotions and foster the development of consciousness. From this perspective, Chen’s videogames could be seen as advanced “transpersonal technologies”. As suggested by Roy Ascott, a transpersonal technology is any medium that “enables us to transform our selves, transfer our thoughts and transcend the limitations of our bodies. Transpersonal experience gives us insight into the interconnectedness of all things, the permeability and instability of boundaries, the lack of distinction between part and whole, foreground and background, context and content” (Ascott, R. 1995. The Architecture of Cyberception. In Toy, M. (Ed.) Architects in Cyberspace. London: Academy Editions, pp. 38-41).

23:02 Posted in Emotional computing, Serious games, Technology & spirituality | Permalink | Comments (0)

Mar 28, 2012

Emotizer

Emotizer is a new emotional social network that allows you to bookmark places, people, objects, dreams and thoughts and link them to the emotions you're feeling within.

19:27 Posted in Emotional computing, Locative media, Self-Tracking | Permalink | Comments (0)

Jan 27, 2012

Moodscope

Moodscope is "an online personal mood management tool that helps people grappling with depression or mood disorders to effectively measure and track their moods". It allows tracking your "ups and downs" and displaying them on a graph to better understand how your mood fluctuates over time. And yes, of course it allows sharing your scores with trusted friends so they can support you if you are down.

20:02 Posted in Emotional computing, Self-Tracking | Permalink | Comments (0)

A smart-phone will detect people's emotions

MIT's Technology Review reports that Samsung researchers have released a smart-phone designed to "read" people's emotions. Rather than relying on specialized sensors or cameras, the phone infers a user's emotional state based on how he's using the phone.

"For example, it monitors certain inputs, such as the speed at which a user types, how often the “backspace” or “special symbol” buttons are pressed, and how much the device shakes. These measures let the phone postulate whether the user is happy, sad, surprised, fearful, angry, or disgusted, says Hosub Lee, a researcher with Samsung Electronics and the Samsung Advanced Institute of Technology’s Intelligence Group, in South Korea. Lee led the work on the new system. He says that such inputs may seem to have little to do with emotions, but there are subtle correlations between these behaviors and one’s mental state, which the software’s machine-learning algorithms can detect with an accuracy of 67.5 percent."

Once a phone infers an emotional state, it can then change how it interacts with the user: "The system could trigger different ringtones on a phone to convey the caller’s emotional state or cheer up someone who’s feeling low. ‘The smart phone might show a funny cartoon to make the user feel better,’ he says."
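To give a feel for the kind of inputs mentioned in the quoted report (typing speed, use of the backspace and special-symbol keys, device shake), here is a small Python sketch that turns a typing session into numeric features. It is purely illustrative: the function, the key naming and the feature definitions are assumptions, and the sketch does not include the machine-learning step that Samsung's system uses to map such inputs to an emotion.

```python
BACKSPACE = "BACKSPACE"  # hypothetical name for the backspace key event


def typing_features(keys, duration_seconds, shake_magnitudes):
    """Summarize one typing session as a dictionary of features.

    keys: list of key events (single characters or BACKSPACE).
    duration_seconds: length of the session.
    shake_magnitudes: accelerometer magnitudes sampled during the session.
    """
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    n = len(keys)
    backspaces = sum(1 for k in keys if k == BACKSPACE)
    # Assumption: a "special symbol" is any single character that is
    # neither alphanumeric nor whitespace.
    specials = sum(1 for k in keys
                   if len(k) == 1 and not k.isalnum() and not k.isspace())
    return {
        "keys_per_second": n / duration_seconds,
        "backspace_ratio": backspaces / n if n else 0.0,
        "special_symbol_ratio": specials / n if n else 0.0,
        "mean_shake": (sum(shake_magnitudes) / len(shake_magnitudes)
                       if shake_magnitudes else 0.0),
    }


features = typing_features(["h", "i", "!", BACKSPACE, "?", " ", "o", "k"],
                           duration_seconds=4.0,
                           shake_magnitudes=[0.1, 0.3])
```

A feature dictionary like this would then be passed to a trained classifier; which features and which learning algorithm the real system relies on is not specified in the post.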

19:07 Posted in Emotional computing, Wearable & mobile | Permalink | Comments (0)

Jan 05, 2011

INTERSTRESS video released

We have just released a new video introducing the INTERSTRESS project, an EU-funded initiative that aims to design, develop and test an advanced ICT-based solution for the assessment and treatment of psychological stress. The specific objectives of the project are:

- Quantitative and objective assessment of symptoms using biosensors and behavioral analysis
- Decision support for treatment planning through data fusion and detection algorithms
- Provision of warnings and motivating feedback to improve compliance and long-term outcomes

Credits: Virtual Reality Medical Institute

13:12 Posted in Cybertherapy, Emotional computing, Positive Technology events, Virtual worlds, Wearable & mobile | Permalink | Comments (0)

Aug 16, 2010

My Relax 3d

There is nothing more regenerating than a long sea vacation. But what do we do when we are back at the office and find an overwhelming pile of email? A good recovery strategy from post-vacation stress is essential, and advanced technologies may help.

For example, My Relax 3D is a mobile application that helps you relax while watching stunning 3D landscapes of an exotic island. When you enter the application, you can choose between highly realistic 3D environments depicting various island scenarios (e.g. a tropical forest, a sunset).

During the experience, a voiceover provides instructions to relieve stress and develop positive emotions.

The application is highly configurable: it can be experienced with or without 3D glasses (I strongly recommend using the glasses to enhance your feeling of "presence"). It is also possible to choose between different pleasant music themes.

Of course, it's not like a first class holiday in a luxury resort... but it's definitely the best you can do with five bucks!

18:07 Posted in Emotional computing, Meditation & brain, Wearable & mobile | Permalink | Comments (0) | Tags: relaxation, mobile, 3d

Nov 25, 2009

The Rationalizer

Via Leeander

The “Rationalizer” is a new concept device by Philips that is designed for “mirroring” your emotions.

The user wears an EmoBracelet on his/her wrist, which measures the arousal component of the user’s emotion through a galvanic skin response sensor. The EmoBracelet is wirelessly connected with the EmoBowl, a bowl with lighted patterns that displays the user’s emotional status.

The colors range from soft yellow through orange to deep red.

When the user sees that the bowl is flashing red, it means that it might be good to take a breather and calm down before making any irrational decisions (e.g. risky investments).

See how the Rationalizer works in this concept video:

21:07 Posted in Emotional computing | Permalink | Comments (0) | Tags: emotional computing, self tracking, stress, emotion detection

Apr 29, 2009

Emotional Cartography

Via Info Aesthetic

The freely downloadable book Emotional Cartography - Technologies of the Self is a collection of essays that explores the political, social and cultural implications of visualizing intimate biometric data and emotional experiences using technology. Using a variety of approaches, the essays investigate the apparent desire for technologies that map emotion.

18:11 Posted in Emotional computing, Information visualization | Permalink | Comments (0) | Tags: information visualization, affective computing

Nov 04, 2008

Textual emotion recognition and visualization

Synesketch is a textual emotion recognition and visualization engine based on the concept of synesthesia, or in other words: "code that feels the words visually". The Synesketch application is able to dynamically transform text into animated visual patterns.

The emotional parameters are based on a WordNet-based lexicon of words with their general and specific emotional weights for six emotion types: happiness, sadness, fear, anger, disgust and surprise. The visualization is based on a generative painting system of imaginary colliding particles; the colors and shapes of these patterns depend on the type and intensity of the interpreted textual emotions.
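The lexicon-based approach described above can be illustrated with a short Python sketch. The tiny lexicon, its weights and the function names are invented for the example and are not taken from the engine's WordNet-based lexicon; the sketch only shows how per-word emotional weights can be summed into a score for each of the six emotion types.

```python
EMOTIONS = ("happiness", "sadness", "fear", "anger", "disgust", "surprise")

# Hypothetical toy lexicon: word -> weights for the emotions it carries.
TOY_LEXICON = {
    "happy": {"happiness": 1.0},
    "lonely": {"sadness": 0.8},
    "afraid": {"fear": 0.9},
    "storm": {"fear": 0.5, "surprise": 0.25},
}


def emotion_weights(text, lexicon=TOY_LEXICON):
    """Sum the lexicon weights of every known word in the text."""
    totals = dict.fromkeys(EMOTIONS, 0.0)
    for word in text.lower().split():
        word = word.strip(".,;:!?\"'")  # drop surrounding punctuation
        for emotion, weight in lexicon.get(word, {}).items():
            totals[emotion] += weight
    return totals


def dominant_emotion(text, lexicon=TOY_LEXICON):
    """Return the emotion with the highest total, or None if no word matched."""
    totals = emotion_weights(text, lexicon)
    best = max(totals, key=totals.get)
    return best if totals[best] > 0 else None


weights = emotion_weights("Afraid of the storm!")
label = dominant_emotion("Afraid of the storm!")
```

In a visualization engine of this kind, the dominant emotion and its total weight would be the values that drive the colors and shapes of the generated patterns.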

22:02 Posted in Emotional computing, Information visualization | Permalink | Comments (0) | Tags: information visualization

Mar 16, 2008

Exmocare wins F&S Technology Innovation of the Year Award

Via Medgadget

Frost & Sullivan has awarded Exmocare, Inc. the 2008 North American Emotion Monitoring Technology Innovation of the Year Award for "developing the ExmoCare physiology and emotion monitoring platform that aids in understanding the physiological state of a person through monitoring the expression of emotion patterns. The platforms developed by the company Exmocare, Inc. are unique in that they are the first of their kind to become a first-stop solution for vital sign monitoring, emotional monitoring, and online reporting."

Press release: Exmocare Taps into $200 Billion Industry - Maker of Award-winning 24/7 Bluetooth Vital Signs Wristwatch

Product page: The Exmocare BT2...

23:36 Posted in Emotional computing | Permalink | Comments (0) | Tags: emotional computing