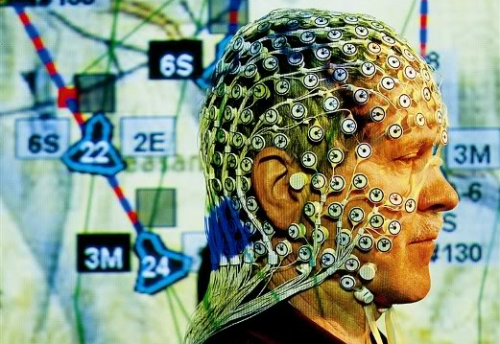

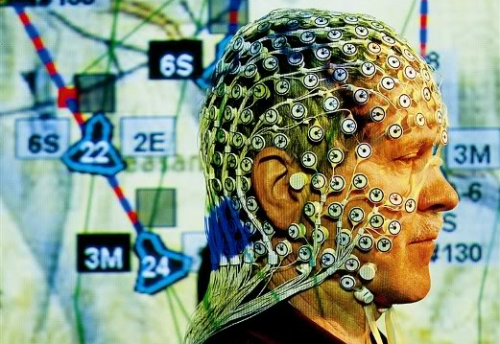

The increasing miniaturization and computing power of information technology devices allow new forms of interaction between human brains and computers, progressively blurring the boundaries between man and machine. An example is provided by brain-computer interface systems, which allow users to control a computer or an external device such as a robotic arm with their brain activity (in the latter case, we speak of “neuroprosthetics”).

The idea of using information technologies to augment cognition, however, is not new: it dates back to the 1950s and 1960s. One of the first to write about this concept was the British psychiatrist William Ross Ashby.

In his Introduction to Cybernetics (1956), he described intelligence as the “power of appropriate selection,” which could be amplified by means of technologies in the same way that physical power is amplified. A second major conceptual contribution to the development of cognitive augmentation was provided a few years later by computer scientist and Internet pioneer Joseph Licklider, in a paper entitled Man-Computer Symbiosis (1960).

In this article, Licklider envisions the development of computer technologies that will enable users “to think in interaction with a computer in the same way that you think with a colleague whose competence supplements your own.” According to his vision, the rise of computer networks would make it possible to connect millions of human minds within a “'thinking center' that will incorporate the functions of present-day libraries together with anticipated advances in information storage and retrieval.” This view represents a departure from the prevailing Artificial Intelligence approach of that time: instead of creating an artificial brain, Licklider focused on the possibility of developing new forms of interaction between humans and information technologies, with the aim of extending human intelligence.

A similar view was proposed in those same years by another computer visionary, Douglas Engelbart, in his famous 1962 report entitled Augmenting Human Intellect: A Conceptual Framework.

In this report, Engelbart defines the goal of intelligence augmentation as “increasing the capability of a man to approach a complex problem situation, to gain comprehension to suit his particular needs, and to derive solutions to problems. Increased capability in this respect is taken to mean a mixture of the following: more-rapid comprehension, better comprehension, the possibility of gaining a useful degree of comprehension in a situation that previously was too complex, speedier solutions, better solutions, and the possibility of finding solutions to problems that before seemed insoluble (…) We do not speak of isolated clever tricks that help in particular situations. We refer to a way of life in an integrated domain where hunches, cut-and-try, intangibles, and the human ‘feel for a situation’ usefully co-exist with powerful concepts, streamlined terminology and notation, sophisticated methods, and high-powered electronic aids.”

These “electronic aids” nowadays include any kind of hardware and software computing devices used, for example, to store information in external memories, to process complex data, to perform routine tasks and to support decision making. However, today the concept of cognitive augmentation is not limited to the amplification of human intellectual abilities through external hardware. As recently noted by Nick Bostrom and Anders Sandberg (Sci Eng Ethics 15:311–341, 2009), “What is new is the growing interest in creating intimate links between the external systems and the human user through better interaction. The software becomes less an external tool and more of a mediating ‘exoself’. This can be achieved through mediation, embedding the human within an augmenting ‘shell’ such as wearable computers (…) or virtual reality, or through smart environments in which objects are given extended capabilities” (p. 320).

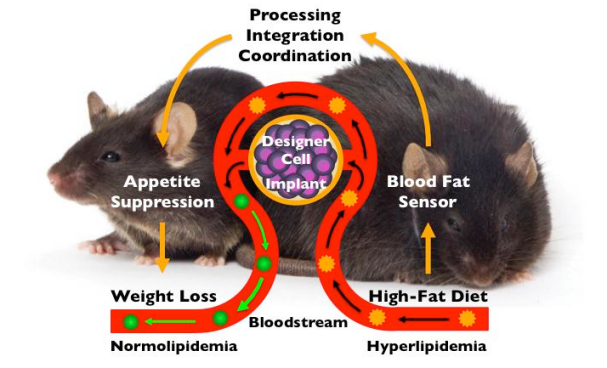

At the forefront of this trend is neurotechnology, an emerging research and development field that includes technologies specifically designed to improve brain function. Examples of neurotechnologies include brain training games such as BrainAge and programs like Fast ForWord, but also neurodevices used to monitor or regulate brain activity, such as deep brain stimulators (DBS), and smart prosthetics for the replacement of impaired sensory systems (e.g., cochlear or retinal implants).

Clearly, the vision of neurotechnology is not free of issues. The more powerful and sophisticated these technologies become, the more attention should be devoted to understanding the socio-economic, legal and ethical implications of their applications in various fields, from medicine to neuromarketing.