Aug 04, 2012

Non-Pharmacological Cognitive Enhancement

Neuropharmacology. 2012 Jul 21;

Authors: Dresler M, Sandberg A, Ohla K, Bublitz C, Trenado C, Mroczko-Wasowicz A, Kühn S, Repantis D

Abstract. The term "cognitive enhancement" usually characterizes interventions in humans that aim to improve mental functioning beyond what is necessary to sustain or restore good health. While the current bioethical debate mainly concentrates on pharmaceuticals, according to the given characterization, cognitive enhancement also by non-pharmacological means has to be regarded as enhancement proper. Here we summarize empirical data on approaches using nutrition, physical exercise, sleep, meditation, mnemonic strategies, computer training, and brain stimulation for enhancing cognitive capabilities. Several of these non-pharmacological enhancement strategies seem to be more efficacious compared to currently available pharmaceuticals usually coined as cognitive enhancers. While many ethical arguments of the cognitive enhancement debate apply to both pharmacological and non-pharmacological enhancers, some of them appear in new light when considered on the background of non-pharmacological enhancement.

17:49 Posted in Brain training & cognitive enhancement

The Virtual Brain

The Virtual Brain project promises "to deliver the first open simulation of the human brain based on individual large-scale connectivity", by "employing novel concepts from neuroscience, effectively reducing the complexity of the brain simulation while still keeping it sufficiently realistic".
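Here is a toy sketch that makes "simulation based on large-scale connectivity" a little more concrete. It is not The Virtual Brain's code or API. Each brain region is reduced to a single phase oscillator, with the Kuramoto model standing in for the richer neural mass models such a platform would use, and a hypothetical connectivity matrix decides how strongly regions pull on each other. The function names, the 4-region matrix and all parameter values are assumptions made for illustration.

```python
import math


def simulate_network(weights, omega, theta0, coupling=1.0, dt=0.01, steps=2000):
    """Euler-integrate Kuramoto phase oscillators coupled through a connectivity matrix.

    weights[i][j] is the (hypothetical) connection strength from region j to region i,
    omega[i] is the natural frequency of region i (rad/s), theta0[i] its initial phase.
    """
    n = len(theta0)
    theta = list(theta0)
    for _ in range(steps):
        new_theta = []
        for i in range(n):
            # Each region is pulled toward the phases of the regions it is connected to.
            drive = sum(weights[i][j] * math.sin(theta[j] - theta[i]) for j in range(n))
            new_theta.append(theta[i] + dt * (omega[i] + coupling / n * drive))
        theta = new_theta
    return theta


def order_parameter(theta):
    """Kuramoto order parameter r in [0, 1]; r = 1 means all regions are in phase."""
    re = sum(math.cos(t) for t in theta) / len(theta)
    im = sum(math.sin(t) for t in theta) / len(theta)
    return math.hypot(re, im)


# Toy 4-region "connectome": every region linked to every other with weight 1.
w = [[0.0 if i == j else 1.0 for j in range(4)] for i in range(4)]
start = [0.0, 0.5, 1.0, 1.5]
end = simulate_network(w, omega=[1.0] * 4, theta0=start)
print(order_parameter(start), order_parameter(end))
```

With this strong, uniform coupling the four regions drift into synchrony, so r rises toward 1. A subject-specific, sparse matrix in place of `w` is where "individual connectivity" would enter such a model.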

The Virtual Brain team includes well-recognized neuroscientists from all over the world. In the video below, Dr. Randy McIntosh explains what the project is about.

The first teaser release of The Virtual Brain software suite is available for download for Windows, Mac and Linux: http://thevirtualbrain.org/

13:34 Posted in Blue sky, Neurotechnology & neuroinformatics, Research tools

New HMD "Oculus Rift" launched on Kickstarter

The Oculus Rift, a new head-mounted display (HMD) that promises to take 3D gaming to the next level, was launched on Kickstarter last week with a funding goal of $250,000.

“With an incredibly wide field of view, high resolution display, and ultra-low latency head tracking, the Rift provides a truly immersive experience that allows you to step inside your favorite game and explore new worlds like never before.”

Technical specs of the Dev Kit (subject to change)

Head tracking: 6 degrees of freedom (DOF), ultra-low latency

Field of view: 110 degrees diagonal / 90 degrees horizontal

Resolution: 1280×800 (640×800 per eye)

Inputs: DVI/HDMI and USB

Platforms: PC and mobile

Weight: ~0.22 kilograms
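The "640×800 per eye" figure implies that the 1280×800 panel is simply split down the middle, one half per eye. Below is a minimal sketch of that split, assuming an even side-by-side layout. It ignores the lens-distortion correction a real HMD renderer would also apply, and `per_eye_viewports` and `Viewport` are hypothetical helpers, not part of the Oculus SDK.

```python
from typing import NamedTuple


class Viewport(NamedTuple):
    x: int       # left edge, in panel pixels
    y: int       # top edge, in panel pixels
    width: int
    height: int


def per_eye_viewports(panel_width=1280, panel_height=800):
    """Split a side-by-side panel into left-eye and right-eye viewports."""
    half = panel_width // 2
    left = Viewport(0, 0, half, panel_height)
    # The right eye gets whatever remains, so odd widths are still fully covered.
    right = Viewport(half, 0, panel_width - half, panel_height)
    return left, right


left, right = per_eye_viewports()
print(left)
print(right)
```

Each eye's image is rendered into its own viewport with a slightly offset virtual camera, which is what produces the stereoscopic 3D effect.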

The developer kits acquired through Kickstarter will include access to the Oculus Developer Center, a community for Oculus developers. The Oculus Rift SDK will include code, samples, and documentation to facilitate integration with any new or existing games, initially on PC and mobile, with consoles to follow.

Oculus says it will be showcasing the Rift at a number of upcoming trade shows, including QuakeCon, SIGGRAPH, GDC Europe, gamescom and Unite.

Aug 03, 2012

Around 40 researchers attended the 1st Summer School on Human-Computer Confluence in Milan

Jul 30, 2012

Nike’s stroboscopic eyewear improves visual memory, hand-eye coordination

Via ExtremeTech

In recent years athletes have concentrated on making themselves stronger and faster (sometimes to the detriment of their own health and of the sport's integrity; see baseball's Steroids Era), but building muscle mass is only part of the equation. Nike, one of the biggest sponsors of sport, sees potential (and profit) in specialized eyewear designed to let athletes fine-tune their sensory skills and “see their sport better” through modern technology.

To prove its point and draw attention to its Sparq Vapor Strobe sports glasses, Nike commissioned a study at Duke’s Institute for Brain Sciences that focuses on “stroboscopic training” using Nike’s eyewear. In essence, Nike went in search of scientific data to prove that simulating a strobe-like experience can increase visual short-term memory retention, and purportedly found it.

Read the full story

14:55 Posted in Brain training & cognitive enhancement, Wearable & mobile

Jul 14, 2012

Robot avatar body controlled by thought alone

Via: New Scientist

For the first time, a person lying in an fMRI machine has controlled a robot hundreds of kilometers away using thought alone.

"The ultimate goal is to create a surrogate, like in Avatar, although that’s a long way off yet,” says Abderrahmane Kheddar, director of the joint robotics laboratory at the National Institute of Advanced Industrial Science and Technology in Tsukuba, Japan.

Teleoperated robots, those that can be remotely controlled by a human, have been around for decades. Kheddar and his colleagues are going a step further. “True embodiment goes far beyond classical telepresence, by making you feel that the thing you are embodying is part of you,” says Kheddar. “This is the feeling we want to reach.”

To attempt this feat, researchers with the international Virtual Embodiment and Robotic Re-embodiment project used fMRI to scan the brain of university student Tirosh Shapira as he imagined moving different parts of his body. He attempted to direct a virtual avatar by thinking of moving his left or right hand or his legs.

The scanner works by measuring changes in blood flow to the brain’s primary motor cortex, and using this the team was able to create an algorithm that could distinguish between each thought of movement (see diagram). The commands were then sent via an internet connection to a small robot at the Béziers Technology Institute in France.

The set-up allowed Shapira to control the robot in near real time with his thoughts, while a camera on the robot’s head allowed him to see from the robot’s perspective. When he thought of moving his left or right hand, the robot moved 30 degrees to the left or right. Imagining moving his legs made the robot walk forward.
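The control scheme described above boils down to a mapping from three decoded imagery classes to robot actions. Here is a minimal sketch of that mapping, assuming the fMRI decoder already outputs one class label per trial. The labels (`left_hand`, `right_hand`, `legs`), the `RobotPose` type and the convention that positive angles mean turning left are all hypothetical; only the 30-degree turn and the "walk forward" action come from the article.

```python
from dataclasses import dataclass


@dataclass
class RobotPose:
    heading_deg: float = 0.0   # positive = counterclockwise (to the left), by assumption
    steps_forward: int = 0


TURN_DEG = 30  # from the article: hand imagery turns the robot 30 degrees


def apply_command(pose: RobotPose, decoded_class: str) -> RobotPose:
    """Map one decoded motor-imagery class to a robot action and return the new pose."""
    if decoded_class == "left_hand":
        return RobotPose((pose.heading_deg + TURN_DEG) % 360, pose.steps_forward)
    if decoded_class == "right_hand":
        return RobotPose((pose.heading_deg - TURN_DEG) % 360, pose.steps_forward)
    if decoded_class == "legs":
        return RobotPose(pose.heading_deg, pose.steps_forward + 1)
    raise ValueError(f"unknown imagery class: {decoded_class}")


pose = RobotPose()
for label in ["left_hand", "left_hand", "legs", "right_hand"]:
    pose = apply_command(pose, label)
print(pose)  # RobotPose(heading_deg=30.0, steps_forward=1)
```

In the real set-up each command also travels over the internet to the robot in France, so latency and dropped packets would have to be handled around this mapping.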

Read further at: http://www.newscientist.com/article/mg21528725.900-robot-avatar-body-controlled-by-thought-alone.html

18:51 Posted in Brain-computer interface, Future interfaces

Does technology affect happiness?

Via The New York Times

As young people spend more time on computers, smartphones and other devices, researchers are asking how all that screen time and multitasking affects children’s and teenagers’ ability to focus and learn — even drive cars.

A study from Stanford University, published Wednesday, wrestles with a new question: How is technology affecting their happiness and emotional development?

Read the full article here

http://bits.blogs.nytimes.com/2012/01/25/does-technology-affect-happiness/

18:51 Posted in Ethics of technology, Positive Technology events

Jul 05, 2012

A Real-Time fMRI-Based Spelling Device Immediately Enabling Robust Motor-Independent Communication

Curr Biol. 2012 Jun 27

Authors: Sorger B, Reithler J, Dahmen B, Goebel R

Human communication entirely depends on the functional integrity of the neuromuscular system. This is devastatingly illustrated in clinical conditions such as the so-called locked-in syndrome (LIS) [1], in which severely motor-disabled patients become incapable of communicating naturally, while being fully conscious and awake. For the last 20 years, research on motor-independent communication has focused on developing brain-computer interfaces (BCIs) implementing neuroelectric signals for communication (e.g., [2-7]), and BCIs based on electroencephalography (EEG) have already been applied successfully to concerned patients [8-11]. However, not all patients achieve proficiency in EEG-based BCI control [12]. Thus, more recently, hemodynamic brain signals have also been explored for BCI purposes [13-16]. Here, we introduce the first spelling device based on fMRI. By exploiting spatiotemporal characteristics of hemodynamic responses, evoked by performing differently timed mental imagery tasks, our novel letter encoding technique allows translating any freely chosen answer (letter-by-letter) into reliable and differentiable single-trial fMRI signals. Most importantly, automated letter decoding in real time enables back-and-forth communication within a single scanning session. Because the suggested spelling device requires only little effort and pretraining, it is immediately operational and possesses high potential for clinical applications, both in terms of diagnostics and establishing short-term communication with nonresponsive and severely motor-impaired patients.
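The abstract says each letter is encoded by a differently timed mental imagery task. One way such a combinatorial code could work is sketched below, assuming three tasks, three onset delays and three durations, which give 3 × 3 × 3 = 27 distinct codes (enough for 26 letters plus a space). The task names, delays and durations are assumptions for illustration, not parameters taken from the paper.

```python
import string
from itertools import product

# Hypothetical encoding dimensions: assumptions for illustration, not the paper's parameters.
TASKS = ["motor_imagery", "mental_calculation", "inner_speech"]
ONSET_DELAYS_S = [0, 10, 20]   # seconds after trial start at which the task begins
DURATIONS_S = [10, 20, 30]     # seconds for which the task is sustained

# 26 letters plus a space character: 27 symbols in total.
CHARACTERS = list(string.ascii_uppercase) + [" "]

# Each (task, onset delay, duration) triple is a distinct code: 3 * 3 * 3 = 27.
CODEBOOK = dict(zip(CHARACTERS, product(TASKS, ONSET_DELAYS_S, DURATIONS_S)))
DECODE = {code: char for char, code in CODEBOOK.items()}


def encode(text):
    """Return the (task, onset, duration) instruction for each character of text."""
    return [CODEBOOK[char] for char in text.upper()]


def decode(codes):
    """Map decoded (task, onset, duration) triples back to characters."""
    return "".join(DECODE[code] for code in codes)


print(encode("HI"))
print(decode(encode("yes")))  # YES
```

In the actual device the hard part is the decoding step: recognising task, onset and duration from a single noisy hemodynamic response. Here that step is reduced to a dictionary lookup.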

23:24 Posted in Brain-computer interface, Cybertherapy, Neurotechnology & neuroinformatics

Games as transpersonal technologies

When we think about video games, we generally think of them as computer programs designed to provide enjoyment and fun. However, the rapid evolution of gaming technologies, including advances in 3D graphics accelerators, stereoscopic displays, gesture recognition and wireless peripherals, is providing novel human-computer interaction opportunities that can engage players’ minds and bodies in entirely new ways. Thanks to these features, games are increasingly used for purposes other than pure entertainment, such as medical rehabilitation, psychotherapy, education and training.

However, “serious” applications of video games do not represent the most advanced frontier of their evolution. A new trend is emerging, which consists of designing video games that can deliver emotionally rich, memorable and “transformative” experiences. In this new type of game, there is no shooting, no monsters, no competition, no score accumulation: players are virtually transported to charming and evocative places, where they can have extraordinary encounters, challenge physical laws, or become another form of life. The most representative examples of this emerging trend are the games created by computer scientist Jenova Chen.

J. Chen

Born in 1981 in Shanghai, Chen holds a master's degree from the Interactive Media Program of the School of Cinematic Arts at the University of Southern California. He believes that for video games to become a mature medium like film, they must be able to induce emotional responses in the player other than just excitement or fear.

This design philosophy is reflected in his award-winning games "Cloud", "flOw" and "Flower". The first game, Cloud, was developed while Chen was still in college. It tells the story of a boy who dreams of flying through the sky while asleep in a hospital bed. The player controls the sleeping boy's avatar and guides him through a dream of a small group of islands with a light gathering of clouds, which the player can manipulate in various ways.

The second game, flOw, is about piloting a small, snake-like creature through an aquatic environment where players consume other organisms, evolve, and dive ever deeper toward the abyss. Despite its apparent simplicity, the game was very well received, attracting 350,000 downloads within the first two weeks after its release. One of the most innovative features of flOw is its use of Dynamic Difficulty Adjustment, which adapts the difficulty of the game to the player’s skill level.
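The post does not say how flOw implements Dynamic Difficulty Adjustment, so what follows is only a generic, rule-based sketch of the idea, not Chen's code. The game tracks the player's recent success rate and nudges difficulty up when things are too easy (risk of boredom) or down when they are too hard (risk of frustration), keeping challenge roughly matched to skill. The class name, the window size and the thresholds are all hypothetical.

```python
from collections import deque


class DifficultyAdjuster:
    """Rule-based dynamic difficulty adjustment (illustrative sketch, not flOw's implementation)."""

    def __init__(self, level=1.0, window=10, low=0.4, high=0.8, step=0.1,
                 min_level=0.5, max_level=5.0):
        self.level = level
        self.outcomes = deque(maxlen=window)  # most recent successes (True) / failures (False)
        self.low, self.high, self.step = low, high, step
        self.min_level, self.max_level = min_level, max_level

    def record(self, success: bool) -> float:
        """Log one encounter and return the (possibly adjusted) difficulty level."""
        self.outcomes.append(success)
        if len(self.outcomes) == self.outcomes.maxlen:
            rate = sum(self.outcomes) / len(self.outcomes)
            if rate > self.high:      # too easy: raise the challenge
                self.level = min(self.max_level, self.level + self.step)
                self.outcomes.clear()
            elif rate < self.low:     # too hard: lower the challenge
                self.level = max(self.min_level, self.level - self.step)
                self.outcomes.clear()
        return self.level


dda = DifficultyAdjuster()
for won in [True] * 10:      # ten successful encounters in a row
    level = dda.record(won)
print(level)                 # difficulty nudged up by one step
```

Clearing the window after each change gives the player time to adapt before the next adjustment. Without it, the level could keep ratcheting on the basis of outcomes recorded at the old difficulty.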

In the third game developed by Chen and his collaborators, Flower, the player controls the wind as it blows a single flower petal through the air; approaching flowers results in the player's petal being followed by other flower petals. Getting closer to flowers affects other features of the virtual world, such as colouring previously dead fields, or activating stationary windmills. The final goal is to blow the breeze that carries color into every part of the gaming world, defeating the dinginess that surrounds the flowers in the city. The game includes no text or dialogue, but is built upon a narrative structure whose basic elements are visual representations and emotional cues.

Chen's latest brainchild is Journey, in which the player takes the role of a red-robed figure in a vast desert populated by supernatural ruins. On the far horizon is a large mountain with a beam of light shooting straight up into the sky, which becomes the natural destination of the adventure. While walking towards the mountain, the avatar can encounter other players, one at a time, if they are online; they cannot speak but can help each other on their journey if they wish. As in the other three games, the aim of Journey is to provoke emotions and feelings that are difficult to put into words, and to produce memorable, inspiring experiences in the player.

Chen’s vision of gaming is rooted in Mihaly Csikszentmihalyi's theory of Flow. According to Csikszentmihalyi, flow is a complex state of consciousness characterized by high levels of concentration and involvement in the task at hand, enjoyment, a positive affective state and intrinsic motivation. This optimal experience is usually associated with activities that involve individuals’ creative abilities. During flow, people typically feel “strong, alert, in effortless control, unselfconscious, and at the peak of their abilities. Both the sense of time and emotional problems seem to disappear, and there is an exhilarating feeling of transcendence…. With such goals, we learn to order the information that enters consciousness and thereby improve the quality of our lives.” (Csikszentmihalyi, M. Flow: The Psychology of Optimal Experience. New York: Harper Perennial, 1991).

The most interesting aspect of Chen’s design philosophy is that his videogames are based on established psychological theories and are purposefully designed to support positive emotions and foster the development of consciousness. From this perspective, Chen’s videogames could be seen as advanced “transpersonal technologies”. As suggested by Roy Ascott, a transpersonal technology is any medium that “enables us to transform our selves, transfer our thoughts and transcend the limitations of our bodies. Transpersonal experience gives us insight into the interconnectedness of all things, the permeability and instability of boundaries, the lack of distinction between part and whole, foreground and background, context and content” (Ascott, R. 1995. The Architecture of Cyberception. In M. Toy (Ed.), Architects in Cyberspace. London: Academy Editions, pp. 38-41).

23:02 Posted in Emotional computing, Serious games, Technology & spirituality

May 07, 2012

Watch-like wrist sensor could gauge the severity of epileptic seizures as accurately as EEG

From the MIT press release

Researchers at MIT and two Boston hospitals provide early evidence that a simple, unobtrusive wrist sensor could gauge the severity of epileptic seizures as accurately as electroencephalograms (EEGs) do — but without the ungainly scalp electrodes and electrical leads. The device could make it possible to collect clinically useful data from epilepsy patients as they go about their daily lives, rather than requiring them to come to the hospital for observation. And if early results are borne out, it could even alert patients when their seizures are severe enough that they need to seek immediate medical attention.

Rosalind Picard, a professor of media arts and sciences at MIT, and her group originally designed the sensors to gauge the emotional states of children with autism, whose outward behavior can be at odds with what they’re feeling. The sensor measures the electrical conductance of the skin, an indicator of the state of the sympathetic nervous system, which controls the human fight-or-flight response.

In a study conducted at Children’s Hospital Boston, the research team — Picard, her student Ming-Zher Poh, neurologist Tobias Loddenkemper and four colleagues from MIT, Children’s Hospital and Brigham and Women’s Hospital — discovered that the higher a patient’s skin conductance during a seizure, the longer it took for the patient’s brain to resume the neural oscillations known as brain waves, which EEG measures.
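The finding above is a correlation: the higher the skin conductance during a seizure, the longer the post-seizure suppression of brain waves. One standard way to quantify such a relationship is Pearson's correlation coefficient, sketched below. The press release does not say which statistic the team used, and the numbers in the example are made up for illustration. They are NOT data from the study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


# Made-up illustrative values, NOT data from the study:
peak_eda_uS = [2.0, 5.5, 9.0, 14.0, 20.0]   # peak skin conductance per seizure (microsiemens)
suppression_s = [5, 20, 35, 50, 80]          # post-seizure EEG suppression (seconds)
print(round(pearson_r(peak_eda_uS, suppression_s), 2))
```

A coefficient close to +1 would mean that larger skin-conductance surges go with longer suppression. That is the kind of relationship that would let a wrist sensor stand in for EEG when judging seizure severity.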

At least one clinical study has shown a correlation between the duration of brain-wave suppression after seizures and the incidence of sudden unexplained death in epilepsy (SUDEP), a condition that claims thousands of lives each year in the United States alone. With SUDEP, death can occur hours after a seizure.

Currently, patients might use a range of criteria to determine whether a seizure is severe enough to warrant immediate medical attention. One of them is duration. But during the study at Children’s Hospital, Picard says, “what we found was that this severity measure had nothing to do with the length of the seizure.” Ultimately, data from wrist sensors could provide crucial information to patients deciding whether to roll over and go back to sleep or get to the emergency room.

Read the full press release

16:59 Posted in Cybertherapy, Pervasive computing, Wearable & mobile

CFP – Brain Computer Interfaces Grand Challenge 2012

(From the CFP website)

Sensors, such as wireless EEG caps, that provide us with information about brain activity are becoming available for use outside the medical domain. As with physiological sensors, the information derived from these sensors can be used as a source for interpreting the user’s activity and intentions. For example, a user can use his or her brain activity to issue commands by using motor imagery. But this control-oriented interaction is unreliable and inefficient compared to other available interaction modalities. Moreover, a user needs to behave as if almost paralyzed (sitting completely still) to generate artifact-free brain activity that can be recognized by the Brain-Computer Interface (BCI).

Of course, BCI systems are improving in various ways: improved sensors, better recognition techniques, software that is more usable, natural and context-aware, and hybridization with physiological sensors and other communication systems. New applications, such as motor recovery and entertainment, are appearing on the horizon and being explored. Testing and validation with target users in home settings is becoming more common. These and other developments are making BCIs increasingly practical for conventional users (persons with severe motor disabilities) as well as non-disabled users. But despite this progress, BCIs remain quite limited as a control interface in real-world settings. BCIs are slow and unreliable, particularly over extended periods with target users. BCIs require expert assistance in many ways; a typical end user today needs help to identify, buy, set up, configure, maintain, repair and upgrade the BCI. User-centered design is underappreciated, with BCIs meeting the goals and abilities of the designer rather than the user. Integration into people's daily lives is just beginning. One reason this integration is problematic is the view of BCI as a control device, which stems mainly from its origin as a control mechanism for people with severe physical disabilities.

In this challenge (organised within the framework of the Call for Challenges at ICMI 2012), we propose to change this viewpoint and consider BCI as an intelligent sensor, similar to a microphone or camera, that can be used in multimodal interaction. A typical example of BCI used for the sonification of brain signals is the installation Staalhemel, created by Christoph de Boeck. Staalhemel is an interactive installation with 80 steel segments suspended over the visitor’s head as he walks through the space. Tiny hammers tap rhythmic patterns on the steel plates, activated by the brainwaves of the visitor, who wears a portable BCI (EEG scanner). Thus, visitors interact directly with their surroundings, in this case an artistic installation.

The main challenges to research and develop BCIs as intelligent sensors include but are not limited to:

- How could BCIs as intelligent sensors be integrated into multimodal human-computer (HCI), human-robot (HRI) and human-human interaction (HHI) applications alongside other modes of input control?

- What constitutes appropriate categories of adaptation (to challenge, to help, to promote positive emotion) in response to physiological data?

- What are the added benefits of this approach for the user experience of HCI, HRI and HHI, and with respect to performance, safety and health?

- How to present the state of the user in the context of HCI or HRI (representation to a machine) compared to HHI (representation to the self or another person)?

- How to design systems that promote trust in the system and protect the privacy of the user?

- What constitutes opportune support for BCI-based intelligent sensors? In other words, how can the interface adapt to information about the user such that the user feels supported rather than distracted?

- What is the user experience of HCI, HRI and HHI enhanced through BCIs as intelligent sensors?

- What are the ethical, legal and societal implications of such technologies? And how can we address these issues in a timely manner?

We solicit papers, demonstrators, videos or design descriptions of possible demonstrators that address the above challenges. Demonstrators and videos should be accompanied by a paper explaining the design. Descriptions of possible demonstrators can be presented through a poster.

Accepted papers will be included in the ICMI conference proceedings, which will be published by ACM as part of their series of International Conference Proceedings. As such the ICMI proceedings will have an ISBN number assigned to it and all papers will have a unique DOI and URL assigned to them. Moreover, all accepted papers will be included in the ACM digital library.

Deadline for submission: June 15, 2012

Notification of acceptance: July 7, 2012

Final paper: August 15, 2012

Mind-controlled robot allows a quadriplegic patient to move virtually in space

Researchers at the Swiss Federal Institute of Technology in Lausanne (EPFL) have successfully demonstrated a robot controlled by the mind of a partially quadriplegic patient in a hospital 62 miles away. The EPFL brain-computer interface system does not require invasive neural implants, since it is based on a special EEG cap fitted with electrodes that record the patient’s neural signals. The patient's task is to imagine moving his paralyzed fingers, and this input is then translated by the BCI system into commands for the robot.

16:06 Posted in AI & robotics, Brain-computer interface, Telepresence & virtual presence

Social influences on neuroplasticity: stress and interventions to promote well-being

Nat Neurosci. 2012;15(5):689-95

Authors: Davidson RJ, McEwen BS

Experiential factors shape the neural circuits underlying social and emotional behavior from the prenatal period to the end of life. These factors include both incidental influences, such as early adversity, and intentional influences that can be produced in humans through specific interventions designed to promote prosocial behavior and well-being. Here we review important extant evidence in animal models and humans. Although the precise mechanisms of plasticity are still not fully understood, moderate to severe stress appears to increase the growth of several sectors of the amygdala, whereas the effects in the hippocampus and prefrontal cortex tend to be opposite. Structural and functional changes in the brain have been observed with cognitive therapy and certain forms of meditation and lead to the suggestion that well-being and other prosocial characteristics might be enhanced through training.

15:52 Posted in Brain training & cognitive enhancement, Mental practice & mental simulation

Apr 29, 2012

The SoLoMo manifesto

The SoLoMo Manifesto is a freely downloadable white paper that explores the mega-markets of social, local and mobile as a cohesive ecosystem of marketing technologies.

The manifesto was produced by MomentFeed, a location-based marketing platform for the enterprise. It's an interesting read. You can download this free white paper here.

13:18 Posted in Locative media, Pervasive computing

Apr 20, 2012

Enhancement of motor imagery-related cortical activation during first-person observation measured by functional near-infrared spectroscopy

Eur J Neurosci. 2012 Apr 18;

Authors: Kobashi N, Holper L, Scholkmann F, Kiper D, Eng K

Abstract. It is known that activity in secondary motor areas during observation of human limbs performing actions is affected by the observer's viewpoint, with first-person views generally leading to stronger activation. However, previous neuroimaging studies have displayed limbs in front of the observer, providing an offset view of the limbs without a truly first-person viewpoint. It is unknown to what extent these pseudo-first-person viewpoints have affected the results published to date. In this experiment, we used a horizontal two-dimensional mirrored display that places virtual limbs at the correct egocentric position relative to the observer. We compared subjects using the mirrored and conventional displays while recording over the premotor cortex with functional near-infrared spectroscopy. Subjects watched a first-person view of virtual arms grasping incoming balls on-screen; they were instructed to either imagine the virtual arm as their own [motor imagery during observation (MIO)] or to execute the movements [motor execution (ME)]. With repeated-measures anova, the hemoglobin difference as a direct index of cortical oxygenation revealed significant main effects of the factors hemisphere (P = 0.005) and condition (P ≤ 0.001) with significant post hoc differences between MIO-mirror and MIO-conventional (P = 0.024). These results suggest that the horizontal mirrored display provides a more accurate first-person view, enhancing subjects' ability to perform motor imagery during observation. Our results may have implications for future experimental designs involving motor imagery, and may also have applications in video gaming and virtual reality therapy, such as for patients following stroke.
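The abstract uses the "hemoglobin difference" as its index of cortical oxygenation. In the fNIRS literature this is commonly computed as the change in oxygenated hemoglobin minus the change in deoxygenated hemoglobin, each taken relative to a pre-stimulus baseline. The sketch below follows that convention. The baseline length and the sample values are hypothetical, and the authors' exact preprocessing pipeline is not described in the abstract.

```python
def baseline_correct(signal, n_baseline):
    """Subtract the mean of the first n_baseline samples (the pre-stimulus period)."""
    base = sum(signal[:n_baseline]) / n_baseline
    return [s - base for s in signal]


def hemoglobin_difference(hbo, hbr, n_baseline):
    """HbDiff = delta[HbO2] - delta[HHb], computed sample by sample."""
    if len(hbo) != len(hbr):
        raise ValueError("HbO2 and HHb series must have the same length")
    d_hbo = baseline_correct(hbo, n_baseline)
    d_hbr = baseline_correct(hbr, n_baseline)
    return [o - r for o, r in zip(d_hbo, d_hbr)]


# Hypothetical samples from one channel (arbitrary concentration units).
print(hemoglobin_difference([1, 1, 2, 3], [1, 1, 0.5, 0], n_baseline=2))
```

Activation typically raises HbO2 while lowering HHb, so the difference amplifies the task-related change while partly cancelling fluctuations that affect both signals in the same direction.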

19:50 Posted in Mental practice & mental simulation, Virtual worlds

Neurofeedback using real-time near-infrared spectroscopy enhances motor imagery related cortical activation

PLoS One. 2012;7(3):e32234

Authors: Mihara M, Miyai I, Hattori N, Hatakenaka M, Yagura H, Kawano T, Okibayashi M, Danjo N, Ishikawa A, Inoue Y, Kubota K

Abstract. Accumulating evidence indicates that motor imagery and motor execution share common neural networks. Accordingly, mental practices in the form of motor imagery have been implemented in rehabilitation regimes of stroke patients with favorable results. Because direct monitoring of motor imagery is difficult, feedback of cortical activities related to motor imagery (neurofeedback) could help to enhance efficacy of mental practice with motor imagery. To determine the feasibility and efficacy of a real-time neurofeedback system mediated by near-infrared spectroscopy (NIRS), two separate experiments were performed. Experiment 1 was used in five subjects to evaluate whether real-time cortical oxygenated hemoglobin signal feedback during a motor execution task correlated with reference hemoglobin signals computed off-line. Results demonstrated that the NIRS-mediated neurofeedback system reliably detected oxygenated hemoglobin signal changes in real-time. In Experiment 2, 21 subjects performed motor imagery of finger movements with feedback from relevant cortical signals and irrelevant sham signals. Real neurofeedback induced significantly greater activation of the contralateral premotor cortex and greater self-assessment scores for kinesthetic motor imagery compared with sham feedback. These findings suggested the feasibility and potential effectiveness of a NIRS-mediated real-time neurofeedback system on performance of kinesthetic motor imagery. However, these results warrant further clinical trials to determine whether this system could enhance the effects of mental practice in stroke patients.
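A real-time neurofeedback system can only use samples that have already arrived, whereas the off-line reference signals in Experiment 1 could use the whole recording. Below is a minimal sketch of a causal feedback value: a moving average of the oxygenated-hemoglobin change relative to a resting baseline. The class name, the window length and the baseline handling are hypothetical; the abstract does not describe the filtering actually used.

```python
from collections import deque


class OnlineFeedback:
    """Causal feedback: moving average of oxy-Hb change relative to a rest baseline."""

    def __init__(self, baseline_mean: float, window: int = 5):
        self.baseline_mean = baseline_mean   # mean oxy-Hb during a preceding rest period
        self.buffer = deque(maxlen=window)   # keeps only the most recent `window` samples

    def update(self, oxy_hb_sample: float) -> float:
        """Consume one new sample and return the value to display to the participant."""
        self.buffer.append(oxy_hb_sample - self.baseline_mean)
        return sum(self.buffer) / len(self.buffer)


fb = OnlineFeedback(baseline_mean=1.0, window=2)
for sample in [1.0, 3.0, 5.0]:
    print(fb.update(sample))   # 0.0, then 1.0, then 3.0
```

A sham condition like the one in Experiment 2 would feed the same display from an irrelevant signal instead of the premotor channel, so participants cannot tell the two apart from the interface alone.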

19:39 Posted in Biofeedback & neurofeedback, Mental practice & mental simulation

Mental workload during brain-computer interface training

Ergonomics. 2012 Apr 16;

Authors: Felton EA, Williams JC, Vanderheiden GC, Radwin RG

Abstract. It is not well understood how people perceive the difficulty of performing brain-computer interface (BCI) tasks, which specific aspects of mental workload contribute the most, and whether there is a difference in perceived workload between participants who are able-bodied and disabled. This study evaluated mental workload using the NASA Task Load Index (TLX), a multi-dimensional rating procedure with six subscales: Mental Demands, Physical Demands, Temporal Demands, Performance, Effort, and Frustration. Able-bodied and motor disabled participants completed the survey after performing EEG-based BCI Fitts' law target acquisition and phrase spelling tasks. The NASA-TLX scores were similar for able-bodied and disabled participants. For example, overall workload scores (range 0-100) for 1D horizontal tasks were 48.5 (SD = 17.7) and 46.6 (SD = 10.3), respectively. The TLX can be used to inform the design of BCIs that will have greater usability by evaluating subjective workload between BCI tasks, participant groups, and control modalities. Practitioner Summary: Mental workload of brain-computer interfaces (BCI) can be evaluated with the NASA Task Load Index (TLX). The TLX is an effective tool for comparing subjective workload between BCI tasks, participant groups (able-bodied and disabled), and control modalities. The data can inform the design of BCIs that will have greater usability.
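The abstract reports overall TLX workload on a 0-100 scale but does not say which scoring variant was used. Both common variants are sketched below. "Raw TLX" is the unweighted mean of the six subscale ratings. "Weighted TLX" multiplies each rating by the number of times that subscale was picked in the 15 pairwise comparisons between subscales, then divides by 15. The dictionary keys and the example ratings are hypothetical.

```python
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings):
    """Raw TLX: unweighted mean of the six subscale ratings (each on a 0-100 scale)."""
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)


def weighted_tlx(ratings, weights):
    """Weighted TLX: weights are tallies from the 15 pairwise comparisons of subscales."""
    if sum(weights[s] for s in SUBSCALES) != 15:
        raise ValueError("weights from 15 pairwise comparisons must sum to 15")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15


# Hypothetical ratings and pairwise-comparison tallies for one participant.
ratings = {"mental": 90, "physical": 30, "temporal": 30,
           "performance": 30, "effort": 30, "frustration": 30}
weights = {"mental": 5, "physical": 0, "temporal": 2,
           "performance": 3, "effort": 4, "frustration": 1}
print(raw_tlx(ratings), weighted_tlx(ratings, weights))  # 40.0 50.0
```

The two scores can differ noticeably: here the participant rated mental demand highest and also judged it the most important source of workload, so the weighted score comes out above the raw one.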

19:37 Posted in Brain-computer interface, Research tools

Just 10 days left to apply for a place at 1st Summer School on Human-Computer Confluence

HCC, Human-Computer Confluence, is an ambitious research program studying how the emerging symbiotic relation between humans and computing devices can enable radically new forms of sensing, perception, interaction, and understanding.

The Summer School will take place in Milan, Italy, on 18-20 July 2012, hosted and organized by the Doctoral School in Psychology of the Faculty of Psychology at the Università Cattolica del Sacro Cuore di Milano.

The specific objectives of the Summer School are, firstly, to provide selected and highly-motivated participants hands-on experience with question-driven Human-Computer Confluence projects, applications and experimental paradigms, and secondly, to bring together project leaders, researchers and students in order to work on inter-disciplinary challenges in the field of HCC. Participants will be assigned to different teams, where they will be encouraged to work creatively and collaboratively on a specific topic.

The Summer School has up to 40 places for students interested in the emerging symbiotic relationship between humans and computing devices. There is no registration fee for the Summer School and financial aid will be available for a significant number of students towards travel and accommodation.

We look forward to your application!

19:34

Apr 04, 2012

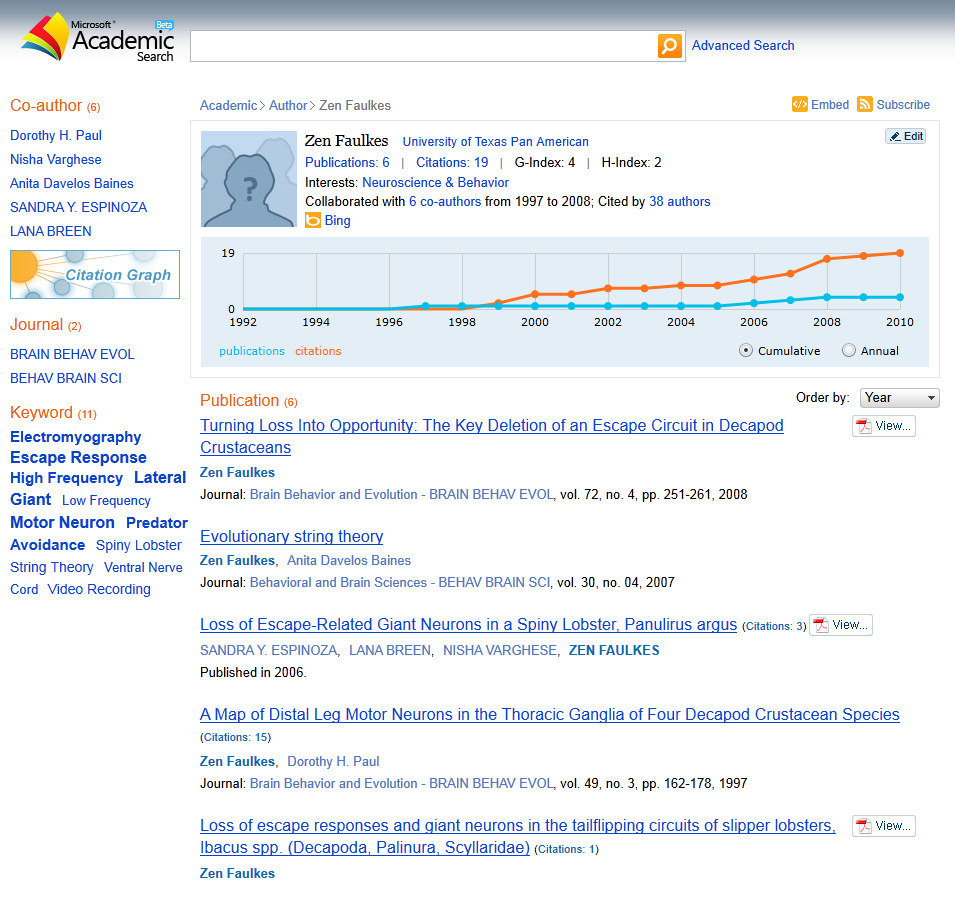

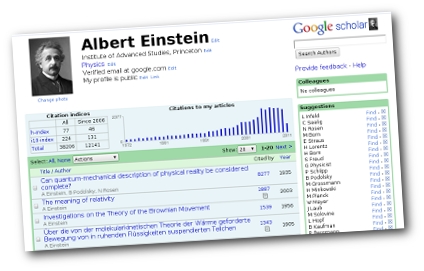

Microsoft Academic Search: nice Science 2.0 service, wrong H-index calculation!

Yesterday I came across Microsoft Academic Search, supposedly the main competitor of Google Scholar Citations.

Although the service provides plenty of interesting and useful features (including customizable author profile pages, a visual explorer, an open API and many others), I don't like AT ALL the fact that it publishes an author's profile, including the publication profile and list, without asking for permission (as Google Scholar does).

What is even worse is that the published data are, in most cases, incomplete or incorrect (or at least they seemed incorrect for most of the authors I included in my search). And this is not limited to the list of publications (which could be understandable); it also affects the calculation of the H-index (which measures both the productivity and the impact of a scholar, based on the set of the author's most cited papers and the number of citations they have received in other publications).
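To see why incomplete publication lists distort the H-index, recall its definition: an author has index h if h of their papers have each been cited at least h times. A short Python sketch follows. The citation counts are hypothetical, not taken from any real profile.

```python
def h_index(citations):
    """Largest h such that at least h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)   # most cited paper first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


papers = [10, 8, 5, 4, 3]          # hypothetical citation counts
print(h_index(papers))             # 4
print(h_index([10, 8, 5, 3]))      # 3: the paper with 4 citations is missing
```

A single missing paper is enough to lower the index by one, which is exactly the kind of silent underestimate described above.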

Since in the last few years the H-index has become critical for measuring a researcher's importance, displaying an inaccurate value of this index on an author's profile is NOT appropriate. While Microsoft may object that its service is open for users to edit the content (indeed, the Help Center states that "If you find any wrong or out-of-date information about author profile, publication profile or author publication list, you can make corrections or updates directly online"), my point is that you CANNOT FORCE someone to correct potentially wrong data about their scientific productivity.

One could even suspect that Microsoft purposefully underestimates the H-index to encourage users to join the service in order to edit their data. I do not think that this is the case, but at the same time, I cannot exclude this possibility.

Anyway, these are my two cents and I welcome your comments.

P.S. Interested in other Science 2.0 topics? Join us on LinkedIn

11:30 Posted in Research tools, Social Media

Mar 31, 2012

Blogging from my iPad

BlogSpirit has developed a nice app that allows posting from the iPad/iPhone.

I am just trying it out.

If you are able to read this post, well, it does work (btw, this is Matilde)

17:03 Posted in Social Media