Aug 07, 2013

On Phenomenal Consciousness

A recent introductory talk by Frank C. Jackson on the problem that consciousness and qualia present to physicalism.

14:00 Posted in Research tools, Telepresence & virtual presence | Permalink | Comments (0)

Welcome to wonderland: the influence of the size and shape of a virtual hand on the perceived size and shape of virtual objects

PLoS One. 2013;8(7):e68594

Authors: Linkenauger SA, Leyrer M, Bülthoff HH, Mohler BJ

The notion of body-based scaling suggests that our body and its action capabilities are used to scale the spatial layout of the environment. Here we present four studies supporting this perspective by showing that the hand acts as a metric which individuals use to scale the apparent sizes of objects in the environment. However, to test this, one must be able to manipulate the size and/or dimensions of the perceiver's hand, which is difficult in the real world because hand dimensions cannot be changed. To overcome this limitation, we used virtual reality to manipulate the dimensions of participants' fully tracked virtual hands and investigate their influence on the perceived size and shape of virtual objects. In a series of experiments, using several measures, we show that individuals' estimations of the sizes of virtual objects differ depending on the size of their virtual hand in the direction consistent with the body-based scaling hypothesis. Additionally, we found that these effects were specific to participants' virtual hands rather than another avatar's hands or a salient familiar-sized object. While these studies provide support for a body-based approach to the scaling of the spatial layout, they also demonstrate the influence of virtual bodies on perception of virtual environments.

13:56 Posted in Research tools, Telepresence & virtual presence, Virtual worlds | Permalink | Comments (0)

Using avatars to model weight loss behaviors: participant attitudes and technology development.

J Diabetes Sci Technol. 2013;7(4):1057-65

Authors: Napolitano MA, Hayes S, Russo G, Muresu D, Giordano A, Foster GD

BACKGROUND: Virtual reality and other avatar-based technologies are potential methods for demonstrating and modeling weight loss behaviors. This study examined avatar-based technology as a tool for modeling weight loss behaviors. METHODS: This study consisted of two phases: (1) an online survey to obtain feedback about using avatars for modeling weight loss behaviors and (2) technology development and usability testing to create an avatar-based technology program for modeling weight loss behaviors. RESULTS: Results of phase 1 (n = 128) revealed that interest was high, with 88.3% stating that they would participate in a program that used an avatar to help practice weight loss skills in a virtual environment. In phase 2, avatars and modules to model weight loss skills were developed. Eight women were recruited to participate in a 4-week usability test, with 100% reporting they would recommend the program and that it influenced their diet/exercise behavior. Most women (87.5%) indicated that the virtual models were helpful. After 4 weeks, average weight loss was 1.6 kg (standard deviation = 1.7). CONCLUSIONS: This investigation revealed a high level of interest in an avatar-based program, with formative work indicating promise. Given the high costs associated with in vivo exposure and practice, this study demonstrates the potential use of avatar-based technology as a tool for modeling weight loss behaviors.

13:52 Posted in Cybertherapy, Telepresence & virtual presence, Virtual worlds | Permalink | Comments (0)

What Color is My Arm? Changes in Skin Color of an Embodied Virtual Arm Modulates Pain Threshold

Front Hum Neurosci. 2013;7:438

Authors: Martini M, Perez-Marcos D, Sanchez-Vives MV

It has been demonstrated that visual inputs can modulate pain. However, the influence of skin color on pain perception is unknown. Red skin is associated with inflamed, hot and more sensitive skin, while blue is associated with cyanotic, cold skin. We aimed to test whether the color of the skin would alter the heat pain threshold. To this end, we used an immersive virtual environment where we induced embodiment of a virtual arm that was co-located with the real one and seen from a first-person perspective. Virtual reality allowed us to dynamically modify the color of the skin of the virtual arm. In order to test pain threshold, increasing ramps of heat stimulation applied to the participants' arm were delivered concomitantly with the gradual intensification of different colors on the embodied avatar's arm. We found that a reddened arm significantly decreased the pain threshold compared with normal and bluish skin. This effect was specific to seeing red on the arm: seeing red in a spot outside the arm did not decrease pain threshold. These results demonstrate an influence of skin color on pain perception. This top-down modulation of pain through visual input suggests a potential use of embodied virtual bodies for pain therapy.

13:49 Posted in Biofeedback & neurofeedback, Research tools, Telepresence & virtual presence, Virtual worlds | Permalink | Comments (0)

What Color Is Your Night Light? It May Affect Your Mood

When it comes to some of the health hazards of light at night, a new study suggests that the color of the light can make a big difference.

Read full story on Science Daily

13:46 Posted in Emotional computing, Future interfaces, Research tools | Permalink | Comments (0)

Phubbing: the war against anti-social phone use

Via Textually.org

Don't you just hate it when someone snubs you by looking at their phone instead of paying attention? The Stop Phubbing campaign group certainly does. The Guardian reports.

In a list of "Disturbing Phubbing Stats" on their website, of note:

-- If phubbing were a plague it would decimate six Chinas

-- 97% of people claim their food tasted worse while being a victim of phubbing

-- 92% of repeat phubbers go on to become politicians

So it's really just a joke site? Well, a joke site with a serious message about our growing estrangement from our fellow human beings. But mostly a joke site, yes.

Read full article.

13:43 Posted in Ethics of technology | Permalink | Comments (0)

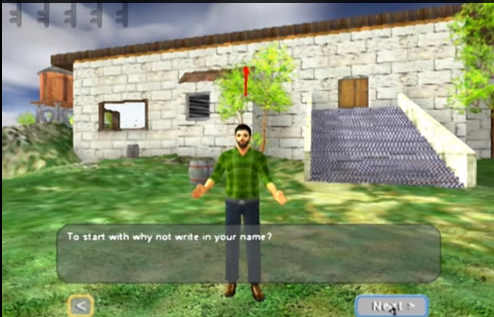

The Computer Game That Helps Therapists Chat to Adolescents With Mental Health Problems

Adolescents with mental health problems are particularly hard for therapists to engage. But a new computer game is providing a healthy conduit for effective communication between them.

Read the full story on MIT Technology Review

13:40 Posted in Cybertherapy, Telepresence & virtual presence, Virtual worlds | Permalink | Comments (0)

OpenGlass Project Makes Google Glass Useful for the Visually Impaired

Re-blogged from Medgadget

Google Glass may have been developed to transform the way people see the world around them, but thanks to Dapper Vision’s OpenGlass Project, one doesn’t even need to be able to see to experience the Silicon Valley tech giant’s new spectacles.

Harnessing the power of Google Glass’ built-in camera, the cloud, and the “hive-mind”, visually impaired users will be able to know what’s in front of them. The system consists of two components: Question-Answer uploads pictures taken by the user to Amazon’s Mechanical Turk and Twitter for the public to help identify, and Memento takes video from Glass and uses image matching to identify objects from a database created with the help of sighted users. Information about what the Glass wearer “sees” is read aloud to the user via bone conduction speakers.

13:37 Posted in Augmented/mixed reality, Wearable & mobile | Permalink | Comments (0)

Pupil responses allow communication in locked-in syndrome patients

Josef Stoll et al., Current Biology, Volume 23, Issue 15, R647-R648, 5 August 2013

For patients with severe motor disabilities, a robust means of communication is a crucial factor for their well-being. We report here that pupil size measured by a bedside camera can be used to communicate with patients with locked-in syndrome. With the same protocol we demonstrate command-following for a patient in a minimally conscious state, suggesting its potential as a diagnostic tool for patients whose state of consciousness is in question. Importantly, neither training nor individual adjustment of our system’s decoding parameters was required for successful decoding of patients’ responses.

Paper full text PDF

Image credit: Flickr user Beth77

13:25 Posted in Brain-computer interface, Neurotechnology & neuroinformatics | Permalink | Comments (0)

German test of the controllability of motor imagery in older adults

Z Gerontol Geriatr. 2013 Aug 3;

Authors: Schott N

Abstract. After a person is instructed to imagine a certain movement, there is no way to verify whether the person is actually doing what was asked. The purpose of this study was to validate the German Test of the Controllability of Motor Imagery ("Tests zur Kontrollierbarkeit von Bewegungsvorstellungen", TKBV). A total sample of 102 men [mean 55.6, standard deviation (SD) 25.1] and 93 women (mean 59.2, SD 24.0) ranging in age from 18 to 88 years completed the TKBV. Two conditions were performed: a recognition (REC) and a regeneration (REG) test. In both conditions, participants had to follow six consecutive instructions while imagining the posture of their own body, moving only one body part (head, arms, legs, trunk) per instruction. On the regeneration test, participants had to actually produce the final position. On the recognition test, they had to select the one picture among five that matched their imagery. Exploratory factor analysis showed the proposed two-dimensional solution: (1) the ability to control the body schema, and (2) the ability to transform a visual image. Cronbach's α values for the two dimensions of the TKBV were 0.89 and 0.73, respectively. The scales correlated weakly with convergent measures assessing mental chronometry (Timed-Up-and-Go test, rREG = -0.33, rREC = -0.31) and the vividness of motor imagery (MIQvis, rREG = 0.14, rREC = 0.14; MIQkin, rREG = 0.11, rREC = 0.13). Criterion validity of the TKBV was established by statistically significant correlations between the subscales and the Corsi block tapping test (BTT, rREG = 0.45, rREC = 0.38) and physical activity (rREG = 0.50, rREC = 0.36). The TKBV is a valid instrument for assessing motor imagery. Thus, it is an important and helpful tool in neurologic and orthopedic rehabilitation.
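For readers unfamiliar with the reliability figure quoted in the abstract, here is a minimal sketch of how Cronbach's α is usually computed from item scores. It uses the standard textbook formula, α = k/(k-1) · (1 - Σ item variances / variance of total scores). The function name and the toy data are illustrative assumptions and are not taken from the study.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items.

    `items` is a list of score lists, one list per test item, each holding
    one score per respondent (all lists the same length).
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    # Total score of each respondent across all items.
    totals = [sum(scores) for scores in zip(*items)]
    # Sum of the sample variances of the individual items.
    item_variance_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical toy data: three items answered by five respondents.
toy_items = [
    [3, 4, 2, 5, 4],
    [2, 4, 3, 5, 3],
    [3, 5, 2, 4, 4],
]
print(round(cronbach_alpha(toy_items), 2))
```

Values close to 1 indicate that the items vary together, which is what the reported 0.89 and 0.73 express for the two TKBV dimensions.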

13:20 Posted in Mental practice & mental simulation | Permalink | Comments (0)

Detecting delay in visual feedback of an action as a monitor of self recognition

Exp Brain Res. 2012 Oct;222(4):389-97

Authors: Hoover AE, Harris LR

Abstract. How do we distinguish "self" from "other"? The correlation between willing an action and seeing it occur is an important cue. We exploited the fact that this correlation needs to occur within a restricted temporal window in order to obtain a quantitative assessment of when a body part is identified as "self". We measured the threshold and sensitivity (d') for detecting a delay between movements of the finger (of both the dominant and non-dominant hands) and visual feedback as seen from four visual perspectives (the natural view, and mirror-reversed and/or inverted views). Each trial consisted of one presentation with minimum delay and another with a delay of between 33 and 150 ms. Participants indicated which presentation contained the delayed view. We varied the amount of efference copy available for this task by comparing performances for discrete movements and continuous movements. Discrete movements are associated with a stronger efference copy. Sensitivity to detect asynchrony between visual and proprioceptive information was significantly higher when movements were viewed from a "plausible" self perspective compared with when the view was reversed or inverted. Further, we found differences in performance between dominant and non-dominant hand finger movements across the continuous and single movements. Performance varied with the viewpoint from which the visual feedback was presented and on the efferent component such that optimal performance was obtained when the presentation was in the normal natural orientation and clear efferent information was available. Variations in sensitivity to visual/non-visual temporal incongruence with the viewpoint in which a movement is seen may help determine the arrangement of the underlying visual representation of the body.
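As a rough illustration of the sensitivity measure mentioned above: when each trial offers two presentations and the participant picks the one containing the delay, d' is commonly estimated from the proportion of correct choices as d' = √2 · z(Pc), where z is the inverse of the standard normal cumulative distribution. The sketch below assumes that convention. The function name and the clamping of extreme proportions by 1/(2N) are illustrative choices, not details reported by the authors.

```python
from math import sqrt
from statistics import NormalDist


def dprime_two_interval(n_correct, n_trials):
    """Estimate d' for a two-interval forced-choice task from the hit count."""
    if n_trials <= 0 or not 0 <= n_correct <= n_trials:
        raise ValueError("need 0 <= n_correct <= n_trials and n_trials > 0")
    proportion_correct = n_correct / n_trials
    # Assumption: clamp away from 0 and 1 so the z-transform stays finite.
    margin = 1 / (2 * n_trials)
    proportion_correct = min(max(proportion_correct, margin), 1 - margin)
    return sqrt(2) * NormalDist().inv_cdf(proportion_correct)


# Chance performance (50% correct) gives d' = 0; better accuracy gives larger d'.
for correct in (50, 76, 95):
    print(correct, round(dprime_two_interval(correct, 100), 2))
```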

13:18 Posted in Research tools, Telepresence & virtual presence | Permalink | Comments (0)

First Google Glass use for real-time location of where multiple viewers are looking

13:14 | Permalink | Comments (0)

Hive-mind solves tasks using Google Glass ant game

Re-blogged from New Scientist

Glass could soon be used for more than just snapping pics of your lunchtime sandwich. A new game will connect Glass wearers to a virtual ant colony vying for prizes by solving real-world problems that vex traditional crowdsourcing efforts.

Crowdsourcing is most famous for collaborative projects like Wikipedia and "games with a purpose" like FoldIt, which turns the calculations involved in protein folding into an online game. All require users to log in to a specific website on their PC.

Now Daniel Estrada of the University of Illinois in Urbana-Champaign and Jonathan Lawhead of Columbia University in New York are seeking to bring crowdsourcing to Google's wearable computer, Glass.

The pair have designed a game called Swarm! that puts a Glass wearer in the role of an ant in a colony. Similar to the pheromone trails laid down by ants, players leave virtual trails on a map as they move about. These behave like real ant trails, fading away with time unless reinforced by other people travelling the same route. Such augmented reality games already exist – Google's Ingress, for one – but in Swarm! the tasks have real-world applications.
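To make the trail mechanic concrete, here is a minimal sketch of a fading, reinforceable trail map. The article only says that trails fade with time unless other players reinforce them. The exponential decay, the half-life parameter, the grid-cell keys and every name below are assumptions for illustration, not the actual Swarm! implementation.

```python
from dataclasses import dataclass, field


@dataclass
class TrailMap:
    """Pheromone-style trails that decay over time and grow when reinforced."""

    half_life_s: float = 600.0  # assumed: strength halves every 10 minutes
    # Maps a map cell to (strength at last update, time of last update).
    cells: dict = field(default_factory=dict)

    def read(self, cell, now):
        """Current trail strength at `cell`, after decay since the last update."""
        strength, updated_at = self.cells.get(cell, (0.0, now))
        return strength * 0.5 ** ((now - updated_at) / self.half_life_s)

    def deposit(self, cell, now, amount=1.0):
        """A player passes through `cell`, reinforcing whatever trail remains."""
        self.cells[cell] = (self.read(cell, now) + amount, now)


trails = TrailMap(half_life_s=10.0)
trails.deposit((3, 4), now=0.0)     # first player walks through the cell
print(trails.read((3, 4), now=10))  # one half-life later the trail has faded to 0.5
trails.deposit((3, 4), now=10.0)    # a second player reinforces it
print(trails.read((3, 4), now=10))  # 1.5
```

Storing the last update time and decaying lazily on read avoids having to sweep the whole map on a timer, which is one reasonable design for a sparse map with many untouched cells.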

Swarm! players seek out virtual resources to benefit their colony, such as food, and must avoid crossing the trails of other colony members. They can also monopolise a resource pool by taking photos of its real-world location.

To gain further resources for their colony, players can carry out real-world tasks. For example, if the developers wanted to create a map of the locations of every power outlet in an airport, they could reward players with virtual food for every photo of a socket they took. The photos and location data recorded by Glass could then be used to generate a map that anyone could use. Such problems can only be solved by people out in the physical world, yet the economic incentives aren't strong enough for, say, the airport owner to provide such a map.

Estrada and Lawhead hope that by turning tasks such as these into games, Swarm! will capture the group intelligence ant colonies exhibit when they find the most efficient paths between food sources and the home nest.

Read full story

13:12 Posted in Augmented/mixed reality, Creativity and computers, ICT and complexity, Wearable & mobile | Permalink | Comments (0)

International Conference on Physiological Computing Systems

7-9 January 2014, Lisbon, Portugal

Physiological data in its different dimensions, either bioelectrical, biomechanical, biochemical or biophysical, and collected through specialized biomedical devices, video and image capture or other sources, is opening new boundaries in the field of human-computer interaction into what can be defined as Physiological Computing. PhyCS is the annual meeting of the physiological interaction and computing community, and serves as the main international forum for engineers, computer scientists and health professionals, interested in outstanding research and development that bridges the gap between physiological data handling and human-computer interaction.

Regular Paper Submission Extension: September 15, 2013

Regular Paper Authors Notification: October 23, 2013

Regular Paper Camera Ready and Registration: November 5, 2013

Jul 30, 2013

How it feels (through Google Glass)

14:12 Posted in Augmented/mixed reality, Wearable & mobile | Permalink | Comments (0)

Jul 23, 2013

A mobile data collection platform for mental health research

22:57 Posted in Pervasive computing, Research tools, Self-Tracking, Wearable & mobile | Permalink | Comments (0)

Augmented Reality - Projection Mapping

22:50 Posted in Augmented/mixed reality, Blue sky, Cyberart | Permalink | Comments (0)

The beginning of infinity

A slightly old video, but still inspiring...

THE BEGINNING OF INFINITY from Jason Silva on Vimeo.

22:46 Posted in Blue sky | Permalink | Comments (0)

Neural Reorganization Accompanying Upper Limb Motor Rehabilitation from Stroke with Virtual Reality-Based Gesture Therapy

Top Stroke Rehabil. 2013 May-June 1;20(3):197-209

Authors: Orihuela-Espina F, Fernández Del Castillo I, Palafox L, Pasaye E, Sánchez-Villavicencio I, Leder R, Franco JH, Sucar LE

Background: Gesture Therapy is an upper limb virtual reality rehabilitation-based therapy for stroke survivors. It promotes motor rehabilitation by challenging patients with simple computer games representative of daily activities for self-support. This therapy has demonstrated clinical value, but the underlying functional neural reorganization changes associated with this therapy that are responsible for the behavioral improvements are not yet known. Objective: We sought to quantify the occurrence of neural reorganization strategies that underlie motor improvements as they occur during the practice of Gesture Therapy and to identify those strategies linked to a better prognosis. Methods: Functional magnetic resonance imaging (fMRI) neuroscans were longitudinally collected at 4 time points during Gesture Therapy administration to 8 patients. Behavioral improvements were monitored using the Fugl-Meyer scale and Motricity Index. Activation loci were anatomically labelled and translated to reorganization strategies. Strategies were quantified by counting the number of active clusters in brain regions tied to them. Results: All patients demonstrated significant behavioral improvements (P < .05). Contralesional activation of the unaffected motor cortex, cerebellar recruitment, and compensatory prefrontal cortex activation were the most prominent strategies evoked. A strong and significant correlation between motor dexterity upon commencing therapy and total recruited activity was found (r² = 0.80; P < .05), and overall brain activity during therapy was inversely related to normalized behavioral improvements (r² = 0.64; P < .05). Conclusions: Prefrontal cortex and cerebellar activity are the driving forces of the recovery associated with Gesture Therapy. The relation between behavioral and brain changes suggests that those with stronger impairment benefit the most from this paradigm.

22:32 | Permalink | Comments (0)

Illusory ownership of a virtual child body causes overestimation of object sizes and implicit attitude changes

Proc Natl Acad Sci USA. 2013 Jul 15;

Authors: Banakou D, Groten R, Slater M

Abstract. An illusory sensation of ownership over a surrogate limb or whole body can be induced through specific forms of multisensory stimulation, such as synchronous visuotactile tapping on the hidden real and visible rubber hand in the rubber hand illusion. Such methods have been used to induce ownership over a manikin and a virtual body that substitute for the real body, as seen from first-person perspective, through a head-mounted display. However, the perceptual and behavioral consequences of such transformed body ownership have hardly been explored. In Exp. 1, immersive virtual reality was used to embody 30 adults as a 4-y-old child (condition C), and as an adult body scaled to the same height as the child (condition A), experienced from the first-person perspective, and with virtual and real body movements synchronized. The result was a strong body-ownership illusion equally for C and A. Moreover, there was an overestimation of the sizes of objects compared with a nonembodied baseline, which was significantly greater for C compared with A. An implicit association test showed that C resulted in significantly faster reaction times for the classification of self with child-like compared with adult-like attributes. Exp. 2 with an additional 16 participants extinguished the ownership illusion by using visuomotor asynchrony, with all else equal. The size-estimation and implicit association test differences between C and A were also extinguished. We conclude that there are perceptual and probably behavioral correlates of body-ownership illusions that occur as a function of the type of body in which embodiment occurs.

22:28 Posted in Research tools, Telepresence & virtual presence, Virtual worlds | Permalink | Comments (0)