May 26, 2016

From User Experience (UX) to Transformative User Experience (T-UX)

In 1999, Joseph Pine and James Gilmore wrote a seminal book titled “The Experience Economy” (Harvard Business School Press, Boston, MA) that theorized the shift from a service-based economy to an experience-based economy.

According to these authors, in the new experience economy the goal of the purchase is no longer to own a product (be it a good or service), but to use it in order to enjoy a compelling experience. An experience, thus, is a whole new type of offer: in contrast to commodities, goods and services, it is designed to be as personal and memorable as possible. Just as in a theatrical performance, companies stage meaningful events to engage customers in a memorable and personal way, by offering activities that provide engaging and rewarding experiences.

Indeed, if one looks back at the past ten years, the concept of experience has become more central to several fields, including tourism, architecture, and – perhaps more relevant for this column – to human-computer interaction, with the rise of “User Experience” (UX).

The concept of UX was introduced by Donald Norman in a 1995 article published in the CHI proceedings (D. Norman, J. Miller, A. Henderson: What You See, Some of What's in the Future, And How We Go About Doing It: HI at Apple Computer. Proceedings of CHI 1995, Denver, Colorado, USA). Norman argued that focusing exclusively on usability attributes (i.e. ease of use, efficacy, effectiveness) when designing an interactive product is not enough; one should take into account the whole experience of the user with the system, including users’ emotional and contextual needs. Since then, the UX concept has assumed increasing importance in HCI. As McCarthy and Wright emphasized in their book “Technology as Experience” (MIT Press, 2004):

“In order to do justice to the wide range of influences that technology has in our lives, we should try to interpret the relationship between people and technology in terms of the felt life and the felt or emotional quality of action and interaction.” (p. 12).

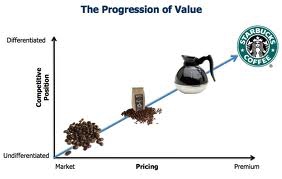

However, according to Pine and Gilmore, experience may not be the last step of what they call the “Progression of Economic Value”. They speculated further into the future, identifying the “Transformation Economy” as the likely next phase. In their view, while experiences are essentially memorable events that stimulate the sensorial and emotional levels, transformations go much further, in that they are the result of a series of experiences staged by companies to guide customers in learning, taking action and eventually achieving their aspirations and goals.

In Pine and Gilmore’s terms, an aspirant is the individual who seeks advice for personal change (e.g. a better figure, a new career, and so forth), while the provider of this change (a dietitian, a university) is an elicitor. The elicitor guides the aspirant through a series of experiences that are designed with a certain purpose and goals. According to Pine and Gilmore, the main difference between an experience and a transformation is that the latter occurs when an experience is customized:

“When you customize an experience to make it just right for an individual - providing exactly what he needs right now - you cannot help changing that individual. When you customize an experience, you automatically turn it into a transformation, which companies create on top of experiences (recall that phrase: “a life-transforming experience”), just as they create experiences on top of services and so forth” (p. 244).

A further key difference between experiences and transformations concerns their effects: because an experience is inherently personal, no two people can have the same one. Likewise, no individual can undergo the same transformation twice: the second time it’s attempted, the individual would no longer be the same person (p. 254-255).

But what will be the impact of this upcoming “transformation economy” on how people relate to technology? If in the experience economy the buzzword is “User Experience”, in the next stage the new buzzword might be “User Transformation”.

Indeed, we can see some initial signs of this shift. For example, FitBit and similar self-tracking gadgets are starting to offer personalized advice to foster enduring changes in users’ lifestyle; another example is from the fields of ambient intelligence and domotics, where there is an increasing focus on designing systems that are able to learn from the user’s behaviour (e.g. by tracking the movement of an elderly person in his home) to provide context-aware adaptive services (e.g. sending an alert when the user is at risk of falling).

But the most important ICT step towards the transformation economy is likely to take place with the introduction of next-generation immersive virtual reality systems. Since these new systems are based on mobile devices (an example is the recent partnership between Oculus and Samsung), they are able to deliver VR experiences that incorporate information on the external/internal context of the user (e.g. time, location, temperature, mood etc.) by using the sensors embedded in the mobile phone.

By personalizing the immersive experience with context-based information, it might be possible to induce higher levels of involvement and presence in the virtual environment. In the case of cyber-therapeutic applications, this could translate into the development of more effective, transformative virtual healing experiences.

Furthermore, the emergence of "symbiotic technologies", such as neuroprosthetic devices and neuro-biofeedback, is enabling a direct connection between the computer and the brain. Increasingly, these neural interfaces are moving from the biomedical domain to become consumer products. But unlike existing digital experiential products, symbiotic technologies have the potential to transform basic human experiences more radically.

Brain-computer interfaces, immersive virtual reality and augmented reality and their various combinations will allow users to create “personalized alterations” of experience. Just as nowadays we can download and install a number of “plug-ins”, i.e. apps to personalize our experience with hardware and software products, so very soon we may download and install new “extensions of the self”, or “experiential plug-ins” which will provide us with a number of options for altering/replacing/simulating our sensorial, emotional and cognitive processes.

Such mediated recombinations of human experience will result from the application of existing neuro-technologies in completely new domains. Although virtual reality and brain-computer interfaces were originally developed for applications in specific domains (e.g. military simulations, neurorehabilitation, etc.), today the use of these technologies has been extended to other fields of application, ranging from entertainment to education.

In the field of biology, Stephen Jay Gould and Elizabeth Vrba (Paleobiology, 8, 4-15, 1982) defined “exaptation” as the process in which a feature acquires a function that was not acquired through natural selection. Likewise, the exaptation of neurotechnologies to the digital consumer market may lead to the rise of a novel “neuro-experience economy”, in which technology-mediated transformation of experience is the main product.

Just as a Genetically-Modified Organism (GMO) is an organism whose genetic material is altered using genetic-engineering techniques, so we could define a Technologically-Modified Experience (ETM) as a re-engineered experience resulting from the artificial manipulation of the neurobiological bases of sensorial, affective, and cognitive processes.

Clearly, the emergence of the transformative neuro-experience economy will not happen in weeks or months but rather in years. It will take some time before people find brain-computer devices on the shelves of electronics stores: most of these tools are still in the pre-commercial phase at best, and some are found only in laboratories.

Nevertheless, the mere possibility that such a scenario will sooner or later come to pass raises important questions that should be addressed before symbiotic technologies enter our lives: does technological alteration of human experience threaten the autonomy of individuals, or the authenticity of their lives? How can we help individuals decide which transformations are good or bad for them?

Answering these important questions will require the collaboration of many disciplines, including philosophy, computer ethics and, of course, cyberpsychology.

May 24, 2016

Virtual reality painting tool

12:07 Posted in Blue sky, Future interfaces, Virtual worlds | Permalink | Comments (0)

Computational Personality?

The field of artificial intelligence (AI) has undergone a dramatic evolution in recent years. The impressive advances in this field have inspired several leaders in the scientific and technological community - including Stephen Hawking and Elon Musk - to raise concerns about a potential domination of machines over humans.

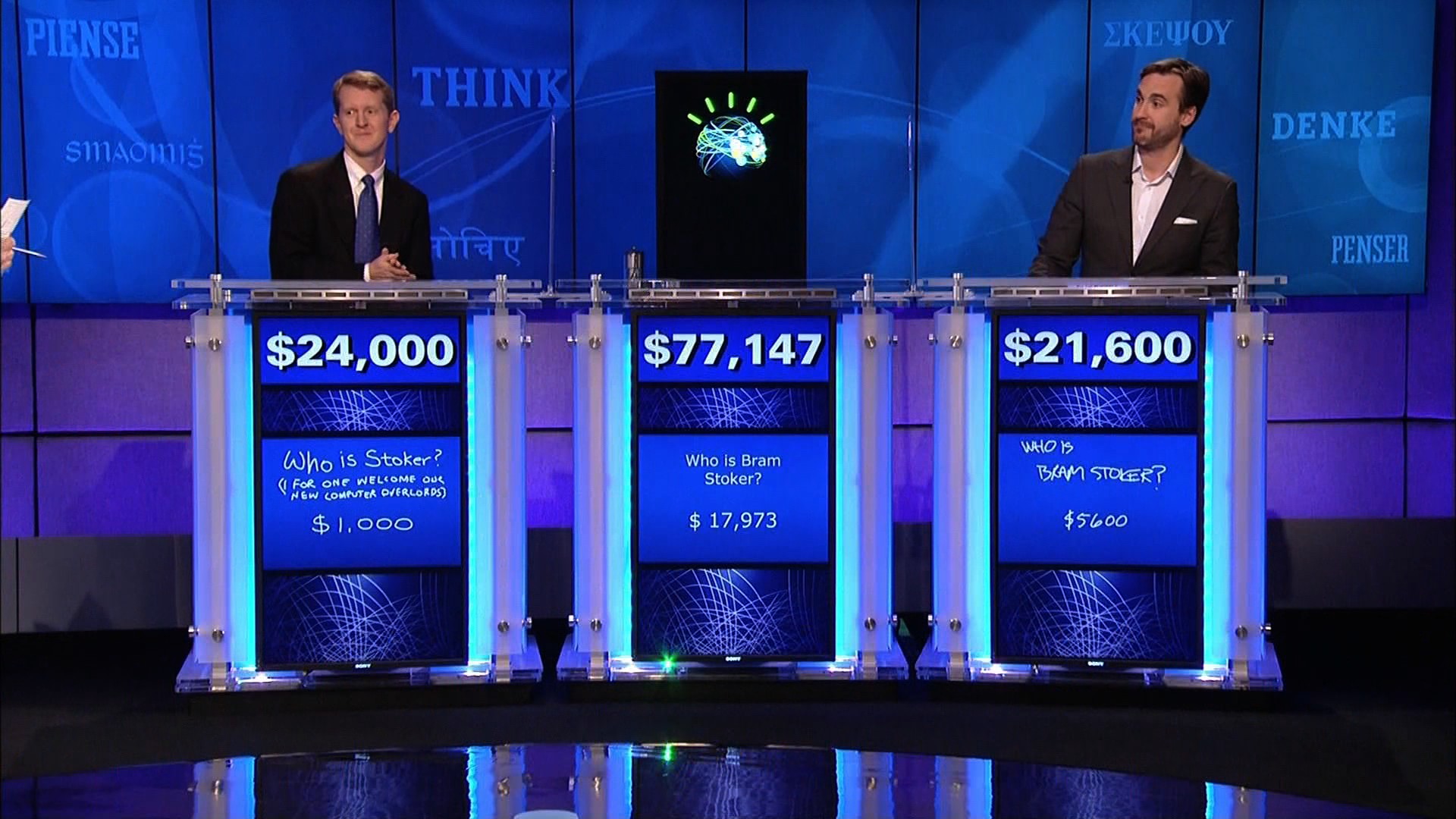

While many people still think about AI as robots with human-like characteristics, this field is much broader and includes a number of diverse tools and applications, from Siri to self-driving cars, to autonomous weapons. Among the key innovations in the AI field, IBM’s Watson computer system is certainly one of the most popular.

Developed within IBM’s DeepQA project, led by principal investigator David Ferrucci, Watson can answer questions posed in natural language, and also features advanced cognitive abilities such as information retrieval, knowledge representation, automatic reasoning, and “open domain question answering”.

Thanks to these advanced functions, Watson could compete in real time at the human champion level on the American TV quiz show “Jeopardy!”. This impressive result has opened up several potential business applications of so-called “cognitive computing”, e.g. targeting big data analytics problems in health, pharma, and other business sectors. But psychology, too, may be one of the next frontiers of the cognitive computing revolution.

For example, Watson Personality Insights is a service designed to automatically generate psychological profiles on the basis of unstructured text extracted from mails, tweets, blog posts, articles and forums. In addition to a description of your personality, needs and values, the program provides an automated analysis of the “Big Five” personality traits: openness, conscientiousness, extroversion, agreeableness, and neuroticism; all these data can then be visualized in a graphic representation. According to IBM’s documentation, to give a reliable estimate of personality, the Watson program requires at least 3,500 words, but preferably 6,000 words. Furthermore, the content of the text should ideally reflect personal experiences, thoughts and responses. The psychological model behind the service is based on studies showing that the frequency with which we use certain categories of words can provide clues to personality, thinking style, social connections, and emotional stress variations.
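To make the idea of word-category frequencies more concrete, here is a minimal Python sketch. It is only an illustration of the general principle: the category word lists and the function names are invented for this example, and it is not how IBM's service actually works. The only figure taken from the text above is the 3,500-word minimum.

```python
import re
from collections import Counter

# Hypothetical word categories, invented for illustration only;
# they are NOT the dictionaries used by IBM's service.
CATEGORIES = {
    "first_person": {"i", "me", "my", "mine", "myself"},
    "positive_emotion": {"happy", "love", "great", "enjoy"},
    "negative_emotion": {"sad", "angry", "worried", "afraid"},
}

MIN_WORDS = 3500  # minimum word count cited in the post


def tokenize(text):
    """Lower-case the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def category_frequencies(text):
    """Return the share of all tokens that falls into each category."""
    tokens = tokenize(text)
    counts = Counter()
    for token in tokens:
        for name, words in CATEGORIES.items():
            if token in words:
                counts[name] += 1
    total = len(tokens)
    return {name: (counts[name] / total if total else 0.0) for name in CATEGORIES}


def has_enough_text(text, minimum=MIN_WORDS):
    """Check whether the sample reaches the minimum word count."""
    return len(tokenize(text)) >= minimum


sample = "I love my job, but I am worried about the deadline."
print(category_frequencies(sample))
print(has_enough_text(sample))
```

A real profiling service would map such frequencies onto trait scores with a statistical model trained on validated questionnaires; that step is deliberately left out here.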

Clearly, many psychologists (and non-psychologists, too) may have several doubts about the reliability and accuracy of this service. Furthermore, for some people, collecting social media data to identify psychological traits may lead to Orwellian scenarios. Although these concerns are understandable, they may be mitigated by the important positive applications and benefits that this technology may bring about for individuals, organizations and society.

11:44 Posted in AI & robotics, Big Data, Blue sky, Computational psychology | Permalink | Comments (0)

Incentivized competitions boost innovation

The last decade has witnessed a tremendous advance in technological innovations. This is also thanks to the growing diffusion of open innovation platforms, which have leveraged the explosion of social networks and digital media to promote a new culture of “bottom-up” discovery and invention.

An example of the potential of open innovation to revolutionize technology and science is provided by online crowdfunding sites for creative projects, such as Kickstarter and Indiegogo. In the last few years, these online platforms have supported thousands of projects, including extremely innovative products such as the Oculus headset, which has contributed to the renaissance of Virtual Reality.

Incentivized competitions represent a further strategy for engaging the public and gathering innovative ideas on a global scale. This approach consists in identifying the most interesting challenges and inviting the community to solve them.

One of the first and most popular incentivized competitions is the Ansari X-Prize, celebrating its 10th anniversary this year. Funded by the Ansari family, the Ansari X-Prize challenged teams from around the world to build a reliable, reusable, privately financed, manned spaceship capable of carrying three people to 100 kilometers above the Earth's surface twice within two weeks. The prize was awarded in 2004 to Mojave Aerospace Ventures and since then, the award has contributed to creating a new private space industry. Recently, X-Prize has introduced a spin-off for-profit venture, HeroX, a kind of “Kickstarter” of X-Prize-type competitions. The platform allows anyone to post their own competition.

Those who think they have the best solution can then submit their entries to win a cash prize. Another successful incentivized contest is the Qualcomm Tricorder X Prize, offering a US$7 million grand prize, a US$2 million second prize, and a US$1 million third prize to the best among the finalists offering an automatic, non-invasive health diagnostics system packaged into a single portable device that weighs no more than 5 pounds (2.3 kg) and is able to diagnose over a dozen medical conditions, including whooping cough, hypertension, mononucleosis, shingles, melanoma, HIV, and osteoporosis.

Incentivized competitions have proven effective in supporting the solution of global issues and developing powerful new visions of the future that can potentially impact the lives of billions of people. The reason for such effectiveness is related to the “format” of these competitions.

Open idea contests include clear and well-defined objectives, which can be measured objectively in terms of performance/outcome, and a significant amount of financial resources to achieve those objectives. Further, incentive competitions target only “stretch goals”, very ambitious (and risky) objectives that require very innovative strategies and original methodologies in order to be addressed.

Incentive competitions are also very “democratic”, in the sense that they are not limited to academic teams or research organizations, but are open to the involvement of large and small companies, start-ups, governments and even single individuals.

11:16 Posted in Blue sky, Creativity and computers | Permalink | Comments (0)

Using Big Data in Cyberpsychology

Thanks to the pervasive diffusion of social media and the increasing affordability of smartphones and wearable sensors, psychologists can gather and analyse massive quantities of data concerning people’s behaviours and moods in naturalistic situations.

The availability of “big data” presents psychologists with unprecedented professional and scientific opportunities, but also with new challenges. On the business side, for example, a growing number of tech-companies are hiring psychologists to help make sense of huge data sets collected online from their actual and prospective customers.

The job description of a “data psychologist” not only requires perfect mastery of advanced statistics, but also the ability to identify the kinds of behaviours that are most useful to track and analyse, in order to improve products and business strategies. Psychological research, too, may be revolutionized by the emerging field of big data. Until recently, online research methods were mostly represented by web experiments and online survey studies.

Examples of topic areas included cognitive psychology and social psychology, but also health psychology and forensic psychology (for an updated list of psychological experiments on the Internet see this useful resource by the Hanover College Psychology Department).

However, the emergence of advanced cloud-based data analytics has provided psychologists with powerful new ways of studying human behaviour using digital footprints. An interesting example is CrowdSignal, a crowdfunded mobile data collection campaign that aims at building the largest set of longitudinal mobile and sensor data recorded from smartphones and smartwatches available to the community. As reported on the project’s website, the final dataset will include geo-location, sensor, system and network logs, user interactions, social connections, communications as well as user-provided ground truth labels and survey feedback, collected from a demographically diverse pool of Android users across the United States.

A further interesting service that well exemplifies the scientific potential of social data analytics is the “Apply Magic Sauce Prediction API” developed by the Psychometrics Centre of the University of Cambridge. According to the Cambridge researchers, this algorithm can predict users’ personality traits based on Facebook interactions (i.e., Facebook Likes). To test the validity of the tool, the team compared the predictions generated by computer algorithms with the personality judgments made by humans. The results, which were reported in the Proceedings of the National Academy of Sciences (Youyou et al., 2015, PNAS, 112/4, pp. 1036–1040), showed that the computers’ judgments of people’s personalities based on their digital behaviors were more accurate than judgments made by their close others or acquaintances.

However, the emergence of “big data psychology” also presents big challenges. For example, the advantages of this approach for business and research should be weighed against the ethical, privacy and legal implications that are unavoidably linked to the collection of digital footprints. On the methodological side, it is also important to consider that quantity (of data) is not synonymous with quality (of data interpretation).

In order to create meaningful and accurate models from behavioural logs, one needs to consider the role played by contextual variables, as well as the possible data errors and spurious correlations introduced by high dimensionality.
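The point about spurious correlations can be illustrated with a small simulation. The Python sketch below is only a toy example under stated assumptions: all data are random noise, and the function names and sample sizes are made up for the illustration. It tracks the strongest correlation found between a random "outcome" and a growing number of equally random "features".

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # correlation is undefined for a constant series
    return cov / math.sqrt(var_x * var_y)


def max_spurious_correlation(n_people, n_features, seed=0):
    """Largest |r| between a random outcome and purely random features.

    Every variable is independent noise, so any correlation that shows up
    is spurious by construction.
    """
    rng = random.Random(seed)
    outcome = [rng.gauss(0, 1) for _ in range(n_people)]
    best = 0.0
    for _ in range(n_features):
        feature = [rng.gauss(0, 1) for _ in range(n_people)]
        best = max(best, abs(pearson(feature, outcome)))
    return best


# Same 30 simulated "participants", increasingly many tracked variables.
for n_features in (5, 50, 1000):
    r = max_spurious_correlation(30, n_features)
    print(f"{n_features:>5} random features -> strongest |r| = {r:.2f}")
```

Because the seed is fixed, the first five features are the same in every run of the loop, so the strongest correlation can only stay equal or grow as more variables are added. With many variables and few participants, a fairly strong correlation tends to turn up by chance alone, which is why behavioural logs with thousands of tracked variables need careful correction for multiple comparisons.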

10:48 Posted in Big Data, Computational psychology, Pervasive computing, Research tools, Self-Tracking | Permalink | Comments (0)

SignAloud: Gloves that Transliterate Sign Language into Text and Speech

10:25 Posted in Future interfaces, Wearable & mobile | Permalink | Comments (0)

Apr 27, 2016

Predictive Technologies: Can Smart Tools Augment the Brain's Predictive Abilities?

Feb 18, 2016

A S.E.T.I. for psychology

The recent debate about the issue of reproducibility in psychology led me to question whether 21st century scientific psychology should still regard the “conventional” experimental approach as the prime method of inquiry.

Even the most controlled lab experiments produce results that are difficult to generalize to real life, because of the artificiality of the setting. On the other hand, field and natural experiments are higher in ecological validity (since they are carried out in real-world situations) but are hard to control and replicate. Not to mention that most psychology research is done primarily on undergraduate students.

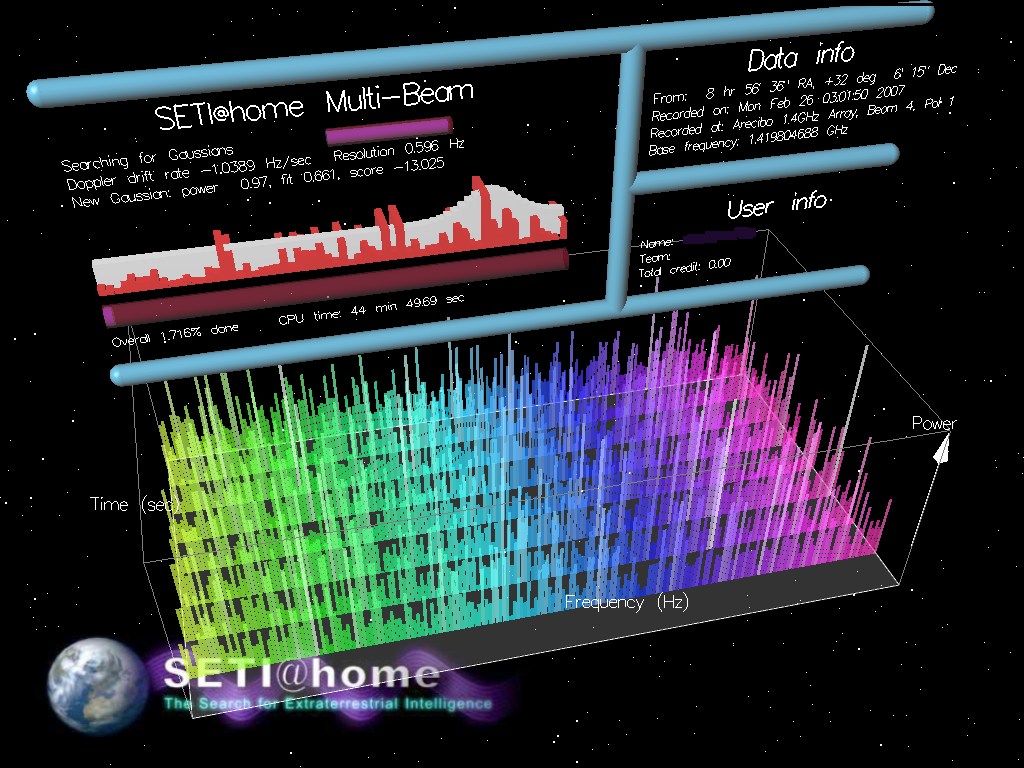

I suggest that the digital revolution and the emergence of “citizen science” could offer a totally new research approach to psychology.

Every second, social media, sensors and mobile tools generate massive amounts of data concerning people’s behavior and activities. According to a recent forecast by networking company Cisco concerning global mobile data traffic growth trends, global mobile data traffic reached 3.7 exabytes per month at the end of 2015, up from 2.1 exabytes per month at the end of 2014. Mobile data traffic has grown 4,000-fold over the past 10 years and almost 400-million-fold over the past 15 years. Mobile networks carried fewer than 10 gigabytes per month in 2000, and less than 1 petabyte per month in 2005. (One exabyte is equivalent to one billion gigabytes, and one thousand petabytes.)
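As a quick sanity check, the growth factors quoted above can be reproduced from the monthly traffic figures. The short Python sketch below uses only the numbers given in the paragraph; the variable names are mine, and the 2000 and 2005 values are treated as upper bounds ("fewer than 10 gigabytes", "less than 1 petabyte"), so the resulting ratios are lower bounds.

```python
GB_PER_PB = 1_000_000       # one petabyte expressed in gigabytes
GB_PER_EB = 1_000_000_000   # one exabyte is one billion gigabytes

# Monthly global mobile data traffic, in gigabytes (figures from the post).
traffic_2015_gb = 3.7 * GB_PER_EB
traffic_2014_gb = 2.1 * GB_PER_EB
traffic_2005_gb = 1 * GB_PER_PB   # upper bound: "less than 1 petabyte"
traffic_2000_gb = 10              # upper bound: "fewer than 10 gigabytes"

growth_1y = traffic_2015_gb / traffic_2014_gb
growth_10y = traffic_2015_gb / traffic_2005_gb
growth_15y = traffic_2015_gb / traffic_2000_gb

print(f"2014 -> 2015: x{growth_1y:.2f}")
print(f"2005 -> 2015: at least x{growth_10y:,.0f}")
print(f"2000 -> 2015: at least x{growth_15y:,.0f}")
```

The ratios come out at roughly 1.76 for the last year, at least about 3,700 over ten years and at least about 370 million over fifteen years, which is consistent with the rounded "4,000-fold" and "almost 400-million-fold" figures.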

The analysis of these large-scale “digital footprints” may open new avenues for discovery to psychologists, revealing patterns that would be otherwise impossible to detect through conventional experimental methods. A cloud-based open data portal could be designed to gather data streams made available by volunteering citizens, following protocols and guidelines developed by scientists – think about it as a sort of “S.E.T.I.” project for psychology. Authorized researchers may access these shared datasets to carry out collective analyses and interpretations.

I argue that the introduction of collective digital experiments may offer psychology novel opportunities to advance its research, and eventually achieve the rigour of natural sciences.

23:05 Posted in Blue sky | Permalink | Comments (0)

Feb 14, 2016

3D addiction

As many analysts predict, next-generation virtual reality technology promises to change our lives.

From manufacturing to medicine, from entertainment to learning, there is no economic or cultural sector that is immune from the VR revolution.

According to a recent report from Digi-Capital, the augmented/virtual reality market could hit $150B revenue by 2020, with augmented reality projected to reach $120B and virtual reality $30B.

Still, there are a lot of unanswered questions concerning the potential negative effects of virtual reality on the human brain. For example, we know very little about the consequences of prolonged immersion in a virtual world.

Most scientific virtual reality experiments carried out so far have lasted for short time intervals (typically, less than an hour). However, we don’t know what the potential side effects of being “immersed” for a 12-hour virtual marathon are. When one looks at today’s headsets, it might seem unlikely that people will spend so much time wearing them, because they are still ergonomically poor. Furthermore, most virtual reality content available on the market does not exploit the full narrative potential of the medium, which can go well beyond a “virtual Manhattan skyride”.

But as soon as usability problems are fixed, and 3D content is compelling and engaging enough, the risk of “3D addiction” may be around the corner. Most importantly, the risks of virtual reality exposure are not limited to adults, but especially endanger adolescents’ and children’s health. Given the widespread use of smartphones among kids, it is likely that virtual reality games will become very popular within this segment.

Given that Zuckerberg regards virtual reality as the next big thing after video for Facebook (in March 2014 his corporation bought Oculus VR in a deal worth $2 billion), perhaps he might also consider investing some of these resources in supporting research on the health risks that are potentially associated with this amazing and life-changing technology.

21:55 Posted in Virtual worlds | Permalink | Comments (0)

Dec 26, 2015

Manus VR Experiment with Valve’s Lighthouse to Track VR Gloves

Via Road to VR

The Manus VR team demonstrate their latest experiment, utilising Valve’s laser-based Lighthouse system to track their in-development VR glove.

Manus VR (previously Manus Machina), the company from Eindhoven, Netherlands dedicated to building VR input devices, seem to have gained momentum in 2015. They secured their first round of seed funding and have shipped early units to developers, and now their R&D efforts have extended to Valve’s laser-based tracking solution Lighthouse, as used in the forthcoming HTC Vive headset and SteamVR controllers.

The Manus VR team seem to have cannibalised a set of SteamVR controllers, leveraging the positional tracking of wrist-mounted units to augment Manus VR’s existing glove-mounted IMUs. Last time I tried the system, the finger joint detection was pretty good, but the Samsung Gear VR camera-based positional tracking struggled understandably with latency and accuracy. The experience on show seems immeasurably better, perhaps unsurprisingly.

11:47 Posted in Telepresence & virtual presence, Virtual worlds | Permalink | Comments (0)

How to reduce costs in Cyberpsychology research

Cyberpsychology is a fascinating field of research, yet it requires a lot of financial resources for its advancement. As an inherently interdisciplinary endeavor, the implementation of a cyberpsychology study often involves the collaboration of several scientific disciplines outside psychology, such as experts in human-computer interaction, software developers, data scientists, and engineers. Further, an increasing number of cyberpsychology studies consist of clinical trials, which can last several months (or even years) and involve a significant investment of economic resources. On the other hand, finding adequate funding is becoming the most pressing challenge for most cyberpsychologists.

This is due to several factors. First, government funding for universities has fallen dramatically in most countries and the trend for the next years is not encouraging. Second, competition for grants is very high and it is likely to remain so. A third, and perhaps less obvious, factor is that cyberpsychology research tends to attract less funding than other allied disciplines, e.g. medicine. Given this situation, what can be done to allow cyberpsychologists to keep furthering their research?

A possible strategy is to improve “lateral thinking” and find a way to optimize costs. This can be done, for example, by taking advantage of free, open-source software, services and tools to support the different phases of the research process – design, implementation, collaboration, monitoring, data analysis, reporting, etc. These open-source tools are not only free, but sometimes even more powerful than existing proprietary software and services. For example, a fairly comprehensive set of free office productivity tools can be found online. These include word processors, spreadsheets and slide presentation programs (e.g. the OpenOffice suite), as well as graphics programs (e.g. Gimp, http://www.gimp.org/).

As concerns the implementation of laboratory experiments, several software platforms are available for programming psychological studies. For example, PsychoPy is a user-friendly open-source application that allows the presentation of stimuli and collection of data for a wide range of neuroscience, psychology and psychophysics experiments. For the analysis of data, possible alternatives to commercial statistical packages include R, a free software environment for statistical computing and graphics (coupled with R Commander or RStudio for those who are not comfortable with command-line interfaces). And when it is time to write a paper, free tools exist that are designed for the production of technical and scientific documentation, such as the popular program LaTeX, which can be used in combination with reference manager software like JabRef.

And what about Virtual Reality? Our NeuroVR platform is a free tool that young researchers (e.g. MS students, PhD students) can use to take their first scientific steps in the virtual realm.

Needless to say, the most expensive budget item in a research plan remains personnel costs. However, I think that by having a look at the many free scientific tools, resources and services that are available, it might be possible to significantly reduce the costs; at the same time, this approach offers the opportunity to support the growth of the open source community in our discipline.

11:24 Posted in Cybertherapy, Research tools | Permalink | Comments (0)

Nov 17, 2015

The new era of Computational Biomedicine

In recent years, the increasing convergence between nanotechnology, biomedicine and health informatics has generated massive amounts of data, which are changing the way healthcare research, development, and applications are done.

Clinical data integrate physiological data, enabling detailed descriptions of various healthy and diseased states, progression, and responses to therapies. Furthermore, mobile and home-based devices monitor vital signs and activities in real-time and communicate with personal health record services, personal computers, smartphones, caregivers, and health care professionals.

However, our ability to analyze and interpret multiple sources of data lags far behind today’s data generation and storage capacity. Consequently, mathematical and computational models are increasingly used to help interpret massive biomedical data produced by high-throughput genomics and proteomics projects. Advanced applications of computer models that enable the simulation of biological processes are used to generate hypotheses and plan experiments.

The emerging discipline of computational biomedicine is concerned with the application of computer-based techniques, and particularly modelling and simulation, to human health. For almost ten years, this vision has been at the core of a European-funded programme called “Virtual Physiological Human”. The goal of this initiative is to develop next-generation computer technologies to integrate all information available for each patient, and to generate computer models capable of predicting how the health of that patient will evolve under certain conditions.

In particular, this programme is expected, over the next decades, to transform the study and practice of healthcare, moving it towards the priorities known as ‘4P's’: predictive, preventative, personalized and participatory medicine. Future developments of computational biomedicine may provide the possibility of developing not just qualitative but truly quantitative analytical tools, that is, models, on the basis of the data available through the system just described. Information not available today (large cohort studies nowadays include thousands of individuals, whereas here we are talking about millions of records) will be available for free. Large cohorts of data will be available for online consultation and download. Integrative and multi-scale models will benefit from the availability of this large amount of data by using parameter estimation in a statistically meaningful manner. At the same time, distribution maps of important parameters will be generated and continuously updated. Through a certain mechanism, users will be given the opportunity to express their interest in this or that model, so as to set up a consensus model selection process. Moreover, models should be open for consultation and annotation. Flexible and user-friendly services have many potential positive outcomes. Some examples include simulation of case studies, tests, and validation of specific assumptions on the nature or related diseases, understanding the world-wide distribution of these parameters and disease patterns, the ability to hypothesize intervention strategies in cases such as the spreading of an infectious disease, and advanced risk modeling.

11:25 Posted in Blue sky, ICT and complexity, Physiological Computing, Positive Technology events | Permalink

Aug 23, 2015

Gartner hype cycle sees digital humanism as emergent trend

The journey to digital business continues as the key theme of Gartner, Inc.'s "Hype Cycle for Emerging Technologies, 2015." New to the Hype Cycle this year is the emergence of technologies that support what Gartner defines as digital humanism — the notion that people are the central focus in the manifestation of digital businesses and digital workplaces.

The Hype Cycle for Emerging Technologies report is the longest-running annual Hype Cycle, providing a cross-industry perspective on the technologies and trends that business strategists, chief innovation officers, R&D leaders, entrepreneurs, global market developers and emerging-technology teams should consider in developing emerging-technology portfolios.

"The Hype Cycle for Emerging Technologies is the broadest aggregate Gartner Hype Cycle, featuring technologies that are the focus of attention because of particularly high levels of interest, and those that Gartner believes have the potential for significant impact," said Betsy Burton, vice president and distinguished analyst at Gartner. "This year, we encourage CIOs and other IT leaders to dedicate time and energy focused on innovation, rather than just incremental business advancement, while also gaining inspiration by scanning beyond the bounds of their industry."

Major changes in the 2015 Hype Cycle for Emerging Technologies include the placement of autonomous vehicles, which have shifted from pre-peak to peak of the Hype Cycle. While autonomous vehicles are still embryonic, this movement still represents a significant advancement, with all major automotive companies putting autonomous vehicles on their near-term roadmaps. Similarly, the growing momentum (from post-trigger to pre-peak) in connected-home solutions has introduced entirely new solutions and platforms enabled by new technology providers and existing manufacturers.

16:32 Posted in ICT and complexity | Permalink | Comments (1)

Jun 09, 2015

Privacy and cookie policy

Under the Codice in materia di protezione dei dati personali (the Personal Data Protection Code), you have the right to know how your data are used and to ask me to delete them.

Your data will not be sold, given away free of charge, or in any way passed on to third parties who might use them for advertising or spam.

What cookies are is explained below. I recommend this video by the Garante della Privacy, which explains very well what they are and what they do.

The cookies used are:

– technical cookies, including Google Analytics with IP anonymization. This means that Google receives visit data in aggregate form. Your privacy is protected, and I still know how many people have viewed my pages. If you want to disable GA, you can download the tool.

The cookies NOT used are:

– third-party cookies, including the widgets of Facebook, Twitter, Google Plus, YouTube, Pocket, JetPack, Disqus and MailChimp. I have no control over these cookies. I invite you to read the respective policies and act accordingly to protect your privacy.

If you want to disable cookies in your browser:

Firefox, Chrome, Internet Explorer, Safari and Opera. The link for each browser explains how to do it.

Finally, the website of the Garante della Privacy explains how to protect your personal data.

12:33 Posted in Privacy & Cookie Law | Permalink | Comments (0)

Apr 05, 2015

Are you concerned about AI?

Recently, a growing number of opinion leaders have started to point out the potential risks associated with the rapid advancement of Artificial Intelligence. This shared concern has led an interdisciplinary group of scientists, technologists and entrepreneurs to sign an open letter (http://futureoflife.org/misc/open_letter/), drafted by the Future of Life Institute, which focuses on priorities to be considered as Artificial Intelligence develops as well as on the potential dangers posed by this paradigm.

The concern that machines may soon dominate humans, however, is not new: in the last thirty years, this topic has been widely represented in movies (e.g. The Terminator, The Matrix), novels and various interactive arts. For example, Australian-based performance artist Stelarc has incorporated themes of cyborgization and other human-machine interfaces in his work, creating a number of installations that confront us with the question of where the human ends and technology begins.

In his well-received 2005 book “The Singularity Is Near: When Humans Transcend Biology” (Viking Penguin: New York), inventor and futurist Ray Kurzweil argued that Artificial Intelligence is one of the interacting forces that, together with genetics, robotics and nanotechnology, may soon converge to overcome our biological limitations and usher in the Singularity, during which Kurzweil predicts that human life will be irreversibly transformed. According to Kurzweil, the Singularity will take place around 2045 and will probably represent the most extraordinary event in all of human history.

Ray Kurzweil’s vision of the future of intelligence is at the forefront of the transhumanist movement, which considers scientific and technological advances as a means to augment human physical and cognitive abilities, with the final aim of improving and even extending life. According to transhumanists, however, the choice of whether to benefit from such enhancement options should generally reside with the individual. The concept of transhumanism has been criticized, among others, by the influential American philosopher of technology Don Ihde, who pointed out that no technology will ever be completely internalized, since any technological enhancement implies a compromise. Ihde has distinguished four different relations that humans can have with technological artifacts. In particular, in the “embodiment relation” a technology becomes (quasi-)transparent, allowing a partial symbiosis of ourselves and the technology. In the wearing of eyeglasses, as Ihde exemplifies, I do not look “at” them but “through” them at the world: they are already assimilated into my body schema, withdrawing from my perceiving.

According to Ihde, there is a doubled desire which arises from such embodiment relations: “It is the doubled desire that, on one side, is a wish for total transparency, total embodiment, for the technology to truly "become me." (...) But that is only one side of the desire. The other side is the desire to have the power, the transformation that the technology makes available. Only by using the technology is my bodily power enhanced and magnified by speed, through distance, or by any of the other ways in which technologies change my capacities. (...) The desire is, at best, contradictory. I want the transformation that the technology allows, but I want it in such a way that I am basically unaware of its presence. I want it in such a way that it becomes me. Such a desire both secretly rejects what technologies are and overlooks the transformational effects which are necessarily tied to human-technology relations. This illusory desire belongs equally to pro- and anti-technology interpretations of technology.” (Ihde, D. (1990). Technology and the Lifeworld: From Garden to Earth. Bloomington: Indiana, p. 75).

Despite the different philosophical stances and assumptions on what our future relationship with technology will look like, there is little doubt that these questions will become more pressing and acute in the coming years. In my personal view, technology should not be viewed as a means to replace human life, but as an instrument for improving it. As William S. Haney II suggests in his book “Cyberculture, Cyborgs and Science Fiction: Consciousness and the Posthuman” (Rodopi: Amsterdam, 2006), “each person must choose for him or herself between the technological extension of physical experience through mind, body and world on the one hand, and the natural powers of human consciousness on the other as a means to realize their ultimate vision.” (ix, Preface).

23:26 Posted in AI & robotics, Blue sky, ICT and complexity | Permalink | Comments (0)

From the Experience Economy to the Transformation Economy

In 1999, Joseph Pine and James Gilmore wrote a seminal book titled “The Experience Economy” (Harvard Business School Press, Boston, MA) that theorized the shift from a service-based economy to an experience-based economy. According to these authors, in the new experience economy the goal of the purchase is no longer to own a product (be it a good or service), but to use it in order to enjoy a compelling experience. An experience, thus, is a whole-new type of offer: in contrast to commodities, goods and services, it is designed to be as personal and memorable as possible. Just as in a theatrical representation, companies stage meaningful events to engage customers in a memorable and personal way, by offering activities that provide engaging and rewarding experiences.

Indeed, if one looks back at the past ten years, the concept of experience has become more central to several fields, including tourism, architecture, and – perhaps more relevant for this column – human-computer interaction, with the rise of “User Experience” (UX). The concept of UX was introduced by Donald Norman in a 1995 article published in the CHI proceedings (D. Norman, J. Miller, A. Henderson: What You See, Some of What's in the Future, And How We Go About Doing It: HI at Apple Computer. Proceedings of CHI 1995, Denver, Colorado, USA).

Norman argued that focusing exclusively on usability attributes (i.e. ease of use, efficacy, effectiveness) when designing an interactive product is not enough; one should take into account the whole experience of the user with the system, including users’ emotional and contextual needs. Since then, the UX concept has assumed increasing importance in HCI.

As McCarthy and Wright emphasized in their book “Technology as Experience” (MIT Press, 2004): “In order to do justice to the wide range of influences that technology has in our lives, we should try to interpret the relationship between people and technology in terms of the felt life and the felt or emotional quality of action and interaction.” (p. 12).

However, according to Pine and Gilmore, experience may not be the last step of what they call the “Progression of Economic Value”. They speculated further into the future, identifying the “Transformation Economy” as the likely next phase. In their view, while experiences are essentially memorable events which stimulate the sensorial and emotional levels, transformations go much further, in that they are the result of a series of experiences staged by companies to guide customers in learning, taking action and eventually achieving their aspirations and goals.

In Pine and Gilmore's terms, an aspirant is the individual who seeks advice for personal change (e.g. a better figure, a new career, and so forth), while the provider of this change (a dietitian, a university) is an elicitor. The elicitor guides the aspirant through a series of experiences which are designed with a specific purpose and goals. According to Pine and Gilmore, the main difference between an experience and a transformation is that the latter occurs when an experience is customized: “When you customize an experience to make it just right for an individual - providing exactly what he needs right now - you cannot help changing that individual. When you customize an experience, you automatically turn it into a transformation, which companies create on top of experiences (recall that phrase: “a life-transforming experience”), just as they create experiences on top of services and so forth” (p. 244).

A further key difference between experiences and transformations concerns their effects: because an experience is inherently personal, no two people can have the same one. Likewise, no individual can undergo the same transformation twice: the second time it’s attempted, the individual would no longer be the same person (p. 254-255). But what will be the impact of this upcoming “transformation economy” on how people relate to technology? If in the experience economy the buzzword is “User Experience”, in the next stage the new buzzword might be “User Transformation”.

Indeed, we can see some initial signs of this shift. For example, FitBit and similar self-tracking gadgets are starting to offer personalized advice to foster enduring changes in users’ lifestyle; another example comes from the fields of ambient intelligence and domotics, where there is an increasing focus on designing systems that are able to learn from the user’s behaviour (e.g. by tracking the movements of an elderly person in their home) to provide context-aware adaptive services (e.g. sending an alert when the user is at risk of falling). But the most important ICT step towards the transformation economy is likely to come with the introduction of next-generation immersive virtual reality systems. Since these new systems are based on mobile devices (an example is the recent partnership between Oculus and Samsung), they are able to deliver VR experiences that incorporate information on the external/internal context of the user (e.g. time, location, temperature, mood) by using the sensors embedded in the mobile phone.

By personalizing the immersive experience with context-based information, it will be possible to induce higher levels of involvement and presence in the virtual environment. In the case of cyber-therapeutic applications, this could translate into the development of more effective, transformative virtual healing experiences.

23:14 | Permalink | Comments (0)

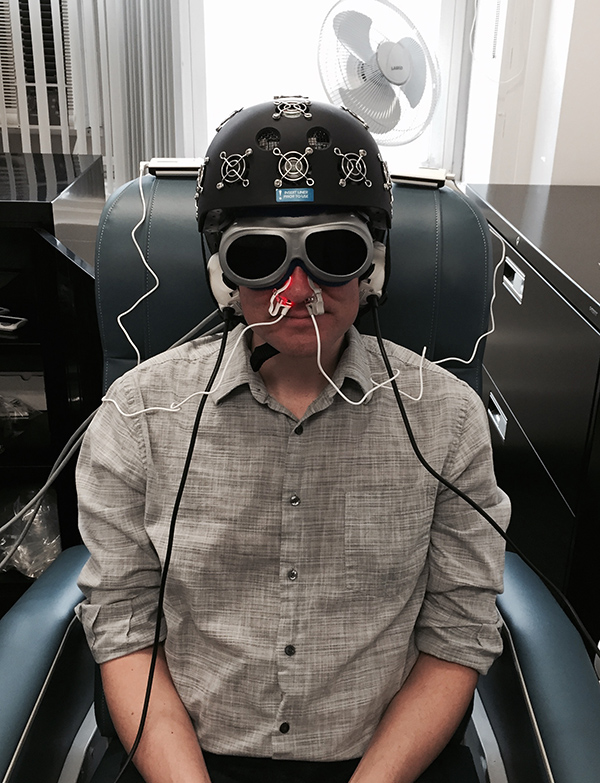

LED therapy for neurorehabilitation

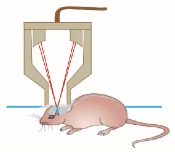

A staffer in Dr. Margaret Naeser’s lab demonstrates the equipment built especially for the research: an LED helmet (Photomedex), intranasal diodes (Vielight), and LED cluster heads placed on the ears (MedX Health). The real and sham devices look identical. Goggles are worn to block out the red light to avoid experimental artifacts. The near-infrared light is beyond the visible spectrum and cannot be seen. (credit: Naeser lab)

Researchers at the VA Boston Healthcare System are testing the effects of light therapy on brain function in the Veterans with Gulf War Illness study.

Veterans in the study wear a helmet lined with light-emitting diodes that apply red and near-infrared light to the scalp. They also have diodes placed in their nostrils, to deliver photons to the deeper parts of the brain.

The light is painless and generates no heat. A treatment takes about 30 minutes.

The therapy, though still considered “investigational” and not covered by most health insurance plans, is already used by some alternative medicine practitioners to treat wounds and pain.

The light from the diodes has been shown to boost the output of nitric oxide near where the LEDs are placed, which improves blood flow in that location.

“We are applying a technology that’s been around for a while,” says lead investigator Dr. Margaret Naeser, “but it’s always been used on the body, for wound healing and to treat muscle aches and pains, and joint problems. We’re starting to use it on the brain.”

Naeser is a research linguist and speech pathologist for the Boston VA, and a research professor of neurology at Boston University School of Medicine (BUSM).

How LED therapy works

The LED therapy increases blood flow in the brain, as shown on MRI scans. It also appears to have an effect on damaged brain cells, specifically on their mitochondria. These are bean-shaped subunits within the cell that put out energy in the form of a chemical known as ATP. The red (600 nm) and NIR (800–900 nm) wavelengths penetrate through the scalp and skull by about 1 cm to reach brain cells and spur the mitochondria to produce more ATP. That can mean clearer, sharper thinking, says Naeser.

Nitric oxide is also released and diffused outside the cell wall, promoting local vasodilation and increased blood flow.

Naeser says brain damage caused by explosions, or exposure to pesticides or other neurotoxins — such as in the Gulf War — could impair the mitochondria in cells. She believes light therapy can be a valuable adjunct to standard cognitive rehabilitation, which typically involves “exercising” the brain in various ways to take advantage of brain plasticity and forge new neural networks.

“The light-emitting diodes add something beyond what’s currently available with cognitive rehabilitation therapy,” says Naeser. “That’s a very important therapy, but patients can go only so far with it. And in fact, most of the traumatic brain injury and PTSD cases that we’ve helped so far with LEDs on the head have been through cognitive rehabilitation therapy. These people still showed additional progress after the LED treatments. It’s likely a combination of both methods would produce the best results.”

Results published from 11 TBI patients

The LED approach has its skeptics, but Naeser’s group has already published some encouraging results in the peer-reviewed scientific literature.

Last June, in an open-access paper in the Journal of Neurotrauma, they reported the outcomes of LED therapy in 11 patients with chronic TBI, ranging in age from 26 to 62. Most of the injuries occurred in car accidents or on the athletic field. One was a battlefield injury, from an improvised explosive device (IED).

Neuropsychological testing before the therapy and at several points thereafter showed gains in areas such as executive function, verbal learning, and memory. The study volunteers also reported better sleep and fewer PTSD symptoms.

The study authors concluded that the pilot results warranted a randomized, placebo-controlled trial — the gold standard in medical research.

That’s happening now, thanks to VA support. One trial, already underway, aims to enroll 160 Gulf War veterans. Half the veterans will get the real LED therapy for 15 sessions, while the others will get a mock version, using sham lights.

Then the groups will switch, so all the volunteers will end up getting the real therapy, although they won’t know at which point they received it. After each Veteran’s last real or sham treatment, he or she will undergo tests of brain function.

Naeser points out that “because this is a blinded, controlled study, neither the participant nor the assistant applying the LED helmet and the intranasal diodes is aware whether the LEDs are real or sham — they both wear goggles that block out the red LED light.” The near-infrared light is invisible.

Upcoming trials

Other trials of the LED therapy are getting underway:

- Later this year, a trial will launch for Veterans age 18 to 55 who have both traumatic brain injury (TBI) and post-traumatic stress disorder, a common combination in recent war Veterans. The VA-funded study will be led by Naeser’s colleague Dr. Jeffrey Knight, a psychologist with VA’s National Center for PTSD and an assistant professor of psychiatry at BUSM.

- Dr. Yelena Bogdanova, a clinical psychologist with VA and assistant professor of psychiatry at BUSM, will lead a VA-funded trial looking at the impact of LED therapy on sleep and cognition in Veterans with blast TBI.

- Naeser is collaborating on an Army study testing LED therapy, delivered via the helmets and the nose diodes, for active-duty soldiers with blast TBI. The study, funded by the Army’s Advanced Medical Technology Initiative, will also test the feasibility and effectiveness of using only the nasal LED devices — and not the helmets — as an at-home, self-administered treatment. The study leader is Dr. Carole Palumbo, an investigator with VA and the Army Research Institute of Environmental Medicine, and an associate professor of neurology at BUSM.

Naeser hopes the work will validate LED therapy as a viable treatment for veterans and others with brain difficulties. She also foresees potential for conditions such as depression, stroke, dementia, and even autism.

According to sources cited by the authors, it is estimated that there are 5,300,000 Americans living with TBI-related disabilities. The annual economic cost is estimated to be between $60 and $76.5 billion. It is estimated that 15–40% of soldiers returning from Iraq and Afghanistan as part of Operation Enduring Freedom/Operation Iraqi Freedom (OEF/OIF) report at least one TBI. And within the past 10 years, the diagnosis of concussion in high school sports has increased annually by 16.5%.

The research was supported by the U.S. Department of Veterans Affairs, National Institutes of Health, American Medical Society for Sports Medicine, and American College of Sports Medicine-American Medical Society for Sports Medicine Foundation.

22:59 Posted in Brain training & cognitive enhancement, Cybertherapy | Permalink | Comments (0)

Mar 19, 2015

EEG-Powered Glasses Turn Dark to Help Keep Focus

Via Medgadget

Keeping mental focus while working, studying, or driving can be a serious challenge. A new product looking for funding on Kickstarter may help users maintain focus and train the brain to keep the mind from wandering. The Narbis device is a combination of an EEG sensor and a pair of glasses whose lenses can go from transparent to opaque.

The user puts on the glasses and adjusts the dry EEG electrodes to make contact with the scalp. The EEG component continuously monitors brainwave activity, noticing when the user starts to drift off mentally. When that happens, the glasses fade to darkness and the wearer is effectively forced to snap back to attention. Once the EEG recognizes fresh activity within the brain, the glasses immediately clear, letting the wearer get back to the task.
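The feedback loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Narbis implementation: the post does not say how the device measures attention, so the normalized "focus score" between 0 and 1, the `threshold` value and the function name `lens_states` are all hypothetical.

```python
from typing import Iterable, List


def lens_states(focus_scores: Iterable[float], threshold: float = 0.5) -> List[str]:
    """Map a stream of focus scores to lens states.

    Assumption: each score is a hypothetical attention estimate in [0, 1]
    derived from the EEG signal; the real device's metric is not described
    in the post.
    """
    states = []
    for score in focus_scores:
        # Darken the lenses while the score is below the threshold,
        # and clear them again as soon as it recovers.
        states.append("clear" if score >= threshold else "dark")
    return states


if __name__ == "__main__":
    # The wearer drifts off in the middle of the session, then refocuses.
    print(lens_states([0.8, 0.6, 0.4, 0.3, 0.7]))
```

A real system would presumably smooth the signal over time and fade the lenses gradually rather than switching on a single sample; the hard threshold here just keeps the idea visible.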

The team behind the Narbis believes that a couple of sessions per week of wearing the device can help improve mental focus even when not using the system. Here’s their promo looking to fund the manufacturing of the device on Kickstarter.

23:35 | Permalink | Comments (0)

The neuroscience of mindfulness meditation

The neuroscience of mindfulness meditation.

Nat Rev Neurosci. 2015 Mar 18;

Authors: Tang YY, Hölzel BK, Posner MI

Abstract. Research over the past two decades broadly supports the claim that mindfulness meditation - practiced widely for the reduction of stress and promotion of health - exerts beneficial effects on physical and mental health, and cognitive performance. Recent neuroimaging studies have begun to uncover the brain areas and networks that mediate these positive effects. However, the underlying neural mechanisms remain unclear, and it is apparent that more methodologically rigorous studies are required if we are to gain a full understanding of the neuronal and molecular bases of the changes in the brain that accompany mindfulness meditation.

23:31 Posted in Meditation & brain | Permalink | Comments (0)

Ultrasound treats Alzheimer’s disease, restoring memory in mice

Scanning ultrasound treatment of Alzheimer’s disease in mouse model (credit: Gerhard Leinenga and Jürgen Götz/Science Translational Medicine)

University of Queensland researchers have discovered that non-invasive scanning ultrasound (SUS) technology can be used to treat Alzheimer’s disease in mice and restore memory by breaking apart the neurotoxic Amyloid-β (Aβ) peptide plaques that result in memory loss and cognitive decline.

The method can temporarily open the blood-brain barrier (BBB), activating microglial cells that digest and remove the amyloid plaques that destroy brain synapses.

Treated AD mice displayed improved performance on three memory tasks: the Y-maze, the novel object recognition test, and the active place avoidance task.

The next step is to scale the research in higher animal models ahead of human clinical trials, which are at least two years away. In their paper in the journal Science Translational Medicine, the researchers note possible hurdles. For example, the human brain is much larger, and it’s also thicker than that of a mouse, which may require stronger energy that could cause tissue damage. And it will be necessary to avoid excessive immune activation.

The researchers also plan to see whether this method clears toxic protein aggregates in other neurodegenerative diseases and restores executive functions, including decision-making and motor control. It could also be used as a vehicle for drug or gene delivery, since the BBB remains the major obstacle for the uptake by brain tissue of therapeutic agents.

Previous research in treating Alzheimer’s with ultrasound used magnetic resonance imaging (MRI) to focus the ultrasonic energy to open the BBB for more effective delivery of drugs to the brain.

23:27 Posted in Neurotechnology & neuroinformatics | Permalink | Comments (0)