Oct 13, 2005

Free Survey - Human Movement Tracking and Stroke Rehab

This free-downloadable report by Huiyu Zhou and Huosheng Hu from Essex University (UK) reviews recent progress in human movement tracking systems in general, and stroke rehabilitation in particular.

It is a wonderful resource for all researchers interested in technology-enhanced rehabilitation after stroke.

17:20 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality, cybertherapy

Sep 29, 2005

Virtual reality helps stroke patients learn to drive again

The Sept. 27 issue of Neurology reports the results of a study that investigated the effect of simulator-based training on driving after stroke. The research involved 83 subacute stroke patients randomly assigned to either simulator-based training or a control group. All patients were then evaluated in off-road and on-road performance tests to assess their driving ability after training.

Results showed that virtual reality training improved driving ability, especially for well-educated and less disabled stroke patients. However, the authors warn that the findings may have been affected by the large number of dropouts and by the possibility of some neurologic recovery unrelated to training.

More to explore

A. E. Akinwuntan, W. De Weerdt, H. Feys, J. Pauwels, G. Baten, P. Arno, and C. Kiekens, Effect of simulator training on driving after stroke: A randomized controlled trial, Neurology 2005 65: 843-850

Sung H. You, et al., Virtual Reality–Induced Cortical Reorganization and Associated Locomotor Recovery in Chronic Stroke: An Experimenter-Blind Randomized Study, Stroke, Jun 2005; 36: 1166-1171.

Sveistrup, H., Motor rehabilitation using virtual reality, Journal of NeuroEngineering and Rehabilitation 2004, 1:10 (download full text)

16:25 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality

Sep 23, 2005

The Virtusphere

Via Engadget

VirtuSphere is a 360-degree VR environment that allows for moving in any direction. The device consists of a large hollow sphere, placed on a special platform that allows the sphere to rotate in any direction as the user moves within it. Sensors under the sphere provide subject speed and direction to the computer running the simulation and users can interact with virtual objects using a special manipulator.
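As a rough illustration of how speed and direction readings could drive a simulation, here is a minimal dead-reckoning sketch in Python. It is not VirtuSphere's actual software: the `Pose` type, the `step` function, the sampling interval and the sample values are all hypothetical, and the heading is assumed to be measured clockwise from north.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0  # metres east in the virtual world
    y: float = 0.0  # metres north in the virtual world


def step(pose: Pose, speed_m_s: float, heading_deg: float, dt_s: float) -> Pose:
    """Advance the virtual position by one sensor sample (dead reckoning)."""
    heading = math.radians(heading_deg)
    return Pose(
        x=pose.x + speed_m_s * math.sin(heading) * dt_s,
        y=pose.y + speed_m_s * math.cos(heading) * dt_s,
    )


# Hypothetical sensor samples: (speed in m/s, heading in degrees from north).
samples = [(1.0, 0.0), (1.0, 0.0), (1.0, 90.0)]

pose = Pose()
for speed, heading in samples:
    pose = step(pose, speed, heading, dt_s=0.5)

print(f"virtual position: x={pose.x:.2f} m, y={pose.y:.2f} m")
```

The simulation would then redraw the scene from the updated position, so the user's view changes as the sphere rolls.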

According to Virtusphere staff, the device has several potential applications in the field of training/simulations, health/rehabilitation, gaming and more. The device cost should range between $50K and $100K.


13:30 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality

Sep 12, 2005

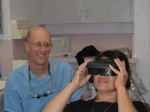

Virtual goggles can help alleviate patients' anxiety during dental procedures

Via Wired

Dentists are starting to use eyeglass systems for easing patients' anxiety and pain during dental procedures. Introducing a distraction has long been known to help reduce pain for some people during surgical operations.

Because virtual reality is a uniquely effective new form of distraction, it makes an ideal candidate for pain control.

More to explore

Virtual reality in pain therapy

15:15 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality

Aug 03, 2005

You can walk and there is no limit

Via the Presence-L Listserv

(From The Seattle Times)

Christina Siderius, Monday, July 25, 2005

Walk in cyberspace: With virtual-reality software, users feel like they're moving even if they're actually sitting on the couch. VirtuSphere wanted to create a device that would allow the user to move limitlessly while using such software — without bumping into walls or falling over. The solution: VirtuSphere, founded in November, created a giant hollow ball that works like a spinning hamster wheel.

Wearing a head-mounted display, a user can step inside the 8.5-foot-tall sphere and experience physical movements while the mind is in cyberspace. "The purpose is to enable natural motion," said Palladin. "You can walk and there is no limit."

How it works: As the user moves, the ball rolls, sending coordinates to a computer. The computer evaluates the information and relays it back to the user's display in the form of a changed view. The sphere, which costs between $50,000 and $100,000, can be made compatible with any computer-based simulations. READ THE FULL ARTICLE

19:20 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality

Jul 21, 2005

VR is going to be cheap

Virtual Reality Therapy (VRT) offers treatment using simulated real life experience provided in a confidential setting. VRT has shown its effectiveness in several common phobias, including claustrophobia, fear of heights, fear of spiders, fear of driving, fear of flying, and fear of public speaking. As a person goes through the VR program, the therapist teaches the client relaxation techniques to deal with the symptoms provoked by exposure to the virtual environment.

However, until recently, the use of VRT was severely limited by the lack of inexpensive immersive equipment. That could change in the fourth quarter of this year, when 3001AD, a company that builds high-end VR machines for large-scale entertainment centers, will introduce a headset that can be used in combination with the Xbox and PlayStation game consoles and several blockbuster game titles.

The system, called "Trimersion" and recently demonstrated at the E3 trade show, promises 360-degree head tracking, uses a binocular video subsystem and delivers QVGA resolution (320 x 240). Mark Rifkin, senior vice president of 3001AD, suggested that retail pricing will come in just under $500.

More to explore:

Cybertherapy.info the reference site for VR therapy

Virtual reality in behavioral neuroscience and beyond, Nature Neuroscience, 1089-1092, Oct. 2002

Virtual Reality Therapy, Harvard Mental Health Letter, on-line, April 2003

Fake worlds offer real medicine, Journal of the American Medical Association, 290 (10), 2107-2109, 22/29 Oct 2003

15:15 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality

Jul 16, 2005

Video games for health

Video games have often been criticized for their negative psychological effects on children. However, a growing number of studies show that video game playing can be useful in health care. In an editorial published in the authoritative British Medical Journal, Mark Griffiths, professor of gambling studies, describes how video games are used in health care. One innovative application is their use in pain management. The attention needed to play can distract the player from the sensation of pain, a strategy that has been reported and evaluated among paediatric patients.

Video games have also been used as a form of physiotherapy or occupational therapy in many different groups of people. Such games focus attention away from potential discomfort and, unlike more traditional therapeutic activities, they do not rely on passive movements and sometimes painful manipulation of the limbs.

Read the full-text article published in BMJ (2005;331:122-123)

12:50 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality therapy

Apr 08, 2005

!!! Groundbreaking virtual reality SONY patent - A step closer to the Matrix !!!

07 April 2005

Exclusive from New Scientist Print Edition

Jenny Hogan, Barry Fox

Imagine movies and computer games in which you get to smell, taste and perhaps even feel things. That's the tantalising prospect raised by a patent on a device for transmitting sensory data directly into the human brain - granted to none other than the entertainment giant Sony.

The technique suggested in the patent is entirely non-invasive. It describes a device that fires pulses of ultrasound at the head to modify firing patterns in targeted parts of the brain, creating "sensory experiences" ranging from moving images to tastes and sounds. This could give blind or deaf people the chance to see or hear, the patent claims.

While brain implants are becoming increasingly sophisticated, the only non-invasive ways of manipulating the brain remain crude. A technique known as transcranial magnetic stimulation can activate nerves by using rapidly changing magnetic fields to induce currents in brain tissue. However, magnetic fields cannot be finely focused on small groups of brain cells, whereas ultrasound could be.

If the method described by Sony really does work, it could have all sorts of uses in research and medicine, even if it is not capable of evoking sensory experiences detailed enough for the entertainment purposes envisaged in the patent.

Details are sparse, and Sony declined New Scientist's request for an interview with the inventor, who is based in its offices in San Diego, California. However, independent experts are not dismissing the idea out of hand. "I looked at it and found it plausible," says Niels Birbaumer, a pioneering neuroscientist at the University of Tübingen in Germany who has created devices that let people control devices via brain waves.

The application contains references to two scientific papers presenting research that could underpin the device. One, in an echo of Galvani's classic 18th-century experiments on frogs' legs that proved electricity can trigger nerve impulses, showed that certain kinds of ultrasound pulses can affect the excitability of nerves from a frog's leg. The author, Richard Mihran of the University of Colorado, Boulder, had no knowledge of the patent until New Scientist contacted him, but says he would be concerned about the proposed method's long-term safety.

Sony first submitted a patent application for the ultrasound method in 2000, which was granted in March 2003. Since then Sony has filed a series of continuations, most recently in December 2004 (US 2004/267118).

Elizabeth Boukis, spokeswoman for Sony Electronics, says the work is speculative. "There were not any experiments done," she says. "This particular patent was a prophetic invention. It was based on an inspiration that this may someday be the direction that technology will take us."

More to explore

Virtual Reality

Transcranial Magnetic Stimulation

Matrix: the movie

Press releases

Australian IT - Sony patents 'real' Matrix

News24 - Sony eyes 'real-life Matrix'

Forbes.com - Sony takes first step to patent 'real-life Matrix'

Dawn International - Real life Matrix in the making

Timesonline - Sony takes 3-D cinema directly to the brain

Australian Financial Review - First step to patent 'real-life Matrix'

14:25 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality

Apr 06, 2005

Remote healthcare monitoring not so distant

The IST programme-funded E-Care project developed a comprehensive monitoring system to capture, transmit and distribute vital health data to doctors, carers and family. Pilot tests of the E-Care system indicate that doctors, nurses, patients and their families found E-Care reliable, simple to use and an effective method to improve the quality of care while reducing costs.

E-Care’s system dynamically produces data depending on who accesses a patient's record. A doctor will see all the health information, a system or medical administrator will see data relevant to them, while patients, their friends or family will see another set of data, all coming from the one file.

The system will monitor patients with chronic, or long-term, illnesses such as diabetes or cardiovascular disease, and patients discharged after an operation or serious medical crises, such as stroke victims.

It can acquire vital information about a patient who lives far away from medical support, and it can alert medical staff if there is a dangerous change in a patient's status. With E-Care’s system doctors spend less time going to see patients and more time treating them. It also means real-time monitoring without high staff or capital costs.

"Citizens with long-term illnesses as well as those who are in post-surgery state, or predisposed to illness, need monitoring of their health until their condition becomes stable," says E-Care project director Mariella Devoti.

"They, as well as their family and friends, also need an efficient way to collaborate with their doctor and get informed about their state. Until now, monitoring of the health condition of such people could only be accomplished by prearranged visits from a doctor, or by visits to the local hospital for a check-up. However, this is an inefficient solution, as well as costly, as these visits would scarcely be on a daily basis."

"[By] increasing the demand for long-term care, often at home, there are fewer facilities and medical staff available per patient," says Devoti. "This situation represents an important challenge for our society and it is urgent to provide consistent solutions to avoid a deep deterioration of the quality of life of millions of people."

Building a state-of-the-art remote monitoring system

Ten partners from Italy, Greece, the UK, Germany and Cyprus joined forces to develop a remote monitoring system that could take vital data from patients, automatically add the data to the patient's chart and render the information accessible via computer for analysis at a hospital or clinic.

"E-Care makes best use of state-of-the-art know-how from a wide spectrum of disciplines, ranging from medical devices and software to workflow management system, [which brings] experience from business modelling," Devoti says.

The system includes nine components deployed across two primary elements: patient monitoring and the central system.

On the patient side there's a wireless intelligent sensor network (WISE), bio-medical sensors and a radio terminal. WISE consists of a series of monitors that track signs like activity, temperature, pulse, blood pressure and glucose or other personal data like weight, pain measurement and drug conformance. Data collected by the sensors are sent to the transmitter that sends them to the central system.

But the system can work two ways. SMS messages are sent to the drug conformance device to remind patients when they need to take medication. Patients send a confirmation once they have taken the drugs.

The central system includes a medical data manager (MDM), E-Care repository, collaboration module, workflow system, security system and user Web applications.

The MDM automatically checks patient data against the patient's record and any doctor's notes. If there is a disturbing change in the patient's vital signs, for example high glucose levels in a diabetic, an alarm is sent directly to the patient's physician. This provides peace of mind for patient and family and ensures a doctor or medics can respond rapidly to any problems that arise. Similarly, the MDM can alert paramedical staff or a doctor if patient data fails to arrive when expected.
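The two kinds of alert described above (an out-of-range vital sign and data that fails to arrive on time) can be sketched in a few lines of Python. This is only an illustration, not E-Care's implementation: the `Reading` type, the function names and the glucose limits are hypothetical, and real limits would come from the patient's record and the doctor's notes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Reading:
    patient_id: str
    kind: str          # e.g. "glucose_mg_dl"
    value: float
    taken_at: datetime


# Hypothetical limits for illustration only; not clinical guidance.
LIMITS = {"glucose_mg_dl": (70.0, 180.0)}


def check_reading(reading: Reading, limits=LIMITS) -> Optional[str]:
    """Return an alarm message if the value is outside its limits, else None."""
    low, high = limits[reading.kind]
    if reading.value < low:
        return f"{reading.patient_id}: {reading.kind} low ({reading.value})"
    if reading.value > high:
        return f"{reading.patient_id}: {reading.kind} high ({reading.value})"
    return None


def is_overdue(last_seen: datetime, now: datetime, expected_every: timedelta) -> bool:
    """True if no data has arrived within the expected reporting interval."""
    return now - last_seen > expected_every


now = datetime(2005, 4, 6, 12, 0)
reading = Reading("patient-1", "glucose_mg_dl", 240.0, now)
alarm = check_reading(reading)
if alarm:
    print("ALERT to physician:", alarm)

if is_overdue(now - timedelta(hours=7), now, expected_every=timedelta(hours=6)):
    print("ALERT to staff: expected data did not arrive")
```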

The E-Care repository stores all patient data. The collaboration module allows users to communicate using real-time synchronous messaging, audio conferencing or videoconferencing. Patients and family can confer with their doctor, or GPs or nurses can confer with a specialist.

The workflow system controls overall system processes, while user Web applications dynamically format the patient data depending on who is accessing the information and what access rights they have. Layouts for doctors, patients, system administrators, medical administrators and patient relatives, each with different information, are possible.
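One simple way to produce different layouts from a single patient file is to filter the record by the viewer's role. The Python sketch below assumes a flat record and a hand-written role table; the field names and the exact split between roles are hypothetical, apart from the point made above that a doctor sees all the health information.

```python
# A single stored record (field names are illustrative assumptions).
RECORD = {
    "name": "Patient A",
    "glucose_mg_dl": 132,
    "blood_pressure": "125/82",
    "doctor_notes": "Review dosage next week",
    "next_visit": "2005-04-20",
    "device_status": "sensor battery 80%",
}

# Hypothetical access rights: which fields each role may see.
VISIBLE_FIELDS = {
    "doctor": set(RECORD),  # the doctor sees all the health information
    "medical_admin": {"name", "next_visit"},
    "system_admin": {"device_status"},
    "patient": {"name", "glucose_mg_dl", "blood_pressure", "next_visit"},
    "relative": {"name", "next_visit"},
}


def view_for(role: str, record: dict) -> dict:
    """Return only the fields the given role is allowed to see."""
    allowed = VISIBLE_FIELDS.get(role, set())  # unknown roles see nothing
    return {key: value for key, value in record.items() if key in allowed}


for role in ("doctor", "patient", "relative"):
    print(role, "->", sorted(view_for(role, RECORD)))
```

Defaulting unknown roles to an empty view keeps the sketch on the safe side: nothing is shown unless a role has been granted access explicitly.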

Researchers sought to avoid re-inventing the wheel by using standard and widely adopted technologies where possible. Transmission is across standard telecoms protocols such as GSM, 3G, Bluetooth, radio and landline broadband. It means the system will work with any modern hospital or clinic.

Positive results from pilots

E-Care was deployed in three pilot programmes between February 2003 and February 2004 and the overall result was very positive.

"The final validation results for E-Care showed a general satisfaction among doctors and medical staff, system and medical administrators, patients and their family," says coordinator Devoti.

Users praised its practicability, reliability, effectiveness and patient acceptance. Specifically, medical staff at Aldia in Italy, one of the project partners, gave some suggestions of the possible uses of the system, for example cardiovascular disease, or patients with chronic obliterating arteriopathy.

Diabetes specialists praised the glucose monitoring system. It allows the doctor to change patient treatment based on vital sign analysis. Similarly the blood pressure monitor was particularly of interest for patients with stroke or cardiovascular disease.

With the project validation complete the partners will fine tune the system and search for commercial opportunities. Remote monitoring is not so distant.

Contact:

Mariella Devoti

coordinator@e-care-project.org

Source: Based on information from E-Care

15:55 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, telemedicine

Apr 04, 2005

Virtual reality for disabled individuals

From the "Presence" listserv

By ERIN BELL

September 23, 2004

Special to The Globe and Mail

Anyone who's used Sony's EyeToy for the PlayStation 2 console knows how much fun it can be to put yourself into a video game with the help of a camera. More advanced technology developed in Canada is being used to help people with special needs learn skills and experience things that used to be beyond their reach.

The technology comes from a partnership between integrator Xperiential Learning Solutions Inc. and Toronto developer Jestertek Inc. (formerly the Vivid Group), whose virtual reality systems have been installed in places ranging from museums and science centres to the hockey and basketball halls of fame.

The pair's Experiential Learning Product Suite is aimed at people with physical, mental or behavioural disabilities.

Xperiential's founder, Theo D'Hollander, has a son with autism and relatives who suffer from cerebral palsy and Down syndrome. "It made me realize that this whole area [of technology for people with disabilities] is almost like a generation behind, it's in the industrial age when the rest of the world is in the Internet age," he said. "Today's technology is great for things like information gathering, but it has actually created more distance between people with disabilities and the life or job opportunities they need. The answer is to use technology to help create new experiences for them."

Like Sony's EyeToy, the Experiential Learning Product Suite uses cameras to capture a person's image and project it onto a monitor or large screen, combining it in real-time with the computer-generated action. The player can participate in virtual reality scenarios such as snowboarding, soccer, boxing, racing, and even mountain climbing, controlling the action by moving parts of their body. It's there the similarities cease, however.

Using cameras that capture at least 30 frames a second and hardware much more powerful than a game console, the suite can adapt to a player's physical characteristics and abilities.

Sensitivity, speed and range of motion are adjustable, allowing people to control programs with tiny gestures -- from a shrug to a toe-twitch -- letting a bedridden person see what it's like to ride a horse, or someone without the use of their hands play a virtual musical instrument.

The profile for each user can be fine-tuned as their mobility or skills improve. The partners are also working on a way to allow people to compete or collaborate on-line.
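To make the idea of a per-user profile concrete, the following Python sketch scales a detected movement by the user's own range of motion, so that a tiny gesture from one player and a large gesture from another produce the same control value. It is an assumption-laden illustration, not the suite's code: `MotionProfile`, `to_control` and the pixel values are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class MotionProfile:
    range_px: float            # largest comfortable movement, in camera pixels
    sensitivity: float = 1.0   # extra gain applied after normalisation
    dead_zone_px: float = 0.0  # movements smaller than this are ignored


def to_control(displacement_px: float, profile: MotionProfile) -> float:
    """Map a detected movement to a control value between -1.0 and 1.0."""
    usable = profile.range_px - profile.dead_zone_px
    if usable <= 0:
        raise ValueError("range_px must be larger than dead_zone_px")
    magnitude = abs(displacement_px)
    if magnitude <= profile.dead_zone_px:
        return 0.0
    scaled = (magnitude - profile.dead_zone_px) / usable * profile.sensitivity
    return math.copysign(min(scaled, 1.0), displacement_px)


toe_twitch = MotionProfile(range_px=10.0)   # very limited range of motion
full_range = MotionProfile(range_px=200.0)  # full range of motion

# Half of each player's own range yields the same control value.
print(to_control(5.0, toe_twitch), to_control(100.0, full_range))
```

As a user's mobility improves, only the profile values would need updating; the game logic that consumes the control value stays the same.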

"It's very much a motivational experience for the kids or adults with disabilities who use the system," Jestertek president Vincent John Vincent said.

"Children with cognitive disabilities have short attention spans,"Mr. D'Hollander added. "With these programs, they're engaged by the games and music. There's something enticing about seeing themselves on television, and the idea that they're inside a computer game."

A study by the University of Ottawa is looking at ways to use the suite to make children's home-exercise rehabilitation programs more engaging.

"This gives people, especially those with some cognitive impairments or disabilities, the opportunity to have an experience that could not be possible otherwise," explained Dr. Heidi Sveistrup, associate professor in the university's School of Rehabilitation Sciences.

"For example, they could play volleyball even though they're in a wheelchair, just by moving their fingers. The environment can be tweaked to allow someone with a very limited range of motion to play against someone who has a full range of motion. You put them on a level playing field, which you can't do in real life very easily."

In other cases, the suite is used to teach life skills. There are modules that can train people to sort a load of laundry, teach basic traffic safety, or show how to serve customers in a doughnut shop.

The system can be a social behaviour coach, too, Mr. D'Hollander said. "Having a child with autism go to a family gathering at Christmas or go into a crowded mall is a big issue, because the initial encounter is so intense they can't handle it. We can create a tape of family settings or a mall, and allow children to get used to it by interacting with [the virtual crowd] before they encounter the real thing, helping them over that social hurdle."

A site licence for the Experiential Learning Product Suite is $5,600 (including hardware for a single user and several dozen applications), and systems for additional users can be purchased for around $750 each. There's also a basic unit that sells for around $400, including about a dozen games, to deliver extra entertainment and exercise-related programs in a home setting.

Over the past several months, Xperiential has sold about 30 site licences to community living homes, rehabilitation centres and school boards across Ontario, as well as to customers in the United States and Europe, Mr. D'Hollander said.

A unit was recently installed at Community Living Oakville in Oakville, Ont. "We use it three times a week, and it's awesome," day service worker Kelleigh Melito said. "They love the dancing, racing and snowboarding. Because of our location, they can't get out much to go for walks, because there are no sidewalks; this is how they get their exercise."

Don Seymour, executive director of developmental services for Lambton County, Ont., said he hopes to use the virtual reality units in all the special needs homes under his jurisdiction.

"I watched a fellow we support who is in a wheelchair get in front of the camera and look at the screen, and all of a sudden realize he was in a racecar," Mr. Seymour said. "All he had to do was move his shoulders to race this car around a track. For a person who has limited mobility with their arms or legs, to be able to steer a racecar on a big screen was incredible."

"You hear about this stuff a dozen times in a year, and then to actually find something that has immediate flexibility to our folks is quite something," he added.

17:50 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality

Mar 23, 2005

VR for PTSD rehabilitation in Israel

From Israel21c, "A focus beyond conflict"

Watching it - in three dimensions and full sound while wearing a head-mounted display helmet - may help hundreds of Israelis who have witnessed real terror attacks overcome post-traumatic stress disorder (PTSD), and is the basis of a new therapy for treating particularly resistant cases of PTSD.

The treatment is just one of dozens of novel applications of virtual reality (VR) technology demonstrated recently at the University of Haifa during the Third VR Symposium. Weiss, who brought together many of the world's leading VR wizards and is herself involved in several cutting-edge VR applications, is a strictly observant Israeli who lives in the ultra-Orthodox neighborhood of B'nai Brak.

"It's not exactly normal," admits Weiss to ISRAEL21c, laughing at the contrast between her traditional way of life and the 'Brave New World' that characterizes her professional pursuits.

But Weiss sees no contradiction between the two. "I have always been interested in different technologies and my goal has always been to help people," says the researcher, whose library has volumes of Psalms and kinesiology textbooks side by side.

An occupational therapist by training, Weiss grew up in Canada and taught at McGill University in Montreal for many years, before immigrating to Israel in 1991 with her Israel-born husband. For the last four years she has been a researcher and lecturer at the University of Haifa, and a member of its newly-established Laboratory for Innovations in Rehabilitation Technology.

Weiss's interest in VR was piqued when she read an article by one of the pioneers in the field, Prof. Albert 'Skip' Rizzo of the University of Southern California, nearly a decade ago. That ultimately led to a close collaboration with Rizzo, who also attended this month's symposium.

What interests her about the field? "Look at this," says Weiss, showing a videotape of a woman with a spinal cord injury doing traditional physiotherapy. The therapist hands her a plastic ring which she must grasp without losing her balance - then another ring, and another, and another. "Let's face it. It's very static and very boring."

Now she shows a videotape of another patient who is also learning to balance himself - only he is watching himself on a giant screen, against a breath-taking mountain backdrop, swatting at balls in the sky. Every ball he hits turns into a colourful bird. The scene is virtual, but the man's movements - he is leaping and swatting with increasing determination - are very real.

"It's interesting and motivating," explains Weiss. "I have yet to meet a patient - of any age - who didn't like it. So it's very effective." (In a newer version, she notes excitedly, patients will wear a glove which vibrates whenever they make contact with a virtual ball - further increasing the sense of realness.)

The symposium Weiss organized, which brought leading VR experts from the US, Canada, Europe, Japan and Israel, to Haifa showed the dizzying range of new VR technologies dedicated to health and rehabilitation - from a robotic dog, who can be a reliable companion for the elderly - "no need to feed him or take him for walks," noted the researcher who demonstrated the small, black, yelping Sony invention - to 3D interactive games that could some day be used for early diagnoses of Alzheimer's disease, treatment of attention deficit disorder, and rehabilitation of patients who have suffered central nervous system injuries.

"Virtual reality has completely revolutionized the field of occupational therapy," says Weiss, who is personally involved in several innovative VR projects, including the simulated bus bombing program designed to treat Israelis suffering from severe post-traumatic stress.

That program - developed together with Dr. Naomi Josman, Prof. Eli Somer and Ayelet Reisberg, all of the University of Haifa, as well as with American researchers - is designed to expose patients in a controlled manner to the traumatic incident which they are often unable to remember, but which has a powerful and debilitating effect on their lives.

The realistic rendering of the bus bombing triggers the patient's memories - the first vital step on the path to overcoming trauma. (The simulation does not include all the gruesome details of the attack, but rather just enough to help the patient recall what happened.)

It was Josman who first came up with the idea of using such a treatment in Israel. She was attending a conference in the United States when she saw how University of Washington Prof. Hunter Hoffman had applied VR to successfully treat Americans suffering from PTSD following the 9/11 attack on the Twin Towers.

Similar programs have also been used recently to help American veterans traumatized by their tour of duty in Iraq, and even Vietnam veterans for whom no other treatment has proven effective.

Through close collaboration with Hoffman, the U. of Haifa team developed an Israeli version of the program which is now being used to treat the first few patients.

"If our pilot study is effective, we will launch a full-scale clinical trial," says Weiss, "and hopefully we will be able to provide a solution for those PTSD patients who have been resistant to more traditional cognitive therapy."

In another application of VR technology, Weiss and U. of Haifa colleagues have developed a program to help stroke victims relearn the basic skills required to shop on their own. The patient composes a grocery list and makes his or her way through a 'virtual supermarket,' seeking the right products, pulling them off the shelves and into a shopping cart, while announcements of sales are broadcast on the loudspeaker system.

"It's the first such program designed to improve both cognitive and motor skills of stroke victims," she notes.

Last week, the American Occupational Therapy Foundation (AOTF) invited Weiss to join its Academy of Research, the highest scholarly honor that the AOTF confers.

"Your work clearly helps to move the profession ahead, and demonstrates powerful evidence of the importance of assistive technology in helping persons with disabilities participate in the occupations of their choice, while improving the quality of their lives," the AOTF wrote in its letter to Weiss.

For Weiss, virtual reality is not only the focus of research, but a way of life - at least in her work. She communicates with her colleagues around the world by tele-conference and, of course, email - and notes that she has never even met her close collaborator Hoffman even though they have been communicating several times a week for years. She also taught an entire university course last semester - without ever attending a lecture hall. Instead, she sat in the comfort of her B'nai Brak home, wearing a headset and microphone to deliver a weekly videoconferenced lesson on assistive technology to students who sat in their own homes.

"They could see a video of me, and whenever a student wanted to speak I would see the icon of a hand being raised. We even had guest lecturers from abroad. The students really appreciated not having to come to the university late at night for the course," says Weiss, who was pleased to be able to - once again - harness technology to help make people's lives a little easier.

10:30 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality, cybertherapy

Mar 22, 2005

Virtual reality games alleviate pain in children

From BioMed Central

Immersion in a virtual world of monsters and aliens helps children feel less pain during the treatment of severe injuries such as burns, according to a preliminary study by Karen Grimmer and colleagues from the Women's and Children's Hospital in Adelaide, Australia.

A virtual reality game is a computer game especially designed to completely immerse the user in a simulated environment. Unlike other computer games, the game is played wearing a special headset with two small computer screens and a special sensor, which allows the player to interact with the game and feel a part of its almost dreamlike world. "Owing to its ability to allow the user to immerse and interact with the artificial environment that he/she can visualize, the game-playing experience is engrossing", explain the authors.

Children with severe burns suffer great pain and emotional trauma, especially during the cleaning and dressing of their wounds. They are usually given strong painkiller drugs, muscle relaxants or sedatives, but these are often not enough to completely alleviate pain and anxiety. These medications also have side effects such as drowsiness, nausea or lack of energy.

Grimmer and colleagues asked seven children, aged five to eighteen, to play a virtual reality game while their dressing was being changed. The children were also given the usual amount of painkillers. The researchers assessed the pain the children felt when they were playing and then compared it to the amount of pain felt when painkillers were used alone.

To measure the intensity of the pain, the team used the Faces Scale, which attributes a score from 0 to 10, wherein 10 represents maximum pain, to a facial manifestation of pain. For younger children they used 5 different faces representing no pain to very bad pain. The researchers also interviewed the nurses and parents present during the dressing change.

The average pain score when the children received painkillers alone was 4.1/10. It decreased to 1.3/10 when the children had played a game and been given painkillers. Because the sample size was so small the researchers analysed their results per child, and they found that all but one child lost at least 2 points on the scale when they were playing the game. The parents and nurses confirmed these results and said that the children clearly showed fewer signs of pain when they played the game.

"We found that virtual reality coupled with analgesics was significantly more effective in reducing pain responses in children than analgesic only" conclude the authors.

This is only a preliminary study, but the researchers are hopeful. They propose to test virtual reality on more subjects, possibly with games appropriate to each age group, in the hope that it could one day greatly reduce, if not completely replace, the use of painkillers.

This press release is based on the article:

The efficacy of playing a virtual reality game in modulating pain for children with acute burn injuries: A single blinded randomized controlled trial Debashish Das, Karen Grimmer, Tony Sparnon, Sarah McRae, Bruce Thomas BMC Pediatrics 2005 4:27 (3 March 2005)

19:30 Posted in Cybertherapy | Permalink | Comments (0) | Tags: virtual reality, cybertherapy

Mar 18, 2005

Does medical technology really help doctors?

From WIRED

By Kristen Philipkoski

Nevertheless, the Centers for Medicare & Medicaid Services, or CMS, which provides health insurance to seniors and the poor, is giving a few technologies a shot at improving the government organization's dismal track record managing patients with chronic conditions.

Medicare's history of not adequately covering preventive health services has created a culture of patients waiting until things get really bad before they'll head to the hospital. Such acute care is far more expensive than the ongoing maintenance that can fend off emergencies in the first place.

As part of the Medicare Modernization Act of 2003, CMS is sponsoring nine pilot projects involving 180,000 patients and using technologies administrators hope will improve preventive care. Officials anticipate that the program could, for example, help a diabetes patient get to the doctor before she requires a leg amputation, or allow a doctor to begin a new prescription or diet before his patient suffers heart failure.

How expensive is chronic care? Two-thirds of Medicare money ($236.5 billion in 2001) is spent on just 20 percent of those enrolled, according to a 2002 report (.pdf) from Partnership for Solutions, a group that studies chronic health conditions. Everyone in that 20 percent is coping with five or more chronic conditions. The first of the baby boomers officially become seniors in 2011, and it is clear Medicare needs help, particularly in its chronic-care approach.

"This needs to happen anyway," said Sandy Foote, senior adviser for the Chronic Care Improvement Program at CMS.

CMS has chosen patients with chronic-care needs to participate in pilot projects that will implement technologies ranging from automated phone reminder systems and interactive in-home devices that ask patients questions about their health to hospital technologies for physicians.

CMS is paying health-management organizations like McKesson and Health Dialog to deliver technologies to those patients. But if after three years Medicare doesn't see a substantial improvement in health benefits and costs compared to a randomly selected group of about 100,000 control patients, CMS will ask for an unspecified portion of the fees back.

"We're not going to lock into a technology that may very well be outdated soon," Foote said. "We're paying organizations to help people in very individualized, very personalized ways to reduce their health risks, and they can keep refining how they do that."

That means, for example, McKesson is also not bound by the technologies it has chosen to implement. If something looks like it's not benefiting -- or actually is hindering -- patients or physicians, McKesson or any of the other management organizations can nix a technology for one that might work better.

The program sets up an exceptional potential for change and innovation in a hefty, bureaucratic government organization. And recent studies showing the failure of some medical technologies suggest that flexibility might be a good thing.

Two studies published in the March 9 issue of the Journal of the American Medical Association found that technologies designed to make physicians' jobs easier sometimes didn't, and in some cases the tools actually made the doctors' work more difficult.

"The system should not control the process of doing medicine but respond to how the hospital works," said the University of Pennsylvania's Dr. Ross Koppel, the lead author of the JAMA study. "Very often the software designers expect the users to wrap themselves like pretzels around the software, rather than making it respond to the hospital's needs."

18:05 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, telemedicine

Feb 28, 2005

Virtual-Reality Movies Put a New Face on "User-Friendly"

FROM THE PRESENCE-L LISTSERV: (From UB News, the news service of the University at Buffalo)

Virtual-Reality Movies Put a New Face on "User-Friendly"

Intense psychological drama requires computer agents to be "more human"

Release date: Thursday, February 24, 2005

These improvisational computer agents are expected to influence the development of electronic devices of tomorrow, making them much more user-friendly because they will be able to respond to the idiosyncratic needs of each user.

The researchers' virtual-reality drama, The Trial The Trail, is a brand new type of dramatic entertainment, where instead of identifying with the protagonist, the audience becomes the protagonist.

The multidisciplinary team formed two years ago when Josephine Anstey, assistant professor in the Department of Media Study in the UB College of Arts and Sciences, was looking for ways to make VR dramas more believable. At the same time, Stuart C. Shapiro, Ph.D., professor in the Department of Computer Science and Engineering in the UB School of Engineering and Applied Sciences, was seeking applications to challenge the computerized cognitive agent called CASSIE that he and his colleagues had developed.

"We started thinking, 'What happens if you put a powerful artificial intelligence system -- which is what Stu has developed -- together with drama and stories?'" recalls Anstey. "The potential seems endless. You can get to the point where you have virtual characters that can believably respond to humans in real-time."

Instead of sitting in a darkened theater and passively watching a story unfold, human audience members in this interactive drama stand up, don gloves and headgear and become immersed virtually in the world of the characters on the screen.

Instead of using a joystick to compete against virtual characters as in a video game, the actions and utterances of human users determine how the virtual characters respond, based on an ever-growing "library" of actions and verbal communications with which the VR characters are endowed.

"Because of these attributes, we are creating a more intense psychological drama for users," said Anstey. "We are creating characters that are more similar to those you'd find in a novel."

The UB team fully explored these concepts in a paper presented last year entitled "Psycho-Drama in VR."

By necessity, added Shapiro, those characters are computational agents that must be capable of behaving in sophisticated and very human-like ways, attributes that also can help take "user-friendliness" for computers and other electronic devices to new heights.

"This is a step in the design and implementation of computer agents that are aware of themselves and their actions, as well as the environment they are in," he explained, "so this work is relevant to any application in which people interact with a device or system."

Shapiro referred to the team's approach toward its virtual characters as "cognitively realistic."

"We use a kind of computational 'self-perception' so that just the way that hearing people can pace their speech more effectively than deaf people, here's an agent that 'hears' computationally and can respond to what's happening," he explained. "The agent has some perception of itself and some level of self-awareness."

While other computer scientists are exploring multiple agent systems, he continued, this project is more demanding because the agents in the drama must be able to "perceive" themselves and then respond to the user.

So, as the human user proceeds through the drama, his or her actions are being recorded computationally over the Internet, interpreted psychologically and used to prompt the responses by the virtual characters.

In a sense, the computational agents in the drama must improvise around the human user, who is acting spontaneously without a script.

This, Anstey says, is a grand departure from the way that humans experience other types of computational dramas, such as video games.

"Very few computer games' characters have psychological lives that you can respond to," she says.

Because of this, the drama is different every time, a factor that the researchers say makes it a more challenging and exciting type of entertainment, and also a more computationally demanding one.

In the UB drama, the main VR character is a dramatic representation of CASSIE, the cognitive agent, based on SNePS, Semantic Network Processing System, a knowledge-representation system developed over the past several decades by Shapiro and William Rapaport, Ph.D., UB associate professor of computer science, and scores of UB graduate students.

SNePS endows a computational agent with the ability to perform reasoning tasks, make inferences and do belief revision, where it can correct itself if it obtains additional information that indicates that it was misled initially.
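To make the idea of belief revision concrete, here is a minimal Python sketch. It is not SNePS and does not use any SNePS API; the class name, the string encoding of beliefs and the "not " prefix for negation are all assumptions made for illustration. The agent draws conclusions from what it has been told, and when later information contradicts an earlier belief, the old belief and everything inferred from it drop away.

```python
class ToyBeliefBase:
    """Toy belief store (hypothetical, not SNePS) illustrating belief revision."""

    def __init__(self):
        self.facts = set()   # beliefs the agent was told directly
        self.rules = []      # (premise, conclusion) pairs used for inference

    def add_rule(self, premise, conclusion):
        self.rules.append((premise, conclusion))

    def tell(self, fact):
        """Add a belief; if it contradicts an earlier one, retract the earlier one."""
        negation = fact[4:] if fact.startswith("not ") else "not " + fact
        self.facts.discard(negation)
        self.facts.add(fact)

    def beliefs(self):
        """Return told facts plus everything derivable by forward chaining.

        Conclusions are recomputed on every call, so anything that depended
        on a retracted fact disappears automatically.
        """
        derived = set(self.facts)
        changed = True
        while changed:
            changed = False
            for premise, conclusion in self.rules:
                if premise in derived and conclusion not in derived:
                    derived.add(conclusion)
                    changed = True
        return derived
```

An agent told "user_is_friendly" would infer whatever its rules attach to that belief; told "not user_is_friendly" afterwards, it would drop both the original belief and those inferences. A real system also has to track which beliefs support which conclusions, which this sketch sidesteps by recomputing everything.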

"Cassie is one of the few intelligent agents that can process and communicate in natural language," said Shapiro. "You can talk to her in English."

He explained that one SNePS runs for each agent in the drama, and they are learning to communicate and interfere with one another.

"For computer-based fiction to really emerge, there has to be a lot of work using intelligent agents," said Anstey. "Anybody interested in the fiction and drama of the future can't ignore this. Writers will have to build these skills, will have to, in a way, think more like computer scientists."

In addition to Anstey and Shapiro, the UB team consists of David Pape, assistant professor in the Department of Media Study in the UB College of Arts and Sciences; Anthony Ekeh, a graduate student in computational linguistics; Michael Kandefer, a doctoral candidate, and Trupti Devdas Nayak, a graduate student, both in the Department of Computer Science and Engineering, and Orkan Telhan, a graduate student in media study.

Contact: Ellen Goldbaum, goldbaum@buffalo.edu

Phone: 716-645-5000 ext 1415

Fax: 716-645-3765

16:35 Posted in Cybertherapy | Permalink | Comments (0) | Tags: virtual reality therapy

Feb 11, 2005

Cybex-Trazer VR system for neurorehabilitation

Retrieved from: the PRESENCE - Listserve

It may sound like science fiction, but it’s exactly what Bob Verdun, the new director of the Pawtucket Family YMCA, wants to achieve with a cutting-edge piece of fitness equipment called the Trazer.

The Trazer is a serious machine, using optical sensors, an electronic "beacon," and interactive, virtual reality software to facilitate exercise training, sports testing and rehabilitation -- but Verdun is more interested in its kid-appeal.

That’s because the Trazer is the fitness equivalent of the nutritious Twinkie: A virtual reality video game designed to make exercise universally accessible and, believe it or not, fun.

"There is a resistance for kids who are not good at team sports to join a school team, and these are usually the kids who need exercise the most," said Ray Giannelli, the senior vice president of research and development for Cybex International, the Massachusetts-based company that will ship three of the first production Trazers to Pawtucket this spring.

The Trazer, said Giannelli, will give sports-averse kids a fighting chance to stay in shape by putting simple, effective exercises within the familiar context of a video game.

"A lot of kids today are on the computer all day, e-mailing or chatting or playing video games," said Verdun. "This is the perfect bridge to exercise, a viable way to win the war against childhood obesity."

Here’s how it works:

Optical sensors mounted beneath a sleek, high-definition video display are trained to register the movements of an electronic beacon in a 6-foot, three-dimensional space.

When the beacon is attached to a belt worn by the user, his or her movements are mimicked in real-time by a virtual character in a virtual world.

An easy-to-navigate options menu features interactive programs for performance testing and sports training, as well as rehabilitative movement therapy, kinesthetic learning and fitness fun.

There are programs designed for the elderly to help them strengthen neglected muscles and maintain a full range of motion, and games designed to keep kids excited about physical activity.

In one game called "Trap Attack," players are required to move their virtual self across a three-dimensional chessboard, in sync with a roving red cursor.

Play the game on a basic difficulty setting, and the cursor moves relatively slowly, one square at a time. Crank up the difficulty, and trapdoors will appear, forcing you to jump over them.

Another game, "Goalie Wars," allows the player to intercept and "catch" soccer balls thrown by a virtual goalie. Once caught, a ball can be thrown back by lunging forward, and if you fake-out your polygonal opponent, you score a point.

Although optical sensors and virtual reality video games are impressive, they’re not exactly new.

The real meat and potatoes of Trazer is its ability to analyze and tabulate the data it collects. While you play "Goalie Wars" or "Trap Attack," the Trazer is measuring your reaction time, acceleration, speed, power, balance, agility, jumping height, endurance, heart rate and caloric output.

These statistics are displayed and saved after the completion of each exercise, a feature which allows serious athletes, convalescents and kids to quantify their performance and track their progress.
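The article does not say how the Trazer derives its numbers, so the following Python sketch is only a guess at the general idea: given timestamped 3D positions of a tracked beacon, a few of the listed statistics fall out of simple arithmetic. The function name, the sample format (seconds and metres, with the third coordinate as height) and the choice of metrics are assumptions for illustration.

```python
import math


def movement_stats(samples):
    """Summarise a time-ordered list of (t_seconds, x, y, z) beacon samples.

    Units are assumed to be metres, with z as height above the floor.
    This is an illustrative sketch, not the Trazer's actual method.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    distance = 0.0
    peak_speed = 0.0
    for (t0, x0, y0, z0), (t1, x1, y1, z1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        step = math.dist((x0, y0, z0), (x1, y1, z1))
        distance += step
        peak_speed = max(peak_speed, step / dt)
    duration = samples[-1][0] - samples[0][0]
    baseline_height = samples[0][3]  # assume the user starts standing still
    jump_height = max(z for _, _, _, z in samples) - baseline_height
    return {
        "distance_m": distance,
        "mean_speed_m_s": distance / duration,
        "peak_speed_m_s": peak_speed,
        "jump_height_m": jump_height,
    }
```

Saving one such summary per exercise is what would let a user compare sessions over time. Quantities such as heart rate or caloric output would need extra sensors or models that this sketch does not attempt.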

As they’re fond of saying at Cybex, Trazer has more in common with a flight simulator than an exercise machine.

The Trazer is a versatile exercise machine, but Verdun and his colleagues are hoping it will be a crowd-pleaser as well.

Although the Trazer is still in development, a video demonstration of its capabilities and a hands-on demonstration of the more conventional Cybex Arc Trainer -- a high-tech step-machine -- are expected to be the main attractions at the YMCA’s open house on Saturday.

Scheduled for Saturday, from 10 a.m. to 12 p.m., the open house is a sneak preview: a way to show current and prospective members what they can expect from the Y’s $8 million facelift -- a soup-to-nuts overhaul which will include renovated facilities, expanded program space and brand-new, state-of-the-art fitness equipment.

The ultimate goal is to make the YMCA more family-friendly and appeal to old people, young people and everyone in between. To Verdun’s thinking, what better way to bring the 115-year-old building into the realm of iPods and Instant Messages than a video game?

"We want parents and children to know that there isn’t anything they can’t do at our facility," Verdun said in a recent interview.

"Families can come here, exercise together, and share an experience that is affordable, safe and fun.

"You don’t think about moving around when you’re on the Trazer, and that’s exactly what we want. The essence of the YMCA is having fun."

15:30 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality

Jan 28, 2005

VR to treat PTSD

From Wired

With a push of a button, special effects will appear -- a mosque's call to prayer, a sandstorm, the sounds of bullets or bombs. "We can put a person in a VR headset and have them walk down the streets of Baghdad," Rizzo said. "They can ride in a Humvee, fly in a helicopter over a battle scene or drive on a desert road."

This is no video game, nor is it a training device. Rizzo and colleagues are developing a psychological tool to treat post-traumatic stress disorder, or PTSD, by bringing soldiers back to the scenes that still haunt them. A similar simulation is in the works for victims of the World Trade Center attacks.

PTSD treatment, the newest frontier in the intersection between virtual reality and mental health, is one of the hot topics this week at the 13th annual Medicine Meets Virtual Reality conference, which began Wednesday in Long Beach, California. Rizzo and others will explore plans to expand virtual reality's role in mental health by adding more elements like touch and the ability to interact with simulations. "The driving vision is a holodeck," Rizzo said. "If you look at the holodeck, and all the things people do in Star Trek, that's what we'd like to be able to do."

Powerful computers are cheaper -- the necessary machines used to cost as much as $175,000 but now the Virtual Reality Medical Center in San Diego, one of about 10 private VR mental-health clinics in the United States, picks up its hardware at Fry's Electronics. VR helmets -- which allow users to turn their heads and see things above, below and behind them in the 360-degree virtual world -- cost as little as a few thousand dollars. And perhaps most importantly, the graphics are more advanced, thanks to partnerships with video-game developers.

At the San Diego clinic, graphics designers are developing a remarkably realistic virtual world based on digital photos and audio from San Diego International Airport. Patients afraid of flying will be able to take a virtual tour of the airport, from the drop-off area through the ticket counter, metal detectors and waiting areas. The simulation is so precise that users can enter restrooms, peruse magazines at the newsstand or wander around the food court; recordings will allow the virtual PA system to offer the requisite incomprehensible announcements.

The clinic already offers a simulation of a flight. At $120 a session, patients sit in actual airplane seats and watch a simulation of a takeoff, accurate all the way down to announcements by flight attendants and pilots. At takeoff, actual airplane audio -- engines revving, landing gear retracting -- is channeled into subwoofers below the seat, providing a dead-on simulation of what a passenger feels. Even the view outside the window is based on actual digital video from a flight.

"Exposure therapy" has long been a common treatment for phobias. "It's a gradual reversal of avoidance," said psychologist Hunter Hoffman, a researcher who studies VR at the University of Washington. "You start by having them hold their ground. A lot of phobics have mental misunderstandings about what would happen if they face the thing they're afraid of. A spider phobic, they may think they're going to have a heart attack -- they think if they don't leave the room, they'll go insane. They have these unrealistic theories about what will happen."

17:25 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality, cybertherapy

Jan 20, 2005

Play scents like you play music!!

disc themes to work much like a music CD player. Just insert one of the themed discs and push play. The player then rotates through five scents on each disc, one by one with a new scent every 30 minutes.

The player shuts off automatically after all five scents have been played. You can stop the player or skip through the scent tracks at any time. Together, the Scentstories player and disc create a new-to-the-world scent experience.

Articles

"As Scentstories suggests, smell is the next big accessory for the home ... pop in a scented disc and go barefoot on the shore" The New York Times, August 5, 2004

"And now the latest entrant ... Scentstories from Procter & Gamble, an electric machine that amounts to a mini-jukebox of nice smells" USA Today, August 3, 2004

"We then sneak-sniffed the "Celebrate the Holidays" disc (the sixth disc, not on the market until October). Track 2, "baking holiday pie" was this tester's fave overall." The Chicago Tribune, August 15, 2004

"Smoky candles aren't exactly office-friendly. So what's a girl to do when she craves a Zen like moment on the job? Enter Febreze Scentstories. Discs you slip into a boom box-like gadget for two-plus hours of varying scents like lavender or vanilla. "I love that you can adjust the intensity so the smell isn't overwhelming" one relaxed tester comments" Self magazine, September 2004

14:45 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality

Jan 18, 2005

Simulating Human Touch

FROM THE PRESENCE-L LISTSERV:

From InformIT.com

Haptics: The Technology of Simulating Human Touch

Date: Jan 14, 2005

By Laurie Rowell.

When haptics research — that is, the technology of touch — moves from theory into hardware and software, it concentrates on two areas: tactile human-computer interfaces and devices that can mimic human physical touch. In both cases, that means focusing on artificial hands. Here you can delve into futuristic projects on simulating touch.

At a lunch table some time back, I listened to several of my colleagues eagerly describing the robots that would make their lives easier. Typical was the servo arm mounted on a sliding rod in the laundry room. It plucked dirty clothes from the hamper one at a time. Using information from the bar code—which new laws would insist be sewn into every label—the waldo would sort these items into a top, middle, or lower nylon sack.

As soon as a sack was full of, say, permanent press or delicates, the hand would tip the contents into the washing machine. In this way, garments could be shepherded through the entire cycle until the shirts were hung on a nearby rack, socks were matched and pulled together, and pajamas were patted smooth and stacked on the counter.

Sounds like a great idea, right? I mean, how hard could it be for a robotic hand to feel its way around a collar until it connects with a label? As it turns out, that's pretty tricky. In fact, one of the things that keeps us from those robotic servants that we feel sure are our due and virtual reality that lets us ski without risking a broken leg is our limited knowledge of touch.

We understand quite a bit about how humans see and hear, and much of that information has been tested and refined by our interaction with computers over the past several years. But if we are going to get VR that really lets us practice our parasailing, the reality that we know has to be mapped and synthesized and presented to our touch so that it is effectively "fooled." And if we want androids that can sort the laundry, they have to be able to mimic the human tactile interface.

That leads us to the study of haptics, the technology of touch.

Research that explores the tactile intersection of humans and computers can be pretty theoretical, particularly when it veers into the realm of psychophysics. Psychophysics is the branch of experimental psychology that deals with the physical environment and the reactive perception of that environment.

Researchers in the field use experiments to measure parameters such as sensory thresholds for signal perception, and so to map perceptual boundaries.

But once haptics research moves from theory into hardware and software, it concentrates on two primary areas of endeavor: tactile human-computer interfaces and devices that can mimic human physical touch, most specifically and most commonly artificial hands.

Substitute Hands

A lot of information can be conveyed by the human hand.

Watching The Quiet Man the other night, I was struck by the scene in which the priest, played by Ward Bond, insists that Victor McLaglen shake hands with John Wayne. Angrily, McLaglen complies, but clearly the pressure he exerts far exceeds the requirements of the gesture. Both men are visibly "not wincing" as the Duke drawls, "I never could stand a flabby handshake myself."

When they release and back away from each other, the audience is left flexing its collective fingers in response.

In this particular exchange, complex social messages are presented to audience members, who recognize the indicators of pressure, position, and grip without being involved in the tactile cycle. Expecting mechanical hands to do all that ours can is a tall order, so researchers have been inching that way for a long time by making them do just some of the things ours can.

Teleoperators, for example, are distance-controlled robotic arms and hands that were first built to touch things too hot for humans to handle: specifically, radioactive substances in the Manhattan Project in the 1940s.

While operators had to be shielded from radiation by a wall, the radioactive material itself had to be shaped with careful precision. A remote-controlled servo arm seemed like the perfect solution.

Accordingly, two identical mechanical arms were stationed on either side of a 1m-thick quartz window. The joints of one were connected to the joints of the other by means of pulleys and steel ribbons. In other words, whatever an operator made the arm do on one side of the barrier was echoed by the device on the other side.

These were effective and useful instruments, allowing the operator to move toxic substances from a remote location, but they were "dumb." They offered no electronic control and were not linked to a computer.

Modern researchers working on this problem would be concentrating now on devices that could "feel" the density, shape, and character of the materials that were perhaps miles away, seen only on a computer screen. This kind of teleoperator depends on a haptic interface and requires some understanding of how touch works.

Worlds in Your Hand

To build a mechanical eye—say, a camera—you need to study optics. To build a receiver, you need to understand acoustics and how these work with the human ear. Similarly, if you expect to build an artificial hand—or even a finger that perceives tactile sensation—you need to understand skin biomechanics.

At the MIT Touch Lab, where numerous projects in the realm of haptics are running at any given time, one project seeks to mimic the skin sensitivity of the primate fingertip as closely as possible, concentrating on having it react to touch as the human finger would.

The research is painstaking and exacting, involving, for example, precise friction and compressibility measurements of the fingerpads of human subjects. Fingertip dents and bends in response to edges, corners, and surfaces have provided additional data. At the same time, magnetic resonance imaging (MRI) and high-frequency ultrasound show how skin behaves in response to these stimuli on the physical plane.

Not satisfied with the close-ups that they could get from available devices, the team developed a new tool, the Ultrasound Backscatter Microscope (UBM), which shows the papillary ridges of the fingertip and the layers of skin underneath in far greater detail than an MRI.

As researchers test reactions to surfaces from human and monkey participants, the data they gather is mapped and recorded to emerging 2D and 3D fingertip models. At this MIT project and elsewhere, human and robot tactile sensing is simulated by means of an array of mechanosensors presented in some medium that can be pushed, pressed, or bent.

In the Realm of Illusion

Touch might well be the most basic of human senses, its complex messages easily understood and analyzed even by the crib and pacifier set. But what sets it apart from other senses is its dual communication conduit, allowing us to send information by the same route through which we perceive it. In other words, those same fingers that acknowledge your receipt of a handshake send data on their own.

In one project a few years back, Peter J. Berkelman and Ralph L. Hollis began stretching reality in all sorts of bizarre ways. Not only could humans using their device touch things that weren't there, but they could reach into a three-dimensional landscape and, guided by the images appearing on a computer screen, move those objects around.

This was all done with a device built at the lab based on Lorentz force magnetic levitation (Lorentz force is the force exerted on a charged particle in an electromagnetic field). The design depended upon a magnetic tool levitated or suspended over a surface by means of electromagnetic coils.
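For readers who want the definition spelled out, the standard textbook form of the Lorentz force is given below, together with the equivalent expression for a straight current-carrying wire, which is the more natural form when thinking about coils. The symbols are the usual ones and are not taken from the article: q is the charge, v its velocity, E and B the electric and magnetic fields, I the current, and the vector ell the length and direction of the wire segment.

```latex
\mathbf{F} = q\left(\mathbf{E} + \mathbf{v} \times \mathbf{B}\right)
\qquad\text{and, for a wire segment carrying current } I:\qquad
\mathbf{F} = I\,\boldsymbol{\ell} \times \mathbf{B}
```

In the second form the force scales with the current, so presumably varying the coil currents is what lets such a device both hold the tool aloft and push back against the operator's hand.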

To understand the design of this maglev device, imagine a mixing bowl with a joystick bar in the middle. Now imagine that the knob of the joystick floats barely above the stick, with six degrees of freedom. Coils, magnet assemblies, and sensor assemblies fill the basin, while a rubber ring makes the top comfortable for a human operator to rest a wrist. This whole business is set in the top of a desk-high metal box that holds the power supplies, amplifiers, and control processors.

Looking at objects on a computer screen, a human being could take hold of the levitated tool and try to manipulate the objects as they were displayed. Force-feedback data from the tool itself provided tactile information for holding, turning, and moving the virtual objects.

What might not be obvious from this description is that this model offered a marvel of economy, replacing the bulk of previous systems with an input device that had only one moving part.

Holding the tool, or perhaps pushing at it with a finger, the operator could "feel" the cube seen on the computer screen: edges, corners, ridges, and flat surfaces. With practice, operators could use the feedback data to maneuver a virtual peg into a virtual hole with unnerving reliability.

Notice something here: An operator could receive tactile impressions of a virtual object projected on a screen. In other words, our perception of reality was starting to be seriously tampered with.

HUI, Not GUI

Some of the most interesting work in understanding touch has been done to compensate for hearing, visual, or tactile impairments.

At Stanford, the TalkingGlove was designed to support individuals with hearing limitations. It recognized American Sign Language finger spelling to generate text on a screen or synthesize speech. This device applied a neural-net algorithm to map the movement of the human hand to an instrumented glove to produce a digital output. It was so successful that it spawned a commercial application in the Virtex Cyberglove, which was later purchased by Immersion and became simply the Cyberglove. Current uses include virtual reality, biomechanics, and animation.

At Lund University in Sweden, work is being done in providing haptic interfaces for those with impaired vision. Visually impaired computer users have long had access to Braille displays or devices that provide synthesized speech, but these just give text, not graphics, something that can be pretty frustrating for those working in a visual medium like the Web. Haptic interfaces offer an alternative, allowing the user to feel shapes and textures that could approximate a graphical user interface.

At Stanford, this took shape in the 1990s as the "Moose," an experimental haptic mouse that gave new meaning to the terms drag and drop, allowing the user to feel a pull to suggest one and then feel the sudden loss of mass to signify the other. As users approached the edge of a window, they could feel the groove; a check box repelled or attracted, depending on whether it was checked. Some of the time, experimental speech synthesizers were used to "read" the text.
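The check box behavior described above can be pictured as a small force field around a screen widget. The following is a minimal one-dimensional sketch, not the Moose's actual implementation: the function name, the radius and gain parameters, and the choice that a checked box repels while an unchecked one attracts are all assumptions made for illustration.

```python
def checkbox_force(cursor_x, box_x, checked, radius=20.0, gain=0.5):
    """Return a signed 1-D force on the cursor near a check box.

    Positive values push the cursor toward larger x, negative toward
    smaller x. Assumption for this sketch: an unchecked box attracts
    the cursor and a checked box repels it.
    """
    offset = box_x - cursor_x
    # No force outside the widget's influence radius or exactly on it.
    if offset == 0 or abs(offset) >= radius:
        return 0.0
    # Force grows linearly as the cursor gets closer to the box.
    strength = gain * (1 - abs(offset) / radius)
    toward_box = strength if offset > 0 else -strength
    return -toward_box if checked else toward_box


# Cursor 10 pixels to the left of a box at x = 100.
print(checkbox_force(90, 100, checked=False))  # pulled toward the box
print(checkbox_force(90, 100, checked=True))   # pushed away from the box
```

A real device would evaluate something like this many times per second and send the result to the mouse's actuators; the linear falloff here is simply the easiest profile to reason about.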

Such research has led to subsequent development of commercial haptic devices, such as the Logitech iFeel Mouse, offering the promise of new avenues into virtual worlds for the visually impaired.

Where Is This Taking Us?

How far has all this research taken us toward virtual realities and actual robot design? Immersion and other companies offer a variety of VR gadgets emerging from the study of haptics, but genuine simulated humans are still pretty far out on the horizon.

What we have is a number of researchers around the globe working on perfecting robotic hands, trying to make them not only hold things securely, but also send and receive messages as our own do. Here is a representative sampling:

The BarrettHand BH8-262: Originally developed by Barrett Technology for NASA but now available commercially, it offers a three-fingered grasper with four degrees of freedom, embedded intelligence, and the ability to hold on to any geometric shape from any angle.

The Anatomically Correct Testbed (ACT) Hand: A project at Carnegie Mellon's Robotics Institute, this is an ambitious effort to create a synthetic human hand for several purposes. These include having the hand function as a teleoperator or prosthetic, as an investigative tool for examining complex neural control of human hand movement, and as a model for surgeons working on damaged human hands. Still in its early stages, the project has created an actuated index finger that mimics human muscle behavior.

Cyberhand: This collaboration of researchers and developers from Italy, Spain, Germany, and Denmark proposes to create a prosthetic hand that connects to remaining nerve tissue. It will use one set of electrodes to record and translate motor signals from the brain, and a second to pick up and conduct sensory signals from the artificial hand to nerves of the arm for transport through regular channels to the brain.

Research does not produce the products we'll be seeing in common use during the next few years. It produces their predecessors. But many of the scientists in these labs later create marketable devices. Keep an eye on these guys; they are the ones responsible for the world we'll be living in, the one with bionic replacement parts, robotic housekeepers, and gym equipment that will let us fly through virtual skies.

16:15 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality

Jan 10, 2005

eMagin Z800 3D Visor

The eMagin Z800 3D Visor is the first product to deliver on the promise of an immersive 3D computing experience. Anyone can now surround themselves with the visual data they need without the limits of traditional displays and all in complete privacy. Now, gamers can play "virtually inside" their games, personally immersed in the action. PC users can experience and work with their data in a borderless environment.

360 degree panoramic view

Two high-contrast eMagin SVGA 3D OLED Microdisplays deliver fluid full-motion video in more than 16.7 million colors. Driving the user’s experience is the highly responsive head-tracking system that provides a full 360-degree angle of view. eMagin’s specially developed optics deliver a bright, crisp image.

Weighing less than 8 oz, the eMagin Z800 3D Visor is compact and comfortable. While the eMagin OLED displays are only 0.59 inch diagonal, the picture is big – the equivalent of a 105-inch movie screen viewed at 12 feet.
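The "105-inch screen at 12 feet" comparison can be turned into a viewing angle with a little trigonometry. The sketch below is a back-of-the-envelope check, assuming the 105 inches is the screen diagonal and that the viewer sits centered in front of it; the function name is hypothetical.

```python
import math


def diagonal_view_angle_deg(diagonal_in, distance_in):
    """Angle in degrees subtended by a screen diagonal at a viewing distance.

    Both arguments are in inches; the viewer is assumed to be centered
    on the screen, so half the diagonal lies on each side of the line
    of sight.
    """
    half_angle = math.atan((diagonal_in / 2) / distance_in)
    return math.degrees(2 * half_angle)


# 105-inch diagonal viewed from 12 feet (144 inches).
angle = diagonal_view_angle_deg(105, 12 * 12)
print(f"{angle:.1f} degrees")
```

The result works out to roughly 40 degrees across the diagonal, which is the apparent image size the marketing comparison is describing.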

Only eMagin OLED displays provide brilliant, rich colors in full 3D with no flicker and no screen smear. eMagin’s patented OLED-on-silicon technology enhances the inherently fast refresh rates of OLED materials with on-chip signal processing and data buffering at each pixel site. This enables each pixel to continuously emit only the colors it is programmed to show. Full-color data is buffered under every pixel built into each display, providing flicker-free stereovision capability.


The Z800’s head-tracking system enables users to “see” their data in full 3D surround viewing with just a turn of the head. Virtual multiple monitors can also be simulated. Designers, publishers and engineers can view multiple drawings and renderings as if they were each laid out on an artist’s table, even in 3D. The eMagin Z800 3D Visor integrates state-of-the-art audio with high-fidelity stereo sound and a built-in noise-canceling microphone system to complete the immersive experience.

* Brilliant 3D stereovision with hi-fi sound for an immersive experience

* Superb high-contrast OLED displays delivering more than 16.7 million colors

* Advanced 360 degree head-tracking that takes you “inside” the game

* Comfortable, lightweight, USB-powered visor; PC compatible

13:10 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality

Jan 05, 2005

Computer generated brain surgery to help trainees

FROM THE PRESENCE-L LISTSERV:

[From E-Health Insider (<http://www.e-health-insider.com/news/item.cfm?ID=988>)...

Researchers at the University of Nottingham have developed a virtual reality brain surgery simulator for trainee surgeons that combines haptics with three-dimensional graphics to give what they claim is the most realistic model in the world.

A 'map' of the brain surface is produced by the software, which also renders the tweezers or other surgical implement and shows any incisions made into the virtual brain. The simulator is controlled by a device held by the user, which uses a robotic mechanism to give the same pressure and resistance as it would if it were touching a real brain.


Dr Michael Vloeberghs, senior lecturer in paediatric neurosurgery at the University's School of Human Development, who led the development team, said that the new system would benefit trainees: "Traditionally a large amount of the training that surgeons get is by observing and performing operations under supervision. However, pressures on resources, staff shortages and new EU directives on working hours mean that this teaching time is getting less and less.

"This simulator will allow surgeons to become familiar with instruments and practice brain surgery techniques with absolutely no risk to the patient whatsoever."

The pilot software was developed with the Queen's Medical Centre, in Nottingham, which contains a Simulation Centre in which dummies are often used for surgical training.

Dr Vloeberghs says that the haptic system is an improvement on the existing system: "Dummies can only go so far – you're still limited by the physical presence, and you can't do major surgery on dummies... you can simulate electrically and phonetically what is happening, but nothing more than that."

Adib Becker, Professor of Mechanical Engineering at the university, said that the technology could be developed for the future, and that brain surgery online could even be possible: "If you project maybe four or five years from now, it may be possible for a surgeon to operate on a patient totally remotely.

"So the surgeons would be located somewhere else in the world and can communicate through the internet, and can actually feel the operation as they are seeing it on the screen."

The team hopes that the piloted software, which was funded by a grant of £300,000 from the Engineering and Physical Sciences Research Council (EPSRC), will help train surgeons to a higher level before their first operation on live patients, thereby increasing safety.

15:05 Posted in Cybertherapy | Permalink | Comments (0) | Tags: Positive Technology, virtual reality, cybertherapy