Nov 07, 2006

The future of music experience

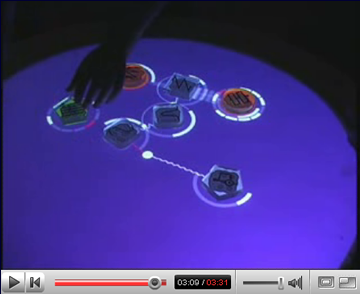

Check out this YouTube video showing "reactable", an amazing musical instrument with a tangible interface.

23:00 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Efficacy of biofeedback for migraine

Efficacy of biofeedback for migraine: A meta-analysis.

Pain. 2006 Nov 1;

Authors: Nestoriuc Y, Martin A

In this article, we meta-analytically examined the efficacy of biofeedback (BFB) in treating migraine. A computerized literature search of the databases Medline, PsycInfo, Psyndex and the Cochrane library, enhanced by a hand search, identified 86 outcome studies. A total of 55 studies, including randomized controlled trials as well as pre-post trials, met our inclusion criteria and were integrated. A medium effect size (d =0.58, 95% CI=0.52, 0.64) resulted for all BFB interventions and proved stable over an average follow-up phase of 17 months. Also, BFB was more effective than control conditions. Frequency of migraine attacks and perceived self-efficacy demonstrated the strongest improvements. Blood-volume-pulse feedback yielded higher effect sizes than peripheral skin temperature feedback and electromyography feedback. Moderator analyses revealed BFB in combination with home training to be more effective than therapies without home training. The influence of the meta-analytical methods on the effect sizes was systematically explored and the results proved to be robust across different methods of effect size calculation. Furthermore, there was no substantial relation between the validity of the integrated studies and the direct treatment effects. Finally, an intention-to-treat analysis showed that the treatment effects remained stable, even when drop-outs were considered as nonresponders.
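For readers unfamiliar with the effect size reported above, here is a minimal sketch of how a standardized mean difference (Cohen's d) can be computed for a single two-group comparison. The function name `cohens_d` and the sample scores are illustrative assumptions, not data from the paper, and the meta-analysis itself pooled effect sizes across 55 studies with its own methods, which this sketch does not reproduce.

```python
import math
import statistics


def cohens_d(treatment, control):
    """Standardized mean difference using the pooled standard deviation."""
    n1, n2 = len(treatment), len(control)
    var1 = statistics.variance(treatment)  # sample variance (divides by n - 1)
    var2 = statistics.variance(control)
    pooled_sd = math.sqrt(((n1 - 1) * var1 + (n2 - 1) * var2) / (n1 + n2 - 2))
    return (statistics.mean(treatment) - statistics.mean(control)) / pooled_sd


# Hypothetical improvement scores, invented for illustration only.
treated = [6, 7, 8, 9, 10]
untreated = [5, 6, 7, 8, 9]
print(round(cohens_d(treated, untreated), 3))  # prints 0.632
```

By Cohen's conventional benchmarks, values around 0.5 are usually described as medium, which is how the abstract characterizes d = 0.58.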

22:49 Posted in Biofeedback & neurofeedback | Permalink | Comments (0) | Tags: biofeedback

TagCrowd

From the TagCrowd blog

TagCrowd is a web application for visualizing word frequencies in any user-supplied text by creating what is popularly known as a tag cloud.

Today, tag clouds are primarily used for navigation and visualization on websites that employ user-generated metadata (tags) as a categorization scheme. (Flickr is a good example.)

TagCrowd is trying to do something different.

When we look at a tag cloud, we see not only a richly informative, beautiful image that communicates much in a single glance. We see a whole new approach to text.

Potential uses for tag clouds extend far outside the online realm: as topic summaries for written works, as name tags for conferences, as resumes in a single glance, as analyses for survey data, as visual poetry; the list goes on.

In the future, TagCrowd will develop into a suite of experimental tools and tutorials that empower social and collaborative uses for tag clouds and related visualizations.
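The basic idea behind a tag cloud, counting word frequencies and scaling each word's font size by its count, can be sketched in a few lines. This is only an illustration under assumed names (`tag_cloud`, `min_size`, `max_size`) and an invented sample sentence; it is not TagCrowd's implementation, and it does no stop-word filtering or stemming.

```python
import re
from collections import Counter


def tag_cloud(text, top_n=5, min_size=12, max_size=36):
    """Return (word, count, font_size) tuples for the most frequent words."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words).most_common(top_n)
    if not counts:
        return []
    highest = counts[0][1]
    lowest = counts[-1][1]
    span = max(highest - lowest, 1)  # avoid division by zero when all counts tie
    cloud = []
    for word, count in counts:
        # Linear scaling: the rarest kept word gets min_size, the most frequent max_size.
        size = min_size + (count - lowest) * (max_size - min_size) // span
        cloud.append((word, count, size))
    return cloud


sample = "tag clouds show words; frequent words appear larger than rare words"
for word, count, size in tag_cloud(sample, top_n=3):
    print(f"{word}: count={count}, size={size}pt")
```

Linear scaling is the simplest choice; a logarithmic scale is a common alternative when a few words dominate the text.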

00:28 Posted in Information visualization | Permalink | Comments (0) | Tags: information visualization

Talking space

From Textually.org (via Designboom)

Yang Shi Wei and Shawn Wein Shin from Taiwan have designed "Talking Space", a lampshade that creates a space to close off voice during phone conversations.

In the designers' own words:

People enjoy the feeling at party. We always hear much sound about laugh, games, music or play... It's good!! Let we relax and excite. Everything is fine. Playing with your friend. But now, your phone is ringing. It maybe tells an important message to you. Can you talk with the other side on noisy place? You need to leave, and try to find another place to close off voice. Going to outside ?! It's just one of way to solve problems. Have another way?

00:17 Posted in Wearable & mobile | Permalink | Comments (0) | Tags: mobile phones

SLAM - Mobile social networking from Microsoft Research

Via LADS

SLAM is a mobile-based social networking application developed by Microsoft Research’s Community Technologies Group, which enables lightweight, group-centric real-time communication, photo-sharing and location awareness.

From LADS:

From the user perspective, “Slam” means a group of people with whom you can exchange messages and share photos easily through your mobile phone. Slam works on group level, which means that any message sent to a Slam gets delivered to all the members in the group. To use Slam you need to install the Slam application on your Windows Mobile-based device and need an unlimited data plan. So any messages sent from your smart phone will be over HTTP instead of using SMS. If you don’t have a Windows-based device, you can still communicate with your Slams through SMS, since each Slam has a different phone number to enable SMS delivery.

Slam delivers a pretty impressive feature set on the smart phones. Besides exchanging messages, users can view private and public Slams, create Slams, invite people to an existing Slam, join Slams, view Slam members, view and upload photos, manage their Slams, and view unread messages. While viewing a member profile, Slam also indicates if the user has an intersecting Slam group with you.

One of the best features the Slam team is working on is to provide location information of people in your Slam group. Once group members set their privacy level to allow appropriate Slam groups to view their location information, Slam maps the location information onto a Windows Live map on your mobile. This feature is more of a proof of concept and works only on a few phones, including the Audiovox 5600, i-Mate SP3 and i-Mate SP5, for some of the Seattle users of Cingular/AT&T and T-Mobile.

Visit also the Microsoft Slam Team Blog

00:00 Posted in Locative media, Social Media | Permalink | Comments (0) | Tags: social networks, locative media

Nov 06, 2006

Iris recognition technology for mobile phones

Re-blogged from Pink Tentacle

Oki Electric announced the development of iris recognition technology for camera-equipped mobile phones. Unlike Oki’s previous iris recognition technology that relies on infrared cameras for the iris scan, the new technology uses ordinary cellphone cameras. With plans to make the technology commercially available in March 2007, Oki hopes to boost the security of cellphone payment systems. According to Oki, any camera-equipped cellphone or PDA can perform iris recognition once the special software is installed. Identification accuracy is said to be high, with only 1 in 100,000 scans resulting in error, and the system can tell the difference between flesh-and-blood eyes and photographs.

23:23 Posted in AI & robotics, Wearable & mobile | Permalink | Comments (0) | Tags: artificial intelligence, mobile phones

Dopamine used to prompt nerve tissue to regrow

Via Medgadget

Georgia Tech/Emory researchers are testing how to use dopamine to design a polymer that could help damaged nerves reconnect. Their discovery might lead to the development of new therapies for a range of central and peripheral nervous system disorders:

The discovery is the first step toward the eventual goal of implanting the new polymer into patients suffering from neurological disorders, such as Alzheimer's, Parkinson's or epilepsy, to help repair damaged nerves. The findings were published online the week of Oct. 30 in the Proceedings of the National Academy of Sciences (PNAS).

"We showed that you could use a neurotransmitter as a building block of a polymer," said Wang. "Once integrated into the polymer, the transmitter can still elicit a specific response from nerve tissues."

The "designer" polymer was recognized by the neurons when used on a small piece of nerve tissue and stimulated extensive neural growth. The implanted polymer didn't cause any tissue scarring or nerve degeneration, allowing the nerve to grow in a hostile environment post injury.

When ready for clinical use, the polymer would be implanted at the damaged site to promote nerve regeneration. As the nerve tissue reforms, the polymer degrades.

Wang's team found that dopamine's structure, which contains two hydroxyl groups, is vital for the material's neuroactivity. Removing even one group caused a complete loss of the biological activity. They also determined that dopamine was more effective at differentiating nerve cells than the two most popular materials for culturing nerves -- polylysine and laminin. This ability means that the material with dopamine may have a better chance to successfully repair damaged nerves.

The success of dopamine has encouraged the team to set its sights on other neurotransmitters.

"Dopamine was a good starting point, but we are looking into other neurotransmitters as well," Wang said.

The team's next step is to verify findings that the material stimulates the reformation of synapses in addition to regrowth.

23:14 Posted in Neurotechnology & neuroinformatics | Permalink | Comments (0) | Tags: neurotechnology

New test superior to Mini Mental Status Examination

Via Medline

Geriatricians from Saint Louis University have developed a new test for diagnosing dementia - the Saint Louis University Mental Status Examination (SLUMS) - which appears to be more effective than the widely-used Mini Mental Status Examination (MMSE).

The study has been published in the current issue of the American Journal of Geriatric Psychiatry (14:900-910, November 2006)

From the news release

"This early detection of mild neurocognitive disorder by the SLUMS offers the opportunity for the clinicians to begin early treatment as it becomes available," says Syed Tariq, M.D., lead author and associate professor of geriatric medicine at Saint Louis University.

John Morley, M.D., director of the division of geriatric medicine at Saint Louis University, created the SLUMS to screen more educated patients and to detect early cognitive problems.

"There are potential treatments available and they slow down the progression of the disease," says Morley, who is a coinvestigator. "The earlier you treat, the better people seem to do. But families go through denial and sometimes miss diagnosing dementia until its symptoms are no longer mild."

The researchers found the new screening tool developed by SLU detects early cognitive problems missed by the MMSE.

"The Mini Mental Status Examination has limitations, especially with regard to its use in more educated patients and as a screen for mild neurocognitive disorder," Tariq says.

It takes a clinician about seven minutes to administer the SLUMS, which supplements the Mini Mental Status Examination by asking patients to perform tasks such as doing simple math computations, naming animals, recalling facts and drawing the hands on a clock.

23:00 Posted in Research tools | Permalink | Comments (0) | Tags: research tools

Boosting slow oscillations during sleep potentiates memory

Boosting slow oscillations during sleep potentiates memory

Nature advance online publication 5 November 2006

Authors: Lisa Marshall, Halla Helgadóttir, Matthias Mölle and Jan Born

There is compelling evidence that sleep contributes to the long-term consolidation of new memories. This function of sleep has been linked to slow (<1 Hz) potential oscillations, which predominantly arise from the prefrontal neocortex and characterize slow wave sleep. However, oscillations in brain potentials are commonly considered to be mere epiphenomena that reflect synchronized activity arising from neuronal networks, which links the membrane and synaptic processes of these neurons in time. Whether brain potentials and their extracellular equivalent have any physiological meaning per se is unclear, but can easily be investigated by inducing the extracellular oscillating potential fields of interest. Here we show that inducing slow oscillation-like potential fields by transcranial application of oscillating potentials (0.75 Hz) during early nocturnal non-rapid-eye-movement sleep, that is, a period of emerging slow wave sleep, enhances the retention of hippocampus-dependent declarative memories in healthy humans. The slowly oscillating potential stimulation induced an immediate increase in slow wave sleep, endogenous cortical slow oscillations and slow spindle activity in the frontal cortex. Brain stimulation with oscillations at 5 Hz—another frequency band that normally predominates during rapid-eye-movement sleep—decreased slow oscillations and left declarative memory unchanged. Our findings indicate that endogenous slow potential oscillations have a causal role in the sleep-associated consolidation of memory, and that this role is enhanced by field effects in cortical extracellular space.

22:35 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: cognitive enhancement

Nov 05, 2006

A strategy for computer-assisted mental practice in stroke rehabilitation

A strategy for computer-assisted mental practice in stroke rehabilitation.

Neurorehabil Neural Repair. 2006 Dec;20(4):503-7

Authors: Gaggioli A, Meneghini A, Morganti F, Alcaniz M, Riva G

OBJECTIVE: To investigate the technical and clinical viability of using computer-facilitated mental practice in the rehabilitation of upper-limb hemiparesis following stroke. DESIGN: A single-case study. SETTING: Academic-affiliated rehabilitation center. PARTICIPANT: A 46-year-old man with stable motor deficit of the upper right limb following subcortical ischemic stroke. INTERVENTION: Three computer-enhanced mental practice sessions per week at the rehabilitation center, in addition to usual physical therapy. A custom-made virtual reality system equipped with arm-tracking sensors was used to guide mental practice. The system was designed to superimpose over the (unseen) paretic arm a virtual reconstruction of the movement registered from the nonparetic arm. The laboratory intervention was followed by a 1-month home-rehabilitation program, making use of a portable display device. MAIN OUTCOME MEASURES: Pretreatment and posttreatment clinical assessment measures were the upper-extremity scale of the Fugl-Meyer Assessment of Sensorimotor Impairment and the Action Research Arm Test. Performance of the affected arm was evaluated using the healthy arm as the control condition. RESULTS: The patient's paretic limb improved after the first phase of intervention, with modest increases after home rehabilitation, as indicated by functional assessment scores and sensors data. CONCLUSION: Results suggest that technology-supported mental training is a feasible and potentially effective approach for improving motor skills after stroke.

22:29 Posted in Cybertherapy, Mental practice & mental simulation | Permalink | Comments (0) | Tags: mental practice, cybertherapy

US Presidential Speeches Tag Cloud 1776-2006

Re-blogged from IFTF's future now

A very interesting way to see the changes in rhetoric over time (you can move the slider around to see trends in word usage)

The above tag cloud shows the popularity, frequency, and trends in the usages of words within speeches, official documents, declarations, and letters written by the Presidents of the US between 1776 - 2006 AD.

The dataset consists of over 360 documents downloaded from Encyclopedia Britannica and ThisNation.com.

22:15 Posted in Information visualization | Permalink | Comments (0) | Tags: information visualization

Nano-optical switches to restore sight?

From Emerging Technology Trends

Californian researchers are using light to control biological nanomolecules and proteins. They think it's possible to put some of their nano-photoswitches in the cells of the retina, restoring light sensitivity in people with degenerative blindness such as macular degeneration...

Read the full article

22:11 Posted in Neurotechnology & neuroinformatics | Permalink | Comments (0) | Tags: neuroinformatics, enhanced cognition

New Scientist tech: Web pioneers call for new 'web science' discipline

From New Scientist tech

The social interactions that glue the World Wide Web together are now so complex it has outgrown the relatively narrow field of computer science...

Read the full story here

22:07 Posted in Research tools | Permalink | Comments (0) | Tags: research tools

An extended EM algorithm for joint feature extraction and classification in BCI

An extended EM algorithm for joint feature extraction and classification in brain-computer interfaces.

Neural Comput. 2006 Nov;18(11):2730-61

Authors: Li Y, Guan C

For many electroencephalogram (EEG)-based brain-computer interfaces (BCIs), a tedious and time-consuming training process is needed to set parameters. In BCI Competition 2005, reducing the training process was explicitly proposed as a task. Furthermore, an effective BCI system needs to be adaptive to dynamic variations of brain signals; that is, its parameters need to be adjusted online. In this article, we introduce an extended expectation maximization (EM) algorithm, where the extraction and classification of common spatial pattern (CSP) features are performed jointly and iteratively. In each iteration, the training data set is updated using all or part of the test data and the labels predicted in the previous iteration. Based on the updated training data set, the CSP features are reextracted and classified using a standard EM algorithm. Since the training data set is updated frequently, the initial training data set can be small (semi-supervised case) or null (unsupervised case). During the above iterations, the parameters of the Bayes classifier and the CSP transformation matrix are also updated concurrently. In online situations, we can still run the training process to adjust the system parameters using unlabeled data while a subject is using the BCI system. The effectiveness of the algorithm depends on the robustness of CSP feature to noise and iteration convergence, which are discussed in this article. Our proposed approach has been applied to data set IVa of BCI Competition 2005. The data analysis results show that we can obtain satisfying prediction accuracy using our algorithm in the semisupervised and unsupervised cases. The convergence of the algorithm and robustness of CSP feature are also demonstrated in our data analysis.
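The iterative idea in this abstract, retraining on a training set that is updated with the labels predicted in the previous pass, can be illustrated with a much simpler self-training loop. The sketch below is not the authors' algorithm: it swaps CSP feature extraction and the Bayes classifier for one-dimensional features and a nearest-class-mean classifier, covers only the semi-supervised case (a small labeled seed set), and all names and data are hypothetical.

```python
def class_means(samples, labels):
    """Mean feature value for each class label present in `labels`."""
    means = {}
    for label in set(labels):
        values = [x for x, y in zip(samples, labels) if y == label]
        means[label] = sum(values) / len(values)
    return means


def predict(means, samples):
    """Assign each sample to the class with the nearest mean."""
    return [min(means, key=lambda label: abs(x - means[label])) for x in samples]


def self_train(train_x, train_y, unlabeled_x, max_iter=20):
    """Iteratively retrain on labeled data plus predicted labels."""
    means = class_means(train_x, train_y)
    predicted = predict(means, unlabeled_x)
    for _ in range(max_iter):
        # Update the training set with the labels predicted in the previous pass.
        means = class_means(train_x + unlabeled_x, train_y + predicted)
        updated = predict(means, unlabeled_x)
        if updated == predicted:  # labels stopped changing: converged
            break
        predicted = updated
    return means, predicted


# Hypothetical 1-D features: a small labeled set and a larger unlabeled set.
labeled_x, labeled_y = [0.0, 4.0], ["left", "right"]
unlabeled = [0.5, 1.0, 1.5, 3.0, 3.5, 4.5]
means, labels = self_train(labeled_x, labeled_y, unlabeled)
print(labels)
```

The loop stops when the predicted labels no longer change, which loosely mirrors the convergence question the abstract raises; a real BCI pipeline would also re-extract features on every iteration.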

21:54 Posted in Brain-computer interface | Permalink | Comments (0) | Tags: brain-computer interface

Neural internet: web surfing with brain potentials

Neural internet: web surfing with brain potentials for the completely paralyzed.

Neurorehabil Neural Repair. 2006 Dec;20(4):508-15

Authors: Karim AA, Hinterberger T, Richter J, Mellinger J, Neumann N, Flor H, Kübler A, Birbaumer N

Neural Internet is a new technological advancement in brain-computer interface research, which enables locked-in patients to operate a Web browser directly with their brain potentials. Neural Internet was successfully tested with a locked-in patient diagnosed with amyotrophic lateral sclerosis rendering him the first paralyzed person to surf the Internet solely by regulating his electrical brain activity. The functioning of Neural Internet and its clinical implications for motor-impaired patients are highlighted.

21:53 Posted in Brain-computer interface, Cybertherapy, Future interfaces | Permalink | Comments (0) | Tags: brain-computer interface

HCI Researcher - London

Via Usability News

Deadline: 7 November 2006

CENTRE FOR HCI DESIGN, CITY UNIVERSITY LONDON

Researcher in Human-Computer Interaction

Fixed term for three years

23.5K - 27K pounds pa inc

Closing date for applications: 7th November 2006.

We are seeking a researcher with a background in computing and human-computer interaction to join a 3 year EPSRC-funded research project that will investigate handover in healthcare settings. This is an exciting opportunity to get involved in a major study of clinical handover and to contribute to the design of innovative technological solutions to enhance the efficacy of handover. The researcher will be primarily responsible for implementing novel interactive systems to support handover using technologies such as PDAs, telemedicine and interactive whiteboards.

Clinical handover is the handing over of responsibility and care for patients from one individual or team to another. It has been shown to make a vital contribution to the safety and effectiveness of clinical work, yet current practice is highly variable. Handovers are often impromptu, informal and supported by ad hoc artefacts such as paper-based notes. While there have been small-scale studies of clinical handover in specific settings, there is a lack of basic research. We will address this by conducting extensive field studies of handover in a range of healthcare settings, by developing a generic model of handover and by designing and prototyping novel software and hardware solutions to support handover.

Candidates should have a good BSc in a computing discipline with a significant HCI component. An MSc in a relevant discipline and research experience in HCI are desirable. Experience in designing and implementing interactive systems on a range of software and hardware platforms is essential. Candidates should also have a keen interest in healthcare issues and an awareness of current IT developments for healthcare.

This post is based in the Centre for HCI Design (HCID) at City University London, an independent research centre in the School of Informatics. HCID has an international reputation in human-computer interaction and software engineering research, including studies of work and human-system interaction, usability evaluation, accessibility, requirements engineering, and system modelling.

In return, we offer a comprehensive package of in-house staff training and development, and benefits that include a final salary pension scheme.

Actively working to promote equal opportunity and diversity.

For more information and an application pack, visit www.city.ac.uk/jobs or write to Recruitment Team, HR Department, City University, Northampton Square, London EC1V 0HB, quoting job reference number BD/10514.

21:45 Posted in Research institutions & funding opportunities | Permalink | Comments (0) | Tags: funding opportunities

Graded motor imagery for pathologic pain

Graded motor imagery for pathologic pain. A randomized controlled trial.

Neurology. 2006 Nov 2;

Authors: Moseley GL

Phantom limb and complex regional pain syndrome type 1 (CRPS1) are characterized by changes in cortical processing and organization, perceptual disturbances, and poor response to conventional treatments. Graded motor imagery is effective for a small subset of patients with CRPS1. OBJECTIVE: To investigate whether graded motor imagery would reduce pain and disability for a more general CRPS1 population and for people with phantom limb pain. METHODS: Fifty-one patients with phantom limb pain or CRPS1 were randomly allocated to motor imagery, consisting of 2 weeks each of limb laterality recognition, imagined movements, and mirror movements, or to physical therapy and ongoing medical care. RESULTS: There was a main statistical effect of treatment group, but not diagnostic group, on pain and function. The mean (95% CI) decrease in pain between pre- and post-treatment (100 mm visual analogue scale) was 23.4 mm (16.2 to 30.4 mm) for the motor imagery group and 10.5 mm (1.9 to 19.2 mm) for the control group. Improvement in function was similar and gains were maintained at 6-month follow-up. CONCLUSION: Motor imagery reduced pain and disability in these patients with complex regional pain syndrome type I or phantom limb pain, but the mechanism, or mechanisms, of the effect are not clear.

21:27 Posted in Mental practice & mental simulation | Permalink | Comments (0) | Tags: motor imagery

Tongue Piercing by a Yogi: QEEG Observations

Tongue Piercing by a Yogi: QEEG Observations.

Appl Psychophysiol Biofeedback. 2006 Nov 3;

Authors: Peper E, Wilson VE, Gunkelman J, Kawakami M, Sata M, Barton W, Johnston J

This study reports on the QEEG observations recorded from a yogi during tongue piercing in which he demonstrated voluntary pain control. The QEEG was recorded with a Lexicor 1620 from 19 sites with appropriate controls for impedance and artifacts. A neurologist read the data for abnormalities and the QEEG was analyzed by mapping, single and multiple hertz bins, coherence, and statistical comparisons with a normative database. The session included a meditation baseline and tongue piercing. During the meditative baseline period the yogi's QEEG maps suggested that he was able to lower his brain activity to a resting state. This state showed a predominance of slow wave potentials (delta) during piercing and suggested that the yogi induced a state that may be similar to those found when individuals are under analgesia. Further research should be conducted with a group of individuals who demonstrate exceptional self-regulation to determine the underlying mechanisms, and whether the skills can be used to teach others how to manage pain.

21:25 Posted in Meditation & brain | Permalink | Comments (0) | Tags: meditation

Nov 03, 2006

High sensitivity to multisensory conflicts in agoraphobia exhibited by virtual reality

High sensitivity to multisensory conflicts in agoraphobia exhibited by virtual reality.

Eur Psychiatry. 2006 Oct;21(7):501-8

Authors: Viaud-Delmon I, Warusfel O, Seguelas A, Rio E, Jouvent R

The primary aim of this study was to evaluate the effect of auditory feedback in a VR system planned for clinical use and to address the different factors that should be taken into account in building a bimodal virtual environment (VE). We conducted an experiment in which we assessed spatial performances in agoraphobic patients and normal subjects comparing two kinds of VEs, visual alone (Vis) and auditory-visual (AVis), during separate sessions. Subjects were equipped with a head-mounted display coupled with an electromagnetic sensor system and immersed in a virtual town. Their task was to locate different landmarks and become familiar with the town. In the AVis condition subjects were equipped with the head-mounted display and headphones, which delivered a soundscape updated in real-time according to their movement in the virtual town. While general performances remained comparable across the conditions, the reported feeling of immersion was more compelling in the AVis environment. However, patients exhibited more cybersickness symptoms in this condition. The results of this study point to the multisensory integration deficit of agoraphobic patients and underline the need for further research on multimodal VR systems for clinical use.

23:30 Posted in Virtual worlds | Permalink | Comments (0) | Tags: virtual reality, cybertherapy

Nov 01, 2006

Synthecology

Re-blogged from Networked Performance

Synthecology combines the possibilities of tele-immersive collaboration with a new architecture for virtual reality sound immersion to create an environment where musicians from all locations can interactively perform and create sonic environments.

Compose, sculpt, and improvise with other musicians and artists in an ephemeral garden of sonic lifeforms. Synthecology invites visitors in this digitally fertile space to create a musical sculpture of synthesized tones and sound samples provided by web inhabitants. Upon entering the garden, each participant can pluck contributed sounds from the air and plant them, wander the garden playing their own improvisation or collaborate with other participants to create/author a new composition.

As each new 'seed' is planted and grown, sculpted and played, this garden becomes both a musical instrument and a composition to be shared with the rest of the network. Every inhabitant creates, not just as an individual composer shaping their own themes, but as a collaborator in real time who is able to improvise new soundscapes in the garden by cooperating with other avatars from diverse geographical locations.

Virtual participants are fully immersed in the garden landscape through the use of passive stereoscopic technology and spatialized audio to create a networked tele-immersive environment where all inhabitants can collaborate, socialize and play. Guests from across the globe are similarly embodied as avatars throughout this environment, each experiencing the audio and visual presence of the others.

Continue to read the full post here

23:51 Posted in Cyberart | Permalink | Comments (0) | Tags: cyberart, creativity and computers