Dec 08, 2009

FaceBots

The world's first robot with its own Facebook page (and that can use its information in conversations with "friends") has been developed by Nikolaos Mavridis and collaborators from the Interactive Robots and Media Lab at the United Arab Emirates University.

The main hypothesis of the FaceBots project is that long-term human-robot interaction will benefit from references to "shared memories" and "events relevant to shared friends" in human-robot dialogues.

More to explore:

N. Mavridis, W. Kazmi and P. Toulis, "Friends with Faces: How Social Networks Can Enhance Face Recognition and Vice Versa", contributed book chapter to Computational Social Networks Analysis: Trends, Tools and Research Advances, Springer Verlag, 2009. pdf

N. Mavridis, W. Kazmi, P. Toulis, C. Ben-AbdelKader, "On the synergies between online social networking, Face Recognition, and Interactive Robotics", CaSoN 2009. pdf

N. Mavridis, C. Datta et al, "Facebots: Social robots utilizing and publishing social information in Facebook", IEEE HRI 2009. pdf

22:41 Posted in AI & robotics, Social Media | Permalink | Comments (0) | Tags: robotics, artificial intelligence, social networks

Mar 16, 2008

A second life for AI

Source: Eetimes

Passing the Turing test, the holy grail of AI (a human conversing with a computer can't tell it's not human), may now be possible in a limited way by using the world's fastest supercomputer (IBM's Blue Gene) to mimic the behavior of a human-controlled avatar in a virtual world, according to AI experts at Rensselaer Polytechnic Institute. "We are building a knowledge base that corresponds to all of the relevant background for our synthetic character--where he went to school, what his family is like, and so on," said Selmer Bringsjord, head of Rensselaer's Cognitive Science Department and leader of the research project. The researchers plan to engineer, from the start, a full-blown intelligent character and converse with him in an interactive virtual environment, like Second Life.

read full article here

23:46 Posted in AI & robotics, Virtual worlds | Permalink | Comments (0) | Tags: virtual reality, artificial intelligence

Dec 19, 2007

Avatar-controlled robots

Via KurzweilAI.net

Researchers at Korea Advanced Institute of Science and Technology have developed a system for controlling physical robots using software robots, displayed as virtual-reality avatars.

Article

23:53 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence, virtual reality

Dec 04, 2007

Simroid

Via Pink Tentacle

Simroid is a robotic dental patient designed by Kokoro Company Ltd as a training tool for dentists.

The simulated patient can follow spoken instructions, closely monitor a dentist’s performance during mock treatments, and react in a human-like way to mouth pain thanks to mouth sensors.

08:37 Posted in AI & robotics | Permalink | Comments (0) | Tags: robotics, artificial intelligence

Oct 25, 2007

Japanese android recognizes and uses body language

Via Pink Tentacle

Japan’s National Institute of Information and Communications Technology (NICT) researchers have developed an autonomous humanoid robot that can recognize and use body language. According to the press release, the android can use nonverbal communication skills such as gestures and touch to facilitate natural interaction with humans. NICT researchers envision future applications of this technology in robots that can work in the home or assist with rescue operations when disaster strikes.

NICT press release (Japanese)

22:45 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence, robotics

Oct 07, 2007

NIPS 2007 WORKSHOP: Robotics Challenges for Machine Learning

Dates: 7-8 December, 2007

Organizers:

Jan Peters (Max Planck Institute for Biological Cybernetics & USC), Marc Toussaint (Technical University of Berlin)

http://www.robot-learning.de

email: nips07@robot-learning.de

Acceptance Notification: October 26, 2007

22:12 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence, robotics

Oct 01, 2007

Artificial brain falls for optical illusions

Via New Scientist

A computer program that emulates the human brain falls for the same optical illusions humans do.

It suggests the illusions are a by-product of the way babies learn to filter their complex surroundings. Researchers say this means future robots must be susceptible to the same tricks as humans are in order to see as well as us.

For some time, scientists have believed one class of optical illusions results from the way the brain tries to disentangle the colour of an object and the way it is lit. An object may appear brighter or darker, either because of the shade of its colour, or because it is in bright light or shadows.

The brain learns how to tackle this through trial and error when we are babies, the theory goes. Mostly it gets it right, but occasionally a scene contradicts our previous experiences. The brain gets it wrong and we perceive an object lighter or darker than it really is – creating an illusion.
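To make the ambiguity concrete, here is a minimal sketch in Python. It assumes a toy model in which the light reaching the eye is simply surface reflectance multiplied by illumination; this model, the function name and the numbers are illustrative assumptions, not the program described in the article.

```python
import math

# Toy model (an assumption for illustration, not the researchers' program):
# light reaching the eye = surface reflectance * illumination.
def luminance(reflectance: float, illumination: float) -> float:
    """Return the light reaching the eye from a surface under the toy model."""
    return reflectance * illumination

# A dark surface in bright light and a light surface in shadow...
dark_in_sun = luminance(reflectance=0.2, illumination=100.0)
light_in_shade = luminance(reflectance=0.8, illumination=25.0)

# ...send the same signal to the eye, so the signal alone cannot
# reveal which combination of colour and lighting produced it.
same_signal = math.isclose(dark_in_sun, light_in_shade)
print(dark_in_sun, light_in_shade, same_signal)
```

Because two very different scenes produce the same signal, any viewer, human or artificial, has to guess from past experience, and a guess can be wrong.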

Read full article

22:15 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence

Sep 20, 2007

Japanese seniors bored with robot

From Robot.net

According to a Reuters report, Japanese senior citizens quickly become bored with the simple robots so far introduced into nursing homes:

"The residents liked ifbot for about a month before they lost interest. Stuffed animals are more popular." Ifbot is a small robot that can converse, sing, express emotions, and even present trivia quizzes to senior citizens. According to the article the robot has spent most of the past year sitting in a corner, unused. Another robot, Hopis, that looked like a furry pink dog has gone out of production due to poor sales. Hopis was designed to monitor blood sugar, blood pressure, and body temperature. One problem may be that both robots are little more than advanced toys. Neither can help elderly people with day-to-day problems they face such as getting around their house, reading small print, or taking a bath. The elderly have found utilitarian improvements in existing devices more useful: height-adjustable countertops or extra-big control buttons on household gadgets. Whether seniors will find robots that can help with their utilitarian needs more to their liking than fluffy pupbots that sing remains to be seen."

21:25 Posted in AI & robotics | Permalink | Comments (0) | Tags: robotics, artificial intelligence

Sep 16, 2007

EU researchers implanted an artificial cerebellum inside a robot

Roland Piquepaille reports that "An international team of European researchers has implanted an artificial cerebellum - the portion of the brain that controls motor functions - inside a robotic system. This EU-funded project is dubbed SENSOPAC, an acronym for "SENSOrimotor structuring of perception and action for emerging cognition". One of the goals of this project is to design robots able to interact with humans in a natural way. This project, which should be completed at the end of 2009, also wants to produce robots which would act as home-helpers for disabled people, such as persons affected by neurological disorders, such as Parkinson's disease."

SENSOPAC website

22:39 Posted in AI & robotics | Permalink | Comments (0) | Tags: robotics, artificial intelligence

Sep 13, 2007

Telepresence robot for interpersonal communication with the elderly

Developing a Telepresence Robot for Interpersonal Communication with the Elderly in a Home Environment.

Telemed J E Health. 2007 Aug;13(4):407-424

Authors: Tsai TC, Hsu YL, Ma AI, King T, Wu CH

"Telepresence" is an interesting field that includes virtual reality implementations with human-system interfaces, communication technologies, and robotics. This paper describes the development of a telepresence robot called Telepresence Robot for Interpersonal Communication (TRIC) for the purpose of interpersonal communication with the elderly in a home environment. The main aim behind TRIC's development is to allow elderly populations to remain in their home environments, while loved ones and caregivers are able to maintain a higher level of communication and monitoring than via traditional methods. TRIC aims to be a low-cost, lightweight robot, which can be easily implemented in the home environment. Under this goal, decisions on the design elements included are discussed. In particular, the implementation of key autonomous behaviors in TRIC to increase the user's capability of projection of self and operation of the telepresence robot, in addition to increasing the interactive capability of the participant as a dialogist are emphasized. The technical development and integration of the modules in TRIC, as well as human factors considerations are then described. Preliminary functional tests show that new users were able to effectively navigate TRIC and easily locate visual targets. Finally the future developments of TRIC, especially the possibility of using TRIC for home tele-health monitoring and tele-homecare visits are discussed.

22:32 Posted in AI & robotics, Telepresence & virtual presence | Permalink | Comments (0) | Tags: robotics, artificial intelligence, telepresence

Jul 26, 2007

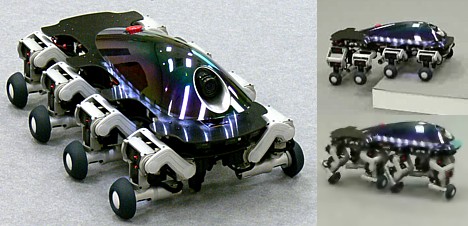

Halluc II: 8-legged robot vehicle

Researchers at the Chiba Institute of Technology have developed a robotic vehicle with eight wheels and legs designed to drive or walk over rugged terrain. The agile robot, which the developers aim to put into practical use within the next five years, can move sideways, turn around in place and drive or walk over a wide range of obstacles.

The researchers hope the robot’s abilities will help out with rescue operations, and they would like to see Halluc II’s technology put to use in transportation for the mobility-impaired.

Here’s a short video of the model in action.

19:05 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence, robotics

Jul 14, 2007

RunBot

Researchers from Germany and the United Kingdom have developed a bipedal walking robot, capable of self-stabilizing via a highly developed learning process.

From the study abstract:

In this study we present a planar biped robot, which uses the design principle of nested loops to combine the self-stabilizing properties of its biomechanical design with several levels of neuronal control. Specifically, we show how to adapt control by including online learning mechanisms based on simulated synaptic plasticity. This robot can walk with a high speed (>3.0 leg length/s), self-adapting to minor disturbances, and reacting in a robust way to abruptly induced gait changes. At the same time, it can learn walking on different terrains, requiring only few learning experiences. This study shows that the tight coupling of physical with neuronal control, guided by sensory feedback from the walking pattern itself, combined with synaptic learning may be a way forward to better understand and solve coordination problems in other complex motor tasks.

The paper: Manoonpong P, Geng T, Kulvicius T, Porr B, Wörgötter F (2007) Adaptive, Fast Walking in a Biped Robot under Neuronal Control and Learning. PLoS Comput Biol 3(7): e134

16:18 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence, robotics

Jul 11, 2007

Using a Robot to Teach Human Social Skills

Via KurzweilAI

A humanoid robot designed to teach autistic children social skills has begun testing in British schools. Known as KASPAR (Kinesics and Synchronisation in Personal Assistant Robotics), the $4.33 million bot smiles, simulates surprise and sadness

Read full article

21:26 Posted in AI & robotics, Cybertherapy | Permalink | Comments (0) | Tags: artificial intelligence, robotics, cybertherapy

Jul 06, 2007

Epigenetic Robotics 2007 (Extended Deadline)

Via NeuroBot

5-7 November 2007, Piscataway, NJ, USA

Seventh International Conference on Epigenetic Robotics: Modeling Cognitive Development in Robotic Systems

http://www.epigenetic-robotics.org

Email: epirob07@epigenetic-robotics.org

Location:

Rutgers, The State University of New Jersey,

Piscataway, NJ, USA

*Extended* Submission Deadline: 1 August 2007

16:16 Posted in AI & robotics, Call for papers | Permalink | Comments (0) | Tags: artificial intelligence, robotics

Apr 29, 2007

First DARPA Limb Prototype

From the DARPA press release

(via Medgadget)

An international team led by the Johns Hopkins University Applied Physics Laboratory (APL) in Laurel, Md., has developed a prototype of the first fully integrated prosthetic arm that can be controlled naturally, provides sensory feedback, and allows for eight degrees of freedom--a level of control far beyond the current state of the art for prosthetic limbs. Proto 1, developed for the Defense Advanced Research Projects Agency (DARPA) Revolutionizing Prosthetics Program, is a complete limb system that also includes a virtual environment used for patient training, clinical configuration, and to record limb movements and control signals during clinical investigations.

The DARPA prosthetics program is an ambitious effort to provide the most advanced medical and rehabilitative technologies for military personnel injured in the line of duty. Over the last year, the APL-led Revolutionizing Prosthetics 2009 (RP 2009) team has worked to develop a prosthetic arm that will restore significant function and sensory perception of the natural limb. Proto 1 and its virtual environment system were delivered to DARPA ahead of schedule, and Proto 1 was fitted for clinical evaluations conducted by team partners at the Rehabilitation Institute of Chicago (RIC) in January and February.

"This progress represents the first major step in a very challenging program that spans four years and involves more than 30 partners, including government agencies, universities, and private firms from the United States, Europe, and Canada," says APL's Stuart Harshbarger, who leads the program. "The development of this first prototype within the first year of this program is a remarkable accomplishment by a highly talented and motivated team and serves as validation that we will be able to implement DARPA's vision to provide, by 2009, a mechanical arm that closely mimics the properties and sensory perception of a biological limb."

APL, which was responsible for much of the design and fabrication of Proto 1, and other team members are already hard at work on a second prototype, expected to be unveiled in late summer. It will have more than 25 degrees of freedom and the strength and speed of movement approaching the capabilities of the human limb, combined with more than 80 individual sensory elements for feedback of touch, temperature, and limb position.

"There is still significant work to be done to determine how best to control this number of degrees of freedom, and ultimately how to incorporate sensory feedback based on these sensory inputs within the human nervous system," Harshbarger says. "The APL team is already driving a virtual model of Proto 2 with data recorded during the clinical evaluation of Proto 1, and the team is working to identify a robust set of grasps that can be controlled by a second patient later this year."

Another exciting development is the functional demonstration of Injectable MyoElectric Sensor (IMES) devices--very small injectable or surgically implantable devices used to measure muscle activity at the source, versus the surface electrodes on the skin that were used during testing of the first prototype.

18:59 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence, robotics

Apr 27, 2007

Evolution of visually guided behavior in artificial agents

Evolution of visually guided behavior in artificial agents.

Network. 2007 Mar;18(1):11-34

Authors: Boots B, Nundy S, Purves D

Recent work on brightness, color, and form has suggested that human visual percepts represent the probable sources of retinal images rather than stimulus features as such. Here we investigate the plausibility of this empirical concept of vision by allowing autonomous agents to evolve in virtual environments based solely on the relative success of their behavior. The responses of evolved agents to visual stimuli indicate that fitness improves as the neural network control systems gradually incorporate the statistical relationship between projected images and behavior appropriate to the sources of the inherently ambiguous images. These results: (1) demonstrate the merits of a wholly empirical strategy of animal vision as a means of contending with the inverse optics problem; (2) argue that the information incorporated into biological visual processing circuitry is the relationship between images and their probable sources; and (3) suggest why human percepts do not map neatly onto physical reality.

13:06 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence, robotics

Apr 22, 2007

Seventh International Conference on Epigenetic Robotics

Seventh International Conference on Epigenetic Robotics: Modeling Cognitive Development in Robotic Systems

Call for Papers: Epigenetic Robotics 2007

5-7 November 2007, Piscataway, NJ, USA

Location: Rutgers, The State University of New Jersey, Piscataway, NJ, USA

22:17 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence, robotics

Apr 11, 2007

MOBI

Graham Smith is a leading expert in the fields of telepresence, virtual reality, videoconferencing and robotics. He has worked with leading Canadian high tech companies for more than 14 years, including Nortel, Vivid Effects, VPL, BNR and IMAX. Graham initiated and headed the Virtual Reality Artist Access Program at the world-renowned McLuhan Program at the University of Toronto, and has lectured internationally. He holds numerous patents in the field of telepresence and panoramic imaging, and was recognized in Macleans magazine as one of the top 100 Canadians to watch.

23:05 Posted in AI & robotics, Telepresence & virtual presence | Permalink | Comments (0) | Tags: robotics, artificial intelligence, telepresence

Mar 16, 2007

Socially assistive robotics for post-stroke rehabilitation

Journal of NeuroEngineering and Rehabilitation

Maja J Matarić

Background: Although there is a great deal of success in rehabilitative robotics applied to patient recovery post stroke, most of the research to date has dealt with providing physical assistance. However, new rehabilitation studies support the theory that not all therapy need be hands-on. We describe a new area, called socially assistive robotics, that focuses on non-contact patient/user assistance. We demonstrate the approach with an implemented and tested post-stroke recovery robot and discuss its potential for effectiveness. Results: We describe a pilot study involving an autonomous assistive mobile robot that aids stroke patient rehabilitation by providing monitoring, encouragement, and reminders. The robot navigates autonomously, monitors the patient's arm activity, and helps the patient remember to follow a rehabilitation program. We also show preliminary results from a follow-up study that focused on the role of robot physical embodiment in a rehabilitation context. Conclusion: We outline and discuss future experimental designs and factors toward the development of effective socially assistive post-stroke rehabilitation robots.

21:46 Posted in AI & robotics | Permalink | Comments (0) | Tags: robotics, artificial intelligence

Robot/computer-assisted motivating systems for personalized, home-based, stroke rehabilitation

21:45 Posted in AI & robotics | Permalink | Comments (0) | Tags: robotics, artificial intelligence