Apr 18, 2006

Cogain project: Communication by Gaze Interaction

The EU-funded five-year project COGAIN (Communication by Gaze Interaction) will attempt to make eye-tracking technologies more affordable for people with disabilities and extend the potential use of the devices to enable users to live more independently.

From the project website:

COGAIN is a network of excellence on Communication by Gaze Interaction, supported by the European Commission's IST 6th framework program. COGAIN integrates cutting-edge expertise on interface technologies for the benefit of users with disabilities. The network aims to gather Europe's leading expertise in eye tracking integration with computers in a research project on assistive technologies for citizens with motor impairments. Through the integration of research activities, the network will develop new technologies and systems, improve existing gaze-based interaction techniques, and facilitate the implementation of systems for everyday communication.


Read the full report

20:30 Posted in Research tools | Permalink | Comments (0) | Tags: Positive Technology

The Guardian: Now the bionic man is real

Via VRoot

From the article: The 1970s gave us the six-million-dollar man. Thirty years and quite a bit of inflation later we have the six-billion-dollar human: not a physical cyborg as such, instead an umbrella term for the latest developments in the growing field of technology for human enhancement.

Helping the blind to see again, being able to carry enormous loads without the prospect of backache and a prosthetic robotic hand that works (almost) like a real one were some of the ideas presented at a recent meeting of engineers, physicists, biologists and computer scientists organised by the American Association of Anatomists...

Read full article

20:29 Posted in AI & robotics | Permalink | Comments (0) | Tags: Positive Technology

VRoot: Polhemus announces new tracker

Polhemus, the industry leader in 6 Degree of Freedom (6DOF) motion capture, tracking and digitizing technologies is proud to announce MINUTEMAN™, the new low cost (under $1,500) 3-Degree-of-Freedom (3DOF) tracking product. MINUTEMAN represents a quantum leap in new technology and state of the art Digital Signal Processor (DSP) electronics which results in a major price reduction for tracking technology. MINUTEMAN is drift free, has the speed of 75 Hz per sensor and offers ease of use via an intuitive Graphical User Interface (GUI). The electronics unit (E-Pod), which is powered by the USB interface and contains the electromagnetic source, is only slightly larger than a pack of playing cards. Full InertiaCube2 emulation software is also provided for plug-and-play hardware replacement without having to worry about rewriting code. The combination of all these attributes clearly positions MINUTEMAN as a new class of electromagnetic tracking, offering significant improvements over competitive technologies.

20:20 Posted in Virtual worlds | Permalink | Comments (0) | Tags: Positive Technology

$25K Prize for Neurobiology

Via Brain Waves

$25,000 Eppendorf & Science Prize for Neurobiology is now accepting entries. Deadline: June 15, 2006.

From the website:

The Eppendorf & Science Prize for Neurobiology acknowledges the increasing importance of this research in advancing our understanding of how the brain and nervous system function, a quest that seems destined for dramatic expansion in the coming decades. This international prize, established in 2002, is intended to encourage and support the work of promising young neurobiologists who have received their PhD or MD within the past 10 years. The prize is awarded annually to one young scientist for the most outstanding neurobiological research conducted by him/her during the past three years, as described in a 1,000-word entrance essay.

20:05 Posted in Research institutions & funding opportunities | Permalink | Comments (0) | Tags: Positive Technology

Brain waves: Neurotechnology Revenues Reach $110 Billion

Via Brain Waves

According to the Neurotechnology Industry 2006 Report, neurotechnology revenues reach $110 Billion.

Produced by Neuroinsight, the report provides a unified market-based framework to help investors, companies and governments quantify opportunities, determine risks and understand the dynamics of this market.

20:01 Posted in Neurotechnology & neuroinformatics | Permalink | Comments (0) | Tags: Positive Technology

United States Patent: Nervous system manipulation by electromagnetic field from monitors

I found this US technology patent, which claims a method for manipulating the nervous system of a subject via broadcast television signals, DVD, and computer terminal (if the link does not work, go to this page and type the number 6,506,148 in the "Query" bar):

| Inventors: | Loos; Hendricus G. (3019 Cresta Way, Laguna Beach, CA 92651) |

| Appl. No.: | 872528 |

| Filed: | June 1, 2001 |

11:35 Posted in Persuasive technology | Permalink | Comments (0) | Tags: Positive Technology

WMMNA: Children 'bond with robots'

Researchers from Sony Intelligence Dynamics Laboratories and a nursery school in San Diego are conducting an experiment that focuses on how children can develop emotions toward robots. Results of this research could be used to develop smarter and friendlier humanoid robots, with a huge commercial potential.

11:17 Posted in AI & robotics | Permalink | Comments (0) | Tags: Positive Technology

High Speed, Light-based Brain Activity Detector

From Neuromarketing

Neuroscientists Gabriele Gratton and Monica Fabiani at the University of Illinois Beckman Institute’s Cognitive Neuroimaging Laboratory are using very intense near-infrared illumination to measure neuronal activity in the cortex:

The EROS is a new non-invasive brain imaging method that we are developing at the CNL. Our research has determined that this technique possesses a unique combination of spatial and temporal resolution. This makes it possible to use EROS to measure the activity in localized cortical areas. For this reason, EROS can be used to analyze the relative timing of activity in different areas, to study the order of recruitment of different cortical areas, and to examine the connections between areas. These are all questions that are difficult to study with other brain imaging methods.

According to these researchers, the EROS system can measure very short intervals of activity, down to the millisecond level. Its biggest shortcoming is the inability to detect activity more than a few centimeters deep, but it is a relatively inexpensive technique (compared to fMRI and PET) that is not invasive to the test subject.

More information about EROS can be found in the paper entitled "Fast and Localized Event-Related Optical Signals (EROS) in the Human Occipital Cortex: Comparisons with the Visual Evoked Potential and fMRI" (Neuroimage 6, 168–180 (1997)).

11:05 Posted in Research tools | Permalink | Comments (0) | Tags: Positive Technology

Moving while being seated

Via Emerging Technology Trends

Researchers from the Max Planck Institute (Germany) and Chalmers University of Technology (Sweden) have developed a new virtual reality prototype that gives users the illusion of movement while they remain seated. According to the developers, this approach could lead to commercial low-cost VR simulators in the near future.

The simulator "exploits a vection illusion of the brain, which makes us believe we are moving when actually we are stationary. The same can be experienced, for instance, when you are stopped at a traffic light in your car and the car next to you edges forward. Your brain interprets this peripheral visual information as though you are moving backwards".


More information about the scientific background of this approach can be found in the paper entitled "Influence of Auditory Cues on the Visually Induced Self-Motion Illusion (Circular Vection) in Virtual Reality" (PDF format, 9 pages, 1.22 MB).

The VR system is the main outcome of the EU-funded project POEMS ("Perceptually Oriented Ego-Motion Simulation").

10:41 Posted in Virtual worlds | Permalink | Comments (0) | Tags: Positive Technology

Apr 17, 2006

PhD in Computing/Virtual Reality & Neuroscience - Bournemouth, UK

Applicants must have (or expect to receive) a good honours degree or equivalent in computer science or related discipline and demonstrate:

* An excellent understanding of Human-Computer Interaction

* Strong object oriented design and programming skills

* Strong computer graphics knowledge and experience

Studentships will be funded at £12,500 per year, starting 01/10/2006. Please send applications (including covering letter, CV and two academic referees) to the address below.

Dr Fiona Knight

Graduate School Manager

The Graduate School

Bournemouth University

PG63 Talbot Campus

Fern Barrow, Poole

Dorset BH12 5BB

United Kingdom

Tel: +44 (0)1202 965902

Fax: +44 (0)1202 965069

Email: graduateschool@bournemouth.ac.uk

14:28 Posted in Research institutions & funding opportunities | Permalink | Comments (0) | Tags: Positive Technology

Apr 16, 2006

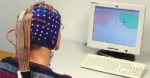

Neurofeedback for the Treatment of Epilepsy

Foundation and Practice of Neurofeedback for the Treatment of Epilepsy.

Appl Psychophysiol Biofeedback. 2006 Apr 14;

Authors: Sterman MB, Egner T

This review provides an updated overview of the neurophysiological rationale, basic and clinical research literature, and current methods of practice pertaining to clinical neurofeedback. It is based on documented findings, rational theory, and the research and clinical experience of the authors. While considering general issues of physiology, learning principles, and methodology, it focuses on the treatment of epilepsy with sensorimotor rhythm (SMR) training, arguably the best established clinical application of EEG operant conditioning. The basic research literature provides ample data to support a very detailed model of the neural generation of SMR, as well as the most likely candidate mechanism underlying its efficacy in clinical treatment. Further, while more controlled clinical trials would be desirable, a respectable literature supports the clinical utility of this alternative treatment for epilepsy. However, the skilled practice of clinical neurofeedback requires a solid understanding of the neurophysiology underlying EEG oscillation, operant learning principles and mechanisms, as well as an in-depth appreciation of the ins and outs of the various hardware/software equipment options open to the practitioner. It is suggested that the best clinical practice includes the systematic mapping of quantitative multi-electrode EEG measures against a normative database before and after treatment to guide the choice of treatment strategy and document progress towards EEG normalization. We conclude that the research literature reviewed in this article justifies the assertion that neurofeedback treatment of epilepsy/seizure disorders constitutes a well-founded and viable alternative to anticonvulsant pharmacotherapy.

20:53 Posted in Biofeedback & neurofeedback | Permalink | Comments (0) | Tags: Positive Technology

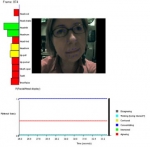

Emotion mapping

Via the Observer

30-year-old artist Christian Nold has created an "emotion mapping" device that allows people to compare their moods with their surroundings. It measures not just major reactions that tend to stick in the memory, but also the degrees of stimulation caused by speaking to a stranger, crossing the road or listening to birdsong. Emotion mapping combines two existing technologies: a galvanic skin response sensor, which records the changing sweat levels on the skin as a measure of mental arousal, and the Global Positioning System. By calling up data from the finger cuffs, emotion mapping displays the user's fluctuating level of arousal, expressed as peaks and troughs along the route. So a walk down a country lane might produce only a mild curve. But dashing across a busy road or being confronted by a mugger might show up as a sudden spike.
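
The pairing of arousal readings with positions can be illustrated with a minimal Python sketch. This is not Nold's implementation: the data layout, the function names `nearest_fix` and `emotion_map`, the sample values and the spike threshold `k` are all assumptions made for illustration. Each skin response reading is matched to the GPS fix closest in time, and readings well above the average for the walk are flagged as spikes.

```python
from bisect import bisect_left
from statistics import mean, stdev


def nearest_fix(gps_times, t):
    """Return the index of the GPS fix whose timestamp is closest to t."""
    i = bisect_left(gps_times, t)
    if i == 0:
        return 0
    if i == len(gps_times):
        return len(gps_times) - 1
    # Pick whichever neighbouring fix is closer in time.
    return i if gps_times[i] - t < t - gps_times[i - 1] else i - 1


def emotion_map(gsr, gps, k=1.5):
    """Attach a position to each skin response reading and flag spikes.

    gsr: list of (timestamp_s, reading) tuples (hypothetical units)
    gps: list of (timestamp_s, lat, lon) tuples, sorted by timestamp
    k:   assumed threshold; a reading is a spike when it lies more than
         k sample standard deviations above the mean of the walk
    """
    gps_times = [t for t, _, _ in gps]
    values = [v for _, v in gsr]
    threshold = mean(values) + k * stdev(values)
    points = []
    for t, v in gsr:
        _, lat, lon = gps[nearest_fix(gps_times, t)]
        points.append({"lat": lat, "lon": lon, "arousal": v, "spike": v > threshold})
    return points


# Made-up sample walk: one reading is far higher than the rest.
gsr = [(0, 2.0), (10, 2.1), (20, 2.0), (30, 6.0), (40, 2.2)]
gps = [(0, 51.500, -0.120), (15, 51.501, -0.121),
       (30, 51.502, -0.122), (45, 51.503, -0.123)]

points = emotion_map(gsr, gps)
for p in points:
    if p["spike"]:
        print("spike at", p["lat"], p["lon"])
```

A real device would presumably smooth the signal and interpolate between fixes; nearest-timestamp matching is used here only because it is the simplest way to show how the two data streams line up along a route.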


And here is the tool and what Christian did with it. It's actually worth seeing, especially the "Google Earth" version of the emotion map:

11:55 Posted in Emotional computing | Permalink | Comments (0) | Tags: Positive Technology

Apr 14, 2006

Emotional Social Intelligence Prosthetic

Via Wired

Wired reports that MIT researchers are developing a wearable computer with a computational model of "mind-reading".

The prosthetic device should be able to infer affective-cognitive mental states from head and facial displays of people in real time, and communicate these inferences to the wearer via sound and/or tactile feedback.

According to MIT researchers, the system could help people afflicted by autism (who lack the ability to ascertain others' emotional status) in doing the "mind reading". A broader motivation of the project is to explore the role of technology in promoting social-emotional intelligence of people and to understand more about how social-emotional skills interact with learning.

A prototype of ESIP was unveiled at the Body Sensor Networks 2006 international workshop at MIT's Media Lab last week. The video cameras captured facial expressions and head movements, then fed the information to a desktop computer that analyzed the data and gave real-time estimates of the individuals' mental states, in the form of color-coded graphs.

19:20 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: Positive Technology

Apr 13, 2006

PervasiveHealth 2006

Call for Papers

PervasiveHealth Conference 2006

Innsbruck, Austria, November 29th - December 1st 2006.

www.pervasivehealth.org

Sponsored by:

-IEEE EMB Society,

-ACM Association for Computing Machinery

-CREATE-NET Center for REsearch And Telecommunication Experimentation for NETworked communities,

-ICST International Communication Sciences and Technology Association

Pervasive healthcare may be defined from two perspectives. First, it is the development and application of pervasive computing (or ubiquitous computing, ambient intelligence) technologies for healthcare, health and wellness management. Second, it seeks to make healthcare available to anyone, anytime, and anywhere by removing locational, time and other restraints while increasing both the coverage and quality of healthcare.

Topics of Interest:

- Design and use of bio-sensors

- Mobile devices for patient monitoring

- Wireless and wearable devices for pervasive healthcare

- Patient monitoring in diverse environments (indoor, outdoor, hospitals, nursing homes, assisted living)

- Continuous vs event-driven monitoring of patients

- Using mobile devices for healthcare information storage, update, and transmission

- Sensing of vital signs and transmission

- Home monitoring for cardiac arrhythmias

- Preventative care: Technologies to improve overall health of people one wouldn't normally call "patients"

Technologies "situated in the environment"

- The use of "environmental" technologies such as cameras, interfaces, and sensor networks

- Data fusion in pervasive healthcare environment

- Managing knowledge in pervasive healthcare

- Content-based encoding of medical information

- Forming ad hoc wireless networks for enhanced monitoring of patients

- Networking support for pervasive healthcare (location tracking, routing, scalable architectures, dependability, and quality of access)

- Managing healthcare emergency vehicles and routing

- Network support for mobile telemedicine

- Ubiquitous health care and monitoring at home

- Ubiquitous medical network and appliances in homes or hospitals

- Ubiquitous computing support for medical works in hospitals

Medical aspects of pervasive healthcare

-Pervasive healthcare applications

-Specific requirements of vital signs in pervasive healthcare environment

-Diversity of patients and their specific requirements

-Representation of medical information in pervasive healthcare environment (multimedia, resolution, processing and storage requirements)

-Role of medical protocols in pervasive healthcare

-Improved delivery of healthcare services

-The usability of wireless-based solutions in healthcare

-Physiological models for interpreting medical sensor data

-Decision support algorithms for sensor analysis

Management of pervasive healthcare

-Security and privacy in pervasive healthcare

-Training of healthcare professionals for pervasive healthcare

-Managing the integration of wireless solutions in pervasive healthcare

-Increasing coverage of healthcare services

-Legal and regulatory issues in pervasive healthcare

-Insurance payments and cost aspects

-Role of HIPAA (Health Insurance Portability and Accountability Act of 1996) in pervasive healthcare

Paper Submissions:

Submissions must follow IEEE's conference style two-column format including figures and references. Conference papers will be published by IEEE and CD proceedings will be distributed during the conference days.

Papers submitted to PERVASIVEHEALTH will consist of 10 pages maximum.

Deadlines

-Paper Submission Deadline: June 15th, 2006

-Acceptance: September 1st, 2006

-Camera Ready: September 14th, 2006

19:10 Posted in Call for papers | Permalink | Comments (0) | Tags: Positive Technology

Apr 12, 2006

Neural Interfaces: Chip ramps up neuron-to-computer communication

Via VRoot (From New Scientist):

A specialised microchip that could communicate with thousands of individual brain cells has been developed by European scientists.

The device will help researchers examine the workings of interconnected brain cells, and might one day enable them to develop computers that use live neurons for memory.

The computer chip is capable of receiving signals from more than 16,000 mammalian brain cells, and sending messages back to several hundred cells. Previous neuron-computer interfaces have either connected to far fewer individual neurons, or to groups of neurons clumped together.

22:59 Posted in Brain-computer interface | Permalink | Comments (0) | Tags: Positive Technology

Social Network Mnemonics for Teenagers

Nicolas Nova (Pasta and Vinegar) has found an article describing a curious device: Telebeads: Social Network Mnemonics for Teenagers by Jean-Baptiste Labrune and Wendy Mackay (IDC2006)

From the article:

This article presents the design of Telebeads, a conceptual exploration of mobile mnemonic artefacts. Developed together with five 10-14 year olds across two participatory design sessions, we address the problem of social network massification by allowing teenagers to link individuals or groups with wearable objects such as handmade jewelery. We propose different concepts and scenarios using mixed-reality mobile interactions to augment crafted artefacts and describe a working prototype of a bluetooth luminous ring. We also discuss what such communication appliances may offer in the future with respect to interperception, experience networks and creativity analysis.

The ring addresses two primary functions requested by the teens: providing a physical instantiation of a particular person in a wearable object and allowing direct communication with that person. (…) We have just completed an ejabberd server, running on Linux on a PDA, which will serve as a smaller, but more powerful telebead interface

22:51 Posted in Wearable & mobile | Permalink | Comments (0) | Tags: Positive Technology

Computer simulations of the mind

Scientific American Mind has a free online article about computer simulations of the mind. The article analyzes how recent technological advances are narrowing the gap between human brains and circuitry.

22:42 Posted in AI & robotics | Permalink | Comments (0) | Tags: Positive Technology

New BCI shown off at CEBIT

Via New Scientist

Researchers at the Fraunhofer Institute in Berlin and Charité, the medical school of Berlin Humboldt University in Germany, have developed and successfully tested a brain-computer interface, which could provide a way for paralysed patients to operate computers, or for amputees to operate electronically controlled artificial limbs.

The device allows users to type messages onto a computer screen by mentally controlling the movement of a cursor. A user must wear a cap containing EEG electrodes, and imagine moving their left or right arm in order to manoeuvre the cursor around.

The device was recently presented at the CeBit electronics fair in Hanover, Germany.

Read full article

22:34 Posted in Brain-computer interface | Permalink | Comments (0) | Tags: Positive Technology

Apr 11, 2006

First-person experience and usability of co-located interaction in a projection-based virtual environment

From VRoot

First-person experience and usability of co-located interaction in a projection-based virtual environment

A. Simon

Virtual Reality Software and Technology, Proceedings of the ACM symposium on Virtual reality software and technology, Pages: 23 - 30, 2005;

Large screen projection-based display systems are very often not used by a single user alone, but shared by a small group of people. We have developed an interaction paradigm allowing multiple users to share a virtual environment in a conventional single-view stereoscopic projection-based display system, with each of the users handling the same interface and having a full first-person experience of the environment. Multi-viewpoint images allow the use of spatial interaction techniques for multiple users in a conventional projection-based display.

21:00 Posted in Virtual worlds | Permalink | Comments (0) | Tags: Positive Technology

New book about Ubiquitous Computing

Via Smart Mobs

Jon Lebkowsky (WorldChanging) interviews Adam Greenfield about his new book "Everyware: The Dawning Age of Ubiquitous Computing"

WorldChanging: So you're actually coming from a user experience perspective in your analysis of ubicomp?


Adam Greenfield: That's the genesis of it, yeah. That was the real emotional hook for me, just thinking about people having to configure their toilets and people having to configure their teapots to boil a kettle of tea. And just taking a direct analogy with the technical systems that are around us now - you know, dropped cellphone calls and the blue screen of death, and everything that we're familiar with from the PC and mobile infrastructure.

WorldChanging: The blue toilet of death! (Laughter.)

Adam Greenfield: Can you imagine? And I think what heightened the sense of urgency was that this stuff was moving beyond prototypes in short order. It was moving toward consumer products, toward the digital home and digital convergence. The products were starting to be packaged and shipped. And still nobody was talking about the nonlinear interactions of network systems in one space all operating at once - it's as if none of the people who were designing them had, not so much thought, but felt what it would be like to sit in the middle of a room where you've got fifteen different technical interfaces around you, and you're responding to all of them at once, and they're all responding to you at once.

A List Apart presents an introduction to Everyware. Free sample sections are available at the Everyware mini site.

20:50 Posted in Pervasive computing | Permalink | Comments (0) | Tags: Positive Technology