Feb 08, 2013

Social sciences get into the Cloud

Scientific disciplines are usually classified into two broad categories: natural sciences and social sciences. Natural sciences investigate the physical, chemical and biological aspects of Earth, the Universe and the life forms that inhabit it. Social sciences (also called human sciences) focus on the origin and development of human beings, societies, institutions, social relationships, etc.

Natural sciences are often regarded as “hard” research disciplines, because they are based on precise numeric predictions about experimental data. Social sciences, on the other hand, are seen as “soft” because they tend to rely on more descriptive approaches to understand their object of study.

So, for example, while it has been possible to predict the existence and properties of the Higgs boson from the Standard Model of particle physics, it is not possible to predict the existence and properties of a psychological effect or phenomenon, at least with the same level of precision.

However, the most important difference between natural and social sciences is not in their final objective (since in both fields, hypotheses must be tested by empirical approaches), but in the methods and tools that they use to pursue that objective. Galileo Galilei argued that we cannot understand the universe “(…) if we do not first learn the language and grasp the symbols, in which it is written. This book is written in the mathematical language, and the symbols are triangles, circles and other geometrical figures, without whose help it is impossible to comprehend a single word of it; without which one wanders in vain through a dark labyrinth.”

But unlike astronomy, physics and chemistry, which are able to read the “book of nature” using increasingly sophisticated instruments (such as microscopes and telescopes), social sciences have no comparable tools for investigating social and mental processes in their natural contexts. To understand these phenomena, researchers can either focus on macroscopic aggregates of behavior (as in sociology) or analyse microscopic aspects in controlled settings (as in psychology).

Despite these limitations, there is no doubt that social sciences have produced interesting findings: today we know much more about human beings than we did a century ago. At the same time, however, advances in the natural sciences have been far more impressive and groundbreaking. From the discovery of atomic energy to the sequencing of the human genome, the natural sciences have changed our lives and could do so even more in the coming decades.

However, thanks to the explosive growth of information and communication technologies, this state of affairs may soon change, leading to a paradigm shift in the way social phenomena are investigated. Indeed, thanks to the pervasive diffusion of the Internet and mobile computing devices, most of our daily activities leave a digital footprint, which provides data on what we have done and with whom.

Every single hour of our life produces an observable trace, which can be translated into numbers and aggregated to identify specific patterns or trends that would be otherwise impossible to quantify.
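To make the idea concrete, here is a toy Python sketch, assuming a hypothetical list of timestamped activity records (the events and activity labels are invented for illustration): each trace is reduced to a number, the hour of day at which it occurred, and the counts are aggregated to reveal when activity peaks.

```python
from collections import Counter
from datetime import datetime

# Hypothetical digital footprints: (ISO timestamp, activity type).
events = [
    ("2013-02-08T09:15:00", "email"),
    ("2013-02-08T09:47:00", "phone_call"),
    ("2013-02-08T13:05:00", "social_post"),
    ("2013-02-08T13:40:00", "email"),
    ("2013-02-08T13:55:00", "web_search"),
]

def hourly_activity(records):
    """Translate each trace into its hour of day and count traces per hour."""
    counts = Counter(datetime.fromisoformat(ts).hour for ts, _ in records)
    return dict(sorted(counts.items()))

def peak_hour(records):
    """Return the hour of day with the most recorded activity."""
    counts = hourly_activity(records)
    return max(counts, key=counts.get)

print(hourly_activity(events))  # {9: 2, 13: 3}
print(peak_hour(events))        # 13
```

With real data the same aggregation would run over millions of records and many people, which is where the patterns the post describes could emerge.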

Thanks to the emergence of cloud computing, we are now able to collect these digital footprints in large online databases, which can be accessed by researchers for scientific purposes. For social scientists, these databases represent the “book written in the mathematical language”, which they can finally read. An enormous amount of data is already available (embedded in online social networks and organizations' digital archives, or saved in the internal memory of our smartphones, tablets and PCs), although it is not always accessible, because much of it is held by private companies and government agencies.

Social scientists are starting to realize that the advent of “big data” is offering unprecedented opportunities for advancing their disciplines. For example, Lazer and colleagues recently published in Science (2009, 323:5915, pp. 721-723) a sort of “manifesto” of Computational Social Science, in which they explain the potential of this approach for collecting and analyzing data at a scale that may reveal patterns of individual and group behavior.

However, in order to exploit this potential, social scientists have to open their minds to new tools, methods and approaches. Indeed, analysing and making sense of huge quantities of data that change over time requires mathematical and computer science skills that are usually not part of the training of the average social scientist. But acquiring new mathematical competences may not be enough. Most research psychologists, for example, are not familiar with new technologies such as mobile computing tools, sensors or virtual environments. Yet these tools may become for psychology what microscopes are for biology or telescopes for astronomy.

If social scientists open their minds to this new horizon, their impact on society could be at least as revolutionary as that of natural scientists over the last two centuries. The emergence of computational social science will allow scientists not only to predict many social phenomena, but also to unify levels of analysis that have until now been addressed separately, e.g. the neuropsychological and the psychosocial levels.

At the same time, the transformative potential of this emerging science also requires careful reflection on its ethical implications, particularly for protecting participants' privacy.

18:29 Posted in Research tools | Permalink | Comments (0)

The Human Brain Project awarded $1.6 billion by the EU

At the end of January, the European Commission officially announced the selection of the Human Brain Project (HBP) as one of its two FET Flagship projects. Federating more than 80 European and international research institutions, the Human Brain Project is planned to last ten years (2013-2023), at an estimated cost of 1.19 billion euros.

The project is the first attempt to “reconstruct the brain piece by piece and building a virtual brain in a supercomputer”. Led by neuroscientist Henry Markram, it builds on the Blue Brain Project, launched in 2005 as a joint research initiative between the Brain Mind Institute at the École Polytechnique Fédérale de Lausanne (EPFL) and the information technology giant IBM.

Using the impressive processing power of IBM’s Blue Gene/L supercomputer, the project reached its first milestone in December 2006 by simulating a rat cortical column. As of July 2012, Henry Markram’s team had simulated mesocircuits containing approximately 1 million neurons and 1 billion synapses (a neuron count comparable to that of a honey bee brain). The next step, planned for 2014, will be the modelling of a cellular-level rat brain, with 100 mesocircuits totalling a hundred million cells. Finally, the team plans to simulate a full human brain (86 billion neurons) by 2023.
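The milestones above imply steep scale-ups. A back-of-the-envelope check in Python, using only the rounded figures quoted in this post (so the ratios are approximate, and the synapses-per-neuron value is an average implied by those figures, not a biological measurement):

```python
# Figures quoted above (approximate).
NEURONS_PER_MESOCIRCUIT = 1_000_000        # July 2012 simulation
SYNAPSES_PER_MESOCIRCUIT = 1_000_000_000   # July 2012 simulation
MESOCIRCUITS_IN_RAT_MODEL = 100            # step planned for 2014
NEURONS_HUMAN_BRAIN = 86_000_000_000       # target for 2023

# Average connectivity implied by the 2012 mesocircuit.
synapses_per_neuron = SYNAPSES_PER_MESOCIRCUIT // NEURONS_PER_MESOCIRCUIT

# Size of the planned cellular rat brain model.
neurons_rat_model = NEURONS_PER_MESOCIRCUIT * MESOCIRCUITS_IN_RAT_MODEL

# How much larger the human target is than each earlier milestone.
rat_to_human = NEURONS_HUMAN_BRAIN // neurons_rat_model
meso_to_human = NEURONS_HUMAN_BRAIN // NEURONS_PER_MESOCIRCUIT

print(f"synapses per neuron: {synapses_per_neuron}")   # 1000
print(f"rat model neurons: {neurons_rat_model:,}")     # 100,000,000
print(f"human / rat model: {rat_to_human}x")           # 860x
print(f"human / mesocircuit: {meso_to_human:,}x")      # 86,000x
```

In other words, the 2023 target is roughly 860 times larger than the planned rat brain model.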

Watch the video overview of the Human Brain Project

Nov 11, 2012

The applification of health

Thanks to the accelerating diffusion of smartphones, the number of mobile healthcare apps has grown exponentially in the past few years. Applications now exist to help patients manage diabetes, share information with peers and monitor their mood, to name just a few examples.

Such “applification” of health is part of a larger trend called “mobile health” (or mHealth), which broadly refers to the provision of health-related services via wireless communications. Mobile health is a fast-growing market: according to a Pew Research report, as early as 2011, 17 percent of mobile users were using their phones to look up health and medical information, and Juniper Research recently estimated that 44 million health apps were downloaded in the same year.

The field of mHealth has received a great deal of attention from the scientific community over the past few years, as evidenced by the number of conferences, workshops and publications dedicated to the subject; international healthcare institutions and organizations are also taking mHealth seriously.

For example, the UK Department of Health recently launched the crowdsourcing project Maps and Apps to support the use of existing mobile phone apps and health information maps, and to encourage people to put forward ideas for new ones. The initiative resulted in a collection of 500 health apps voted most popular by the public and health professionals, as well as a list of their ideas for new apps. At the time of writing, the top-rated app is Moodscope, an application that allows users to measure and track their mood and record comments on it. Other popular apps include HealthUnlocked, an online support network that connects people, volunteers and professionals to help them learn, share and give practical support to one another, and FoodWiz.co, an application created by a mother of children with food allergies, which allows users to scan the bar codes on food products to instantly find out which allergens are present. An app to help patients manage diabetes could not be missing from the list: Diabetes UK Tracker allows patients to enter measurements such as blood glucose, caloric intake and weight, which can be displayed as graphs and shared with doctors; the software also features an area where patients can annotate medical information, personal feelings and thoughts.

The astounding popularity of the Maps and Apps initiative suggests the beginning of a new era in medical informatics, yet this emerging vision is not without caveats. As Niall Boyce recently emphasized in the June issue of The Lancet Technology, the main concern associated with the use of apps as self-management tools is the limited evidence of their effectiveness in improving health. Unlike other health interventions, mHealth apps have not been subject to rigorous testing. A potential reason for the lack of randomized evaluations is that most of these apps reach consumers and patients directly, without passing through the traditional medical gatekeepers. However, as Boyce suggests, the availability of trial data would benefit not only patients but also app developers, who could bring more effective and reliable products to market. A further concern relates to the privacy and security of medical data. Although most smartphone-based medical applications use state-of-the-art secure protocols, the wireless use of these devices opens up new vulnerabilities for patients and medical facilities. A recent bulletin issued by the U.S. Department of Homeland Security lists five of the top mobile medical device security risks:

- Insider: The most common ways employees steal data involve network transfer, be it email, remote access or file transfer;

- Malware: These include keystroke loggers and Trojans, tailored to harvest easily accessible data once inside the network;

- Spearphishing: This highly customized technique involves an email-based attack carrying a malicious payload disguised as coming from a legitimate source, and seeking specific information;

- Web: DHS lists silent redirection, obfuscated JavaScript and search engine optimization poisoning among ways to penetrate a network then, ultimately, access an organization’s data;

- Lost equipment: This is a significant problem because it happens so frequently; even a smartphone in the wrong hands can be a gateway into a health entity’s network and records. And the more patient information is stored electronically, the greater the number of people potentially affected when equipment is lost or stolen.

In conclusion, the “applification of healthcare” is at the same time a great opportunity for patients and a great responsibility for medical professionals and developers. To exploit this opportunity while mitigating the risks, it is essential to put in place quality evaluation procedures that make it possible to monitor and optimize the effectiveness of these applications according to evidence-based standards. For example, iMedicalApps provides independent reviews of mobile medical technology and applications by a team of physicians and medical students. Founded by Dr. Iltifat Husain, an emergency medicine resident at the Wake Forest University School of Medicine, iMedicalApps has been listed by the Cochrane Collaboration as an evidence-based, trusted Web 2.0 website.

More to explore:

Read the PVC report: Current and future state of mhealth (PDF FULL TEXT)

Watch the MobiHealthNews video report: What is mHealth?

23:27 Posted in Cybertherapy, Research tools, Self-Tracking, Wearable & mobile | Permalink | Comments (0)

Oct 27, 2012

Using Activity-Related Behavioural Features towards More Effective Automatic Stress Detection

Giakoumis D, Drosou A, Cipresso P, Tzovaras D, Hassapis G, Gaggioli A, Riva G.

PLoS One. 2012;7(9):e43571. doi: 10.1371/journal.pone.0043571. Epub 2012 Sep 19

This paper introduces activity-related behavioural features that can be automatically extracted from a computer system, with the aim to increase the effectiveness of automatic stress detection. The proposed features are based on processing of appropriate video and accelerometer recordings taken from the monitored subjects. For the purposes of the present study, an experiment was conducted that utilized a stress-induction protocol based on the Stroop colour word test. Video, accelerometer and biosignal (Electrocardiogram and Galvanic Skin Response) recordings were collected from nineteen participants. Then, an explorative study was conducted by following a methodology mainly based on spatiotemporal descriptors (Motion History Images) that are extracted from video sequences. A large set of activity-related behavioural features, potentially useful for automatic stress detection, were proposed and examined. Experimental evaluation showed that several of these behavioural features significantly correlate to self-reported stress. Moreover, it was found that the use of the proposed features can significantly enhance the performance of typical automatic stress detection systems, commonly based on biosignal processing.
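The features in this paper build on Motion History Images (MHIs). As a rough illustration only, here is a minimal NumPy sketch of the classic MHI update usually attributed to Bobick and Davis: pixels that changed between consecutive frames are stamped with a maximum value `tau`, while all other pixels decay by one per frame, so higher values mark more recent motion. The threshold, `tau`, and the `motion_energy` summary are assumptions chosen for illustration; this is not the authors' feature-extraction pipeline.

```python
import numpy as np

def update_mhi(mhi, prev_frame, frame, tau=30, diff_threshold=25):
    """One step of the classic Motion History Image update.

    Pixels whose grey level changed by more than diff_threshold between the
    two frames are set to tau; every other pixel decays by 1, floored at 0.
    """
    # Cast to a signed type so the difference of uint8 frames cannot wrap around.
    diff = np.abs(frame.astype(np.int16) - prev_frame.astype(np.int16))
    moving = diff > diff_threshold
    decayed = np.maximum(mhi - 1, 0)
    return np.where(moving, tau, decayed)

def motion_history(frames, tau=30, diff_threshold=25):
    """Fold a sequence of greyscale frames (2-D uint8 arrays) into one MHI."""
    mhi = np.zeros(frames[0].shape, dtype=np.int32)
    for prev_frame, frame in zip(frames, frames[1:]):
        mhi = update_mhi(mhi, prev_frame, frame, tau, diff_threshold)
    return mhi

def motion_energy(mhi, tau=30):
    """Hypothetical summary feature: mean MHI value normalised to [0, 1]."""
    return float(mhi.mean()) / tau

# Toy example: one pixel changes between the first two frames, then stays put.
still = np.zeros((4, 4), dtype=np.uint8)
moved = still.copy()
moved[0, 0] = 255
mhi = motion_history([still, moved, moved])
print(mhi[0, 0])  # 29: stamped with tau=30, then decayed for one frame
```

A stress detector would compute summaries like `motion_energy` over time windows and feed them, alongside biosignal features, to a classifier; which summaries the authors actually used is described in the full paper.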

Full paper available here

13:36 Posted in Emotional computing, Research tools, Wearable & mobile | Permalink | Comments (0)

Aug 04, 2012

The Virtual Brain

The Virtual Brain project promises "to deliver the first open simulation of the human brain based on individual large-scale connectivity", by "employing novel concepts from neuroscience, effectively reducing the complexity of the brain simulation while still keeping it sufficiently realistic".

The Virtual Brain team includes well-recognized neuroscientists from all over the world. In the video below, Dr. Randy McIntosh explains what the project is about.

The first teaser release of The Virtual Brain software suite is available for download for Windows, Mac and Linux: http://thevirtualbrain.org/

13:34 Posted in Blue sky, Neurotechnology & neuroinformatics, Research tools | Permalink | Comments (0)

Apr 20, 2012

Mental workload during brain-computer interface training

Mental workload during brain-computer interface training.

Ergonomics. 2012 Apr 16;

Authors: Felton EA, Williams JC, Vanderheiden GC, Radwin RG

Abstract. It is not well understood how people perceive the difficulty of performing brain-computer interface (BCI) tasks, which specific aspects of mental workload contribute the most, and whether there is a difference in perceived workload between participants who are able-bodied and disabled. This study evaluated mental workload using the NASA Task Load Index (TLX), a multi-dimensional rating procedure with six subscales: Mental Demands, Physical Demands, Temporal Demands, Performance, Effort, and Frustration. Able-bodied and motor disabled participants completed the survey after performing EEG-based BCI Fitts' law target acquisition and phrase spelling tasks. The NASA-TLX scores were similar for able-bodied and disabled participants. For example, overall workload scores (range 0-100) for 1D horizontal tasks were 48.5 (SD = 17.7) and 46.6 (SD = 10.3), respectively. The TLX can be used to inform the design of BCIs that will have greater usability by evaluating subjective workload between BCI tasks, participant groups, and control modalities. Practitioner Summary: Mental workload of brain-computer interfaces (BCI) can be evaluated with the NASA Task Load Index (TLX). The TLX is an effective tool for comparing subjective workload between BCI tasks, participant groups (able-bodied and disabled), and control modalities. The data can inform the design of BCIs that will have greater usability.
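For readers unfamiliar with the instrument, here is a minimal Python sketch of how an overall NASA-TLX score is commonly computed from the six 0-100 subscale ratings: either as the unweighted mean (often called "raw TLX") or weighted by how many times each subscale was chosen in the 15 pairwise comparisons of the original procedure. The abstract does not say which variant the study used, and the ratings and weights below are hypothetical.

```python
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")

def raw_tlx(ratings):
    """Unweighted ('raw') TLX: the mean of the six 0-100 subscale ratings."""
    missing = set(SUBSCALES) - set(ratings)
    if missing:
        raise ValueError(f"missing subscale ratings: {sorted(missing)}")
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)

def weighted_tlx(ratings, weights):
    """Weighted TLX: each weight counts how often that subscale was picked
    in the 15 pairwise comparisons, so the weights must sum to 15."""
    total = sum(weights[s] for s in SUBSCALES)
    if total != 15:
        raise ValueError(f"weights must sum to 15, got {total}")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15

# Hypothetical ratings from one participant after one BCI task.
ratings = {"mental": 70, "physical": 10, "temporal": 40,
           "performance": 50, "effort": 60, "frustration": 40}
weights = {"mental": 5, "physical": 0, "temporal": 2,
           "performance": 3, "effort": 4, "frustration": 1}

print(raw_tlx(ratings))                          # 45.0
print(round(weighted_tlx(ratings, weights), 2))  # 57.33
```

Both variants stay on the same 0-100 range as the overall scores reported above; the weighted version simply lets the dimensions a participant considers most relevant count more.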

19:37 Posted in Brain-computer interface, Research tools | Permalink | Comments (0)

Apr 04, 2012

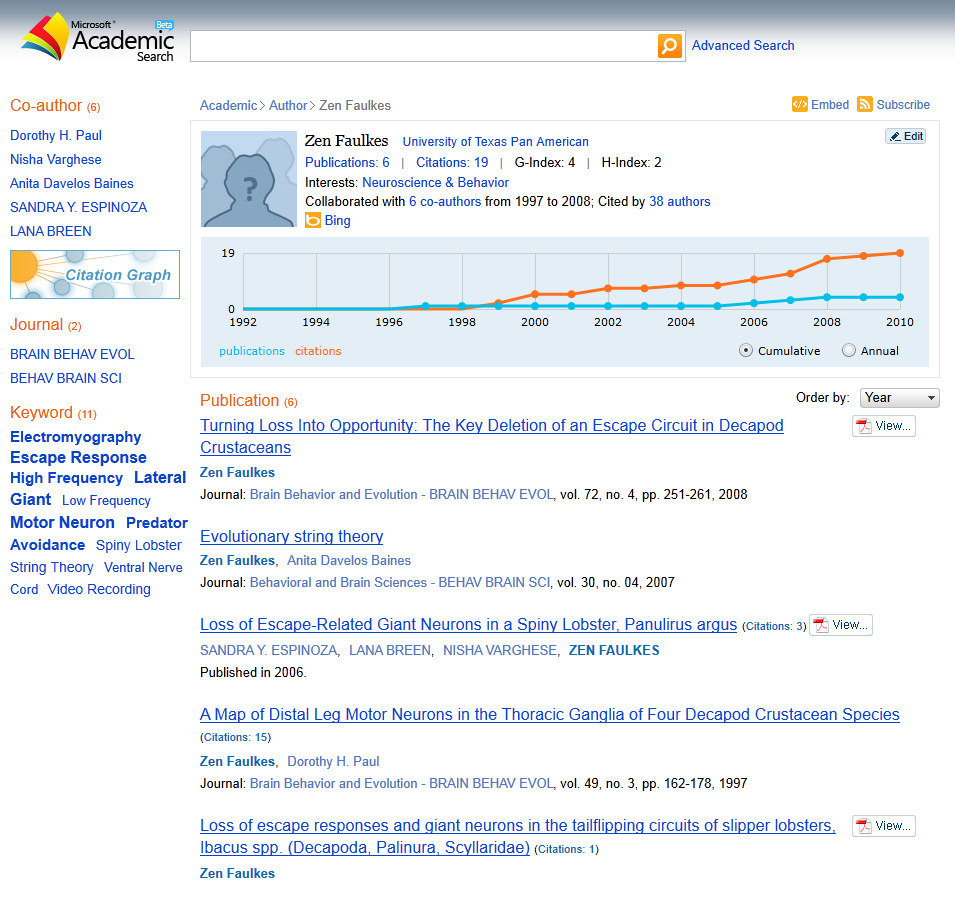

Microsoft Academic Search: nice Science 2.0 service, wrong H-index calculation!

Yesterday I came across Microsoft Academic Search, supposedly the main competitor to Google Scholar Citations.

Although the service provides plenty of interesting and useful features (including customizable author profile pages, a visual explorer, an open API and many others), I don't like AT ALL the fact that it publishes an author's profile, including publication profiles and the publication list, without asking permission to do so (as Google Scholar does).

But what is even worse is that the published data are, in most cases, incomplete or incorrect (or at least they seemed incorrect for most of the authors I included in my search). And this is not limited to the list of publications (which could be understandable) but also affects the calculation of the H-index, which measures both the productivity and the impact of a scholar: it is the largest number h such that h of the author's papers have each been cited at least h times in other publications.
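The H-index is simple to compute, which also makes it easy to see why an incomplete publication list can pull it down. A minimal Python sketch, with hypothetical citation counts:

```python
def h_index(citations):
    """Largest h such that at least h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most cited paper first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break  # every later paper has even fewer citations
    return h

# Hypothetical author with five papers.
print(h_index([10, 8, 5, 4, 3]))  # 4
# Same author, but the profile is missing two of those papers.
print(h_index([10, 8, 3]))        # 3
```

Dropping just two moderately cited papers from the profile lowers the displayed value, and undercounted citations have the same effect.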

Since in the last few years the H-index has become a critical measure of a researcher's importance, displaying an inaccurate value on an author's profile is NOT appropriate. Microsoft may object that its service is open for users to edit (indeed, the Help Center states that "If you find any wrong or out-of-date information about author profile, publication profile or author publication list, you can make corrections or updates directly online"), but my point is that you CANNOT FORCE someone to correct potentially wrong data about their scientific productivity.

One could even suspect that Microsoft purposefully underestimates the H-index to encourage users to join the service in order to edit their data. I do not think that this is the case, but at the same time, I cannot exclude this possibility.

Anyway, these are my two cents and I welcome your comments.

p.s. Interested in other Science 2.0 topics? Join us on Linkedin

11:30 Posted in Research tools, Social Media | Permalink | Comments (0)

Mar 31, 2012

Barriers to and mediators of brain-computer interface user acceptance

Barriers to and mediators of brain-computer interface user acceptance: focus group findings.

Ergonomics. 2012 Mar 29

Authors: Blain-Moraes S, Schaff R, Gruis KL, Huggins JE, Wren PA

Abstract. Brain-computer interfaces (BCI) are designed to enable individuals with severe motor impairments such as amyotrophic lateral sclerosis (ALS) to communicate and control their environment. A focus group was conducted with individuals with ALS (n=8) and their caregivers (n=9) to determine the barriers to and mediators of BCI acceptance in this population. Two key categories emerged: personal factors and relational factors. Personal factors, which included physical, physiological and psychological concerns, were less important to participants than relational factors, which included corporeal, technological and social relations with the BCI. The importance of these relational factors was analysed with respect to published literature on actor-network theory (ANT) and disability, and concepts of voicelessness and personhood. Future directions for BCI research are recommended based on the emergent focus group themes. Practitioner Summary: This manuscript explores human factor issues involved in designing and evaluating brain-computer interface (BCI) systems for users with severe motor disabilities. Using participatory research paradigms and qualitative methods, this work draws attention to personal and relational factors that act as barriers to, or mediators of, user acceptance of this technology.

13:34 Posted in Brain-computer interface, Research tools | Permalink | Comments (0)

May 21, 2011

Science 2.0

The emergence of Web 2.0 has resulted in a number of new communication and participation tools. Wikis, RSS, weblogs and social networks have turned the Internet into a writable platform, where the user acts as a “prosumer”, that is, both a consumer and a producer of information. For many, however, social computing is not just a new phase in the evolution of ICT, but a new model for generating and diffusing knowledge, with a potentially transformative impact on social and cultural processes. The rapid and pervasive diffusion of social computing requires organizations and institutions to face new challenges and rethink their modus operandi.

Science, like any other cultural enterprise, is likely to be deeply affected by the social media revolution. This is not surprising, considering the close relationship that has always existed between the development of science and the development of the Internet. When Tim Berners-Lee, a researcher at the European Particle Physics Lab (CERN) in Switzerland, created the networked hypertext, his main goal was to develop an effective solution to facilitate communication among members of the high-energy physics community, who were located in several countries. In his original proposal to CERN’s management, written in March 1989, Berners-Lee suggested “the integration of a hypertext system with existing data, so as to provide a universal system, and to achieve critical usefulness at an early stage”.

Since those early days of its development, of course, the Web has changed enormously, offering researchers opportunities that are probably beyond the imagination of its inventor. Today’s social media tools and services have the potential to radically transform the way science is conducted, financed and communicated. This “Science 2.0 revolution” unfolds along three major directions: open collaboration, open data and open publication/access.

Open collaboration refers to the possibility of using the tools provided by social networks, wikis and forums to share information and know-how. This strategy allows researchers to exchange protocols, techniques and experimental procedures, and to find solutions to common issues. Open collaboration networks provide a powerful collaboration and learning environment, where experts from different disciplines can join forces to develop new projects, write grant proposals, plan studies, etc.

The second trend, open data, concerns the publication and reuse of scientific data such as maps, genomes, chemical compounds and medical data, without price or permission barriers. Although the concept is not new, it has gained momentum in recent years thanks to the rising popularity of social computing. Advocates of this approach believe that the public availability and reusability of research data not only reduce wasteful duplication of effort but also permit faster progress in science, since different teams can use the same data to test a variety of hypotheses. Recently, prominent exponents of the open data movement have authored a set of principles, the “Panton Principles”, aimed at articulating a view of what best practice should be with respect to data publication for science. A key goal of these principles is to remove any uncertainty, for researchers who wish to use the data, about exactly what they are allowed to do with it.

The third emerging trend in Science 2.0, open access (OA), concerns the provision of unrestricted online access to articles published in scholarly journals. Supporters of open access argue that this approach brings researchers increased visibility, usage and impact for their work. Critics, on the other hand, doubt that this model is economically sustainable and express concerns about quality control. Another criticism is that, since some OA journals require payment from the author, this could generate conflicts of interest and undermine the perceived neutrality of peer review, as journals would have a financial incentive to publish more articles.

Wikis, blogs and other Web 2.0 technologies are paving the way towards new means of collaboration, education and communication for researchers. However, the successful adoption of this approach depends heavily on developing a deep understanding of scientists’ current practices, needs and expectations.

More to explore:

- Science 2.0: Science 2.0 is an open professional network on Linkedin aimed at connecting researchers, consultants and companies and institutions interested in the impact of social media on science and technology.

- ResearchGate: ResearchGate is a community for researchers in the science and technology fields that includes advanced semantic search capabilities. Launched in 2008 by Ijad Madisch, Horst Fickenscher and Sören Hofmayer, this “Facebook for scientists” has gathered a user base of more than 700,000 researchers worldwide.

- Labmeeting: Labmeeting, founded by Mark Kaganovich, Jeremy England, Dan Kaganovich, and Joseph Perla, is an online platform for scientists. It is designed as a document-sharing service for scientific papers and protocols. Members can upload their papers in PDF form, organize them, search them, and share them with other lab members.

- Openwetware: Created by graduate students at MIT in 2005, OpenWetWare is a wiki whose mission is "to support open research, education, publication, and discussion in biological sciences and engineering." All content is available under free content licenses.

- Many Eyes: Many Eyes is a data-sharing site from the Visual Communication Lab at IBM. The platform allows users to upload data and then produce graphic representations for others to view and comment upon.

- OpenClinica: OpenClinica is an open source platform for clinical research, including electronic data capture (EDC) and clinical data management capabilities.

- Directory of Open Access Journals: DOAJ covers free, full-text scientific and scholarly journals in different subjects and languages. The current directory (as of February 2011) includes 6,100 journals and 506,687 articles.

13:18 Posted in Research tools | Permalink | Comments (0)

Mar 03, 2011

Online predictive tools for mental illness: The OPTIMI Project

OPTIMI is an R&D project funded by the European Commission under the European Union's 7th Framework Programme "Personal Health Systems - Mental Health".

The project has two key goals: a) the development of new tools to monitor coping behavior in individuals exposed to high levels of stress; b) the development of online interventions to improve this behavior and reduce the incidence of depression.

To achieve its first goal, OPTIMI will develop technology-based tools to monitor the physiological state and the cognitive, motor and verbal behavior of high risk individuals over an extended period of time and to detect changes associated with stress, poor coping and depression.

A series of “calibration trials” will allow the project to test a broad range of technologies. These will include wearable EEG and ECG sensors to detect subjects’ physiological and cognitive state, accelerometers to characterize their physical activity, and voice analysis to detect signs of depression. These automated measurements will be complemented by electronic diaries, in which subjects report their own behaviors and the stressful situations to which they are exposed. Further, the project will use machine learning to identify patterns in the behavioral and physiological data that predict the psychologists' assessments and the cortisol measurements.

Although the project's objectives are very ambitious, OPTIMI is one of the most advanced initiatives in the field of Positive Technology, so I am very excited to follow its progress and see how far it can go.

19:03 Posted in Cybertherapy, Pervasive computing, Research tools, Self-Tracking, Wearable & mobile | Permalink | Comments (0)

Mar 02, 2011

The Key to Unlocking the Virtual Body: Virtual Reality in the Treatment of Obesity and Eating Disorders

The Key to Unlocking the Virtual Body: Virtual Reality in the Treatment of Obesity and Eating Disorders

Giuseppe Riva, Journal of Diabetes Science and Technology, Volume 5, Issue 2, March 2011

Obesity and eating disorders are usually considered unrelated problems with different causes. However, various studies identify unhealthful weight-control behaviors (fasting, vomiting, or laxative abuse), induced by a negative experience of the body, as the common antecedents of both obesity and eating disorders. But how might negative body image—common to most adolescents, not only to medical patients—be behind the development of obesity and eating disorders? In this paper, I review the “allocentric lock theory” of negative body image as the possible antecedent of both obesity and eating disorders. Evidence from psychology and neuroscience indicates that our bodily experience involves the integration of different sensory inputs within two different reference frames: egocentric (first-person experience) and allocentric (third-person experience). Even though functional relations between these two frames are usually limited, they influence each other during the interaction between long- and short-term memory processes in spatial cognition. If this process is impaired either through exogenous (e.g., stress) or endogenous causes, the egocentric sensory inputs are unable to update the contents of the stored allocentric representation of the body. In other words, these patients are locked in an allocentric (observer view) negative image of their body, which their sensory inputs are no longer able to update even after a demanding diet and a significant weight loss. This article discusses the possible role of virtual reality in addressing this problem within an integrated treatment approach based on the allocentric lock theory.

19:35 Posted in Cybertherapy, Research tools, Virtual worlds | Permalink | Comments (0)

Dec 27, 2010

Metaverse Creativity

Intellect has announced the publication of the groundbreaking new journal Metaverse Creativity, which is the first refereed journal focusing on the examination of creativity in user-defined online virtual worlds such as Second Life.

While such creative activity includes artistic activity, this definition should in no way be limited to artistic output alone but should encompass the output of the various disciplines of design – such as fashion and object design, landscaping and virtual architecture – that are currently all amply manifest in Second Life.

Creativity in a metaverse manifests under unique conditions and parameters that are engendered by the virtual environment itself and it is intrinsically related to these in its very act of realization. Thus metaverse creativity cannot be separated from the underlying Metanomic system (metaverse economy), the legal issues of ownership and copyright, the very geography and related atmospheric/lighting conditions upon which the output is rendered, or the underlying computational system which generates this.

The inaugural issue includes a fascinating editorial by Elif Ayiter and Yacov Sharir.

For a complete list of articles with accompanying abstracts visit: http://www.atypon-link.com/INT/toc/mecr/1/1.

Issue 1 is FREE to view online: http://www.atypon-link.com/INT/toc/mecr/1/1

To subscribe, please visit the journal's page for details: http://bit.ly/epvHg3

A Call for Papers is available here

14:24 Posted in Call for papers, Research tools

Oct 19, 2010

Neurocognitive systems related to real-world prospective memory

PLoS One. 2010;5(10):

Authors: Kalpouzos G, Eriksson J, Sjölie D, Molin J, Nyberg L

BACKGROUND: Prospective memory (PM) denotes the ability to remember to perform actions in the future. It has been argued that standard laboratory paradigms fail to capture core aspects of PM.

METHODOLOGY/PRINCIPAL FINDINGS: We combined functional MRI, virtual reality, eye-tracking and verbal reports to explore the dynamic allocation of neurocognitive processes during a naturalistic PM task in which individuals performed errands in a realistic model of their residential town. Based on eye-movement data and verbal reports, we modeled PM as an iterative loop of five sustained and transient phases: intention maintenance before target detection (TD), TD, intention maintenance after TD, action, and switching, the latter representing the activation of a new intention in mind. The fMRI analyses revealed continuous engagement of a top-down fronto-parietal network throughout the entire task, likely subserving goal maintenance in mind. In addition, a shift was observed from a perceptual (occipital) system while searching for places to go, to a mnemonic (temporo-parietal, fronto-hippocampal) system for remembering what actions to perform after TD. The top-down fronto-parietal network was updated at both TD and switching, the latter likely also being characterized by frontopolar activity.

CONCLUSION/SIGNIFICANCE: Taken together, these findings show how brain systems interact in complementary ways during real-world PM. They support a more complete model of PM that can be applied to naturalistic PM tasks, which we named the PROspective MEmory DYnamic (PROMEDY) model because of its dynamics in both multi-phase iteration and the interaction of distinct neurocognitive networks.

00:09 Posted in Research tools | Tags: neurocognitive systems, prospective memory, virtual reality, eye-tracking

Aug 24, 2010

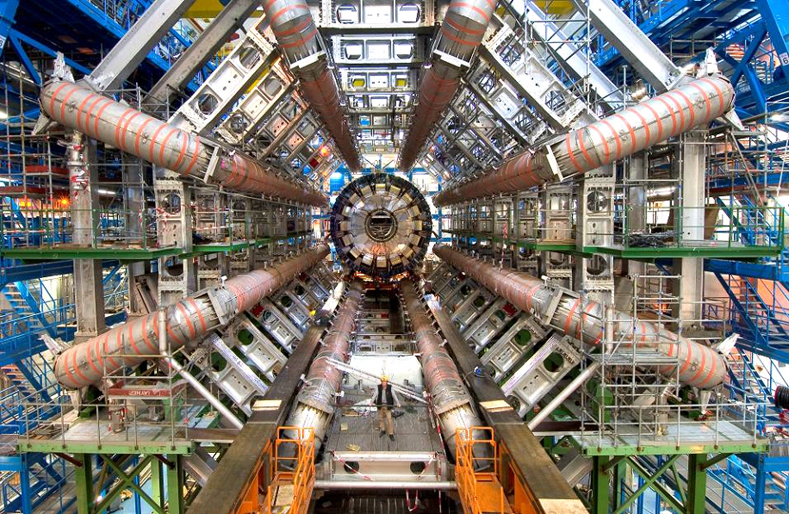

LHC rap

The Large Hadron Collider, the world's largest and highest-energy particle accelerator, promises to revolutionize our knowledge of the universe and advance our understanding of the most fundamental laws of nature.

But if quantum physics is not your strong suit, don't worry: you can still get an explanation of what the LHC is all about in rap format.

A 'sound' explanation, indeed!

17:47 Posted in Research tools | Tags: large hadron collider, rap, research tool

Feb 13, 2010

The Limits of Agency in Walking Humans

Neuropsychologia. 2010 Feb 6;

Authors: Kannape OA, Schwabe L, Tadi T, Blanke O

An important principle of human ethics is that individuals are not responsible for actions performed when unconscious. Recent research found that the generation of an action and the building of a conscious experience of that action (agency) are distinct processes and crucial mechanisms for self-consciousness. Yet, previous agency studies have focussed on actions of a finger or hand. Here, we investigate how agents consciously monitor actions of the entire body in space during locomotion. This was motivated by previous work revealing that (1) a fundamental aspect of self-consciousness concerns a single and coherent representation of the entire spatially situated body and (2) clinical instances of human behaviour without consciousness occur in rare neurological conditions such as sleepwalking or epileptic nocturnal wandering. Merging techniques from virtual reality, full-body tracking, and cognitive science of conscious action monitoring, we report experimental data about consciousness during locomotion in healthy participants. We find that agents consciously monitor the location of their entire body and its locomotion only with low precision and report that while precision remains low it can be systematically modulated in several experimental conditions. This shows that conscious action monitoring in locomoting agents can be studied in a fine-grained manner. We argue that the study of the mechanisms of agency for a person's full body may help to refine our scientific criteria of selfhood and discuss sleepwalking and related conditions as alterations in neural systems encoding motor awareness in walking humans.

13:50 Posted in Research tools | Tags: agency, ethics, walking, action, neuropsychology

Feb 04, 2010

MedlinePlus Now Available for Mobile Phones

From the press release:

The National Library of Medicine's Mobile MedlinePlus builds on the NLM's MedlinePlus Internet service, which provides authoritative consumer health information to over 10 million visitors per month. These visitors access MedlinePlus (http://medlineplus.gov) from throughout the United States as well as from many other countries, and use desktop computers, laptops and even mobile devices to get there.

Mobile MedlinePlus is available in English and Spanish (http://m.medlineplus.gov/spanish) and includes a subset of content from the full Web site. It includes summaries for over 800 diseases, wellness topics, the latest health news, an illustrated medical encyclopedia, and information on prescription and over-the-counter medications.

Mobile MedlinePlus can also help you when you're trying to choose an over-the-counter cold medicine at the drug store.

And if you're traveling abroad, you can use Mobile MedlinePlus to learn about safe drinking water.

15:07 Posted in Research tools | Tags: medline, mobile

Jan 09, 2010

Interface Fantasy - A Lacanian Cyborg Ontology

Cyberspace is first and foremost a mental space. Therefore we need to take a psychological approach to understand our experiences in it. In Interface Fantasy, André Nusselder uses the core psychoanalytic notion of fantasy to examine our relationship to computers and digital technology. Lacanian psychoanalysis considers fantasy to be an indispensable "screen" for our interaction with the outside world; Nusselder argues that, at the mental level, computer screens and other human-computer interfaces incorporate this function of fantasy: they mediate the real and the virtual.

Interface Fantasy illuminates our attachment to new media: why we love our devices; why we are fascinated by the images on their screens; and how it is possible that virtual images can provide physical pleasure. Nusselder puts such phenomena as avatars, role playing, cybersex, computer psychotherapy, and Internet addiction in the context of established psychoanalytic theory. The virtual identities we assume in virtual worlds, exemplified best by avatars consisting of both realistic and symbolic self-representations, illustrate the three orders that Lacan uses to analyze human reality: the imaginary, the symbolic, and the real.

Nusselder analyzes our most intimate involvement with information technology—the almost invisible, affective aspects of technology that have the greatest impact on our lives. Interface Fantasy lays the foundation for a new way of thinking that acknowledges the pivotal role of the screen in the current world of information. And it gives an intelligible overview of basic Lacanian principles (including fantasy, language, the virtual, the real, embodiment, and enjoyment) that shows their enormous relevance for understanding the current state of media technology.

Read more at MIT Press.

13:14 Posted in Research tools | Tags: cyborg, cyberspace, psychoanalysis, lacan, mit

Jan 07, 2010

Why $0.00 is the future of business

In this video, Chris Anderson (editor-in-chief of Wired) explains why giving your products away for free is a winning strategy. The "freemium" (free + premium) approach builds customers' expectations and their involvement in your work. Once you have gained customers' attention, you can generate revenue in other ways, e.g. by selling higher-quality products or offering better packages.

19:21 Posted in Research tools | Tags: chris anderson, business, freemium

Dec 12, 2009

A History of Game Controllers Diagram

17:21 Posted in Research tools | Tags: game controllers

Oct 29, 2009

Social Media counts

08:34 Posted in Research tools | Tags: social media, web 2.0