Feb 09, 2014

A high-fidelity virtual environment for the study of paranoia

Schizophr Res Treatment. 2013;2013:538185

Authors: Broome MR, Zányi E, Hamborg T, Selmanovic E, Czanner S, Birchwood M, Chalmers A, Singh SP

Abstract. Psychotic disorders carry social and economic costs for sufferers and society. Recent evidence highlights the risk posed by urban upbringing and social deprivation in the genesis of paranoia and psychosis. Evidence-based psychological interventions are often not offered because of a lack of therapists. Virtual reality (VR) environments have been used to treat mental health problems. VR may be a way of understanding the aetiological processes in psychosis and increasing psychotherapeutic resources for its treatment. We developed a high-fidelity virtual reality scenario of an urban street scene to test the hypothesis that virtual urban exposure is able to generate paranoia to a comparable or greater extent than scenarios using indoor scenes. Participants (n = 32) entered the VR scenario for four minutes, after which time their degree of paranoid ideation was assessed. We demonstrated that the virtual reality scenario was able to elicit paranoia in a nonclinical, healthy group and that an urban scene was more likely to lead to higher levels of paranoia than a virtual indoor environment. We suggest that this study offers evidence to support the role of exposure to factors in the urban environment in the genesis and maintenance of psychotic experiences and symptoms. The realistic high-fidelity street scene scenario may offer a useful tool for therapists.

22:25 Posted in Cybertherapy, Research tools, Virtual worlds

Bodily maps of emotions

Via KurzweilAI.net

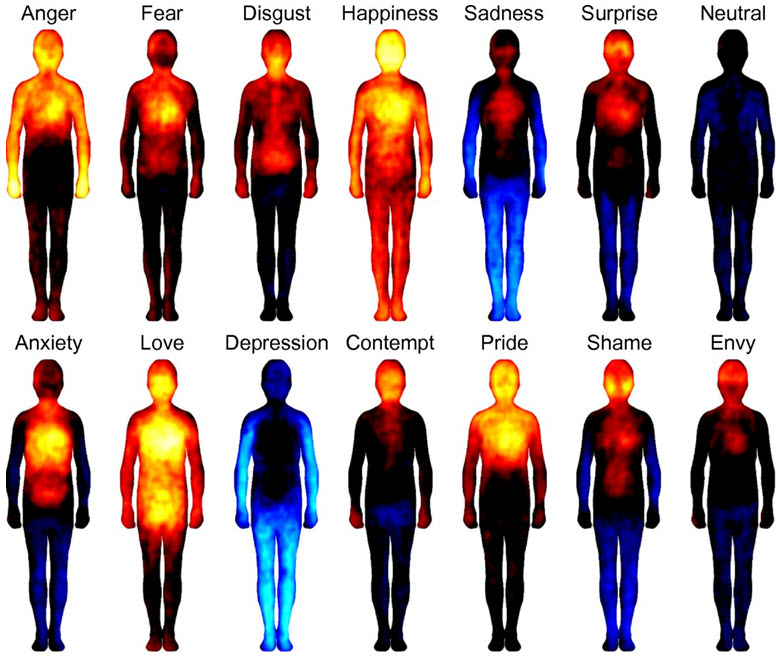

Bodily topography of basic (Upper) and nonbasic (Lower) emotions associated with words. The body maps show regions whose activation increased (warm colors) or decreased (cool colors) when feeling each emotion. (Credit: Lauri Nummenmaa et al./PNAS)

Researchers at Aalto University in Finland have compiled maps of emotional feelings associated with culturally universal bodily sensations, which could be at the core of emotional experience.

The researchers found that the most common emotions trigger strong bodily sensations, and the bodily maps of these sensations were topographically different for different emotions. The sensation patterns were, however, consistent across different West European and East Asian cultures, highlighting that emotions and their corresponding bodily sensation patterns have a biological basis.

The research was carried out online, and over 700 individuals from Finland, Sweden and Taiwan took part in the study. The researchers induced different emotional states in their Finnish and Taiwanese participants. Subsequently, the participants were shown pictures of human bodies on a computer and asked to color the bodily regions whose activity they felt increasing or decreasing.

“Unraveling the subjective bodily sensations associated with human emotions may help us to better understand mood disorders such as depression and anxiety, which are accompanied by altered emotional processing, autonomic nervous system activity, and somatosensation (body sensations),” the researchers said in an open-access paper in Proceedings of the National Academy of Sciences. “These topographical changes in emotion-triggered sensations in the body could provide a novel biomarker for emotional disorders.”

Abstract of PNAS paper

Emotions are often felt in the body, and somatosensory feedback has been proposed to trigger conscious emotional experiences. Here we reveal maps of bodily sensations associated with different emotions using a unique topographical self-report method. In five experiments, participants (n = 701) were shown two silhouettes of bodies alongside emotional words, stories, movies, or facial expressions. They were asked to color the bodily regions whose activity they felt increasing or decreasing while viewing each stimulus. Different emotions were consistently associated with statistically separable bodily sensation maps across experiments. These maps were concordant across West European and East Asian samples. Statistical classifiers distinguished emotion-specific activation maps accurately, confirming independence of topographies across emotions. We propose that emotions are represented in the somatosensory system as culturally universal categorical somatotopic maps. Perception of these emotion-triggered bodily changes may play a key role in generating consciously felt emotions.

21:57 Posted in Emotional computing, Research tools

Feb 02, 2014

Effect of Meditation on Cognitive Functions in Context of Aging and Neurodegenerative Diseases

Front Behav Neurosci. 2014;8:17

Authors: Marciniak R, Sheardova K, Cermáková P, Hudeček D, Sumec R, Hort J

Abstract. The effect of different meditation practices on various aspects of mental and physical health is receiving growing attention. The present paper reviews evidence on the effects of several meditation practices on cognitive functions in the context of aging and neurodegenerative diseases. The effect of meditation in this area is still poorly explored. A database search identified seven studies that explore the effect of meditation on attention, memory, executive functions, and other miscellaneous measures of cognition in samples of older people and people suffering from neurodegenerative diseases. Overall, the reviewed studies suggested a positive effect of meditation techniques, particularly in the area of attention, as well as memory, verbal fluency, and cognitive flexibility. These findings are discussed in the context of MRI studies suggesting structural correlates of the effects. Meditation can be a potentially suitable non-pharmacological intervention aimed at the prevention of cognitive decline in the elderly. However, the conclusions of these studies are limited by their methodological flaws and by differences between the various types of meditation techniques. Further research in this direction could help to verify the validity of the findings and clarify the problematic aspects.

19:53 Posted in Meditation & brain, Research tools

Dec 24, 2013

The Creative Link: Investigating the Relationship Between Social Network Indices, Creative Performance and Flow in Blended Teams

Andrea Gaggioli, Elvis Mazzoni, Luca Milani, Giuseppe Riva

This paper presents the findings of an exploratory study that investigated the relationship between indices of social network structure, flow and creative performance in students collaborating in a blended setting. Thirty undergraduate students enrolled in a Media Psychology course were assigned to five groups, which were tasked with designing a new technology-based psychological application. Team members collaborated over a twelve-week period using two main modalities: face-to-face meeting sessions in the classroom (once a week) and virtually using a groupware tool. Social network indicators of group interaction and presence indices were extracted from communication logs, whereas flow and product creativity were assessed through survey measures. Findings showed that specific social network indices (in particular those measuring decentralization and neighbor interaction) were positively related to flow experience. More broadly, results indicated that selected social network indicators can offer useful insight into the creative collaboration process. Theoretical and methodological implications of these results are drawn.
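
As a rough illustration of what a decentralization index extracted from communication logs can look like, here is a minimal Python sketch of Freeman's degree centralization. The abstract does not say which indices the authors computed, so the choice of index, the function name `degree_centralization`, and the toy six-member teams are all assumptions made for this example.

```python
def degree_centralization(nodes, edges):
    """Freeman degree centralization of an undirected network.

    Returns 1.0 when one member is the hub for everyone else (a star)
    and 0.0 when every member has the same number of contacts.
    Illustrative only: the study's actual indices are not specified.
    """
    neighbors = {node: set() for node in nodes}
    for a, b in edges:
        if a != b:  # ignore messages a member sends to themselves
            neighbors[a].add(b)
            neighbors[b].add(a)
    n = len(neighbors)
    if n < 3:
        raise ValueError("centralization needs at least three members")
    degrees = [len(contacts) for contacts in neighbors.values()]
    top = max(degrees)
    # Sum of gaps to the best-connected member, divided by the
    # largest possible value of that sum (reached by a star network).
    return sum(top - d for d in degrees) / ((n - 1) * (n - 2))


# Hypothetical six-member teams (who exchanged messages with whom).
TEAM = ["a", "b", "c", "d", "e", "f"]
STAR = [("a", m) for m in TEAM[1:]]  # everything goes through "a"
RING = [(TEAM[i], TEAM[(i + 1) % 6]) for i in range(6)]  # evenly spread

star_score = degree_centralization(TEAM, STAR)
ring_score = degree_centralization(TEAM, RING)
```

A lower score means interaction is spread more evenly across the team, which is the sense of "decentralization" used in the sketch.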

20:36 Posted in Creativity and computers, Research tools

Nov 23, 2013

Overwhelming technology disrupting life and causing stress, new study shows

A new study shows that over one third of people feel overwhelmed by technology today and are more likely to feel less satisfied with their life as a whole.

The study, conducted by the University of Cambridge and sponsored by BT, surveyed 1,269 people and included in-depth interviews with families in the UK. It also found that people who felt in control of their use of communications technology were more likely to be satisfied with life.

Read the full story

18:06 Posted in Research tools

Oct 31, 2013

Brain Decoding

Via IEET

Neuroscientists are starting to decipher what a person is seeing, remembering and even dreaming just by looking at their brain activity. They call it brain decoding.

In this Nature Video, we see three different uses of brain decoding, including a virtual reality experiment that could use brain activity to figure out whether someone has been to the scene of a crime.

23:57 Posted in Information visualization, Neurotechnology & neuroinformatics, Research tools, Virtual worlds

Mobile EEG and its potential to promote the theory and application of imagery-based motor rehabilitation

Int J Psychophysiol. 2013 Oct 18;

Authors: Kranczioch C, Zich C, Schierholz I, Sterr A

Abstract. Studying the brain in its natural state remains a major challenge for neuroscience. Solving this challenge would not only enable the refinement of cognitive theory, but also provide a better understanding of cognitive function in the type of complex and unpredictable situations that constitute daily life, and which are often disturbed in clinical populations. With mobile EEG, researchers now have access to a tool that can help address these issues. In this paper we present an overview of technical advancements in mobile EEG systems and associated analysis tools, and explore the benefits of this new technology. Using the example of motor imagery (MI) we will examine the translational potential of MI-based neurofeedback training for neurological rehabilitation and applied research.

23:50 Posted in Mental practice & mental simulation, Research tools, Wearable & mobile

Sep 10, 2013

BITalino: Do More!

BITalino is a low-cost toolkit that allows anyone from students to professional developers to create projects and applications with physiological sensors. Out of the box, BITalino already integrates easy-to-use software & hardware blocks with sensors for electrocardiography (ECG), electromyography (EMG), electrodermal activity (EDA), an accelerometer, & ambient light. Imagination is the limit; each individual block can be snapped off and combined to prototype anything you want. You can connect other sensors, including your own custom designs.

18:22 Posted in Physiological Computing, Research tools, Self-Tracking, Wearable & mobile

Aug 07, 2013

On Phenomenal Consciousness

A recent introductory talk by Frank C. Jackson on the problem that consciousness and qualia present to physicalism.

14:00 Posted in Research tools, Telepresence & virtual presence

Welcome to wonderland: the influence of the size and shape of a virtual hand on the perceived size and shape of virtual objects

PLoS One. 2013;8(7):e68594

Authors: Linkenauger SA, Leyrer M, Bülthoff HH, Mohler BJ

The notion of body-based scaling suggests that our body and its action capabilities are used to scale the spatial layout of the environment. Here we present four studies supporting this perspective by showing that the hand acts as a metric which individuals use to scale the apparent sizes of objects in the environment. However, to test this, one must be able to manipulate the size and/or dimensions of the perceiver's hand, which is difficult in the real world because hand dimensions cannot be altered. To overcome this limitation, we used virtual reality to manipulate the dimensions of participants' fully-tracked, virtual hands to investigate their influence on the perceived size and shape of virtual objects. In a series of experiments, using several measures, we show that individuals' estimations of the sizes of virtual objects differ depending on the size of their virtual hand in the direction consistent with the body-based scaling hypothesis. Additionally, we found that these effects were specific to participants' virtual hands rather than another avatar's hands or a salient familiar-sized object. While these studies provide support for a body-based approach to the scaling of the spatial layout, they also demonstrate the influence of virtual bodies on perception of virtual environments.

13:56 Posted in Research tools, Telepresence & virtual presence, Virtual worlds

What Color is My Arm? Changes in Skin Color of an Embodied Virtual Arm Modulates Pain Threshold

Front Hum Neurosci. 2013;7:438

Authors: Martini M, Perez-Marcos D, Sanchez-Vives MV

It has been demonstrated that visual inputs can modulate pain. However, the influence of skin color on pain perception is unknown. Red skin is associated with inflamed, hot and more sensitive skin, while blue is associated with cyanotic, cold skin. We aimed to test whether the color of the skin would alter the heat pain threshold. To this end, we used an immersive virtual environment where we induced embodiment of a virtual arm that was co-located with the real one and seen from a first-person perspective. Virtual reality allowed us to dynamically modify the color of the skin of the virtual arm. In order to test pain threshold, increasing ramps of heat stimulation applied on the participants' arm were delivered concomitantly with the gradual intensification of different colors on the embodied avatar's arm. We found that a reddened arm significantly decreased the pain threshold compared with normal and bluish skin. This effect was specific to red seen on the arm, while seeing red in a spot outside the arm did not decrease pain threshold. These results demonstrate an influence of skin color on pain perception. This top-down modulation of pain through visual input suggests a potential use of embodied virtual bodies for pain therapy.

13:49 Posted in Biofeedback & neurofeedback, Research tools, Telepresence & virtual presence, Virtual worlds

What Color Is Your Night Light? It May Affect Your Mood

When it comes to some of the health hazards of light at night, a new study suggests that the color of the light can make a big difference.

Read full story on Science Daily

13:46 Posted in Emotional computing, Future interfaces, Research tools

Detecting delay in visual feedback of an action as a monitor of self recognition

Exp Brain Res. 2012 Oct;222(4):389-97

Authors: Hoover AE, Harris LR

Abstract. How do we distinguish "self" from "other"? The correlation between willing an action and seeing it occur is an important cue. We exploited the fact that this correlation needs to occur within a restricted temporal window in order to obtain a quantitative assessment of when a body part is identified as "self". We measured the threshold and sensitivity (d') for detecting a delay between movements of the finger (of both the dominant and non-dominant hands) and visual feedback as seen from four visual perspectives (the natural view, and mirror-reversed and/or inverted views). Each trial consisted of one presentation with minimum delay and another with a delay of between 33 and 150 ms. Participants indicated which presentation contained the delayed view. We varied the amount of efference copy available for this task by comparing performances for discrete movements and continuous movements. Discrete movements are associated with a stronger efference copy. Sensitivity to detect asynchrony between visual and proprioceptive information was significantly higher when movements were viewed from a "plausible" self perspective compared with when the view was reversed or inverted. Further, we found differences in performance between dominant and non-dominant hand finger movements across the continuous and single movements. Performance varied with the viewpoint from which the visual feedback was presented and on the efferent component such that optimal performance was obtained when the presentation was in the normal natural orientation and clear efferent information was available. Variations in sensitivity to visual/non-visual temporal incongruence with the viewpoint in which a movement is seen may help determine the arrangement of the underlying visual representation of the body.
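
The sensitivity measure d' mentioned in the abstract can be illustrated with a short Python sketch. It assumes the standard two-interval forced-choice conversion, d' = √2 · z(proportion correct), which matches the task as described (pick the presentation that contained the delay) but is not confirmed by the abstract. The function name `d_prime_2afc` and the clamping rule for perfect scores are choices made for this example.

```python
from math import sqrt
from statistics import NormalDist


def d_prime_2afc(n_correct, n_trials):
    """Sensitivity (d') from a two-interval forced-choice block.

    Assumes the textbook conversion d' = sqrt(2) * z(proportion correct);
    the paper's exact procedure may differ.
    """
    if n_trials <= 0 or not 0 <= n_correct <= n_trials:
        raise ValueError("need 0 <= n_correct <= n_trials and n_trials > 0")
    proportion = n_correct / n_trials
    # Keep the proportion away from 0 and 1 so the z-score stays finite.
    margin = 0.5 / n_trials
    proportion = min(max(proportion, margin), 1.0 - margin)
    return sqrt(2) * NormalDist().inv_cdf(proportion)


# Hypothetical counts: correct choices out of 100 trials per delay.
chance_level = d_prime_2afc(50, 100)   # guessing gives zero sensitivity
moderate = d_prime_2afc(76, 100)       # close to d' = 1
high = d_prime_2afc(90, 100)
```

Under this convention, a participant choosing correctly on about 76% of trials has a d' close to 1, and a threshold can be read off as the delay at which d' (or proportion correct) crosses a chosen criterion.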

13:18 Posted in Research tools, Telepresence & virtual presence

Jul 23, 2013

A mobile data collection platform for mental health research

22:57 Posted in Pervasive computing, Research tools, Self-Tracking, Wearable & mobile

Illusory ownership of a virtual child body causes overestimation of object sizes and implicit attitude changes

Proc Natl Acad Sci USA. 2013 Jul 15;

Authors: Banakou D, Groten R, Slater M

Abstract. An illusory sensation of ownership over a surrogate limb or whole body can be induced through specific forms of multisensory stimulation, such as synchronous visuotactile tapping on the hidden real and visible rubber hand in the rubber hand illusion. Such methods have been used to induce ownership over a manikin and a virtual body that substitute the real body, as seen from first-person perspective, through a head-mounted display. However, the perceptual and behavioral consequences of such transformed body ownership have hardly been explored. In Exp. 1, immersive virtual reality was used to embody 30 adults as a 4-y-old child (condition C), and as an adult body scaled to the same height as the child (condition A), experienced from the first-person perspective, and with virtual and real body movements synchronized. The result was a strong body-ownership illusion equally for C and A. Moreover there was an overestimation of the sizes of objects compared with a nonembodied baseline, which was significantly greater for C compared with A. An implicit association test showed that C resulted in significantly faster reaction times for the classification of self with child-like compared with adult-like attributes. Exp. 2 with an additional 16 participants extinguished the ownership illusion by using visuomotor asynchrony, with all else equal. The size-estimation and implicit association test differences between C and A were also extinguished. We conclude that there are perceptual and probably behavioral correlates of body-ownership illusions that occur as a function of the type of body in which embodiment occurs.

22:28 Posted in Research tools, Telepresence & virtual presence, Virtual worlds

The Size of Electronic Consumer Devices Affects Our Behavior

Re-blogged from Textually.org

What are our devices doing to us? We already know they're snuffing our creativity - but new research suggests they're also stifling our drive. How so? Because fussing with them on average 58 minutes a day leads to bad posture, FastCompany reports.

The body posture inherent in operating everyday gadgets affects not only your back, but your demeanor, reports a new experimental study entitled iPosture: The Size of Electronic Consumer Devices Affects Our Behavior. It turns out that working on a relatively large machine (like a desktop computer) causes users to act more assertively than working on a small one (like an iPad). That poor posture, Harvard Business School researchers Maarten Bos and Amy Cuddy find, undermines our assertiveness.

Read more.

22:23 Posted in Research tools, Wearable & mobile

Jul 18, 2013

Advanced ‘artificial skin’ senses touch, humidity, and temperature

Artificial skin (credit: Technion)

Technion-Israel Institute of Technology scientists have discovered how to make a new kind of flexible sensor that one day could be integrated into “electronic skin” (e-skin), a covering for prosthetic limbs.

Read full story

18:14 Posted in Pervasive computing, Research tools, Wearable & mobile

US Army avatar role-play Experiment #3 now open for public registration

Military Open Simulator Enterprise Strategy (MOSES) is secure virtual world software designed to evaluate the ability of OpenSimulator to provide independent access to a persistent, virtual world. MOSES is a research project of the United States Army Simulation and Training Technology Center (STTC). STTC’s Virtual World Strategic Applications team uses OpenSimulator to add capability and flexibility to virtual training scenarios.

18:12 Posted in Research tools, Telepresence & virtual presence

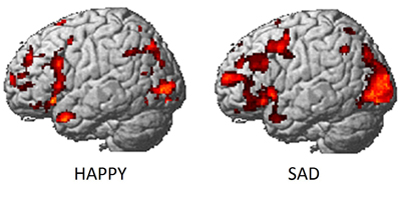

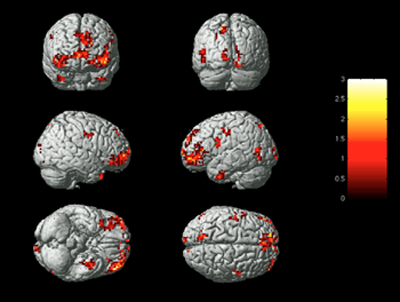

Identifying human emotions based on brain activity

For the first time, scientists at Carnegie Mellon University have identified which emotion a person is experiencing based on brain activity.

The study, published in the June 19 issue of PLOS ONE, combines functional magnetic resonance imaging (fMRI) and machine learning to measure brain signals to accurately read emotions in individuals. Led by researchers in CMU’s Dietrich College of Humanities and Social Sciences, the findings illustrate how the brain categorizes feelings, giving researchers the first reliable process to analyze emotions. Until now, research on emotions has long been stymied by the lack of reliable methods to evaluate them, mostly because people are often reluctant to honestly report their feelings. Further complicating matters is that many emotional responses may not be consciously experienced.

Identifying emotions based on neural activity builds on previous discoveries by CMU’s Marcel Just and Tom M. Mitchell, which used similar techniques to create a computational model that identifies individuals’ thoughts of concrete objects, often dubbed “mind reading.”

“This research introduces a new method with potential to identify emotions without relying on people’s ability to self-report,” said Karim Kassam, assistant professor of social and decision sciences and lead author of the study. “It could be used to assess an individual’s emotional response to almost any kind of stimulus, for example, a flag, a brand name or a political candidate.”

One challenge for the research team was to find a way to repeatedly and reliably evoke different emotional states from the participants. Traditional approaches, such as showing subjects emotion-inducing film clips, would likely have been unsuccessful because the impact of film clips diminishes with repeated display. The researchers solved the problem by recruiting actors from CMU’s School of Drama.

“Our big breakthrough was my colleague Karim Kassam’s idea of testing actors, who are experienced at cycling through emotional states. We were fortunate, in that respect, that CMU has a superb drama school,” said George Loewenstein, the Herbert A. Simon University Professor of Economics and Psychology.

For the study, 10 actors were scanned at CMU’s Scientific Imaging & Brain Research Center while viewing the words of nine emotions: anger, disgust, envy, fear, happiness, lust, pride, sadness and shame. While inside the fMRI scanner, the actors were instructed to enter each of these emotional states multiple times, in random order.

Another challenge was to ensure that the technique was measuring emotions per se, and not the act of trying to induce an emotion in oneself. To meet this challenge, a second phase of the study presented participants with neutral and disgusting photos that they had not seen before. The computer model, built by statistically analyzing the fMRI activation patterns gathered for 18 emotional words, had learned the emotion patterns from self-induced emotions. It was able to correctly identify the emotional content of photos being viewed using the brain activity of the viewers.

To identify emotions within the brain, the researchers first used the participants’ neural activation patterns in early scans to identify the emotions experienced by the same participants in later scans. The computer model achieved a rank accuracy of 0.84. Rank accuracy refers to the percentile rank of the correct emotion in an ordered list of the computer model guesses; random guessing would result in a rank accuracy of 0.50.
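
The rank accuracy measure described above can be made concrete with a small Python sketch. It assumes one common normalization, in which the score is 1.0 when the correct emotion is the model's first guess and 0.0 when it is the last; the study's exact formula is not given here. The names `rank_accuracy` and `mean_rank_accuracy` are illustrative.

```python
EMOTIONS = ["anger", "disgust", "envy", "fear", "happiness",
            "lust", "pride", "sadness", "shame"]


def rank_accuracy(ranked_guesses, correct):
    """Normalized rank of the correct label in an ordered guess list.

    ranked_guesses runs from most likely to least likely. The score is
    1.0 for a correct first guess and 0.0 if the correct label is last
    (an assumed normalization, not necessarily the study's).
    """
    n = len(ranked_guesses)
    if n < 2:
        raise ValueError("need at least two candidate labels")
    position = ranked_guesses.index(correct)  # 0 means top guess
    return 1.0 - position / (n - 1)


def mean_rank_accuracy(trials):
    """Average rank accuracy over (ranked_guesses, correct) pairs."""
    scores = [rank_accuracy(guesses, correct) for guesses, correct in trials]
    return sum(scores) / len(scores)


# A guesser with no information places the correct emotion at every
# position equally often, so its average score sits at the midpoint.
uninformed = mean_rank_accuracy([(EMOTIONS, e) for e in EMOTIONS])
```

With this normalization an uninformed guesser averages 0.50, which is consistent with the chance level quoted in the article.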

Next, the team took the machine learning analysis of the self-induced emotions to guess which emotion the subjects were experiencing when they were exposed to the disgusting photographs. The computer model achieved a rank accuracy of 0.91. With nine emotions to choose from, the model listed disgust as the most likely emotion 60 percent of the time and as one of its top two guesses 80 percent of the time.

Finally, they applied machine learning analysis of neural activation patterns from all but one of the participants to predict the emotions experienced by the hold-out participant. This answers an important question: If we took a new individual, put them in the scanner and exposed them to an emotional stimulus, how accurately could we identify their emotional reaction? Here, the model achieved a rank accuracy of 0.71, once again well above the chance guessing level of 0.50.
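
The hold-out procedure in this step is a leave-one-subject-out evaluation: train on everyone except one participant, then test on that participant. The Python sketch below shows the loop with a deliberately simple nearest-centroid classifier standing in for the study's model, which is not described in this article. The two-number "activation patterns" in `TOY_SCANS` are made-up toy values, not data from the study.

```python
def fit_centroids(examples):
    """Average the feature vectors of each label (nearest-centroid model)."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in vec]
            for label, vec in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest to the features."""
    def squared_distance(label):
        return sum((a - b) ** 2 for a, b in zip(features, centroids[label]))
    return min(centroids, key=squared_distance)


def leave_one_subject_out(data):
    """Map each subject to the accuracy obtained when that subject is
    held out and the model is trained on all other subjects."""
    results = {}
    for held_out, test_examples in data.items():
        training = [example
                    for subject, examples in data.items()
                    if subject != held_out
                    for example in examples]
        centroids = fit_centroids(training)
        hits = sum(predict(centroids, features) == label
                   for features, label in test_examples)
        results[held_out] = hits / len(test_examples)
    return results


# Toy, made-up data: subject -> list of (feature vector, emotion).
TOY_SCANS = {
    "s1": [([1.0, 0.1], "happiness"), ([0.0, 1.1], "disgust")],
    "s2": [([0.9, 0.0], "happiness"), ([0.2, 0.9], "disgust")],
    "s3": [([1.1, 0.2], "happiness"), ([0.1, 1.0], "disgust")],
}

per_subject_accuracy = leave_one_subject_out(TOY_SCANS)
```

Because the held-out participant never contributes to training, the resulting scores estimate how well a model would do on a new individual, which is the question the paragraph poses.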

“Despite manifest differences between people’s psychology, different people tend to neurally encode emotions in remarkably similar ways,” noted Amanda Markey, a graduate student in the Department of Social and Decision Sciences.

A surprising finding from the research was that almost equivalent accuracy levels could be achieved even when the computer model made use of activation patterns in only one of a number of different subsections of the human brain.

“This suggests that emotion signatures aren’t limited to specific brain regions, such as the amygdala, but produce characteristic patterns throughout a number of brain regions,” said Vladimir Cherkassky, senior research programmer in the Psychology Department.

The research team also found that while on average the model ranked the correct emotion highest among its guesses, it was best at identifying happiness and least accurate in identifying envy. It rarely confused positive and negative emotions, suggesting that these have distinct neural signatures. And, it was least likely to misidentify lust as any other emotion, suggesting that lust produces a pattern of neural activity that is distinct from all other emotional experiences.

Just, the D.O. Hebb University Professor of Psychology, director of the university’s Center for Cognitive Brain Imaging and leading neuroscientist, explained, “We found that three main organizing factors underpinned the emotion neural signatures, namely the positive or negative valence of the emotion, its intensity — mild or strong, and its sociality — involvement or non-involvement of another person. This is how emotions are organized in the brain.”

In the future, the researchers plan to apply this new identification method to a number of challenging problems in emotion research, including identifying emotions that individuals are actively attempting to suppress and multiple emotions experienced simultaneously, such as the combination of joy and envy one might experience upon hearing about a friend’s good fortune.

12:30 Posted in Emotional computing, Research tools

Mar 11, 2013

Is virtual reality always an effective stressors for exposure treatments? Some insights from a controlled trial

BMC psychiatry, 13(1) p. 52, 2013

Federica Pallavicini, Pietro Cipresso, Simona Raspelli, Alessandra Grassi, Silvia Serino, Cinzia Vigna, Stefano Triberti, Marco Villamira, Andrea Gaggioli, Giuseppe Riva

Abstract. Several research studies investigating the effectiveness of different treatments have demonstrated that exposure-based therapies are more suitable and effective than others for the treatment of anxiety disorders. Traditionally, exposure may be achieved in two manners: in vivo, with direct contact with the stimulus, or by imagery, in the person’s imagination. However, despite their effectiveness, both types of exposure present some limitations that have supported the use of Virtual Reality (VR). But is VR always an effective stressor? Are the technological breakdowns that may appear during such an experience a possible risk for its effectiveness? (...)

Full paper available here (open access)

14:11 Posted in Cybertherapy, Research tools, Virtual worlds