Oct 16, 2006

I/O Plant

The always interesting Mauro Cherubini's moleskin has a post about a tool for designing content that uses plants as an input-output interface. Dubbed I/O Plant, the system lets users connect actuators, sensors and database servers to living plants, making them part of an electric circuit or a network terminal.

22:55 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Oct 04, 2006

Affective communication in the metaverse

Via IEET

Have a look at this thought-provoking article by Russell Blackford on affective communication in mediated environments:

One of the main conclusions I’ve been coming to in my research on the moral issues surrounding emerging technologies is the danger that they will be used in ways that undermine affective communication between human beings - something on which our ability to bond into societies and show moment-by-moment sympathy for each other depends. Anthropological and neurological studies have increasingly confirmed that human beings have a repertoire of communication by facial expression, voice tone, and body language that is largely cross-cultural, and which surely evolved as we evolved as social animals.

The importance of this affective repertoire can be seen in the frequent complaints in internet forums that, “I misunderstood because I couldn’t hear your tone of voice or see the expression on your face.” The internet has evolved emoticons as a partial solution to the problem, but flame wars still break out over observations that would lead to nothing like such violent verbal responses if those involved were discussing the same matters face to face, or even on the telephone. I almost never encounter truly angry exchanges in real life, though I may be a bit sheltered, of course, but I see them on the internet all the time. Partly, it seems to be that people genuinely misunderstand where others are coming from with the restricted affective cues available. Partly, however, it seems that people are more prepared to lash out hurtfully in circumstances where they are not held in check by the angry or shocked looks and the raised voices they would encounter if they acted in the same way in real life.

This is one reason to be slightly wary of the internet. It’s not a reason to ban the internet, which produces all sorts of extraordinary utilitarian benefits. Indeed, even the internet’s constraint on affective communication may have advantages - it may free up shy people to say things that they would be too afraid to say in real life.

Read the full article

22:30 Posted in Future interfaces | Permalink | Comments (0) | Tags: affective computing

Sep 24, 2006

SmartRetina used as a navigation tool in Google Earth

Via Nastypixel

SmartRetina is a system that captures the user’s hand gestures and recognizes them as computer actions.

22:51 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Aug 04, 2006

Tablescape Plus: Upstanding Tiny Displays on Tabletop Displays

Placing physical objects on a tabletop display is common for intuitive tangible input. The overall goal of our project is to increase the possibilities of interactive physical objects. By utilizing the tabletop objects as projection screens as well as input equipment, we can change the appearance and role of each object easily. To achieve this goal, we propose a novel tabletop display system, "Tablescape Plus." Tablescape Plus can project separate images on the horizontal tabletop screen and on vertically placed objects simultaneously. No special electronic devices are installed on these objects. Instead, we attached a paper marker underneath these objects for vision-based recognition. Projected images change according to the angle, position and ID of each placed object. In addition, the displayed images are not occluded by users' hands since all equipment is installed inside the table.

Example application :: Tabletop Theater (pictured above): When you put a tiny display on a tabletop miniature park, an animated character appears and moves according to the display's position and direction. In addition, the user can change the characters' actions by changing the positional relationships between the displays.

17:21 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Aug 02, 2006

3D blog

Thanks to Giuseppe Riva (reporting from Siggraph 2006)

12:50 Posted in Future interfaces, Telepresence & virtual presence, Virtual worlds | Permalink | Comments (0)

Jul 29, 2006

E-finger

This new fingertip-mounted device uses miniature thin-film force sensors, a tri-axial accelerometer, and a motion tracker. A real-time, network-based, multi-rate data-acquisition system was developed using LabVIEW virtual instrumentation technology. Biomechanical models based on human-subject studies were applied to the sensing algorithm. A new 2D touch-based painting application (Touch Painter) was developed with a new portable, touch-based projection system (Touch Canvas). Also, a new 3D object-digitizing application (Tactile Tracer) was developed to determine object properties by dynamic tactile activities, such as rubbing, palpation, tapping, and nail-scratching.

20:31 Posted in Future interfaces | Permalink | Comments (0)

Jul 21, 2006

Tangible query interfaces

A new method for querying relational databases & live data streams through the manipulation of physical objects. Parameterized query fragments are embodied as physical tokens ("parameter wheels"). These tokens are manipulated, interpreted, & graphically augmented on a series of sliding racks, to which data visualizations adapt in real time.
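To make the idea of "parameterized query fragments" concrete, here is a minimal software-only sketch. It assumes each physical token contributes one range condition and that the tokens on a rack are combined with AND. The `ParameterWheel` class, the `listings` table and its columns are all hypothetical stand-ins, not part of the original project.

```python
import sqlite3

# Columns a wheel may constrain (hypothetical schema for illustration).
ALLOWED_COLUMNS = {"price", "rooms"}


class ParameterWheel:
    """Hypothetical stand-in for a physical token: one column plus a range."""

    def __init__(self, column, low, high):
        if column not in ALLOWED_COLUMNS:
            raise ValueError(f"unknown column: {column}")
        self.column, self.low, self.high = column, low, high

    def fragment(self):
        # The column name is whitelisted above; values are bound parameters.
        return f"{self.column} BETWEEN ? AND ?", [self.low, self.high]


def build_query(wheels):
    """Combine the fragments of every wheel on the rack into one query."""
    clauses, params = [], []
    for wheel in wheels:
        clause, values = wheel.fragment()
        clauses.append(clause)
        params.extend(values)
    where = " AND ".join(clauses) if clauses else "1=1"
    return f"SELECT name FROM listings WHERE {where} ORDER BY name", params


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE listings (name TEXT, price INTEGER, rooms INTEGER)")
conn.executemany(
    "INSERT INTO listings VALUES (?, ?, ?)",
    [("A", 100, 2), ("B", 250, 3), ("C", 400, 4)],
)

# Two wheels placed on the rack: a price range and a room-count range.
rack = [ParameterWheel("price", 50, 300), ParameterWheel("rooms", 3, 5)]
sql, params = build_query(rack)
rows = [row[0] for row in conn.execute(sql, params)]
```

Turning a wheel would correspond to changing `low` and `high` and re-running the query, which is roughly what lets a visualization follow the tokens in real time.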

See also the BumpTop desktop metaphor.

14:04 Posted in Future interfaces | Permalink | Comments (0) | Tags: tangible interfaces

Jul 18, 2006

"TV for the brain" patented by Sony

00:21 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Jul 05, 2006

Hearing with Your Eyes

Via Medgadget

Manabe Hiroyuki has developed a machine that could eventually allow you to control things in your environment simply by looking at them. From the abstract:

A headphone-type gaze detector for a full-time wearable interface is proposed. It uses a Kalman filter to analyze multiple channels of EOG signals measured at the locations of headphone cushions to estimate gaze direction. Evaluations show that the average estimation error is 4.4° (horizontal) and 8.3° (vertical), and that the drift is suppressed to the same level as in ordinary EOG. The method is especially robust against signal anomalies. Selecting a real object from among many surrounding ones is one possible application of this headphone gaze detector. Soon we'll be able to turn on the Barry Manilow and turn down the lights with a simple sexy stare.
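The abstract does not spell out the filter's state model, so what follows is only a generic sketch of the technique it names: a scalar Kalman filter with a random-walk state, smoothing a noisy single-channel signal. The noise variances, the starting values and the function name `kalman_1d` are assumptions chosen for illustration, not values from the paper, which works on multiple EOG channels.

```python
def kalman_1d(measurements, process_var=1e-3, meas_var=0.5, x0=0.0, p0=1.0):
    """Scalar Kalman filter with a random-walk state model (illustrative)."""
    x, p = x0, p0  # state estimate and its variance
    estimates = []
    for z in measurements:
        # Predict: the state is assumed to stay put, but uncertainty grows.
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


# A constant "gaze angle" of 10 degrees, observed 50 times.
estimates = kalman_1d([10.0] * 50)
```

With a constant input the estimate climbs steadily toward the measured value. With noisy input, the ratio of `process_var` to `meas_var` controls how quickly the filter follows new readings versus how strongly it smooths them.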

23:17 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

Jun 04, 2006

Interface and Society

Re-blogged from Networked Performance

Interface and Society: Deadline call for works: July 1 - see call; Public Private Interface workshop: June 10-13; Mobile troops workshop: September 13-16; Conference: November 10-11 2006; Exhibition opening and performance: November 10, 2006.

In our everyday life we constantly have to cope more or less successfully with interfaces. We use the mobile phone, the mp3 player, and our laptop, in order to gain access to the digital part of our life. In recent years this situation has led to the creation of new interdisciplinary subjects like "Interaction Design" or "Physical Computing".

We live between two worlds, our physical environment and the digital space. Technology and its digital space are our second nature and the interfaces are our points of access to this technosphere.

Since artists started working with technology they have been developing interfaces and modes of interaction. The interface itself became an artistic theme.

The project INTERFACE and SOCIETY investigates how artists deal with the transformation of our everyday life through technical interfaces. With the rapid technological development, a thorough critique of the interface in relation to society is necessary.

The role of the artist is thereby crucial. S/he has the freedom to deal with technologies and interfaces beyond functionality and usability. The project INTERFACE and SOCIETY is looking at this development with a special focus on the artistic contribution.

INTERFACE and SOCIETY is an umbrella for a range of activities throughout 2006 at Atelier Nord in Oslo.

18:33 Posted in Future interfaces | Permalink | Comments (0) | Tags: future interfaces

May 22, 2006

3rd International Conference on Enactive Interfaces

Via VRoot.org

The 3rd International Conference on Enactive Interfaces, promoted by the European Network of Excellence ENACTIVE INTERFACES, will be held in Montpellier (France) on November 20-21, 2006.

The aim of the conference is to encourage the emergence of a multidisciplinary research community in a new field of research and on a new generation of human-computer interfaces called Enactive Interfaces.

From the website:

Enactive Interfaces are inspired by a fundamental concept of “interaction” that has not been exploited by other approaches to the design of human-computer interface technologies. Mainly, interfaces have been designed to present information via symbols, or icons.

In the symbolic approach, information is stored as words, mathematical symbols or other symbolic systems, while in the iconic approach information is stored in the form of visual images, such as diagrams and illustrations.

ENACTIVE knowledge is information gained through perception-action interactions with the environment. Examples include information gained by grasping an object, by hefting a stone, or by walking around an obstacle that occludes our view. It is gained through intuitive movements, of which we often are not aware. Enactive knowledge is inherently multimodal, because motor actions alter the stimulation of multiple perceptual systems. Enactive knowledge is essential in tasks such as driving a car, dancing, playing a musical instrument, modelling objects from clay, performing sports, and so on.

Enactive knowledge is neither symbolic nor iconic. It is direct, in the sense that it is natural and intuitive, based on experience and the perceptual consequences of motor acts.

ENACTIVE / 06 will highlight convergences between the concept of Enaction and the sciences of complexity. Biological, cognitive, perceptual or technological systems are complex dynamical systems exhibiting (in)stability properties that are consequential for the agent-environment interaction. The conference will provide new insights, through the prism of ENACTIVE COMPLEXITY, about human interaction with multimodal interfaces.

23:15 Posted in Future interfaces | Permalink | Comments (0) | Tags: Positive Technology

Feb 14, 2006

Forearm-implanted chip to create a human-iPod network

Researchers at the Korea Advanced Institute of Science and Technology (KAIST) presented a chip that is implanted in a user’s forearm to function as an audio signal transmission wire that links to an iPod. Many of the presentations featured devices that conserved power, though this chip goes a step further, harnessing the human body’s natural conductive properties to create personal-area networks. It is not practical to wire together the numerous devices that people carry with them, and Bluetooth connections fall prey to interference, leading scientists to explore the application of the human body as a networking cable. The Korean scientists augmented an iPod nano with their wideband signaling chip. When a user kept his finger pressed to the device, it transmitted data at 2 Mbps, at a consumption rate lower than 10 microwatts. Researchers from the University of Utah also presented a chip that scans brainwave activity by wirelessly streaming data through monitors in the hopes of creating prosthetics that quadriplegics could operate with their brain waves, though both projects are still in the preliminary research stages.
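As a rough sanity check on those figures, the quoted data rate and power ceiling imply an energy cost per bit. The sketch below is simple arithmetic, assuming the 10-microwatt ceiling applies while the chip transmits at the full 2 Mbps. The function name is illustrative.

```python
def energy_per_bit_joules(power_watts, bitrate_bps):
    """Energy spent per transmitted bit: power divided by bit rate."""
    return power_watts / bitrate_bps


# "Lower than 10 microwatts" at 2 Mbps gives an upper bound per bit.
upper_bound = energy_per_bit_joules(10e-6, 2e6)
print(f"{upper_bound * 1e12:.1f} pJ/bit")  # prints "5.0 pJ/bit"
```

Under that assumption the chip would spend at most about 5 picojoules per bit.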

(…)

These chips are not something that will be included in one of Apple Computer CEO Steve Jobs’ Macworld keynotes anytime soon.

11:34 Posted in Future interfaces | Permalink | Comments (0) | Tags: Positive Technology

Feb 07, 2006

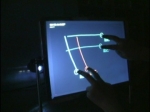

Multi-Touch Interaction Research

via WMMNA

Jefferson Y. Han and colleagues have developed multi-touch sensing technologies based on a technique called FTIR (frustrated total internal reflection), originally developed for fingerprint image acquisition. It acquires true touch information at high spatial and temporal resolutions, and is scalable to very large installations.

Multi-touch sensing allows a user to interact with a system with more than one finger at a time, as in chording and bi-manual operations. Such sensing devices are also able to accommodate multiple users simultaneously, which is especially useful for larger interaction scenarios such as interactive walls and tabletops.
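One way to picture how several simultaneous contacts can be told apart: assuming the sensor delivers a grayscale frame in which each touch shows up as a bright blob, a connected-component pass yields one centroid per finger. This is a minimal sketch under that assumption, not the project's actual pipeline. The function name `find_touches`, the threshold and the sample frame are illustrative.

```python
def find_touches(frame, threshold=128):
    """Return the (row, col) centroid of each connected bright blob."""
    rows, cols = len(frame), len(frame[0])
    seen = [[False] * cols for _ in range(rows)]
    touches = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or frame[r][c] < threshold:
                continue
            # Flood-fill one blob using 4-connectivity.
            stack, pixels = [(r, c)], []
            seen[r][c] = True
            while stack:
                y, x = stack.pop()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            cy = sum(p[0] for p in pixels) / len(pixels)
            cx = sum(p[1] for p in pixels) / len(pixels)
            touches.append((cy, cx))
    return touches


# Two separate contacts in a tiny synthetic frame.
frame = [
    [0, 0, 0, 0, 0, 0],
    [0, 200, 200, 0, 0, 0],
    [0, 200, 200, 0, 0, 0],
    [0, 0, 0, 0, 0, 255],
    [0, 0, 0, 0, 0, 255],
]
touches = find_touches(frame)
```

Each centroid can then be tracked from frame to frame, which is what makes chording and two-handed gestures distinguishable from a single moving contact.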

See MPEG-1 demo and visit the project's web site

12:44 Posted in Future interfaces | Permalink | Comments (0) | Tags: Positive Technology

Sep 15, 2005

iCare Haptic Interface for the blind

Via the Presence-L Listserv

(from the iCare project web site)

iCare Haptic Interface will allow individuals who are blind to explore objects using their hands. Their hand movements will be captured through the Datagloves and spatial features of the object will be captured through the video cameras. The system will find correlations between spatial features, hand movements and haptic sensations for a given object. In the test phase, when the object is detected by the camera, the system will inform the user of the presence of the object by generating characteristic haptic feedback. iCare Haptic Interface will be an interactive display where users can seek information from the system, manipulate virtual objects to actively explore them and recognize the objects.

More to explore

15:35 Posted in Future interfaces | Permalink | Comments (0) | Tags: Positive Technology, Future interfaces

Sep 05, 2005

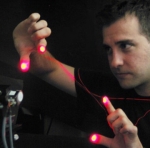

Laser-based tracking for HCI

Via FutureFeeder

Smart Laser Scanner is a high-resolution human interface that tracks a user's bare finger as it moves through 3D space. Developed by Alvaro Cassinelli, Stephane Perrin & Masatoshi Ishikawa, the system uses a laser, an array of movable micro-mirrors, a photodetector (an instrument that detects light), and special software written by the team to collect finger-motion data. The laser sends a beam of light to the array of micro-mirrors, which redirect the beam onto the fingertip. The photodetector senses light reflected off the finger and works with the software to adjust the micro-mirrors minutely and quickly so that they keep the laser light on the fingertip. According to Cassinelli and his co-workers, the system's components could eventually be integrated into portable devices.

More to explore

The Smart Laser Scanner website (demo videos available for download)

15:25 Posted in Future interfaces | Permalink | Comments (0) | Tags: Positive Technology, Future interfaces