Jun 22, 2010

New gesture-based system from MIT

Researchers at MIT have developed a new gesture-based system that combines a standard webcam, colored Lycra gloves, and software that includes a dataset of pictures. This simple and cheap system can translate hand gestures into a computer-generated 3D model of the hand in real time. Once the webcam has captured an image of the glove, the software matches it with the corresponding hand position stored in the visual dataset and returns that pose. This approach reduces computation time, as there is no need to calculate the relative positions of the fingers, palm, and back of the hand on the fly.
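The lookup step can be sketched as a nearest-neighbour search. This is a minimal illustration, not the MIT code: it assumes each stored image is a small, flattened list of pixel values paired with a hand pose, and it uses the sum of squared differences as an assumed similarity measure. The names `distance`, `lookup_pose` and the toy dataset are hypothetical.

```python
def distance(a, b):
    """Sum of squared differences between two flattened images of equal size."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def lookup_pose(query, dataset):
    """Return the hand pose stored with the dataset image closest to `query`.

    `dataset` is a list of (image, pose) pairs: each image is a flat list of
    pixel values, and each pose is whatever hand-model data was stored with it.
    The search is a brute-force scan; raises ValueError if `dataset` is empty.
    """
    _, best_pose = min(dataset, key=lambda item: distance(query, item[0]))
    return best_pose


# Toy 2 x 2 "glove images", flattened, each paired with a pose label.
dataset = [
    ([1, 1, 1, 1], "open hand"),
    ([0, 0, 1, 1], "fist"),
    ([1, 0, 0, 1], "pointing"),
]
print(lookup_pose([0, 0, 1, 0.9], dataset))  # prints "fist"
```

The point of the design is that the expensive work (rendering or photographing many hand poses) happens once, offline; at run time the system only compares the camera image against stored examples.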

The inexpensive gesture-based recognition system developed at MIT could have applications in games, industry and education. I also envisage a potential application in the field of motor rehabilitation.

Credit: Jason Dorfman/CSAIL

20:40 Posted in Future interfaces | Tags: gesture recognition, future interfaces, minority report

Mar 04, 2010

Skinput Turns Your Body Into a Touchscreen

Via New Scientist via Stefano Besana

Researchers at Carnegie Mellon University and Microsoft’s Redmond research lab have developed a working prototype of a system called Skinput that effectively turns your body surface into both screen and input device.

Skinput makes use of a microchip-sized pico projector embedded in an armband to beam an image onto a user’s forearm or hand. When the user taps a menu item or other control icon on the skin, an acoustic detector also in the armband analyzes the ultralow-frequency sound to determine which region of the display has been activated.

The technology behind Skinput is described in a paper that the group will present in April at the CHI (Computer-Human Interaction) conference in Atlanta.

Check out the video:

14:49 Posted in Future interfaces | Tags: future interfaces, skinput, human-computer confluence

Mar 01, 2010

Curious displays

Curious Displays from Julia Tsao on Vimeo.

18:34 | Tags: future interfaces, lcd, multitouch

Feb 04, 2010

BiDi Screen, 3D gesture interaction in thin screen device

Via Chris Jablonski's blog

Researchers at the Massachusetts Institute of Technology have created a working prototype of a bidirectional LCD (one that both captures and displays images) that allows a viewer to control on-screen objects without any peripheral controllers and without even touching the screen. In almost Minority Report fashion, interaction is possible with just a wave of the hand.

The BiDi Screen is inspired by emerging LCDs that use embedded optical sensors to detect multiple points of contact, and it exploits the spatial light modulation capability of LCDs to allow lensless imaging without interfering with display functionality. According to the MIT researchers, this technology could lead to a wide range of applications, such as in-air gesture control of everything from consumer electronics (CE) devices like mobile phones to flat-panel TVs.

BiDi Screen, 3D gesture interaction in thin screen device from Matt Hirsch on Vimeo.

13:03 Posted in Future interfaces | Tags: future interfaces, lcd, multitouch

Jun 26, 2009

Reactable

From the Reactable website:

The Reactable is a revolutionary new electronic musical instrument designed to create and perform the music of today and tomorrow. It combines state of the art technologies with a simple and intuitive design, which enables musicians to experiment with sound, change its structure, control its parameters and be creative in a direct and refreshing way, unlike anything you have ever known before.

The Reactable uses a so-called tangible interface, where the musician controls the system by manipulating tangible objects (pucks). The instrument is based on a translucent and luminous round table, and by putting these pucks on the Reactable surface, by turning them and connecting them to each other, performers can combine different elements like synthesizers, effects, sample loops or control elements in order to create a unique and flexible composition.

As soon as any puck is placed on the surface, it is illuminated and starts to interact with the other neighboring pucks, according to their positions and proximity. These interactions are visible on the table surface, which acts as a screen, giving instant feedback about what is currently going on in the Reactable and turning music into something visible and tangible.

Additionally, performers can also change the behavior of the objects by touching and interacting with the table surface, and because the Reactable technology is “multi-touch”, there is no limit to the number of fingers that can be used simultaneously. As a matter of fact, the Reactable was specially designed so that it could also be used by several performers at the same time, thus opening up a whole new universe of pedagogical, entertaining and creative possibilities with its collaborative and multi-user capabilities.

20:09 Posted in Creativity and computers, Future interfaces | Tags: creativity, music, future interfaces

Jun 23, 2009

Project NATAL: The gaming revolution has arrived

I am probably not the first to post about Microsoft's NATAL project, but who cares?

The fact is, I literally lack the words to express how deeply impressed I am by this new gaming technology.

I have no idea if or when this product will reach the shops, but after its launch it's hard to see how Microsoft will have any competitors left in the game industry.

Announced during Microsoft's annual E3 press conference, Project Natal is the culmination of several years of R&D by an Israeli start-up called 3DV Systems, which Microsoft recently acquired. Microsoft Xbox Senior Vice President Don Mattrick stated that Project Natal would be compatible with every Xbox 360, but the price remains top secret.

The technology (see the video below) allows users to control games, movies, and anything else on their Xbox system with their body alone, without touching any hardware.

If it's a real product and not just a marketing invention, it could also have important applications in the field of cybertherapy, in particular for neuro-motor rehabilitation. The advantages of this technology are quite clear: the patient does not need to wear anything, and motivational gaming scenarios of all kinds can be used.

17:25 Posted in Future interfaces | Tags: natural human-computer interaction, future interfaces

Apr 06, 2009

Violet Mirror

The company Violet (best known for the Nabaztag) has invented the "Violet Mirror", an RFID reader that can be connected to a PC. RFID tags can be attached to any object and scripted to trigger applications and multimedia content automatically or to communicate over the Internet.

This is a usage scenario described on the product's website:

"8:40 am – you’re getting ready to leave home. On your desk, next to your computer, a halo of light is quietly pulsating. You swiftly flash your car keys at this mysterious device. A voice speaks out: "today, rain 14°C". The voice continues: "you will get there in 15 minutes". Your computer screen displays an image from the webcam located along the route you’re planning to travel, while the voice reads out your horoscope for the day. At the same moment, your friends can see your social network profile update to "It’s 8:40, I’m leaving the house". At the office, your favourite colleague receives an email to say that you won’t be long. And finally, just as you walk through the door, your computer locks.

You personally "scripted" this morning’s scenario: you decided to give your car keys all these powers, because the time you pick them up signals the fact you’re soon going to leave the house.

What if you could obtain information, access services, communicate with the world, play or have fun just by showing things to a mirror, a Mir:ror which, as if by magic, could make all your everyday objects come alive, and connect them to the Internet’s endless wealth of possibilities?

Mir:ror is as simple to use as looking in the mirror - it gives access to information or triggers actions with disarming ease: simply place an object near to its surface. Mir:ror is a power conferred upon each of us to easily program the most ordinary of objects. The revolution of the Internet of Things suddenly becomes a simple, obvious, daily reality that’s within anyone’s reach."

Watch the video

19:11 Posted in Future interfaces, Wearable & mobile | Tags: internet of things, future interfaces, rfid

Apr 24, 2008

Human area network (HAN) technology

A new product by NTT, called “Firmo,” allows users to communicate with electronic devices by touching them. A card-sized transmitter carried in the user’s pocket transmits data across the surface of the human body. When the user touches a device, the electric field is converted back into a data signal that can be read by the device.

For now, a set of 5 card-sized transmitters and 1 receiver costs around 800,000 yen ($8,000), but NTT expects the price to come down once it begins mass production.


13:12 Posted in Future interfaces | Tags: future interfaces

Mar 14, 2008

Nerve-tapping neckband used in 'telepathic' chat

From NewScientist

A neckband that translates thought into speech by picking up nerve signals has been used to demonstrate a "voiceless" phone call for the first time.

With careful training a person can send nerve signals to their vocal cords without making a sound. These signals are picked up by the neckband and relayed wirelessly to a computer that converts them into words spoken by a computerised voice.

13:31 Posted in Future interfaces | Tags: future interfaces

Mar 09, 2008

Cyber Goggles: High-tech memory aid

From Pink Tentacle

Researchers at the University of Tokyo have created a smart video goggle system that records everything the wearer looks at, recognizes and assigns names to objects that appear in the video, and creates an easily searchable database of the recorded footage.

23:56 Posted in Future interfaces | Tags: future interfaces

Nov 04, 2007

Tactile Video Displays

Via Medgadget

Researchers at the National Institute of Standards and Technology (NIST) have developed a tactile graphic display to help visually impaired people perceive images.

From the NIST press release

ELIA Life Technology Inc. of New York, N.Y., licensed for commercialization both the tactile graphic display device and fingertip graphic reader developed by NIST researchers. The former, first introduced as a prototype in 2002, allows a person to feel a succession of images on a reusable surface by raising some 3,600 small pins (actuator points) into a pattern that can be locked in place, read by touch and then reset to display the next graphic in line. Each image (from scanned illustrations, Web pages, electronic books or other sources) is sent electronically to the reader, where special software determines how to create a matching tactile display. (For more information, see "NIST 'Pins' Down Imaging System for the Blind".)
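The press release does not describe how the software turns an image into a pin pattern, but one simple approach is easy to sketch: average the grayscale pixels under each pin and raise the pin where the area is dark. The square 60 x 60 layout (60 x 60 = 3,600), the averaging, the threshold of 128 and the name `image_to_pins` are all assumptions made for illustration.

```python
def image_to_pins(image, rows, cols, threshold=128):
    """Reduce a grayscale image to a rows x cols grid of raised/lowered pins.

    `image` is a list of pixel rows with values 0 (black) to 255 (white).
    Each pin covers one rectangular block of pixels and is raised (True)
    when the block's average brightness is below `threshold`, i.e. mostly dark.
    """
    height, width = len(image), len(image[0])
    if rows > height or cols > width:
        raise ValueError("pin grid cannot be finer than the image")
    pins = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        pin_row = []
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            pin_row.append(sum(block) / len(block) < threshold)
        pins.append(pin_row)
    return pins


# A 120 x 120 image whose left half is black, mapped onto an assumed 60 x 60 grid.
image = [[0] * 60 + [255] * 60 for _ in range(120)]
pins = image_to_pins(image, 60, 60)
print(sum(len(row) for row in pins))  # 3600 pins in total
print(sum(sum(row) for row in pins))  # 1800 raised pins (the black half)
```

A real converter would likely do more (edge detection, simplifying cluttered pictures), but block averaging shows the basic trade-off: each pin stands for a whole patch of pixels, so fine detail is lost.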

An array of about 100 small, very closely spaced (1/10 of a millimeter apart) actuator points set against a user's fingertip is the key to the more recently created "tactile graphic display for localized sensory stimulation." To "view" a computer graphic with this technology, a blind or visually impaired person moves the device-tipped finger across a surface like a computer mouse to scan an image in computer memory. The computer sends a signal to the display device and moves the actuators against the skin to "translate" the pattern, replicating the sensation of the finger moving over the pattern being displayed. With further development, the technology could possibly be used to make fingertip tactile graphics practical for virtual reality systems or give a detailed sense of touch to robotic control (teleoperation) and space suit gloves.
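The fingertip reader only needs the small part of the image that lies under the finger at any moment. A minimal sketch, assuming the image has already been reduced to raised/lowered points and that the roughly 100 actuators form a 10 x 10 square (the press release gives neither detail); `fingertip_window` is a hypothetical name.

```python
def fingertip_window(image, x, y, size=10):
    """Return the size x size patch of a binary image with top-left corner (x, y).

    `image` is a list of rows of booleans (True = raised point). Cells that fall
    outside the image are returned as False, so the actuators simply lie flat
    when the finger slides past the edge of the picture.
    """
    height, width = len(image), len(image[0])
    return [
        [image[row][col] if 0 <= row < height and 0 <= col < width else False
         for col in range(x, x + size)]
        for row in range(y, y + size)
    ]


# A 20 x 20 picture containing a single vertical line in column 12.
image = [[col == 12 for col in range(20)] for _ in range(20)]
window = fingertip_window(image, 5, 0)  # finger covers columns 5-14
print([col for col, up in enumerate(window[0]) if up])  # [7]: line felt under the finger
print(any(any(r) for r in fingertip_window(image, 15, 15)))  # False: nothing to feel
```

As the finger moves like a mouse, (x, y) changes and the window is recomputed and sent to the actuators, which is what produces the sensation of the finger moving over the pattern.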

Press release: NIST Licenses Systems to Help the Blind 'See' Images

18:40 Posted in Future interfaces | Tags: future interfaces

Oct 27, 2007

LevelHead

Re-blogged from Networked Performance

levelHead is an interactive game that uses a cube, a webcam, and pattern recognition. When the cube is rotated or tilted in front of the camera the user will be able to see ‘inside’ the cube and guide a small avatar through six different rooms.

Pattern recognition has already been used in several other projects, but this is a new way of using it, and a new way of thinking of the technology. The idea behind the game itself is rather simple. When the cube is tilted the avatar moves in the corresponding direction. The goal of the game is to guide him through a maze of rooms connected by doors, and lead him to the outside world.
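The control rule described above (the avatar moves in the direction the cube is tilted) can be sketched as a small mapping function. This is an illustration only, not levelHead's code: it assumes the pattern-recognition stage already reports the cube's pitch and roll in degrees, and the 10-degree dead zone, the axis conventions and `tilt_to_move` are all hypothetical.

```python
def tilt_to_move(pitch, roll, dead_zone=10.0):
    """Map the cube's tilt (in degrees) to an avatar step (dx, dy).

    Assumed conventions: positive roll tilts the cube to the right, positive
    pitch tilts it away from the player (dy = +1 means "forward"). Tilts
    smaller than `dead_zone` on both axes are ignored so that an unsteady
    hand does not move the avatar, and only the dominant axis is used, so
    the avatar moves in one of four directions through the rooms.
    """
    if max(abs(pitch), abs(roll)) < dead_zone:
        return (0, 0)
    if abs(roll) >= abs(pitch):
        return (1, 0) if roll > 0 else (-1, 0)
    return (0, 1) if pitch > 0 else (0, -1)


print(tilt_to_move(3, -4))    # (0, 0): within the dead zone
print(tilt_to_move(5, 20))    # (1, 0): tilted right
print(tilt_to_move(-30, 12))  # (0, -1): tilted towards the player
```

The dead zone matters for a hand-held camera game: without it, the small wobble of a cube held in the air would keep nudging the avatar.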

According to the creator, Julian Oliver, the game is currently in development, but will be released as open-source soon.

Check out the explanatory video.

15:25 Posted in Augmented/mixed reality | Tags: future interfaces

Oct 12, 2007

The Hands-on Computer

Perceptive Pixel’s Interactive Media Wall (aka the Multi-Touch Wall) won the 2007 Breakthrough Award, the annual prize given by Popular Mechanics magazine to "cutting-edge projects and ideas leading to a better world". Its Minority Report-style interface supports functions such as navigating, locating, and manipulating information, all handled through multi-touch gestures.

22:10 Posted in Future interfaces | Tags: future interfaces

Sep 08, 2007

3D motion capture using a webcam

14:46 Posted in Future interfaces | Tags: future interfaces

Jul 29, 2007

Lomak (Light Operated Mouse And Keyboard) Gets 2007 IDEA Gold

Lomak International Limited was awarded the Gold Prize in the Computer Equipment category of IDSA's 2007 International Design Excellence Awards (IDEA).

The company explains its technology:

Lomak (light operated mouse and keyboard) is designed for people who have difficulty with, or are unable to use, a standard computer keyboard and mouse. A hand or head pointer controls a beam of light that highlights and then confirms the key or mouse functions on the keyboard. Because each key must be confirmed, only the correct selection is entered, which reduces errors and increases input speed. In addition to speed and accuracy, Lomak offers a number of advantages over other access methods, including:

- versatility and ease of use and training (people can be up and running with it almost immediately)

- it requires no calibration and can operate in any ambient conditions

- it does not require software (i.e. no dedicated computers are required for users with disabilities; conversely, users can log into their own PCs without assistance)

- it does not require any screen area (no on-screen keyboard or mouse menu is required)

- it can be used with any application (e.g. proprietary software such as accounting/payroll applications and other business software)

Lomak is ideal for a work environment as it is easy to install, use and manage. It requires little or no technical support because, from a systems perspective, it is recognised simply as a USB keyboard and mouse.

20:49 Posted in Enactive interfaces | Tags: future interfaces

Jul 17, 2007

Plumi — free software video sharing platform

EngageMedia are very excited to announce the release of Plumi, a free software video sharing platform. Plumi enables you to create a sophisticated video sharing and community site out-of-the-box. In a web landscape where almost all video sharing sites keep their distribution platform under lock and key, Plumi is one contribution to creating a truly democratic media.

00:23 | Tags: future interfaces

Gesture-control for regular TV

Australian engineers Prashan Premaratne and Quang Nguyen have designed a novel gesture-based controller for regular TVs.

The controller's built-in camera can recognise seven simple hand gestures and work with up to eight different gadgets around the home. According to the designers:

“Crucially for anyone with small children, pets or gesticulating family members, the software can distinguish between real commands and unintentional gestures“.

Premaratne and Nguyen predict that the system will be available on the market within three years.

00:13 Posted in Future interfaces | Tags: future interfaces

Delicate Boundaries

Re-blogged from We Make Money Not Art

Delicate Boundaries, a work by Chris Sugrue, uses human touch to dissolve the barrier of the computer screen. Using the body as a means of exchange, the system explores the subtle boundaries that exist between foreign systems and what it might mean to cross them. Lifelike digital animations swarm out of their virtual confinement onto the skin of a hand or arm when it makes contact with a computer screen, creating an imaginative world where our bodies are a landscape for digital life to explore.

Video.

00:03 Posted in Cyberart, Future interfaces | Tags: future interfaces

Jul 06, 2007

Tangible 3D display

Via NewScientist

Japan's NTT has unveiled a system that makes three-dimensional images solid enough to grasp. The device creates the illusion of depth perception and provides haptic feedback.

I believe that, among its potential applications, this technology could be effectively used in the rehabilitation of the upper limb following stroke.

From NewScientist

NTT engineer Shiro Ozawa, who developed the system, envisages various applications. "You would be able to take the hand, or gently pat the head, of your beloved grandchild who lives far away from you," he says.

Anthony Steed, who works with haptic systems at University College London, UK, says the real-time image capture made possible by the Tangible 3D system is especially interesting.

His own research group has performed related work. But this involved connecting a haptic device to a 2D display on which the user's hands are projected, rather than allowing users to manipulate virtual objects directly. He thinks the NTT system could make the interaction feel much more real, although the haptic glove could hinder this.

Steed's group wants to use such technology to make valuable museum exhibits touchable and is working with the British Museum in London towards this goal.

16:30 Posted in Enactive interfaces | Tags: future interfaces, haptics

Jul 03, 2007

Pentagon to Merge Next-Gen Binoculars With Soldiers' Brains

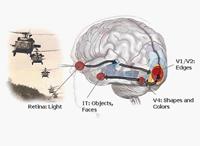

From Pentagon to Merge Next-Gen Binoculars With Soldiers' Brains by Sharon Weinberger, Wired:

"U.S. Special Forces may soon have a strange and powerful new weapon in their arsenal: a pair of high-tech binoculars 10 times more powerful than anything available today, augmented by an alerting system that literally taps the wearer's prefrontal cortex to warn of furtive threats detected by the soldier's subconscious.

In a new effort dubbed "Luke's Binoculars" -- after the high-tech binoculars Luke Skywalker uses in Star Wars -- the Defense Advanced Research Projects Agency is setting out to create its own version of this science-fiction hardware. And while the Pentagon's R&D arm often focuses on technologies 20 years out, this new effort is dramatically different -- Darpa says it expects to have prototypes in the hands of soldiers in three years...

The most far-reaching component of the binocs has nothing to do with the optics: it's Darpa's aspirations to integrate EEG electrodes that monitor the wearer's neural signals, cueing soldiers to recognize targets faster than the unaided brain could on its own. The idea is that EEG can spot "neural signatures" for target detection before the conscious mind becomes aware of a potential threat or target."

22:09 Posted in Brain-computer interface | Tags: future interfaces, brain-computer interface