Aug 04, 2006

Whole-brain connectivity diagrams

Via BrainTechSci

In a previous post I covered BrainMaps, the interactive zoomable high-resolution diagram for whole-brain connectivity. Now the project provides a powerful new feature, the interactive visualization of brain connectivity in 3D.

From the download page:

Welcome to nodes3D, a 3D graph visualization program written by Issac Trotts in consultation with Shawn Mikula, in the labs of Edward G. Jones. On startup, nodes3d will download a graph of gross neuroanatomical connectivity from the MySQL database at brainmaps.org. Future versions will probably support loading of graphs from files or other databases.

12:50 Posted in Information visualization, Research tools | Permalink | Comments (0) | Tags: neurotechnology

TransVision06

The TransVision 2006 annual conference of the World Transhumanist Association, Helsinki 17-19 August 2006, organized by the WTA and the Finnish Transhumanist Association, will be open to remote visitors in the virtual reality world of Second Life.

TransVision06, August 17-19: University of Helsinki, Finland, Europe

This year the theme of the conference will be Emerging Technologies of Human Enhancement. We'll be looking at recent and ongoing technological developments and discussing associated ethical and philosophical questions.

We will hold a mixed reality event between the Helsinki conference hall and Second Life:

The Second Life event will take place in the uvvy island in SL. To attend, use the Second Life map in the client, look for region uvvy and teleport.

The real time video stream from Helsinki will be displayed in SL.

Some presentations will also be displayed in SL in a PowerPoint-like format.

Some PCs running the SL client will be available in the conference hall in Helsinki for SL users (at least 3 plus myself (gp)).

Some SL users will be present in both worlds simultaneously and can relay questions from the remote SL audience to the speakers.

The SL event will be projected on a screen in Helsinki.

There will be a single text chat space for Second Life users and IRC chat users. So those whose computers are too slow or for some other reason don't want to use Second Life can use a combination of IRC and the video feed to interact with the conference participants in both Helsinki and Second Life.

See also:

http://community.livejournal.com/transvision06/

http://community.livejournal.com/transvision06/1346.html

12:35 Posted in Brain training & cognitive enhancement, Positive Technology events | Permalink | Comments (0) | Tags: Transhumanism

Aug 03, 2006

Virtual bots teach each other

From New Scientist Tech

"Robots that teach one another new words through interaction with their surroundings have been demonstrated by UK researchers. The robots, created by Angelo Cangelosi and colleagues at Plymouth University, UK, currently exist only as computer simulations. But the researchers say their novel method of communication could someday help real-life robots cooperate when faced with a new challenge. They could also help linguists understand how human languages develop, they say..."

Continue to read the full article on New Scientist

Watch the video

21:11 Posted in AI & robotics, Virtual worlds | Permalink | Comments (0) | Tags: artificial intelligence

Empathic painting

Via New Scientist

A team of computer scientists (Maria Shugrina and Margrit Betke from Boston University, US, and John Collomosse from Bath University, UK) has created a video display system (the "Empathic Painting") that tracks the expressions of onlookers and metamorphoses to match their emotions:

"For example, if the viewer looks angry it will apply a red hue and blurring. If, on the other hand, they have a cheerful expression, it will increase the brightness and colour of the image." (New Scientist)

See a video of the empathic painting (3.4 MB .avi, required codec).

20:50 Posted in Cyberart, Emotional computing | Permalink | Comments (0) | Tags: emotional computing

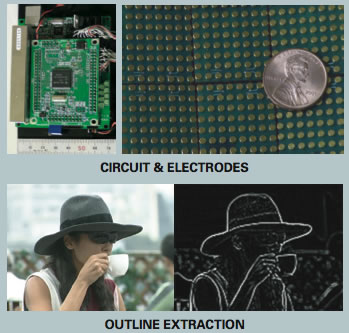

Forehead Retina System

Via Technovelgy

Researchers at the University of Tokyo, in collaboration with EyePlusPlus, Inc., have developed a system that uses tactile sensations in the forehead as a "substitute retina" to enable visually impaired people to "see" the outlines of objects.

The Forehead Retina System (FRS) uses a special headband to selectively stimulate different mechanoreceptors in forehead skin to allow visually impaired people to perceive a picture of what lies in front of them.

The system has been presented at the Emerging Technology session of Siggraph 2006. From the conference website:

Although electrical stimulation has a long scientific history, it has not been used for practical purposes because stable stimulation was difficult to achieve, and mechanical stimulation provided much better spatial resolution. This project shows that stable control of electrical stimulation is possible by using very short pulses, and anodic stimulation can provide spatial resolution equal to mechanical stimulation. This result was presented at SIGGRAPH 2003 as SmartTouch, a visual-to-tactile conversion system for skin on the finger using electrical stimulation. Because the Forehead Retina System shows that stable stimulation on the "forehead skin" is also quite possible, it leads to a new application of electrical stimulation.

Goals

According to a 2003 World Health Organization report, up to 45 million people are totally blind, while 135 million live with low vision. However, there is no standard visual substitution system that can be conveniently used in daily life. The goal of this project is to provide a cheap, lightweight, yet fully functional system that provides rich, dynamic 2D information to the blind.

Innovations

The big difference between finger skin and forehead skin is the thickness. While finger skin has a thick horny layer of more than 0.7 mm, the forehead skin is much thinner (less than 0.02 mm). Therefore, if the electrode directly contacts the skin, concentrated electrical potential causes unnecessary nerve stimulation and severe pain. The Forehead Retina System uses an ionic gel sheet with the same thickness and electrical impedance as the horny layer of finger skin. When the gel sheet is placed between the electrode and the forehead, stable sensation is assured.

Compared to current portable electronic devices, most of the proposed "portable" welfare devices are not portable in reality. They are bulky and heavy, and they have a limited operation time. By using electrical stimulation, the Forehead Retina System partially solves these problems. Nevertheless, driving 512 electrodes with more than 300 volts is quite a difficult task, and it normally requires a large circuit space. The system uses a high-voltage switching integrated circuit, which is normally used to drive micro-machines such as digital micro-mirror devices. With fast switching, current pulses are allocated to appropriate electrodes. This approach enables a very large volume of stimulation and system portability at the same time.

Vision

This project demonstrates only the static capabilities of the display. Other topics pertaining to the display include tactile recognition using head motion (active touch), gray-scale expression using frequency fluctuation, and change in sensation after long-time use due to sweat. These will be the subjects of future studies.

The current system uses only basic image processing to convert the visual image to tactile sensation. Further image processing, including motion analysis, pattern and color recognition, and depth perception is likely to become necessary in the near future.

It is important to note that although many useful algorithms have been proposed in the field of computer vision, they can not always be used unconditionally. The processing must be performed in real time, and the system must be small and efficient for portability. Therefore, rather than incorporating elegant but expensive algorithms, the combination of bare minimum image processing and training is practical. The system is now being tested with the visually impaired to determine the optimum balance that good human interfaces have always achieved.
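To make "basic image processing" a little more concrete, here is a minimal Python sketch of one way a camera frame could be reduced to an on/off outline pattern for 512 electrodes. It is not the researchers' algorithm: the 16 x 32 grid layout, the `edge_pattern` name and the threshold value are all assumptions made for illustration.

```python
def edge_pattern(image, threshold=50):
    """Mark cells where brightness changes sharply towards the right or below.

    `image` is a grid (list of rows) of grayscale values, already downsampled
    to one value per electrode. The threshold is an arbitrary, assumed value.
    """
    rows, cols = len(image), len(image[0])
    pattern = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Clamp at the borders so edge cells compare with themselves.
            right = image[r][min(c + 1, cols - 1)]
            below = image[min(r + 1, rows - 1)][c]
            gradient = abs(right - image[r][c]) + abs(below - image[r][c])
            pattern[r][c] = gradient >= threshold
    return pattern


# Hypothetical 16 x 32 frame (512 cells): a bright rectangle on a dark background.
frame = [[200 if 4 <= r < 12 and 8 <= c < 24 else 20 for c in range(32)]
         for r in range(16)]
pattern = edge_pattern(frame)
active = sum(cell for row in pattern for cell in row)
print(f"{active} of {len(pattern) * len(pattern[0])} electrodes would be active")
```

Only cells on the border of the rectangle are switched on, which matches the idea of conveying outlines rather than filled shapes. A real system would also have to deal with noise, lighting and the real-time constraints described above.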

18:14 Posted in Brain training & cognitive enhancement | Permalink | Comments (0)

Sixth International Conference on Epigenetic Robotics

Via Human Technology

Sixth International Conference on Epigenetic Robotics: Modeling Cognitive Development in Robotic Systems

Dates: 20-22 September 2006

Location: Hopital de la Salpêtrière, Paris, France

From the conference website:

In the past 5 years, the Epigenetic Robotics annual workshop has established itself as a unique place where original research combining developmental sciences, neuroscience, biology, and cognitive robotics and artificial intelligence is being presented.

Epigenetic systems, either natural or artificial, share a prolonged developmental process through which varied and complex cognitive and perceptual structures emerge as a result of the interaction of an embodied system with a physical and social environment.

Epigenetic robotics includes the two-fold goal of understanding biological systems by the interdisciplinary integration between social and engineering sciences and, simultaneously, that of enabling robots and artificial systems to develop skills for any particular environment instead of programming them for solving particular goals for the environment in which they happen to reside.

Psychological theory and empirical evidence is being used to inform epigenetic robotic models, and these models should be used as theoretical tools to make experimental predictions in developmental psychology.

17:55 Posted in AI & robotics, Positive Technology events | Permalink | Comments (0)

Aug 02, 2006

3D blog

Thanks to Giuseppe Riva (reporting from Siggraph 2006)

12:50 Posted in Future interfaces, Telepresence & virtual presence, Virtual worlds | Permalink | Comments (0)

The Huggable

Via Siggraph2006 Emerging Technology website

The Huggable is a robotic pet developed by MIT researchers for therapy applications in children's hospitals and nursing homes, where pets are not always available. The robotic teddy has a full-body sensate skin and smooth, quiet voice-coil actuators, and is able to relate to people through touch. Further features include "temperature, electric field, and force sensors which it uses to sense the interactions that people have with it. This information is then processed for its affective content, such as, for example, whether the Huggable is being petted, tickled, or patted; the bear then responds appropriately".

The Huggable has been unveiled at the Siggraph2006 conference in Boston. From the conference website:

Enhanced Life

Over the past few years, the Robotic Life Group at the MIT Media Lab has been developing "sensitive skin" and novel actuator technologies in addition to our artificial-intelligence research. The Huggable combines these technologies in a portable robotic platform that is specifically designed to leave the lab and move to healthcare applications.

Goals

The ultimate goal of this project is to evaluate the Huggable's usefulness as a therapy for those who have limited or no access to companion-animal therapy. In collaboration with nurses, doctors, and staff, the technology will soon be applied in pilot studies at hospitals and nursing homes. By combining Huggable's data-collection capabilities with its sensing and behavior, it may be possible to determine early onset of a person's behavior change or detect the onset of depression. The Huggable may also improve day-to-day life for those who may spend many hours in a nursing home alone staring out a window, and, like companion-animal therapy, it could increase their interaction with other people in the facility.

Innovations

The core technical innovation is the "sensitive skin" technology, which consists of temperature, electric-field, and force sensors all over the surface of the robot. Unlike other robotic applications where the sense of touch is concerned with manipulation or obstacle avoidance, the sense of touch in the Huggable is used to determine the affective content of the tactile interaction. The Huggable's algorithms can distinguish petting, tickling, scratching, slapping, and poking, among other types of tactile interactions. By combining the sense of touch with other sensors, the Huggable detects where a person is in relation to itself and responds with relational touch behaviors such as nuzzling.

Most robotic companions use geared DC motors, which are noisy and easily damaged. The Huggable uses custom voice-coil actuators, which provide soft, quiet, and smooth motion. Most importantly, if the Huggable encounters a person when it tries to move, there is no risk of injury to the person.

Another core technical innovation is the Huggable's combination of 802.11g networking with a robotic companion. This allows the Huggable to be much more than a fun, interactive robot. It can send live video and data about the person's interactions to the nursing staff. In this mode, the Huggable functions as a team member working with the nursing home or hospital staff and the patient or resident to promote the Huggable owner's overall health.

Vision

As poorly staffed nursing homes and hospitals become larger and more overcrowded, new methods must be invented to improve the daily lives of patients or residents. The Huggable is one of these technological innovations. Its ability to gather information and share it with the nursing staff can detect problems and report emergencies. The information can also be stored for later analysis by, for example, researchers who are studying pet therapy.

12:05 Posted in AI & robotics, Emotional computing, Serious games | Permalink | Comments (0) | Tags: cybertherapy, robotics, artificial intelligence

Photos transformed into 3D model

With Photosynth you can:

- Walk or fly through a scene to see photos from any angle.

- Seamlessly zoom in or out of a photograph whether it’s megapixels or gigapixels in size.

- See where pictures were taken in relation to one another.

- Find similar photos to the one you’re currently viewing.

- Explore a custom tour.

- Send a collection to a friend.

11:55 Posted in Virtual worlds | Permalink | Comments (0)

Home-based sensors could detect early signs of dementia

Via Medgadget

According to a study conducted by Oregon Health & Science University researchers, continuous, unobtrusive monitoring of in-home activity may be a reliable way of assessing changes in motor behaviors that may occur along with changes in memory. The study was presented last week at the 10th International Conference on Alzheimer's Disease and Related Disorders in Madrid.

From the university's press release:

"To see a trend over time, you need multiple measures - good days and bad days - and it often takes years to see that trend in a clinic setting," said Tamara Hayes, Ph.D., assistant professor of biomedical engineering at OHSU's OGI School of Science & Engineering, and the study's lead author. She noted that most clinic visits by elders are spaced over months or even years, and their memory and motor skills performances are evaluated in a small number of tests completed in a limited amount of time.

"In contrast, we're looking continuously at elders' activity in their own homes," Hayes said. "Since we're measuring a person's activity many times over a short period, we can understand their normal variability and identify trends. If there's a change over a period, you can see it quickly."

Mild cognitive impairment is a known risk factor for dementia, a neurological disorder most commonly caused by Alzheimer's disease. Changes in clinical measures of activity, such as walking and finger-tapping speeds, have been shown to occur at about the same time as memory changes leading to dementia. By detecting subtle activity changes over time in the natural setting of an elder's home, researchers hope to more effectively identify when elders are starting to have trouble.
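As a rough illustration of what "understanding normal variability and identifying trends" could mean in practice, the following Python sketch summarizes a series of daily walking-speed readings. It is not the OHSU method: the function names, the 30-day baseline window and the made-up readings are all assumptions.

```python
from statistics import mean, stdev


def linear_trend(values):
    """Least-squares slope of the values against their day index."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = mean(values)
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def summarize(daily_speeds, baseline_days=30):
    """Describe a person's normal variability and their longer-term trend."""
    baseline = daily_speeds[:baseline_days]
    return {
        "baseline_mean": mean(baseline),
        "baseline_sd": stdev(baseline),
        "slope_per_day": linear_trend(daily_speeds),
    }


# Hypothetical data: 90 days of walking speed (cm/s) with good days, bad days
# and a slow decline of 0.05 cm/s per day built in.
speeds = [60 + (2 if day % 2 else -2) - 0.05 * day for day in range(90)]
summary = summarize(speeds)
print(summary)
```

With many readings per person, the day-to-day scatter (the baseline standard deviation) can be separated from a slow drift (the slope), which is the advantage Hayes describes over a handful of clinic visits.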

11:40 Posted in Cybertherapy, Persuasive technology, Pervasive computing | Permalink | Comments (0)

Animation can be outlet for victimized children, a tool for research

Animation is a proven vehicle for biting comedy, a la "The Simpsons" and "South Park." But some of the same qualities that make it work for comedy make it valuable, too, as an outlet for victimized children and for a new research method that tests the empathy of teachers who may deal with them, says Sharon Tettegah, a professor of curriculum and instruction at the University of Illinois at Urbana-Champaign.

Tettegah believes so strongly in the value of animation – specifically “animated narrative vignette simulations” – that she sought out a computer science professor at Illinois, Brian Bailey, to help develop her concept for a child-friendly program for producing them.

The program that resulted, called Clover, gives children, as well as adults, a tool for making and sharing their own vignettes about their personal and sometimes painful stories.

According to Tettegah, the program is the only one she is aware of that allows the user to write the narrative, script the dialogue, storyboard the graphics and add voice and animation, all within one application. Those four major aspects of producing a vignette gave rise to the name “Clover,” the plant considered to bring good luck in its four-leaf form.

A paper about Clover, written by Bailey, Tettegah and graduate student Terry Bradley, has been published in the July issue of the journal Interacting With Computers.

In other research, Tettegah has used animations as a tool for gauging the empathy of teachers and others who might deal with children and their stories of victimization. One study with college education majors, or teachers-in-training, showed only one in 10 expressing a high degree of empathy for the victim, she said.

A paper about that study has been accepted by the journal Contemporary Educational Psychology (CEP), with publication slated for later this year. The co-author of the study is Carolyn Anderson, a professor of educational psychology at Illinois.

11:30 Posted in Cybertherapy, Serious games | Permalink | Comments (0) | Tags: serious gaming

EJEL special issue on Communities of Practice and e-learning

In the issue of the Electronic Journal of E-Learning to be published in the summer of 2006, there will be a section focusing on Communities of Practice. This special section will examine, from a theoretical and practical perspective, how the concepts of Communities of Practice and e-learning can be combined.

Possible topics include:

- Communities of Practice for e-learning professionals;

- Establishing Communities of Practice to support e-learners

- Communities of Practice and informal e-learners;

- Technologies supporting Communities of Practice and e-learning

The Electronic Journal of e-Learning provides perspectives on topics relevant to the study, implementation and management of e-Learning initiatives.

The journal contributes to the development of both theory and practice in the field of e-Learning. The journal accepts academically robust papers, topical articles and case studies that contribute to the area of research in e-Learning.

10:33 Posted in Creativity and computers | Permalink | Comments (0) | Tags: collaborative computing

Urban Tapestries

Urban Tapestries aims to enable people to become authors of the environment around them – Mass Observation for the 21st Century. Like the founders of Mass Observation in the 1930s, we are interested in creating opportunities for an "anthropology of ourselves" – adopting and adapting new and emerging technologies for creating and sharing everyday knowledge and experience; building up organic, collective memories that trace and embellish different kinds of relationships across places, time and communities.

It is part of an ongoing research programme of experiments with local groups and communities called Social Tapestries.

10:23 Posted in Information visualization, Telepresence & virtual presence, Wearable & mobile | Permalink | Comments (0) | Tags: locative media

ECGBL 2007: The European Conference on Games Based Learning

University of Paisley, Scotland, UK 25-26 October 2007

Over the last ten years, the way in which education and training is delivered has changed considerably with the advent of new technologies. One such new technology that holds considerable promise for helping to engage learners is Games-Based Learning (GBL).

The Conference offers an opportunity for scholars and practitioners interested in the issues related to GBL to share their thinking and research findings. The conference examines the question “Can Games-Based Learning Enhance Learning?” and seeks high-quality papers that address this question. Papers can cover various issues and aspects of GBL in education and training: technology and implementation issues associated with the development of GBL; use of mobile and MMOGs for learning; pedagogical issues associated with GBL; social and ethical issues in GBL; GBL best cases and practices, and other related aspects. We are particularly interested in empirical research that addresses whether GBL enhances learning. This Conference provides a forum for discussion, collaboration and intellectual exchange for all those interested in any of these fields of research or practice.

Important dates:

Abstract submission deadline: 17 May 2007

Notification of abstract acceptance: 24 May 2007

Full paper due for review: 5 July 2007

Notification of paper acceptance: 16 August 2007

Final paper due (with any changes): 6 September 2007

A full call for papers, online submission and registration forms and all other details are available on the conference website.

10:15 Posted in Creativity and computers, Positive Technology events, Virtual worlds | Permalink | Comments (0) | Tags: serious gaming

Aug 01, 2006

Drinking Games

Via Medgadget

Miles Cox, professor of the psychology of addictive behaviors at the University of Wales, is experimenting with a computer-based approach to get alcoholics to ignore the potent cues that trigger their craving. The study has been covered by MIT Technology Review:

Just as these responses can be conditioned, they can also be de-conditioned, reasons Cox. His computer program helps abusers deal with the sight of alcohol, since it's often the first cue they experience in daily life. The program presents a series of pictures, beginning with an alcohol bottle inside a thick, colored frame. As fast as they can, users must identify the color of the frame. As users get faster, the test gets harder: the frame around the bottles becomes thinner. Finally, an alcohol bottle appears next to a soda bottle, both inside colored frames. Users must identify the color of the circle around the soda. The tasks teach users to "ignore the alcohol bottle" in increasingly difficult situations, says Cox.

Such tests have long been used to study attention phenomena in alcohol abusers, but they have never been used for therapy, says Cox. His group adapted the test for this new purpose by adding elements of traditional therapy. Before the tests, users set goals on how quickly they want to react; a counselor makes sure the goals are achievable. After each session, users see how well they did. The positive feedback boosts users' motivation and mood, Cox says.
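The article does not say how the program decides when to thin the frame, so the Python sketch below is only one plausible rule, written to show the general idea of a task that gets harder as the user gets faster. The function names, pixel sizes and the 800 ms goal are hypothetical.

```python
def next_frame_width(width_px, correct, reaction_ms, goal_ms,
                     min_px=1, step_px=2):
    """Thin the frame after a correct answer that meets the user's goal.

    Assumed rule: a wrong or slow answer leaves the difficulty unchanged.
    """
    if correct and reaction_ms <= goal_ms:
        return max(min_px, width_px - step_px)
    return width_px


def run_session(trials, start_px=12, goal_ms=800):
    """Replay (correct, reaction_ms) trials and track the frame width shown."""
    width = start_px
    shown = []
    for correct, reaction_ms in trials:
        shown.append(width)
        width = next_frame_width(width, correct, reaction_ms, goal_ms)
    return shown, width


# Hypothetical session: two fast correct answers, one slow, one wrong.
trials = [(True, 950), (True, 780), (False, 600), (True, 700), (True, 650)]
shown, final_width = run_session(trials)
print("frame widths shown:", shown, "next width:", final_width)
```

The list of widths shown per trial doubles as the kind of end-of-session feedback the article mentions, since it records how far the user progressed.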

Find more on the ESRC study page

17:20 Posted in Cybertherapy, Serious games | Permalink | Comments (0) | Tags: serious gaming, cybertherapy

PTJ sitegraph

16:08 Posted in Information visualization | Permalink | Comments (0) | Tags: complex networks

Computer's schizophrenia diagnosis inspired by the brain

Via New Scientist

University of California at San Francisco researchers may have created a computerized diagnostic tool that uses MRI-based technology to determine whether someone has schizophrenia. From New Scientist:

"Raymond Deicken at the University of California at San Francisco and colleagues have been studying the amino acid N-acetylaspartate (NAA). They found that levels of NAA in the thalamus region of the brain are lower in people with schizophrenia than in those without.

To find out whether software could diagnose the condition from NAA levels, the team used a technique based on magnetic resonance imaging to measure NAA levels at a number of points within the thalamus of 18 people, half of whom had been diagnosed with schizophrenia. Antony Browne of the University of Surrey, UK, then analysed these measurements using an artificial neural network, a program that processes information by learning to recognise patterns in large amounts of data in a similar way to neurons in the brain.

Browne trained his network on the measurements of 17 of the volunteers to teach it which of the data sets corresponded to schizophrenia and which did not. He then asked the program to diagnose the status of the remaining volunteer, based on their NAA measurements. He ran the experiment 18 times, each time withholding a different person's measurements. The program diagnosed the patients with 100 per cent accuracy."
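The procedure described here (train on 17 people, test on the one held out, repeat 18 times) is known as leave-one-out cross-validation. The Python sketch below shows the loop itself under loud assumptions: it uses a simple nearest-centroid classifier rather than Browne's neural network, and the "NAA measurements" are synthetic numbers deliberately generated to be easy to separate, so the accuracy it prints says nothing about the real study.

```python
import random


def nearest_centroid_predict(train, sample):
    """Predict the label whose class mean is closest to the sample."""
    centroids = {}
    for label in {lbl for _, lbl in train}:
        rows = [x for x, lbl in train if lbl == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def dist2(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))

    return min(centroids, key=lambda lbl: dist2(centroids[lbl], sample))


def leave_one_out_accuracy(data):
    """Hold out each subject in turn, train on the rest, test on the held-out one."""
    correct = 0
    for i, (features, label) in enumerate(data):
        train = data[:i] + data[i + 1:]
        if nearest_centroid_predict(train, features) == label:
            correct += 1
    return correct / len(data)


def make_synthetic_data(seed=0):
    """18 made-up subjects, 9 per group, 4 made-up measurement points each."""
    rng = random.Random(seed)
    data = []
    for label, level in (("control", 1.0), ("patient", 0.0)):
        for _ in range(9):
            data.append(([rng.gauss(level, 0.05) for _ in range(4)], label))
    return data


data = make_synthetic_data()
accuracy = leave_one_out_accuracy(data)
print(f"leave-one-out accuracy on synthetic data: {accuracy:.2f}")
```

The key property is that the held-out subject never influences the model that classifies them, which is why the method suits very small samples such as the 18 volunteers here. With so few subjects, though, a single misclassification moves the estimate by more than five percentage points.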

11:50 Posted in AI & robotics, Cybertherapy | Permalink | Comments (0)

Freq2

From Networked Performance (via Pixelsumo)

Freq2, by Squidsoup, uses your whole body to control the precise nature of a sound - a form of musical instrument. The mechanism used is to trace the outline of a person's shadow, using a webcam, and transform this line into an audible sound. Any sound can be described as a waveform - essentially a line - and so these lines can be derived from one's shadow. What you see is literally what you hear, as the drawn wave is immediately audible as a realtime dynamic drone.

Freq2 adds to this experience by adding a temporal component to the mix; a sonic composition in which to frame the instrument. The visuals, an abstract 3-dimensional landscape, extrude in realtime into the distance, leaving a trail of the interactions that have occurred. This `memory' of what has gone before is reflected in the sounds, with long loops echoing past interactions. The sounds, all generated in realtime from the live waveform, have also been built into more of a compositional soundscape, with the waveform being played at a range of pitches and with a rhythmic component.
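The idea that an outline can be heard as a waveform is easy to sketch. The Python below is not Squidsoup's code: it assumes the webcam tracing has already produced one height value per column (a hypothetical `outline` list), rescales it to a single waveform cycle, and loops that cycle at a chosen pitch.

```python
def outline_to_wavetable(outline):
    """Rescale a traced outline (one height per column) to one cycle in [-1, 1]."""
    lo, hi = min(outline), max(outline)
    if hi == lo:
        return [0.0] * len(outline)  # a flat line is silence
    mid = (hi + lo) / 2
    half = (hi - lo) / 2
    return [(h - mid) / half for h in outline]


def render(wavetable, frequency_hz, duration_s, sample_rate=44100):
    """Loop the single-cycle wavetable at a given pitch, interpolating linearly."""
    n = len(wavetable)
    step = frequency_hz * n / sample_rate  # table positions advanced per sample
    phase = 0.0
    samples = []
    for _ in range(int(duration_s * sample_rate)):
        i = int(phase)
        frac = phase - i
        a = wavetable[i]
        b = wavetable[(i + 1) % n]
        samples.append(a + frac * (b - a))
        phase = (phase + step) % n
    return samples


# Hypothetical outline: a crude triangular silhouette sampled at 8 columns.
outline = [0, 2, 4, 2, 0, -2, -4, -2]
wavetable = outline_to_wavetable(outline)

# The same shape played at two pitches, as a simple two-voice drone.
voices = [render(wavetable, freq, 0.5) for freq in (110.0, 220.0)]
print(len(voices[0]), "samples per voice")
```

Changing the outline changes the timbre while the playback frequency sets the pitch, which is one way to read "what you see is literally what you hear". Writing the samples to a sound device or file is left out to keep the sketch self-contained.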

Watch video

11:39 Posted in Cyberart | Permalink | Comments (0) | Tags: cyberart

Oribotics

Via textually.org

Oribotics - by Matthew Gardiner - is the fusion of origami and technology, specifically 'bot' technology, such as robots, or intelligent computer agents known as bots. The system was designed to create an intimate connection between the audience and the bots; a cross between gardening, messaging a friend, and commanding a robot. It was developed during an Australian Network for Artists and Technology (ANAT) artists lab, built with Processing as an authoring tool, and connected to a mobile phone via a USB cable.

"Ori-botics is a joining of two complex fields of study. Ori comes from the Japanese verb Oru, literally meaning 'to fold'. Origami, the Japanese word for paper folding, comes from the same root. Oribotics is origami that is controlled by robot technology; paper that will fold and unfold on command. An Oribot by definition is a folding bot. By this definition, anything that uses robotics/botics and folding together is an oribot. This includes some industrial applications already in existence, such as Miura's folds taken to space, and also includes my two latest works, Orimattic and Oribotics."

11:38 Posted in AI & robotics, Cyberart | Permalink | Comments (0) | Tags: cyberart