Jul 18, 2006

Workshop on Emotion in HCI - London, UK

From Usability News

The topic of emotion in Human-Computer Interaction is of increasing interest to the HCI community. Since Rosalind Picard's foundational publications on affective computing, research in this field has gained significant momentum.

Emotion research is grounded largely in psychology yet spans numerous other disciplines. The challenge for such an interdisciplinary area is to develop the common vocabulary and research framework that a mature discipline requires. Advanced, serious work in this field increasingly needs a rigorous footing: theoretical fundamentals for HCI-related emotion research, an understanding of the function of emotions in HCI, attention to ethical and legal issues, and an account of the practical implications and consequences for the HCI community.

The first workshop on emotion in HCI held in Edinburgh last year brought an interdisciplinary group of practitioners and researchers together for a lively exchange of ideas, discussion of common problems, and identification of domains to explore.

This year's workshop will build on last year's success, focusing on discussion and joint work on selected topics. Participants will further develop the themes of the first workshop across as wide a range of applications as possible, such as internet applications, ambient intelligence, office work, control rooms, mobile computing, virtual reality, presence, and home applications.

You are cordially invited to become part of this interdisciplinary forum. This will be a very practical workshop with the participants working together to find new insights, views, ideas and solutions. We therefore invite contributions which will enrich the discussions by their innovative content, fundamental nature, or new perspective. We also encourage demos of products or prototypes related to the topic.

Topics addressed by the workshop are:

- How do applications currently make use of emotions, and how could this be improved?

- What makes applications that support affective interactions successful?

- How do we know if affective interactions are successful, and how can we measure this success?

- What value might affective applications, affective systems, and affective interaction have?

- What requirements does HCI place on sensing technologies?

- What technology is currently available for sensing affective states?

- How reliable is sensing technology?

- Are there reliable and replicable processes to include emotion in HCI design projects?

- What opportunities and risks are there in designing affective applications?

- What are the relationships between emotion, affect, personality, and engagement, and what do they mean for interactive systems design?

To become part of this discussion please submit an extended abstract of your ideas or demo description. Case studies describing current applications or prototypes are strongly encouraged, as well as presentations of products or prototypes that you have developed.

The abstract should be limited to about 800 words. Accepted contributions will be published on the workshop's homepage, with the option of extending them to short papers of 4 pages. A special journal issue on the results of the workshop is also planned.

Please note that registration for the HCI conference is required in order to take part in the workshop (at least for the day of the workshop). The early bird registration deadline is 21st July.

Dates:

27 June - position paper deadline

11 July - notification of acceptance

21 July - early registration deadline

12 September - workshop

01:14 Posted in Emotional computing | Tags: emotional computing

Jul 08, 2006

Emotionally aware computer

According to The Herald, Cambridge professor Peter Robinson has developed a prototype of an “emotionally aware computer” that uses a camera to capture images of the user’s face, identifies facial expressions, and infers the user’s mood.

From the report:

“Imagine a computer that could pick the right emotional moment to sell you something,” says Peter Robinson, of Cambridge University. “Imagine a future where websites and mobile phones could read our mind and react to our moods.”

It sounds like Orwellian fiction but this week, Robinson, a professor of computer technology, unveiled a prototype for just such a “mind-reading” machine. The first emotionally aware computer is on trial at the Royal Society Festival of Science in London…Once the software is perfected, Robinson believes it will revolutionise marketing. Cameras will be on computer monitors in internet cafes and behind telescreens in bars and waiting rooms. Computers will process our image and respond with adverts that connect to how we’re feeling.

20:43 Posted in Emotional computing | Tags: emotional computing

Jul 05, 2006

Panasonic Emotions Testing Line

Re-blogged from Networked Performance

The Panasonic Emotions Testing Line, by "nomadic" development designer JIŘÍ ČERNICKÝ, is a conceptual SD audio prosthesis that addresses emotional deficits in society.

The device is a personal substitute container for the emotions of users who are unable, or unwilling, to experience life through their own emotional perceptions. It looks like a Walkman-style player with headphones, but instead of playing sound inside the listener's head, it plays it outward. The device mediates the user's emotional communication with the world around him or her: it is not designed for personal listening only, and it is equipped with a tiny amplifier.

The SD Audio Player's memory card holds a large collection of recordings of authentic human emotions. For instance, if the user finds himself in a situation where he has to argue with someone but does not want to get into a confrontation and waste his own emotions, he looks up the entry on his SD Audio Player that represents an appropriate emotional response and applies it.

The SD Audio Player can also record and thus appropriate other people's emotions: sniveling, peevishness, sobbing, moaning, crying, gradual emotional collapse, breakdown, yelling by a beaten person, the state of mind between laughter and crying, the hysterical family argument from Fellini's film Amarcord, pubescent giggling, comforting and fondling of a baby, a feeling of well-being, enthusiastic effusions, wearing somebody out, cuddling, soothing, etc. Such recordings, including those from movies, can be further edited and modified on a computer. In this way, the user can appropriate the emotions that are conveyed by celebrities and other prominent individuals.

23:35 Posted in Emotional computing | Tags: emotional computing

Jul 03, 2006

Wish progress bar

The "wish progress bar" is a tool for tracking long-term goals or wishes by creating an emotional link to the passing of time. The progress bar runs for 18 years, with each band representing 1 year, serving as a gentle reminder that requires very little attention. Users make a wish, set the time by turning the top cap, and then leave the bar to do its thing.
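The wish progress bar is a physical object, but the arithmetic behind its display is simple: with one band per year, the number of filled bands is the number of whole years since the wish was set, capped at 18. A minimal sketch, assuming the start date is known; the function names are hypothetical and not from the designer:

```python
from datetime import date

BANDS = 18  # the bar runs for 18 years, one band per year

def whole_years(start: date, today: date) -> int:
    """Count whole calendar years from start to today (negative if today is earlier)."""
    years = today.year - start.year
    if (today.month, today.day) < (start.month, start.day):
        years -= 1  # this year's anniversary has not arrived yet
    return years

def filled_bands(start: date, today: date, bands: int = BANDS) -> int:
    """Number of completed yearly bands, clamped to the range 0..bands."""
    return max(0, min(whole_years(start, today), bands))

print(filled_bands(date(2006, 7, 3), date(2015, 7, 2)))  # 8: ninth anniversary not yet reached
```

Counting calendar anniversaries rather than dividing elapsed days by 365 avoids leap-year drift, so a band fills exactly on each anniversary of the wish.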

23:56 Posted in Emotional computing

Jun 06, 2006

Blushing Light

Re-blogged from Mocoloco

The Blushing Light, designed by Nadine Jarvis and Jayne Potter, blushes in response to the emotional pitch of a mobile phone call. During a call, the lamp is activated by the electromagnetic field (EMF) emitted by the phone, and it continues blushing for 5 minutes after the call has ended, prolonging the memory of an otherwise transient conversation.

19:01 Posted in Emotional computing | Tags: emotional computing

Jun 04, 2006

EMOSIVE: A mobile service for the emotionally triggered

Re-blogged from Prototype/Interaction Design Cluster


emosive (formerly e:sense) is a new service for mobile devices which allows capturing, storing and sharing of fleeting emotional experiences. It is based on Cognitive Priming theory: as we become more immersed in digital media through our mobile devices, our personal media inventories constantly act as memory aids, “priming” us to better recollect associative, personal (episodic) memories when facing an external stimulus. Being mobile and in a dynamic environment, these recollections are moving, both emotionally and quickly away from us. Anticipating that personal media inventories will soon be accessed from mobile devices and shared with a close circle, emosive bundles text, sound and image animation to capture these fleeting emotional experiences, then share and relive them with loved ones. Playfully borrowing from the terse technical jargon of the mobile world (SMS, MMS), emosive proposes a new, lightweight format of instant messages, dubbed “IFM” – Instant Feeling Messages.

21:57 Posted in Emotional computing | Tags: emotional computing

May 15, 2006

Aesthetic Computing

From Amazon

In Aesthetic Computing, key scholars and practitioners from art, design, computer science, and mathematics lay the foundations for a discipline that applies the theory and practice of art to computing. Aesthetic computing explores the way art and aesthetics can play a role in different areas of computer science. One of its goals is to modify computer science by the application of the wide range of definitions and categories normally associated with making art. For example, structures in computing might be represented using the style of Gaudi or the Bauhaus school. This goes beyond the usual definition of aesthetics in computing, which most often refers to the formal, abstract qualities of such structures--a beautiful proof, or an elegant diagram. The contributors to this book discuss the broader spectrum of aesthetics--from abstract qualities of symmetry and form to ideas of creative expression and pleasure--in the context of computer science. The assumption behind aesthetic computing is that the field of computing will be enriched if it embraces all of aesthetics. Human-computer interaction will benefit--"usability," for example, could refer to improving a user's emotional state--and new models of learning will emerge.

Aesthetic Computing approaches its subject from a variety of perspectives. After defining the field and placing it in its historical context, the book looks at art and design, mathematics and computing, and interface and interaction. Contributions range from essays on the art of visualization and "the poesy of programming" to discussions of the aesthetics of mathematics throughout history and transparency and reflectivity in interface design.

21:45 Posted in Emotional computing | Tags: Positive Technology

May 13, 2006

MoBeeline

Via WMMNA

Chang Soo LEE and HyeJoo Lee have developed MoBeeline, a system that lets people send data about their emotions to another person's clothes via SMS.

From the project's web site:

MoBeeline is a compound word - Mobile + Beeline.

Our mobile service makes a straight line between two or more places. This project is an emotional mobile service based on wearable technology. Its basic focus is to stimulate people's emotions through interaction between mobile and wearable technology, and to develop a social network service among friends.

The main goal is to create a wearable Bluetooth accessory that can receive data from a mobile phone. For example, assume there are two mobile phone users. One user can send commands to the other's clothes as he or she wishes. Without having to meet, the two users can share their feelings and emotions by sending signals to each other's clothes. Using our service, they can change the colors or patterns of each other's garments, or send emoticons to LEDs on the garment.
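The post does not describe the message format MoBeeline actually uses. As a purely hypothetical illustration, a garment could accept SMS commands of the form `KIND value` and turn them into color, pattern, or emoticon changes. The command syntax, `GarmentCommand`, and `parse_sms` below are invented for this sketch:

```python
from typing import NamedTuple, Optional

class GarmentCommand(NamedTuple):
    kind: str   # "color", "pattern" or "emoticon"
    value: str  # e.g. "red", "stripes", ":)"

VALID_KINDS = {"color", "pattern", "emoticon"}

def parse_sms(text: str) -> Optional[GarmentCommand]:
    """Parse a hypothetical 'KIND value' SMS into a garment command, or None if invalid."""
    parts = text.strip().split(maxsplit=1)
    if len(parts) != 2:
        return None  # needs both a kind and a value
    kind, value = parts[0].lower(), parts[1].strip()
    if kind not in VALID_KINDS:
        return None  # unknown command kinds are ignored
    return GarmentCommand(kind, value)

print(parse_sms("COLOR red"))  # GarmentCommand(kind='color', value='red')
```

In a real system the parsed command would still have to be forwarded from the phone to the garment over Bluetooth; that step is not shown.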


19:54 Posted in Emotional computing | Tags: Positive Technology

May 10, 2006

We Feel Fine

From We Feel Fine project's website

Since August 2005, We Feel Fine, An Exploration of Human Emotion, in Six Movements, has been harvesting human feelings from a large number of weblogs. Every few minutes, the system searches the world's newly posted blog entries for occurrences of the phrases "I feel" and "I am feeling". When it finds such a phrase, it records the full sentence, up to the period, and identifies the "feeling" expressed in that sentence (e.g. sad, happy, depressed, etc.). Because blogs are structured in largely standard ways, the age, gender, and geographical location of the author can often be extracted and saved along with the sentence, as can the local weather conditions at the time the sentence was written. All of this information is saved. The result is a database of several million human feelings, increasing by 15,000 - 20,000 new feelings per day. At its core, We Feel Fine - by Jonathan Harris & Sepandar Kamvar - is an artwork authored by everyone. It will grow and change as we grow and change, reflecting what's on our blogs, what's in our hearts, what's in our minds. We hope it makes the world seem a little smaller, and we hope it helps people see beauty in the everyday ups and downs of life.
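The harvesting step described above can be sketched in a few lines. This is a minimal sketch, not the project's code: it assumes naive sentence splitting on periods and a small hypothetical `FEELINGS` vocabulary, since the post does not give the list of feelings the real system recognizes, and it skips the author and weather metadata.

```python
import re
from typing import List, Optional, Tuple

# Hypothetical feeling vocabulary; the post does not list the real one.
FEELINGS = {"sad", "happy", "depressed", "tired", "lonely", "fine", "good", "bad"}

# The two trigger phrases named in the post.
TRIGGER = re.compile(r"\b(?:I feel|I am feeling)\b", re.IGNORECASE)

def extract_feelings(post: str) -> List[Tuple[str, Optional[str]]]:
    """Return (sentence, feeling) pairs for sentences containing a trigger phrase."""
    results = []
    # Naive split: each chunk is the text "up to the period".
    for chunk in post.split("."):
        sentence = chunk.strip()
        match = TRIGGER.search(sentence)
        if not match:
            continue
        # Take the first known feeling word that follows the trigger phrase.
        feeling = None
        for word in re.findall(r"[a-z]+", sentence[match.end():].lower()):
            if word in FEELINGS:
                feeling = word
                break
        results.append((sentence + ".", feeling))
    return results

post = "Long day at work. I feel so tired today. I am feeling happy now."
print(extract_feelings(post))
# [('I feel so tired today.', 'tired'), ('I am feeling happy now.', 'happy')]
```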

22:18 Posted in Emotional computing | Tags: Positive Technology

Apr 21, 2006

The mood of your blog

Via Smart Mobs (New Scientist)

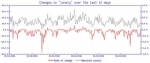

New Scientist has an article about software called MoodViews that tracks mood swings across the 'blogosphere' and pinpoints the events behind them. MoodViews was created by Gilad Mishne and colleagues at the University of Amsterdam, The Netherlands. At present, MoodViews consists of three components, each offering a different view of global mood levels, that is, the aggregate level of each mood across all postings:

- Moodgrapher tracks the global mood levels,

- Moodteller predicts them, and

- Moodsignals helps in understanding the underlying reasons for mood changes.
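One way to picture a "global mood level" is as the share of posts in a time window that carry a given mood. A minimal sketch of that aggregation, assuming each post arrives as a (timestamp, mood label) pair; the input format and the one-hour window are assumptions, not details taken from MoodViews:

```python
from collections import Counter, defaultdict
from datetime import datetime
from typing import Dict, List, Tuple

def mood_levels(posts: List[Tuple[datetime, str]]) -> Dict[datetime, Dict[str, float]]:
    """Aggregate mood-labelled posts into per-hour levels (each mood's share of that hour)."""
    buckets = defaultdict(Counter)
    for timestamp, mood in posts:
        hour = timestamp.replace(minute=0, second=0, microsecond=0)  # truncate to the hour
        buckets[hour][mood] += 1
    levels = {}
    for hour in sorted(buckets):
        counts = buckets[hour]
        total = sum(counts.values())
        levels[hour] = {mood: n / total for mood, n in counts.items()}
    return levels

posts = [
    (datetime(2006, 4, 21, 9, 10), "happy"),
    (datetime(2006, 4, 21, 9, 30), "tired"),
    (datetime(2006, 4, 21, 9, 45), "happy"),
    (datetime(2006, 4, 21, 10, 5), "tired"),
]
print(mood_levels(posts)[datetime(2006, 4, 21, 10)])  # {'tired': 1.0}
```

Reading one mood's share across successive hours gives a simple time series of the sort Moodgrapher tracks; predicting and explaining changes, as Moodteller and Moodsignals do, would need considerably more than this.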

From the MoodViews website:

"Check out the impact of global events on global moods. Find out whether it is true that people drink more during the weekend. Observe states-of-mind with a cyclic nature; e.g., people feel energetic in the mornings and relaxed in the evening"

19:10 Posted in Emotional computing | Tags: Positive Technology

Apr 16, 2006

Emotion mapping

Via the Observer

Thirty-year-old artist Christian Nold has created an "emotion mapping" device that allows people to compare their moods with their surroundings. It measures not just major reactions that tend to stick in the memory, but also the degrees of stimulation caused by speaking to a stranger, crossing the road or listening to birdsong. Emotion mapping combines two existing technologies: a galvanic skin response (GSR) sensor, which records changing sweat levels on the skin as a measure of mental arousal, and the Global Positioning System (GPS). By calling up data from the finger cuffs, emotion mapping displays the user's fluctuating level of arousal as peaks and troughs along the route. So a walk down a country lane might produce only a mild curve, but dashing across a busy road or being confronted by a mugger might show up as a sudden spike.
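The peaks-and-troughs idea can be illustrated by pairing each GSR reading with the GPS fix taken at the same moment and flagging readings that jump well above the recent average. The `Sample` type, the five-sample window, and the 1.5× threshold below are hypothetical choices for illustration, not Nold's actual processing:

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Sample:
    lat: float  # GPS latitude at the moment of the reading
    lon: float  # GPS longitude
    gsr: float  # galvanic skin response (arbitrary units)

def find_spikes(samples: List[Sample], window: int = 5, threshold: float = 1.5) -> List[Sample]:
    """Return samples whose GSR exceeds `threshold` times the mean of the preceding `window` readings."""
    spikes = []
    for i in range(window, len(samples)):
        baseline = sum(s.gsr for s in samples[i - window:i]) / window
        if samples[i].gsr > threshold * baseline:
            spikes.append(samples[i])  # keeps the location, so the spike can be mapped
    return spikes

# A calm walk with one sudden jump in arousal at the seventh reading.
route = [Sample(51.5 + i * 0.001, -0.1, g) for i, g in enumerate([1, 1, 1, 1, 1, 1, 3, 1])]
print([s.gsr for s in find_spikes(route)])  # [3]
```

Because each flagged sample carries its coordinates, the spikes can be plotted directly on the route, which is the essence of the emotion map.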


And here is the tool and what Christian did with it. It's actually worth seeing, especially the "Google Earth" version of the emotion map.

11:55 Posted in Emotional computing | Tags: Positive Technology

Apr 10, 2006

Linux devices: Emotional lamp

Via Linux devices

The "emotional lamp", Violet's Dal lamp, is a WiFi-connected device that can be programmed to respond to real-world events by emanating sequences of gentle color.

Unlike a telephone or television, the lamp presents information without making intrusive or extensive demands on the user's time. Messages and information are diffused subtly into the ambient environment, communicated through "color changes and their rate/rhythm of posting."


Customizable, built-in functions include multi-day weather forecasts, stock market monitoring, traffic conditions on a daily commute route, receipt of a large number of emails or email from an important person, or Web site updates containing specified key words. Additional built-in functions are planned.
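The idea of encoding information in both color and rhythm can be sketched for the stock-market case. The mapping below (green or red for direction, a faster pulse for a larger move) and the `Animation` type are a hypothetical example, not the lamp's actual behavior:

```python
from dataclasses import dataclass

@dataclass
class Animation:
    color: str       # color the lamp glows
    period_s: float  # seconds per pulse; shorter means a faster rhythm

def stock_animation(change_pct: float) -> Animation:
    """Map a daily stock change to a color (direction) and a pulse rate (size of move)."""
    color = "green" if change_pct >= 0 else "red"
    # A flat day pulses every 4 s; each point of change speeds it up, floored at 0.5 s.
    period = max(0.5, 4.0 - abs(change_pct))
    return Animation(color, period)

print(stock_animation(-5.0))  # Animation(color='red', period_s=0.5)
```

Splitting the signal this way lets a glance at the color answer "up or down?" while the rhythm conveys "by how much?", which matches the post's point that information is carried by color changes and their rate.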

Personalization features enable the creation of "bouquets" of friends authorized to interact with the Dal lamp through email, SMS, a Dal lamp of their own, or a telephone gateway service maintained by Violet. From the Violet Website: "The messages are colored animations that can be created for each type of emotion you want to show. A personal language and grammar can be created between two persons: only they know what the lamp is expressing."

The Dal lamp has been exhibited at some of the world's most prestigious museums, including the Centre Pompidou in Paris and The City of Science and Industry in Seoul, Korea. It received the "Star of the Observeur de design, 2004," a design award from the French Agency for the Promotion of Industrial Creations.

17:40 Posted in Emotional computing | Tags: Positive Technology

Mar 30, 2006

Device warns you if you're boring or irritating

The "emotional social intelligence prosthetic" device, which Rana el Kaliouby is building with MIT colleagues Rosalind Picard and Alea Teeters, consists of a camera small enough to be pinned to the side of a pair of glasses, connected to a hand-held computer running image recognition software plus software that reads the emotions these images show. If the wearer seems to be failing to engage his or her listener, the software makes the hand-held computer vibrate.
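The decision to vibrate can be sketched as a rolling average over per-frame engagement scores. This assumes a hypothetical upstream classifier that turns each camera frame into a score between 0 and 1; the `EngagementMonitor` class, window size, and threshold are illustrative, not values from the MIT project:

```python
from collections import deque

class EngagementMonitor:
    """Signal a vibration when average listener engagement over recent frames falls too low."""

    def __init__(self, window: int = 30, threshold: float = 0.4):
        self.scores = deque(maxlen=window)  # keeps only the most recent `window` scores
        self.threshold = threshold

    def update(self, score: float) -> bool:
        """Add one frame's engagement score in [0, 1]; return True if the device should vibrate."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough frames yet to judge
        return sum(self.scores) / len(self.scores) < self.threshold

monitor = EngagementMonitor(window=3, threshold=0.5)
print([monitor.update(s) for s in [0.9, 0.2, 0.1]])  # [False, False, True]
```

Averaging over a window, rather than reacting to a single frame, keeps one glance away from triggering a vibration.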

09:20 Posted in Emotional computing | Tags: Positive Technology

Mar 20, 2006

WMMNA: Instant Feeling Messages

emosive is a service for mobile devices which allows capturing, storing and sharing of fleeting emotional experiences. Based on the Cognitive Priming theory, as we become more immersed in digital media through our mobile devices, our personal media inventories constantly act as memory aids, "priming" us to better recollect associative, personal (episodic) memories when facing an external stimulus. Being mobile and in a dynamic environment, these recollections are moving, both emotionally and quickly away from us. emosive bundles text, sound and image animation to allow capturing these fleeting emotional experiences, then sharing and reliving them with cared others. emosive proposes a new format of instant messages, dubbed IFM – Instant Feeling Messages.

Have a look at the demo, it's a Flash application developed using FlickrFling and live data.

User scenario

While walking in the park and listening to a verse from his and his girlfriend Tina’s favorite tune – Madonna’s Little Star (“Never forget how to dream, Butterfly”), Jake sees a butterfly on a flower. Primed by the romantic musical immersion, Jake notices the colors of the butterfly and immediately recalls Tina’s summer dress of the same color. Jake quickly presses the emosive shortcut key sequence on his device. He snaps a photo of the butterfly and tags the image as "Butterfly". As Jake walks around the city, he captures other fleeting moments, making sure they are tagged to correspond with lyric words. He even adds some tagged images from his Flickr account. He then "wraps" everything as an IFM, previews it and sends it to Tina. When Tina accepts the IFM, it streams to her phone and synchronizes the tune and the images, based on the tagged lyric words. The stored IFM can also be viewed as an emosive experience in any web browser.
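The synchronization in this scenario amounts to matching image tags against timed lyric words. A minimal sketch, assuming the lyrics come as (seconds, word) pairs and the images as a tag-to-file dictionary; both formats, the function name, and the timings in the example are hypothetical, not emosive's:

```python
from typing import Dict, List, Tuple

def build_schedule(lyrics: List[Tuple[float, str]], images: Dict[str, str]) -> List[Tuple[float, str]]:
    """Pair each timed lyric word with the image whose tag matches it, in song order."""
    by_tag = {tag.lower(): path for tag, path in images.items()}
    schedule = []
    for seconds, word in sorted(lyrics):
        path = by_tag.get(word.lower().strip(",.!?\"'"))  # ignore case and punctuation
        if path is not None:
            schedule.append((seconds, path))
    return schedule

# Invented timings for the verse in Jake's scenario.
lyrics = [(12.0, "Never"), (12.6, "forget"), (13.1, "how"), (13.4, "to"), (13.8, "dream,"), (14.5, "Butterfly")]
print(build_schedule(lyrics, {"Butterfly": "butterfly.jpg"}))  # [(14.5, 'butterfly.jpg')]
```

The player would then show each image at its scheduled time while the tune streams, so Jake's "Butterfly" photo appears exactly as Madonna sings the word.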

The emosive (formerly e:sense) project was developed by the design team of the Designs Which Create Design workshop, held at the University Institute of Architecture of Venice (IUAV) 2006.

11:00 Posted in Emotional computing | Tags: Positive Technology

Mar 16, 2006

KOTOHANA

Via Pink Tentacle

KOTOHANA is a flower-shaped terminal that lets people communicate emotions remotely using LED light. The LEDs change color according to the emotions felt by the remote person. The remote person's emotional state is inferred by analysing affective correlates of his or her voice; the results of the analysis are sent via wireless LAN to the other terminal, where they are expressed as LED light. KOTOHANA is a joint project of NEC, NEC Design and SGI Japan.
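The post does not say which vocal features KOTOHANA analyses. As a crude, hypothetical stand-in, loudness (RMS energy) is one commonly cited correlate of vocal arousal; the sketch below maps it onto a blue-to-red LED color. The function names and the `full_scale` calibration value are assumptions:

```python
import math
from typing import List, Tuple

def rms(frame: List[float]) -> float:
    """Root-mean-square energy of an audio frame with samples in [-1, 1]."""
    if not frame:
        return 0.0
    return math.sqrt(sum(x * x for x in frame) / len(frame))

def arousal_to_rgb(level: float) -> Tuple[int, int, int]:
    """Blend from calm blue (level 0) to excited red (level 1)."""
    level = min(max(level, 0.0), 1.0)
    return (round(255 * level), 0, round(255 * (1 - level)))

def frame_to_led(frame: List[float], full_scale: float = 0.5) -> Tuple[int, int, int]:
    """Treat RMS loudness, relative to an assumed full-scale value, as the arousal level."""
    return arousal_to_rgb(rms(frame) / full_scale)

print(frame_to_led([0.0, 0.0]))  # (0, 0, 255): silence shows as calm blue
```

Loudness alone is a weak proxy for emotion; a real system would combine several vocal features, and the resulting color would then be sent to the remote terminal.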

18:33 Posted in Emotional computing | Tags: Positive Technology

Feb 11, 2006

Cloud

19:45 Posted in Emotional computing, Meditation & brain, Serious games | Tags: emotional computing, serious gaming

Jan 27, 2006

Using psychophysiological techniques to measure user experience with entertainment technologies

19:46 Posted in Emotional computing | Tags: Positive Technology

Jan 20, 2006

E-motional linkage

21:21 Posted in Emotional computing | Tags: Positive Technology

Jan 16, 2006

Mood-aware computing

Via smart mobs

Researchers at the Fraunhofer Institute are working on a system intended to estimate human emotions. Taking advantage of the latest developments in image analysis, sensors and psychophysiology, their ultimate goal is to train computers to interpret users' emotions and respond accordingly.

Read the full press release from the Institute website

11:15 Posted in Emotional computing | Tags: Positive Technology

Jan 09, 2006

XPod: Emotion-aware mobile music player

A paper by Andor Dornbush, Kevin Fisher, Kyle McKay, Alex Prikhodko, and Zary Segall describes a mobile MP3 player, the XPod, which is able to automatically select the song best suited to the user's emotional state.

Here is an excerpt from the article (I am quoting it from Nicholas' blog Pasta and Vinegar).

the notion of collecting human emotion and activity information from the user, and explore how this information could be used to improve the user experience with mobile music players.

(…)

a mobile MP3 player, XPod, which is able to automate the process of selecting the song best suited to the emotion and the current activity of the user. The XPod concept is based on the idea of automating much of the interaction between the music player and its user.

(…)

After an initial training period, the XPod is able to use its internal algorithms to make an educated selection of the song that would best fit its user’s emotion and situation. We use the data gathered from a streaming version of the BodyMedia SenseWear to detect different levels of user activity and emotion. After determining the state of the user the neural network engine compares the user’s current state, time, and activity levels to past user song preferences matching the existing set of conditions and makes a musical selection. The XPod system was trained to play different music based on the user’s activity level. A simple pattern was used so the state-dependent customization could be verified. XPod successfully learned the pattern of listening behavior exhibited by the test user. As the training proceeded the XPod learned the desired behavior and chose music to match the preferences of the test user. XPod automates the process of choosing music best suited for a user’s current activity. The success of the initial implementation of XPod concepts provides the basis for further exploration of human- and emotion-aware mobile music players.
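The paper uses a neural network; the sketch below swaps in a simpler technique, k-nearest-neighbor voting, to show the same idea of matching the current state against past listening choices. The feature triple (activity, arousal, time of day, each scaled to 0 to 1), the `pick_song` function, and the sample data are hypothetical:

```python
import math
from collections import Counter
from typing import List, Tuple

State = Tuple[float, float, float]  # (activity, arousal, time of day), each scaled to 0..1

def pick_song(history: List[Tuple[State, str]], current: State, k: int = 3) -> str:
    """Return the song chosen most often in the k past states closest to `current`.

    Assumes a non-empty history of (state, song) pairs.
    """
    nearest = sorted(history, key=lambda item: math.dist(item[0], current))[:k]
    votes = Counter(song for _, song in nearest)
    return votes.most_common(1)[0][0]

history = [
    ((0.9, 0.8, 0.33), "upbeat"),
    ((0.8, 0.7, 0.38), "upbeat"),
    ((0.1, 0.2, 0.92), "calm"),
    ((0.2, 0.1, 0.96), "calm"),
    ((0.85, 0.75, 0.75), "upbeat"),
]
print(pick_song(history, (0.15, 0.15, 0.94)))  # calm: a quiet, late-evening state
```

Scaling every feature to the same 0 to 1 range matters here: otherwise a raw hour-of-day value would dominate the distance and drown out activity and arousal.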

19:55 Posted in Emotional computing | Tags: Positive Technology, experience computing