Sep 19, 2010

Artificial skin projects could restore feeling to wearers of prosthetic limbs

Via Telemedicine and E-Health news

Research groups at Stanford University and the University of California at Berkeley are developing sensor-based artificial skin that could provide prosthetic and robotic limbs with a realistic sense of touch. Stanford's project is based on organic electronics and is capable of detecting the weight of a fly upon the artificial skin, according to Zhenan Bao, professor of chemical engineering at Stanford.

The highly sensitive surfaces could also help robots pick up delicate objects without breaking them, improve surgeons' control over tools used for minimally invasive surgery, and increase efficiency of touch screen devices, she noted. Meanwhile, UC Berkeley's "e-skin" uses low-power, integrated arrays of nanowire transistors, according to UC Berkeley Professor of Electrical Engineering and Computer Science Ali Javey.

Thus far, the skin, the first ever made out of inorganic single crystalline semiconductors, is able to detect pressure equivalent to the touch of a keyboard. "It's a technique that can be potentially scaled up," said study lead author Kuniharu Takei, post-doctoral fellow in electrical engineering and computer sciences at UC Berkeley. "The limit now to the size of the e-skin we developed is the size of the processing tools we are using."

20:31 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial skin, prosthetic, robotics, touch

Apr 07, 2010

Geminoid F: Remote-control female android

Researchers from the Intelligent Robotics Laboratory at Osaka University have teamed up with robot maker Kokoro Co., Ltd. to create a realistic-looking remote-control female android that mimics the facial expressions and speech of a human operator.

Modeled after a woman in her twenties, the android has long black hair, soft silicone skin, and a set of lifelike teeth that allow her to produce a natural smile.

According to the developers, the robot's friendly and approachable appearance makes her suitable for receptionist work at sites such as museums. The researchers also plan to test her ability to put hospital patients at ease.

The research is being led by Osaka University professor Hiroshi Ishiguro, who is known for creating teleoperated robot twins such as the celebrated Geminoid HI-1, which was modeled after himself.

The new Geminoid F can produce facial expressions more naturally than its predecessors and it does so with a much more efficient design. While the previous Geminoid HI-1 model was equipped with 46 pneumatic actuators, the Geminoid F uses only 12.

In addition, the entire air servo control system is housed within the robot's body and is powered by a small external compressor that runs on standard household electricity.

The Geminoid F's easy-to-use teleoperation system, which was developed by ATR Intelligent Robotics and Communication Laboratories, consists of a smart camera that tracks the operator's facial movements. The corresponding data is relayed to the robot's control system, which coordinates the movement of the pneumatic actuators to reproduce the expressions on the android's face.
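The teleoperation pipeline described above (tracked facial movements in, actuator movements out) can be pictured with a minimal Python sketch. Everything in it is an assumption made for illustration: the feature names, the actuator names, the gains, and the 0..1 value range are hypothetical, and only five of the twelve actuators are shown. It is not the ATR system, whose internals the post does not describe.

```python
# Hypothetical mapping from tracked facial features to actuator set-points.
# Feature names, actuator names, and gains are invented for illustration.
GAINS = {
    "jaw": {"mouth_open": 1.0},
    "lip_corner_left": {"smile": 0.8},
    "lip_corner_right": {"smile": 0.8},
    "brow_left": {"brow_raise": 1.0},
    "brow_right": {"brow_raise": 1.0},
}


def clamp(value, low=0.0, high=1.0):
    """Keep a set-point inside the range the actuator is assumed to accept."""
    return max(low, min(high, value))


def actuator_targets(features, gains=GAINS):
    """Map tracked facial features (assumed 0..1) to actuator set-points (0..1)."""
    targets = {}
    for actuator, weights in gains.items():
        # Weighted sum of the features that drive this actuator;
        # features the camera did not report count as 0.
        total = sum(w * features.get(name, 0.0) for name, w in weights.items())
        targets[actuator] = clamp(total)
    return targets
```

Calling `actuator_targets({"smile": 0.5})` would move only the two lip-corner actuators in this sketch and leave the jaw and brows at rest.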

The efficient design makes the robot much cheaper to produce than previous models. Kokoro plans to begin selling copies of the Geminoid F next month for about 10 million yen ($110,000) each.

17:09 Posted in AI & robotics | Permalink | Comments (0) | Tags: geminoid, android, female

Dec 08, 2009

FaceBots

The world's first robot with its own Facebook page (and that can use its information in conversations with "friends") has been developed by Nikolaos Mavridis and collaborators from the Interactive Robots and Media Lab at the United Arab Emirates University.

The main hypothesis of the FaceBots project is that long-term human-robot interaction will benefit from reference to "shared memories" and "events relevant to shared friends" in human-robot dialogues.

More to explore:

N. Mavridis, W. Kazmi and P. Toulis, "Friends with Faces: How Social Networks Can Enhance Face Recognition and Vice Versa", contributed book chapter to Computational Social Networks Analysis: Trends, Tools and Research Advances, Springer Verlag, 2009. pdf

N. Mavridis, W. Kazmi, P. Toulis, C. Ben-AbdelKader, "On the synergies between online social networking, Face Recognition, and Interactive Robotics", CaSoN 2009. pdf

N. Mavridis, C. Datta et al, "Facebots: Social robots utilizing and publishing social information in Facebook", IEEE HRI 2009. pdf

22:41 Posted in AI & robotics, Social Media | Permalink | Comments (0) | Tags: robotics, artificial intelligence, social networks

Sep 25, 2009

Miruko Eyeball Robotic Eye

Via Pink Tentacle

Miruko is a camera robot in the shape of an eyeball capable of tracking objects and faces. Worn on the player’s sleeve, Miruko’s roving eye scans the surroundings in search of virtual monsters that are invisible to the naked human eye. When a virtual monster is spotted, the mechanical eyeball rolls around in its socket and fixes its gaze on the monster’s location. By following Miruko’s line of sight, the player is able to locate the virtual monster and “capture” it via his or her iPhone camera.

In this video, Miruko’s creators demonstrate how the robotic eyeball can be used as an interface for a virtual monster-hunting game played in a real-world environment.

According to its creators, Miruko can be used for augmented reality games, security, and navigation.

18:31 Posted in AI & robotics | Permalink | Comments (0) | Tags: robotics, iphone, augmented reality

Sep 21, 2009

HAL: New assistive walking device

Japanese company Cyberdyne, with scientific support from Professor Sankai of Tsukuba University, has developed the Hybrid Assistive Limb (HAL), a device designed to help people walk or carry heavy loads. The assistive walking system weighs 10 kilograms and has a battery at the back. Embedded sensors on the skin surface pick up the electrical signals that the brain sends to the muscles. Thanks to these sensors, the system can help users move in the direction they intend. The walking speed is 1.8 km/h.

Watch the HAL in action in this video:

17:10 Posted in AI & robotics | Permalink | Comments (0) | Tags: robotics

Jul 22, 2008

Robot butler

Here are the major functionalities of Care-O-bot 3:

- Omnidirectional Navigation: Care-O-bot 3 has an omnidirectional platform with four steered and driven wheels. This kinematic system enables the robot to move in any desired direction and therefore to negotiate narrow passages safely.

- Safe Manipulation: Care-O-bot 3 is equipped with a highly flexible, commercial arm with seven degrees of freedom as well as with a three-finger hand. This makes it capable of gripping and operating a large number of different everyday objects.

- 3D Environment Detection: A multiplicity of sensors enables Care-O-bot 3 to detect the environment in which it is operating. These range from stereo vision colour cameras and laser scanners to a 3D depth-image camera.

- Software Architecture/Middleware: Several interlinked computers are used to evaluate and control the sensors and actuators inside the robot. The system resources are coordinated and managed by a specially developed middleware which controls communications between the individual processes and which reacts appropriately in the event of a malfunction.

- Human-Machine Interaction: The primary interface between Care-O-bot 3 and the user consists of a tray attached to the front of the robot, which carries objects for exchange between the human and the robot. The tray includes a touch screen and retracts automatically when not in use. A laser projector on the gripper also enables the robot to project information onto objects.
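To make the omnidirectional navigation item above more concrete, here is a minimal Python sketch of the standard inverse kinematics for a platform with four independently steered and driven wheels. The wheel positions are hypothetical (the post gives no dimensions), and this is a textbook formulation, not the Care-O-bot 3 control code.

```python
import math

# Hypothetical wheel positions in metres, relative to the platform centre
# (x forward, y to the left). The real geometry is not given in the post.
WHEELS = {
    "front_left": (0.25, 0.25),
    "front_right": (0.25, -0.25),
    "rear_left": (-0.25, 0.25),
    "rear_right": (-0.25, -0.25),
}


def wheel_commands(vx, vy, omega, wheels=WHEELS):
    """Return {wheel: (steer_angle_rad, speed_m_s)} for a desired body motion.

    vx, vy are the desired platform velocities in m/s and omega is the
    desired rotation rate in rad/s (counter-clockwise positive).
    """
    commands = {}
    for name, (x, y) in wheels.items():
        # Velocity of the wheel contact point: body velocity plus omega x r.
        wx = vx - omega * y
        wy = vy + omega * x
        commands[name] = (math.atan2(wy, wx), math.hypot(wx, wy))
    return commands
```

For a pure sideways motion such as `wheel_commands(0.0, 0.5, 0.0)`, every wheel steers to 90 degrees and runs at the same speed, which is what lets a platform of this kind slide through a narrow passage without turning its body.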

14:25 Posted in AI & robotics | Permalink | Comments (0)

Mar 16, 2008

A second life for AI

Source: Eetimes

Passing the Turing test, the holy grail of AI (a human conversing with a computer can't tell it's not human), may now be possible in a limited way with the world's fastest supercomputer (IBM's Blue Gene) and by mimicking the behavior of a human-controlled avatar in a virtual world, according to AI experts at Rensselaer Polytechnic Institute. "We are building a knowledge base that corresponds to all of the relevant background for our synthetic character--where he went to school, what his family is like, and so on," said Selmer Bringsjord, head of Rensselaer's Cognitive Science Department and leader of the research project. The researchers plan to engineer, from the start, a full-blown intelligent character and converse with him in an interactive virtual environment, like Second Life.

read full article here

23:46 Posted in AI & robotics, Virtual worlds | Permalink | Comments (0) | Tags: virtual reality, artificial intelligence

Dec 19, 2007

Avatar-controlled robots

Via KurzweilAI.net

Researchers at Korea Advanced Institute of Science and Technology have developed a system for controlling physical robots using software robots, displayed as virtual-reality avatars.

Article

23:53 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence, virtual reality

Dec 07, 2007

Toyota unveils robot violinist

14:03 Posted in AI & robotics | Permalink | Comments (0)

Dec 04, 2007

Simroid

Via Pink Tentacle

Simroid is a robotic dental patient designed by Kokoro Company Ltd as a training tool for dentists.

The simulated patient can follow spoken instructions, closely monitor a dentist’s performance during mock treatments, and react in a human-like way to mouth pain thanks to mouth sensors.

08:37 Posted in AI & robotics | Permalink | Comments (0) | Tags: robotics, artificial intelligence

Nov 25, 2007

Brain2Robot

Electrodes attached to the patient's scalp measure the brain's electrical signals, which are amplified and transmitted to a computer. Highly efficient algorithms analyze these signals using a self-learning technique. The software is capable of detecting changes in brain activity that take place even before a movement is carried out. It can recognize and distinguish between the patterns of signals that correspond to an intention to raise the left or right hand, and extract them from the pulses being fired by millions of other neurons in the brain. These neural signal patterns are then converted into control instructions for the computer.

"The aim of the project is to help people with severe motor disabilities to carry out everyday tasks. The advantage of our technology is that it is capable of translating an intended action directly into instructions for the computer," says team leader Florin Popescu.

The Brain2Robot project has been granted around 1.3 million euros in research funding under the EU's sixth Framework Programme (FP6). Its focus lies on developing medical applications, in particular control systems for prosthetics, personal robots and wheelchairs.

The researchers have also developed a "thought-controlled typewriter", a communication device that enables severely paralyzed patients to pick out letters of the alphabet and write texts. The robot arm could be ready for commercialization in just a few years' time.
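The idea of telling a left-hand intention from a right-hand one can be illustrated with a very small Python sketch. It is not the project's self-learning algorithm: it swaps in a fixed textbook rule, namely that planning a hand movement tends to reduce rhythm power over the motor cortex on the opposite side of the head. The electrode names C3 (left hemisphere) and C4 (right hemisphere) and the assumption that the samples are already band-pass filtered are choices made for this example only.

```python
def mean_power(samples):
    """Average squared amplitude of a window of (already filtered) EEG samples."""
    return sum(s * s for s in samples) / len(samples)


def guess_hand(c3_rest, c3_now, c4_rest, c4_now):
    """Guess the intended hand from the relative power drop at C3 and C4.

    Each argument is a list of samples: a resting baseline window and a
    current window for each of the two (assumed) electrodes.
    """
    drop_c3 = 1.0 - mean_power(c3_now) / mean_power(c3_rest)
    drop_c4 = 1.0 - mean_power(c4_now) / mean_power(c4_rest)
    # Motor control is contralateral: a larger drop over the left
    # hemisphere (C3) points to the right hand, and vice versa.
    return "right" if drop_c3 > drop_c4 else "left"
```

A learned classifier of the kind the press release describes would replace the fixed comparison with decision boundaries fitted to each user's recordings.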

Press release: Brain2Robot

Project page: Brain2Robot

23:50 Posted in AI & robotics, Brain-computer interface, Cybertherapy | Permalink | Comments (0) | Tags: brain-computer interface

Oct 25, 2007

Japanese android recognizes and uses body language

Via Pink Tentacle

Japan’s National Institute of Information and Communications Technology (NICT) researchers have developed an autonomous humanoid robot that can recognize and use body language. According to the press release, the android can use nonverbal communication skills such as gestures and touch to facilitate natural interaction with humans. NICT researchers envision future applications of this technology in robots that can work in the home or assist with rescue operations when disaster strikes.

NICT press release (Japanese)

22:45 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence, robotics

Oct 07, 2007

NIPS 2007 WORKSHOP: Robotics Challenges for Machine Learning

Dates: 7-8 December, 2007

Organizers:

Jan Peters (Max Planck Institute for Biological Cybernetics & USC), Marc Toussaint (Technical University of Berlin)

http://www.robot-learning.de

email: nips07@robot-learning.de

Acceptance Notification: October 26, 2007

22:12 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence, robotics

Oct 01, 2007

Oribotics

Part of the Melbourne International Arts Festival

Oribotics [network] is a unique art and technology installation in the Atrium at Federation Square, drawing on cutting edge research in biology, computing, and scientific origami. Discover living biomimetic works attached to the glass panes of the Atrium’s Fracture Galleries.

Seek out Oribotics [network] and you will find robots rooted to the architecture, surviving on solar power, with their faceted folded mechanical blossoms attracting data, moving in response to the physical audience and stimuli from online users at www.oribotics.net. In Oribotics [network] each robot is individually connected to the vastness of the internet, and to local mobile phone, Bluetooth and wifi networks, enabling interaction via mobile devices and the web.

Bring your laptop, PDA, or mobile phone, start up your Bluetooth and wifi connections and ‘network’ with the Oribots. Or point your browser to www.oribotics.net and explore the virtual world of the oribots digestion. Oribotics [network] continues multimedia artist Matthew Gardiner’s research into the hybrid art / science field that fuses the ancient art of origami with robotic technology. Witness the results of four years’ development of intricately folded designs integrated with robotic mechanisms.

This is the most complex generation of oribots to date. With support from Arts Victoria Innovation fund, we are powering ahead into new realms.

At the moment we are working with compact computers (about the size of a greeting card), Micro Linear Actuators, designing flowers from water bombs, and using some of the strongest sticky tape in the world… If all that sounds a little odd, then you’d better read the blog. - Matthew Gardiner

Oribotics.net is designed and coded by Matthew Gardiner & My Trinh Gardiner at http://www.airstrip.com.au.

22:26 Posted in AI & robotics, Cyberart | Permalink | Comments (0) | Tags: robotics, cyberart

Artificial brain falls for optical illusions

Via New Scientist

A computer program that emulates the human brain falls for the same optical illusions humans do.

It suggests the illusions are a by-product of the way babies learn to filter their complex surroundings. Researchers say this means future robots must be susceptible to the same tricks as humans are in order to see as well as us.

For some time, scientists have believed one class of optical illusions results from the way the brain tries to disentangle the colour of an object and the way it is lit. An object may appear brighter or darker, either because of the shade of its colour, or because it is in bright light or shadows.

The brain learns how to tackle this through trial and error when we are babies, the theory goes. Mostly it gets it right, but occasionally a scene contradicts our previous experiences. The brain gets it wrong and we perceive an object lighter or darker than it really is – creating an illusion.

Read full article

22:15 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence

Sep 27, 2007

A device for robotic upper extremity repetitive therapy

Design and control of RUPERT: a device for robotic upper extremity repetitive therapy.

IEEE Trans Neural Syst Rehabil Eng. 2007 Sep;15(3):336-46

Authors: Sugar TG, He J, Koeneman EJ, Koeneman JB, Herman R, Huang H, Schultz RS, Herring DE, Wanberg J, Balasubramanian S, Swenson P, Ward JA

The structural design, control system, and integrated biofeedback for a wearable exoskeletal robot for upper extremity stroke rehabilitation are presented. Assisted with clinical evaluation, designers, engineers, and scientists have built a device for robotic assisted upper extremity repetitive therapy (RUPERT). Intense, repetitive physical rehabilitation has been shown to be beneficial overcoming upper extremity deficits, but the therapy is labor intensive and expensive and difficult to evaluate quantitatively and objectively. The RUPERT is developed to provide a low cost, safe and easy-to-use, robotic-device to assist the patient and therapist to achieve more systematic therapy at home or in the clinic. The RUPERT has four actuated degrees-of-freedom driven by compliant and safe pneumatic muscles (PMs) on the shoulder, elbow, and wrist. They are programmed to actuate the device to extend the arm and move the arm in 3-D space. It is very important to note that gravity is not compensated and the daily tasks are practiced in a natural setting. Because the device is wearable and lightweight to increase portability, it can be worn standing or sitting providing therapy tasks that better mimic activities of daily living. The sensors feed back position and force information for quantitative evaluation of task performance. The device can also provide real-time, objective assessment of functional improvement. We have tested the device on stroke survivors performing two critical activities of daily living (ADL): reaching out and self feeding. The future improvement of the device involves increased degrees-of-freedom and interactive control to adapt to a user's physical conditions.

23:33 Posted in AI & robotics, Cybertherapy | Permalink | Comments (0) | Tags: cybertherapy

Sep 20, 2007

Japanese seniors bored with robot

From Robot.net

According to a Reuters report, Japanese senior citizens quickly become bored with the simple robots so far introduced into nursing homes:

"The residents liked ifbot for about a month before they lost interest. Stuffed animals are more popular." Ifbot is a small robot that can converse, sing, express emotions, and even present trivia quizzes to senior citizens. According to the article, the robot has spent most of the past year sitting in a corner, unused. Another robot, Hopis, that looked like a furry pink dog has gone out of production due to poor sales. Hopis was designed to monitor blood sugar, blood pressure, and body temperature. One problem may be that both robots are little more than advanced toys. Neither can help elderly people with day-to-day problems they face such as getting around their house, reading small print, or taking a bath. The elderly have found utilitarian improvements in existing devices more useful: height-adjustable countertops or extra-big control buttons on household gadgets. Whether seniors will find robots that can help with their utilitarian needs more to their liking than fluffy pupbots that sing remains to be seen."

21:25 Posted in AI & robotics | Permalink | Comments (0) | Tags: robotics, artificial intelligence

Sep 16, 2007

EU researchers implanted an artificial cerebellum inside a robot

Roland Piquepaille reports that "An international team of European researchers has implanted an artificial cerebellum - the portion of the brain that controls motor functions - inside a robotic system. This EU-funded project is dubbed SENSOPAC, an acronym for "SENSOrimotor structuring of perception and action for emerging cognition". One of the goals of this project is to design robots able to interact with humans in a natural way. This project, which should be completed at the end of 2009, also wants to produce robots which would act as home-helpers for disabled people, such as persons affected by neurological disorders, such as Parkinson's disease."

SENSOPAC website

22:39 Posted in AI & robotics | Permalink | Comments (0) | Tags: robotics, artificial intelligence

Sep 13, 2007

Telepresence robot for interpersonal communication with the elderly

Developing a Telepresence Robot for Interpersonal Communication with the Elderly in a Home Environment.

Telemed J E Health. 2007 Aug;13(4):407-424

Authors: Tsai TC, Hsu YL, Ma AI, King T, Wu CH

"Telepresence" is an interesting field that includes virtual reality implementations with human-system interfaces, communication technologies, and robotics. This paper describes the development of a telepresence robot called Telepresence Robot for Interpersonal Communication (TRIC) for the purpose of interpersonal communication with the elderly in a home environment. The main aim behind TRIC's development is to allow elderly populations to remain in their home environments, while loved ones and caregivers are able to maintain a higher level of communication and monitoring than via traditional methods. TRIC aims to be a low-cost, lightweight robot, which can be easily implemented in the home environment. Under this goal, decisions on the design elements included are discussed. In particular, the implementation of key autonomous behaviors in TRIC to increase the user's capability of projection of self and operation of the telepresence robot, in addition to increasing the interactive capability of the participant as a dialogist are emphasized. The technical development and integration of the modules in TRIC, as well as human factors considerations are then described. Preliminary functional tests show that new users were able to effectively navigate TRIC and easily locate visual targets. Finally the future developments of TRIC, especially the possibility of using TRIC for home tele-health monitoring and tele-homecare visits are discussed.

22:32 Posted in AI & robotics, Telepresence & virtual presence | Permalink | Comments (0) | Tags: robotics, artificial intelligence, telepresence

Jul 26, 2007

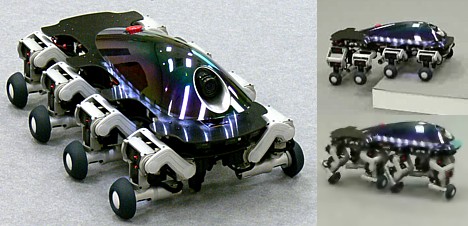

Halluc II: 8-legged robot vehicle

Researchers at the Chiba Institute of Technology have developed a robotic vehicle with eight wheels and legs designed to drive or walk over rugged terrain. The agile robot, which the developers aim to put into practical use within the next five years, can move sideways, turn around in place and drive or walk over a wide range of obstacles.

The researchers hope the robot’s abilities will help out with rescue operations, and they would like to see Halluc II’s technology put to use in transportation for the mobility-impaired.

Here’s a short video of the model in action.

19:05 Posted in AI & robotics | Permalink | Comments (0) | Tags: artificial intelligence, robotics