Dec 14, 2018

Transformative Experience Design

Over the last couple of years, my team and I have been working intensively on a new research program in Positive Technology: Transformative Experience Design.

In short, the goal of this project is to understand how virtual reality, brain-based technologies and the language of arts can support transformative experiences, that is, emotional experiences that promote deep personal change.

About Transformative Experience Design

As noted by Miller and C’de Baca, some life experiences can generate profound and long-lasting shifts in core beliefs and attitudes, including subjective self-transformation. These experiences can change not only what individuals know and value, but also how they see the world.

According to Mezirow’s Transformative Learning Theory, these experiences can be triggered by a “disorienting dilemma”, usually related to a life crisis or major life transition (e.g., death, illness, separation or divorce), which forces individuals to critically examine and eventually revise their core assumptions and beliefs. The outcome of a transformative experience is a significant and permanent change in the expectations (mindsets, perspectives and habits of mind) through which we filter and make sense of the world. Because of these characteristics, transformative experiences are gaining increasing attention not only in psychology and neuroscience, but also in philosophy.

From a psychological perspective, transformative change is often associated with specific experiential states called “self-transcendent experiences”. These are transient mental states that allow individuals to experience something greater than themselves, to reflect on deeper dimensions of their existence and to shape lasting spiritual beliefs. They encompass several mental states, including flow, positive emotions such as awe and elevation, “peak” experiences, “mystical” experiences and mindfulness (for a review, see Yaden et al.). Although the phenomenological profile of these states can vary significantly in quality and intensity, they share a diminished sense of self and increased feelings of connectedness to other people and to one’s surroundings. Previous research has shown that self-transcendent experiences are important sources of positive psychological outcomes, including increased meaning in life, positive mood and life satisfaction, positive behavior change, spiritual development and pro-social attitudes.

One interesting question about self-transcendent experiences is whether, and to what extent, these mental states can be invited or elicited by means of interactive technologies. This question lies at the center of a new research program, Transformative Experience Design (TED), which has two aims:

- to systematically investigate the phenomenological and neuro-cognitive aspects of self-transcendent experiences, as well as their implications for individual growth and psychological wellbeing; and

- to translate such knowledge into a tentative set of design principles for developing “e-experiences” that support meaning in life and personal growth.

The three pillars of TED: virtual reality, arts and neurotechnologies

I have identified three possible assets that can be combined to achieve this goal:

- The first asset concerns the use of advanced simulation technologies, such as virtual, augmented and mixed reality, as the medium of choice for generating controlled alterations of perceptual, motor and cognitive processes.

- The second asset regards the use of the language of arts to create emotionally-compelling storytelling scenarios.

- The third and final element of TED concerns the use of brain-based technologies, such as brain stimulation and bio/neurofeedback, to modulate neuro-physiological processes underlying self-transcendence mental states, using a closed-loop approach.

The central assumption of TED is that combining these means opens up a broad spectrum of transformative possibilities, such as experiencing “what it is like” to embody another self or another life form, simulating peculiar neurological phenomena like synesthesia or out-of-body experiences, and altering time and space perception.

The safe and controlled use of these e-experiences holds the potential to facilitate self-knowledge and self-understanding, foster creative expression, develop new skills, and recognize and learn the value of others.

Example of TED research projects

Although TED is a recent research program, we are building a fast-growing community of researchers, artists and developers to shape the next generation of transformative experiences. Here is a list of recent projects and publications related to TED in different application contexts.

The Emotional Labyrinth

In this project, I teamed up with Sergi Bermudez i Badia and Mónica S. Cameirão from the Madeira Interactive Technologies Institute to create the first example of an emotionally adaptive virtual reality application for mental health. So far, virtual reality applications for wellbeing and therapy have typically been based on pre-designed objects and spaces. In this project, we suggest a different approach, in which the content of a virtual world is procedurally generated at runtime (that is, through algorithmic means) according to the user’s affective responses. To demonstrate the concept, we developed a first prototype in Unity: the “Emotional Labyrinth”. In this VR experience, the user walks through an endless maze whose structure and contents are automatically generated according to four basic emotional states: joy, sadness, anger and fear.

During navigation, affective states are dynamically represented through pictures, music, and animated visual metaphors chosen to represent and induce emotional states.
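The runtime generation described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the Unity implementation of the prototype: the asset names in `CATALOG`, the `Room` fields and the `labyrinth` generator are placeholders assumed for the example. Each new room is drawn from the pictures, music and visual metaphors associated with whatever emotion the `current_emotion` callback reports, so the maze never has to be designed in advance.

```python
import random
from dataclasses import dataclass
from itertools import islice
from typing import Callable, Iterator

# Hypothetical asset catalog: the four basic emotions of the Emotional
# Labyrinth mapped to placeholder pictures, music and animated metaphors.
CATALOG = {
    "joy": {"pictures": ["sunny_meadow", "festival"],
            "music": ["major_upbeat"],
            "metaphors": ["rising_balloons", "blooming_flowers"]},
    "sadness": {"pictures": ["empty_street", "rainy_window"],
                "music": ["minor_slow"],
                "metaphors": ["falling_leaves", "fading_light"]},
    "anger": {"pictures": ["storm", "volcano"],
              "music": ["percussive_fast"],
              "metaphors": ["flickering_fire", "cracking_walls"]},
    "fear": {"pictures": ["dark_forest", "abandoned_house"],
             "music": ["dissonant_drone"],
             "metaphors": ["closing_walls", "moving_shadows"]},
}


@dataclass
class Room:
    emotion: str
    picture: str
    music: str
    metaphor: str
    exits: int  # number of corridors leading onward from this room


def generate_room(emotion: str, rng: random.Random) -> Room:
    """Build one room whose contents represent the given emotion."""
    if emotion not in CATALOG:
        raise ValueError(f"unknown emotion: {emotion!r}")
    assets = CATALOG[emotion]
    return Room(
        emotion=emotion,
        picture=rng.choice(assets["pictures"]),
        music=rng.choice(assets["music"]),
        metaphor=rng.choice(assets["metaphors"]),
        exits=rng.randint(1, 3),
    )


def labyrinth(current_emotion: Callable[[], str], seed: int = 0) -> Iterator[Room]:
    """Endless maze: each step asks for the user's current emotion and
    generates the next room on the fly (hypothetical interface)."""
    rng = random.Random(seed)  # seeded so a session can be replayed
    while True:
        yield generate_room(current_emotion(), rng)


if __name__ == "__main__":
    # Three rooms generated while the user is assumed to be joyful.
    for room in islice(labyrinth(lambda: "joy", seed=1), 3):
        print(room)
```

Using a generator keeps the maze genuinely open-ended: rooms exist only once the user walks into them, which is what lets their content follow the user's state.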

The underlying hypothesis is that exposing users to multimodal representations of their affective states can create a feedback loop that supports emotional self-awareness and fosters more effective emotional regulation strategies. We carried out a first study to (i) assess the effectiveness of the selected metaphors in inducing target emotions, and (ii) identify relevant psycho-physiological markers of the emotional experience generated by the labyrinth. Results showed that the Emotional Labyrinth is overall a pleasant experience, in which the proposed procedural content generation can induce distinctive psycho-physiological patterns, generally coherent with the meaning of the metaphors used in the labyrinth design. Further, the collected psycho-physiological signals (electrocardiography, respiration, electrodermal activity and electromyography) are used to build computational models of users' reported experience. These models will enable the future implementation of a closed-loop mechanism that adapts the Labyrinth procedurally to the user's affective state.
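To make the closed-loop idea concrete, here is a minimal sketch of one way such a model could work, under loudly stated assumptions: the feature names, the nearest-centroid classifier and the `fit`, `predict` and `next_room_emotion` functions are illustrative choices, not the models developed in the study. Features are z-scored with the training statistics so that signals measured in different units contribute comparably, and the predicted state is then used to choose the emotion of the next room.

```python
import math
from statistics import mean, pstdev

# Hypothetical per-window features extracted from the physiological signals.
FEATURES = ("heart_rate_bpm", "resp_rate_bpm", "scl_microsiemens", "emg_rms")


def fit(samples):
    """Train a nearest-centroid model from (feature_vector, reported_emotion) pairs."""
    n = len(FEATURES)
    columns = [[x[i] for x, _ in samples] for i in range(n)]
    mu = [mean(col) for col in columns]
    sd = [pstdev(col) or 1.0 for col in columns]  # guard against zero variance

    def zscore(x):
        return [(x[i] - mu[i]) / sd[i] for i in range(n)]

    groups = {}
    for x, label in samples:
        groups.setdefault(label, []).append(zscore(x))
    # One centroid per reported emotion, in standardized feature space.
    centroids = {
        label: [mean(v[i] for v in vecs) for i in range(n)]
        for label, vecs in groups.items()
    }
    return zscore, centroids


def predict(model, x):
    """Return the emotion whose centroid is closest to the standardized features."""
    zscore, centroids = model
    z = zscore(x)
    return min(centroids, key=lambda label: math.dist(z, centroids[label]))


def next_room_emotion(model, latest_features):
    """Closed-loop step (assumed policy): mirror the estimated state in the next room."""
    return predict(model, latest_features)
```

A real system would need richer features, per-user calibration and a validated classifier; the point here is only the loop itself: signals in, estimated state out, next room adapted.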

Awe in Virtual Reality

Awe is a compelling emotional experience with philosophical roots in the domain of aesthetics and religious or spiritual experiences. Both Edmund Burke’s (1759/1970) and Immanuel Kant’s (1764/2007) analyses of the sublime, as a compelling experience that transcends the perception of beauty toward something more profound, are couched in terms that seem synonymous with the modern understanding of awe.

The contemporary psychological understanding of awe comes largely from a foundational article written by Keltner and Haidt (2003). According to their conceptualization, awe experiences encompass two key appraisals: the perception of vastness and the need to mentally attempt to accommodate this vastness into existing mental schemas.

Crucially, research has shown that experiencing awe is associated with positive transformative changes at both psychological and physical levels (e.g., Shiota et al., 2007; Schneider, 2009; Stellar et al., 2015). For example, awe can change our perspective even toward strangers, increasing our generosity toward them (Piff et al., 2015; Prade and Saroglou, 2016) and reducing aggressive behaviors (Yang et al., 2016). More generally, awe broadens our attentional focus (Sung and Yih, 2015) and extends time perception (Rudd et al., 2012). Furthermore, this emotion has been linked to lower levels of inflammation, a risk factor for chronic and cardiovascular diseases, and to greater life satisfaction (Krause and Hayward, 2015; Stellar et al., 2015).

Considering the transformative potential of awe, my doctoral student Alice Chirico and I focused on how to elicit intense feelings of this complex emotion using virtual reality. To this end, we modeled three immersive virtual environments (a forest of tall trees, a mountain range, and a view of the Earth from deep space) designed to induce a feeling of perceptual vastness. As hypothesized, the three target environments induced significantly greater awe than a "neutral" virtual environment (a park consisting of a green clearing with very few trees and some flowers). Full details of this study are reported here.

In another study, we examined the potential of VR-induced awe to foster creativity. To this end, we exposed participants both to an awe-inducing 3D video and to a neutral one in a within-subject design. After each stimulation condition, participants reported the intensity and type of perceived emotion and completed two verbal tasks of the Torrance Tests of Creative Thinking (TTCT; Torrance, 1974), a standardized test of creative performance. Results showed that, compared to the neutral stimulus, awe positively affected key creativity components (fluency, flexibility and elaboration, as measured by the TTCT subtests), suggesting that (i) awe has the potential to boost creativity, and (ii) VR is a powerful awe-inducing medium that can be used in application contexts (e.g., educational and clinical) where this emotion can make a difference.
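Because every participant saw both videos, the natural unit of analysis in a within-subject design is the per-participant difference between conditions. The sketch below computes a paired t statistic and the within-subject effect size d_z for one TTCT score. It is an assumed, generic analysis offered for illustration; the statistical procedures actually used in the study are not described here, and the function name `paired_t` is hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t(awe_scores, neutral_scores):
    """Hypothetical helper: paired t statistic, degrees of freedom and
    Cohen's d_z for scores from the same participants in two conditions."""
    if len(awe_scores) != len(neutral_scores):
        raise ValueError("each participant needs a score in both conditions")
    if len(awe_scores) < 2:
        raise ValueError("at least two participants are required")
    # Work on per-participant differences, which removes between-person variability.
    diffs = [a - n for a, n in zip(awe_scores, neutral_scores)]
    sd_diff = stdev(diffs)  # sample standard deviation of the differences
    if sd_diff == 0:
        raise ValueError("differences have zero variance")
    mean_diff = mean(diffs)
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    d_z = mean_diff / sd_diff
    return t, len(diffs) - 1, d_z
```

A p-value would then come from the t distribution with the returned degrees of freedom; `scipy.stats.ttest_rel` performs the same paired test if SciPy is available. In practice the order of the two videos should also be counterbalanced across participants.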

Graphical 3D environments are not the only way to induce awe, however: in another study, we showed that 360° videos depicting vast natural scenarios are also powerful stimuli for eliciting intense feelings of this complex emotion.

Immersive storytelling for psychotherapy and mental wellbeing

Growing research evidence indicates that VR can be effectively integrated into psychotherapy to treat a number of clinical conditions, including anxiety disorders, pain disorders and PTSD. In this context, VR is mostly used as a simulation tool for controlled exposure to critical or feared situations. The possibility of presenting realistic, controlled stimuli while simultaneously monitoring the user's responses offers a considerable advantage over real experiences.

However, the most interesting potential of VR lies in its capacity to create compelling immersive storytelling experiences. As recently noted by Brenda Wiederhold:

Virtual training simulations, documentaries, and experiences will, however, only be as effective as the emotions they spark in the viewer. To reach that point, the VR industry is faced with two obstacles: creating content that is enjoyable and engaging, and encouraging adoption of the medium among consumers. Perhaps the key to both problems is the recognition that users are not passive consumers of VR content. Rather, they bring their own thoughts, needs, and emotions to the worlds they inhabit. Successful stories challenge those conceptions, invite users to engage with the material, and recognize the power of untethering users from their physical world and throwing them into another. That isn’t just the power of VR—it’s the power of storytelling as a whole.

Thus, VR-based narratives can be used to generate an infinite number of “possible selves”, by providing a person with a “subjective window of presence” into unactualized, but possible, worlds.

The emergence of immersive storytelling makes it possible to use VR in mental health on a different rationale from virtual reality-based exposure therapy. In this novel rationale, immersive stories, lived from a first-person perspective, give the patient the opportunity to engage emotionally with metaphoric narratives, eliciting new insights and meaning-making related to the viewer’s personal world view.

To explore this new perspective, I have been collaborating with the Italian startup Become to test the potential of transformative immersive storytelling in mental health and wellbeing. An intriguing aspect of this strategy is that, in contrast with conventional virtual-reality exposure therapy, which is mostly used in combination with Cognitive-Behavioral Therapy interventions, immersive storytelling scenarios can be integrated in any therapeutic model, since all kinds of psychotherapy involve some form of ‘storytelling’.

In this project, we are interested in understanding, for example, whether the integration of immersive stories in the therapeutic setting can enhance the efficacy of the intervention and facilitate patients in expressing their inner thoughts, feelings, and life experiences.

Collaborate!

Are you a researcher, a developer, or an artist interested in collaborating in TED projects? Here is how:

- Drop me an email at: andrea.gaggioli@unicatt.it

- Sign in to ResearchGate and visit the Transformative Experience Design project page

- Have a look at the existing projects and publications to find out which TED research line is more interesting to you.

Key references

[1] Miller, W. R., & C'de Baca, J. (2001). Quantum change: When epiphanies and sudden insights transform ordinary lives. New York: Guilford Press.

[2] Yaden, D. B., Haidt, J., Hood, R. W., Jr., Vago, D. R., & Newberg, A. B. (2017). The varieties of self-transcendent experience. Review of General Psychology, 21(2), 143-160.

[3] Gaggioli, A. (2016). Transformative Experience Design. In A. Gaggioli, A. Ferscha, G. Riva, S. Dunne, & I. Viaud-Delmon (Eds.), Human computer confluence: Transforming human experience through symbiotic technologies (pp. 96–121). Berlin: De Gruyter Open.

Jun 21, 2016

New book on Human Computer Confluence - FREE PDF!

Two pieces of good news for Positive Technology followers:

1) Our new book on Human Computer Confluence is out!

2) It can be downloaded for free here

Human-computer confluence refers to an invisible, implicit, embodied or even implanted interaction between humans and system components. New classes of user interfaces are emerging that make use of several sensors and are able to adapt their physical properties to the current situational context of users.

A key aspect of human-computer confluence is its potential for transforming human experience in the sense of bending, breaking and blending the barriers between the real, the virtual and the augmented, to allow users to experience their body and their world in new ways. Research on Presence, Embodiment and Brain-Computer Interface is already exploring these boundaries and asking questions such as: Can we seamlessly move between the virtual and the real? Can we assimilate fundamentally new senses through confluence?

The aim of this book is to explore the boundaries and intersections of the multidisciplinary field of HCC and discuss its potential applications in different domains, including healthcare, education, training and even arts.

DOWNLOAD THE FULL BOOK HERE AS OPEN ACCESS

Please cite as follows:

Andrea Gaggioli, Alois Ferscha, Giuseppe Riva, Stephen Dunne, Isabell Viaud-Delmon (2016). Human computer confluence: transforming human experience through symbiotic technologies. Warsaw: De Gruyter. ISBN 9783110471120.


May 26, 2016

From User Experience (UX) to Transformative User Experience (T-UX)

In 1999, Joseph Pine and James Gilmore wrote a seminal book titled “The Experience Economy” (Harvard Business School Press, Boston, MA) that theorized the shift from a service-based economy to an experience-based economy.

According to these authors, in the new experience economy the goal of a purchase is no longer to own a product (be it a good or a service), but to use it in order to enjoy a compelling experience. An experience, thus, is a wholly new type of offering: in contrast to commodities, goods and services, it is designed to be as personal and memorable as possible. Just as in a theatrical performance, companies stage meaningful events that engage customers in a memorable and personal way, offering activities that provide engaging and rewarding experiences.

Indeed, looking back at the past ten years, the concept of experience has become more central to several fields, including tourism, architecture and (perhaps most relevant for this column) human-computer interaction, with the rise of “User Experience” (UX).

The concept of UX was introduced by Donald Norman in a 1995 article published in the CHI proceedings (D. Norman, J. Miller, A. Henderson: What You See, Some of What's in the Future, And How We Go About Doing It: HI at Apple Computer. Proceedings of CHI 1995, Denver, Colorado, USA). Norman argued that focusing exclusively on usability attributes (e.g., ease of use, efficiency, effectiveness) when designing an interactive product is not enough; one should take into account the user's whole experience with the system, including their emotional and contextual needs. Since then, the UX concept has assumed increasing importance in HCI. As McCarthy and Wright emphasized in their book “Technology as Experience” (MIT Press, 2004):

“In order to do justice to the wide range of influences that technology has in our lives, we should try to interpret the relationship between people and technology in terms of the felt life and the felt or emotional quality of action and interaction.” (p. 12).

However, according to Pine and Gilmore, experience may not be the last step in what they call the “Progression of Economic Value”. Looking further into the future, they identified the “Transformation Economy” as the likely next phase. In their view, while experiences are essentially memorable events that stimulate the sensory and emotional levels, transformations go much further: they result from a series of experiences staged by companies to guide customers in learning, taking action and eventually achieving their aspirations and goals.

In Pine and Gilmore’s terms, an aspirant is an individual who seeks guidance for personal change (e.g., a better figure, a new career, and so forth), while the provider of this change (a dietitian, a university) is an elicitor. The elicitor guides the aspirant through a series of experiences designed with specific purposes and goals. According to Pine and Gilmore, the main difference between an experience and a transformation is that the latter occurs when an experience is customized:

“When you customize an experience to make it just right for an individual - providing exactly what he needs right now - you cannot help changing that individual. When you customize an experience, you automatically turn it into a transformation, which companies create on top of experiences (recall that phrase: “a life-transforming experience”), just as they create experiences on top of services and so forth” (p. 244).

A further key difference between experiences and transformations concerns their effects: because an experience is inherently personal, no two people can have the same one. Likewise, no individual can undergo the same transformation twice: the second time it’s attempted, the individual would no longer be the same person (pp. 254–255).

But what will be the impact of this upcoming “transformation economy” on how people relate to technology? If in the experience economy the buzzword is “User Experience”, in the next stage the new buzzword might be “User Transformation”.

Indeed, we can see some initial signs of this shift. For example, Fitbit and similar self-tracking gadgets are starting to offer personalized advice to foster enduring changes in users’ lifestyles; another example comes from ambient intelligence and domotics, where there is an increasing focus on designing systems that learn from the user’s behavior (e.g., by tracking the movements of an elderly person at home) to provide context-aware adaptive services (e.g., sending an alert when the user is at risk of falling).

Probably, though, the most important ICT step toward the transformation economy could come with the introduction of next-generation immersive virtual reality systems. Because these new systems are based on mobile devices (an example is the recent partnership between Oculus and Samsung), they can deliver VR experiences that incorporate information on the user's external and internal context (e.g., time, location, temperature, mood) by using the sensors embedded in the mobile phone.

By personalizing the immersive experience with context-based information, it might be possible to induce higher levels of involvement and presence in the virtual environment. In the case of cyber-therapeutic applications, this could translate into more effective, transformative virtual healing experiences.
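A minimal, rule-based sketch of such context-based personalization follows. Everything in it is assumed for illustration: the `Context` fields stand in for values a phone could supply (its clock, a temperature reading, a self-reported mood), the `personalize` function, thresholds and scene names are arbitrary, and location is left out for brevity. The idea is simply that the virtual scene mirrors the user's real situation.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """Hypothetical snapshot of phone-sensor and self-report data."""
    hour: int              # local time of day, 0-23
    temperature_c: float   # outdoor temperature in degrees Celsius
    mood: str              # self-reported, e.g. "calm", "stressed", "sad"


@dataclass
class SceneSettings:
    sky: str
    weather: str
    soundtrack: str


# Assumed mapping from mood to soundtrack; unlisted moods get a neutral track.
MOOD_SOUNDTRACKS = {"stressed": "slow_ambient", "sad": "warm_uplifting"}


def personalize(ctx: Context) -> SceneSettings:
    """Derive illustrative scene parameters that mirror the user's context."""
    if not 0 <= ctx.hour <= 23:
        raise ValueError("hour must be in 0-23")
    # Match the virtual sky to the real time of day.
    sky = "daylight" if 7 <= ctx.hour < 19 else "night"
    # Match the virtual weather to the real temperature.
    if ctx.temperature_c < 0:
        weather = "snow"
    elif ctx.temperature_c < 25:
        weather = "mild"
    else:
        weather = "hot_sun"
    soundtrack = MOOD_SOUNDTRACKS.get(ctx.mood, "neutral_ambient")
    return SceneSettings(sky=sky, weather=weather, soundtrack=soundtrack)
```

Keeping the rules in one pure function makes it easy to test which context produces which scene before any of it touches a headset.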

Furthermore, the emergence of "symbiotic technologies", such as neuroprosthetic devices and neuro-biofeedback, is enabling a direct connection between the computer and the brain. Increasingly, these neural interfaces are moving from the biomedical domain to become consumer products. But unlike existing digital experiential products, symbiotic technologies have the potential to transform basic human experiences more radically.

Brain-computer interfaces, immersive virtual reality and augmented reality and their various combinations will allow users to create “personalized alterations” of experience. Just as nowadays we can download and install a number of “plug-ins”, i.e. apps to personalize our experience with hardware and software products, so very soon we may download and install new “extensions of the self”, or “experiential plug-ins” which will provide us with a number of options for altering/replacing/simulating our sensorial, emotional and cognitive processes.

Such mediated recombinations of human experience will result from the application of existing neurotechnologies in completely new domains. Although virtual reality and brain-computer interfaces were originally developed for specific domains (e.g., military simulations, neurorehabilitation), today their use has extended to other fields, ranging from entertainment to education.

In evolutionary biology, Stephen Jay Gould and Elizabeth Vrba (Paleobiology, 8, 4-15, 1982) defined “exaptation” as the process by which a feature takes on a function for which it was not originally shaped by natural selection. Likewise, the exaptation of neurotechnologies to the digital consumer market may lead to the rise of a novel “neuro-experience economy”, in which technology-mediated transformation of experience is the main product.

Just as a Genetically Modified Organism (GMO) is an organism whose genetic material has been altered using genetic-engineering techniques, we could define a Technologically-Modified Experience (TME) as a re-engineered experience resulting from the artificial manipulation of the neurobiological bases of sensory, affective and cognitive processes.

Clearly, the emergence of the transformative neuro-experience economy will not happen in weeks or months but rather in years. It will take some time before people find brain-computer devices on the shelves of electronics stores: most of these tools are still in the pre-commercial phase at best, and some are found only in laboratories.

Nevertheless, the mere possibility that such a scenario will sooner or later come to pass raises important questions that should be addressed before symbiotic technologies enter our lives: does technological alteration of human experience threaten the autonomy of individuals, or the authenticity of their lives? How can we help individuals decide which transformations are good or bad for them?

Addressing these questions will require the collaboration of many disciplines, including philosophy, computer ethics and, of course, cyberpsychology.

May 24, 2016

SignAloud: Gloves that Transliterate Sign Language into Text and Speech


Oct 12, 2014

New Scientist on new virtual reality headset Oculus Rift

From New Scientist

The latest prototype of virtual reality headset Oculus Rift allows you to step right into the movies you watch and the games you play

An old man sits across the fire from me, telling a story. An inky dome of star-flecked sky arcs overhead as his words mingle with the crackling of the flames. I am entranced.

This isn't really happening, but it feels as if it is. This is a program called Storyteller – Fireside Tales that runs on the latest version of the Oculus Rift headset, unveiled last month. The audiobook software harnesses the headset's virtual reality capabilities to deepen immersion in the story.

Fireside Tales is just one example of a new kind of entertainment that delivers convincing true-to-life experiences. Soon films will get a similar treatment.

Movie company 8i, based in Wellington, New Zealand, plans to make films specifically for Oculus Rift. These will be more immersive than just mimicking a real screen in virtual reality because viewers will be able to step inside and explore the movie while they are watching it.

"We are able to synthesise photorealistic views in real-time from positions and directions that were not directly captured," says Eugene d'Eon, chief scientist at 8i. "[Viewers] can not only look around a recorded experience, but also walk or fly. You can re-watch something you love from many different perspectives."

The latest generation of games for Oculus Rift is more innovative, too. Black Hat Oculus is a two-player, cooperative game designed by Mark Sullivan and Adalberto Garza, both graduates of MIT's Game Lab. One headset is for the spy, sneaking through guarded buildings on missions where detection means death. The other player is the overseer, with a God-like view of the world, warning the spy of hidden traps, guards and passageways.

Deep immersion is now possible because the latest Oculus Rift prototype – known as Crescent Bay – finally delivers full positional tracking. This means the images that you see in the headset move in sync with your own movements.
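What positional tracking adds can be shown with the view transform a renderer applies every frame. The sketch below uses plain Python, a yaw-only rotation for brevity and a hypothetical function name, `world_to_view`; it expresses a world point in head coordinates (x right, y up, z forward) from the tracked head position and orientation. With rotation-only tracking the head position is effectively frozen, so leaning or stepping toward an object leaves its apparent distance unchanged; with full positional tracking the same movement brings it closer, which is what keeps the images in sync with the body.

```python
import math


def world_to_view(point, head_pos, yaw_rad):
    """Express a world-space point in head coordinates (x right, y up, z forward).

    Yaw is a rotation about the vertical y-axis; yaw = 0 means the head faces
    +z, and positive yaw turns it toward +x. Pitch and roll are omitted.
    """
    # Translate: position of the point relative to the tracked head.
    dx = point[0] - head_pos[0]
    dy = point[1] - head_pos[1]
    dz = point[2] - head_pos[2]
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    # Project onto the head's right (c, 0, -s) and forward (s, 0, c) axes.
    x = c * dx - s * dz
    z = s * dx + c * dz
    return (x, dy, z)


if __name__ == "__main__":
    target = (0.0, 1.6, 2.0)
    # Rotation-only tracking: head position stays fixed, so a step has no effect.
    print(world_to_view(target, (0.0, 1.6, 0.0), 0.0))
    # Positional tracking: after a 1 m step forward the target is 1 m closer.
    print(world_to_view(target, (0.0, 1.6, 1.0), 0.0))
```

A real headset pipeline works with full 4x4 matrices or quaternions for all three rotation axes; the yaw-only version just keeps the translation-then-rotation structure visible.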

This is the key to unlocking the potential of virtual reality, says Hannes Kaufmann at the Technical University of Vienna in Austria. The headset's high-definition display and wraparound field of view are nice additions, he says, but they aren't essential.

The next step, says Kaufmann, is to allow people to see their own virtual limbs, not just empty space, where their brain expects them to be. That's why Beijing-based motion capture company Perception, which raised more than $500,000 on Kickstarter in September, is working on a full-body suit that estimates body pose and gives haptic feedback (a sense of touch) to the wearer. Software like Fireside Tales will then be able to take your body position into account.

In the future, humans will be able to direct live virtual experiences themselves, says Kaufmann. "Imagine you're meeting an alien in the virtual reality, and you want to shake hands. You could have a real person go in there and shake hands with you, but for you only the alien is present."

Oculus, which was bought by Facebook in July for $2 billion, has not yet announced when the headset will be available to buy.

This article appeared in print under the headline "Deep and meaningful"


Oct 06, 2014

Bionic Vision Australia’s Bionic Eye Gives New Sight to People Blinded by Retinitis Pigmentosa

Via Medgadget

Bionic Vision Australia, a collaboration of researchers working on a bionic eye, has announced that its prototype implant has completed a two-year trial in patients with advanced retinitis pigmentosa. Three patients with profound vision loss received 24-channel suprachoroidal electrode implants that caused no noticeable serious side effects. Moreover, though this was not formally part of the study, the patients were able to see more light and to distinguish shapes that had been invisible to them before implantation. The newly gained vision improved how they navigated around objects and how well they could spot items on a tabletop.

The next step is to try out the latest 44-channel device in a clinical trial slated for next year and then move on to a 98-channel system that is currently in development.

“This study is critically important to the continuation of our research efforts and the results exceeded all our expectations,” Professor Mark Hargreaves, Chair of the BVA board, said in a statement. “We have demonstrated clearly that our suprachoroidal implants are safe to insert surgically and cause no adverse events once in place. Significantly, we have also been able to observe that our device prototype was able to evoke meaningful visual perception in patients with profound visual loss.”


First direct brain-to-brain communication between human subjects

Via KurzweilAI.net

An international team of neuroscientists and robotics engineers have demonstrated the first direct remote brain-to-brain communication between two humans located 5,000 miles away from each other and communicating via the Internet, as reported in a paper recently published in PLOS ONE (open access).

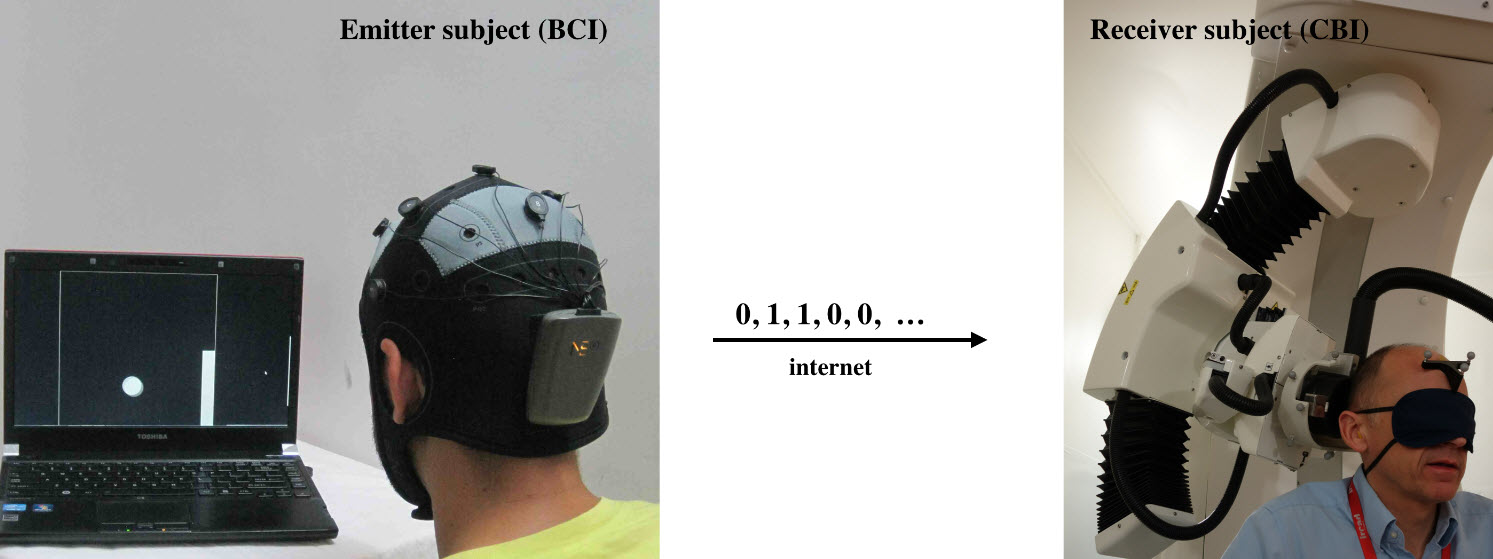

Emitter and receiver subjects with non-invasive devices supporting, respectively, a brain-computer interface (BCI), based on EEG changes, driven by motor imagery (left) and a computer-brain interface (CBI) based on the reception of phosphenes elicited by neuro-navigated TMS (right) (credit: Carles Grau et al./PLoS ONE)

In India, researchers encoded two words (“hola” and “ciao”) as binary strings and presented them as a series of cues on a computer monitor. They recorded the subject’s EEG signals as the subject was instructed to think about moving his feet (binary 0) or hands (binary 1). They then sent the recorded series of binary values in an email message to researchers in France, 5,000 miles away.

There, the binary strings were converted into a series of transcranial magnetic stimulation (TMS) pulses applied to a hotspot location in the right visual occipital cortex that either produced a phosphene (perceived flash of light) or not.
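The encode/transmit/decode chain described above can be sketched as follows. This is an illustrative toy, not the study's protocol: it assumes 8-bit ASCII for the words (the encoding actually used in the experiment may differ), and it assumes that bit 1 is delivered as a phosphene-producing pulse and bit 0 as one that produces none, since the text does not say which value maps to which percept. The channel is treated as error-free. The feet/hands cues follow the description above; the function names are hypothetical.

```python
# Motor-imagery cue per bit, as described: feet = 0, hands = 1.
CUE = {"0": "feet", "1": "hands"}
# Assumed mapping: bit 1 -> TMS pulse evoking a phosphene, bit 0 -> no phosphene.
PHOSPHENE = {"0": False, "1": True}


def encode(word: str) -> str:
    """Turn an ASCII word into a bit string, 8 bits per character."""
    return "".join(format(byte, "08b") for byte in word.encode("ascii"))


def to_cues(bits: str) -> list:
    """Emitter side: the motor-imagery task shown for each bit."""
    return [CUE[b] for b in bits]


def to_stimuli(bits: str) -> list:
    """Receiver side: whether each TMS pulse should evoke a phosphene."""
    return [PHOSPHENE[b] for b in bits]


def decode_percepts(percepts: list) -> str:
    """Rebuild the word from the receiver's reports of seeing (True) or not."""
    bits = "".join("1" if seen else "0" for seen in percepts)
    if len(bits) % 8:
        raise ValueError("bit count must be a multiple of 8")
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8)).decode("ascii")


if __name__ == "__main__":
    for word in ("hola", "ciao"):
        bits = encode(word)
        print(word, bits, decode_percepts(to_stimuli(bits)))
```

In the real experiment each bit is noisy at both ends (motor-imagery classification and phosphene perception), which is why transmission rates were modest; an error-correcting or redundant code would be the natural next refinement.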

“We wanted to find out if one could communicate directly between two people by reading out the brain activity from one person and injecting brain activity into the second person, and do so across great physical distances by leveraging existing communication pathways,” explains coauthor Alvaro Pascual-Leone, MD, PhD, Director of the Berenson-Allen Center for Noninvasive Brain Stimulation at Beth Israel Deaconess Medical Center (BIDMC) and Professor of Neurology at Harvard Medical School.

A team of researchers from Starlab Barcelona, Spain and Axilum Robotics, Strasbourg, France conducted the experiment. A second similar experiment was conducted between individuals in Spain and France.

“We believe these experiments represent an important first step in exploring the feasibility of complementing or bypassing traditional language-based or other motor/PNS mediated means in interpersonal communication,” the researchers say in the paper.

“Although certainly limited in nature (e.g., the bit rates achieved in our experiments were modest even by current BCI (brain-computer interface) standards, mostly due to the dynamics of the precise CBI (computer-brain interface) implementation), these initial results suggest new research directions, including the non-invasive direct transmission of emotions and feelings or the possibility of sense synthesis in humans, that is, the direct interface of arbitrary sensors with the human brain using brain stimulation, as previously demonstrated in animals with invasive methods.

Brain-to-brain (B2B) communication system overview. On the left, the BCI subsystem is shown schematically, including electrodes over the motor cortex and the EEG amplifier/transmitter wireless box in the cap. Motor imagery of the feet codes the bit value 0, of the hands codes bit value 1. On the right, the CBI system is illustrated, highlighting the role of coil orientation for encoding the two bit values. Communication between the BCI and CBI components is mediated by the Internet. (Credit: Carles Grau et al./PLoS ONE)

“The proposed technology could be extended to support a bi-directional dialogue between two or more mind/brains (namely, by the integration of EEG and TMS systems in each subject). In addition, we speculate that future research could explore the use of closed mind-loops in which information associated to voluntary activity from a brain area or network is captured and, after adequate external processing, used to control other brain elements in the same subject. This approach could lead to conscious synthetically mediated modulation of phenomena best detected subjectively by the subject, including emotions, pain and psychotic, depressive or obsessive-compulsive thoughts.

“Finally, we anticipate that computers in the not-so-distant future will interact directly with the human brain in a fluent manner, supporting both computer- and brain-to-brain communication routinely. The widespread use of human brain-to-brain technologically mediated communication will create novel possibilities for human interrelation with broad social implications that will require new ethical and legislative responses.”

This work was partly supported by the EU FP7 FET Open HIVE project, the Starlab Kolmogorov project, and the Neurology Department of the Hospital de Bellvitge.

Google Glass can now display captions for hard-of-hearing users

Georgia Institute of Technology researchers have created a speech-to-text Android app for Google Glass that displays captions for hard-of-hearing persons when someone is talking to them in person.

“This system allows wearers like me to focus on the speaker’s lips and facial gestures,” said School of Interactive Computing Professor Jim Foley.

“If hard-of-hearing people understand the speech, the conversation can continue immediately without waiting for the caption. However, if I miss a word, I can glance at the transcription, get the word or two I need and get back into the conversation.”

Captioning on Glass displays captions for the hard-of-hearing (credit: Georgia Tech)

The “Captioning on Glass” app is now available to install from MyGlass.

Foley and the students are working with the Association of Late Deafened Adults in Atlanta to improve the program. An iPhone app is planned.

Sep 21, 2014

First hands-on: Crescent Bay demo

I just tested the Oculus Crescent Bay prototype at the Oculus Connect event in LA.

I still can't close my mouth.

The demo lasted about 10 minutes, during which several scenes were presented. The resolution and frame rate are astounding, and you can turn completely around. This is the first time in my life I can truly say I was there.

I believe this is really the beginning of a new era for VR, and I am sure I won't sleep tonight thinking about the infinite possibilities and applications of this technology. And I don't think I am exaggerating; if anything, I am underestimating.

04:50 Posted in Telepresence & virtual presence, Virtual worlds, Wearable & mobile

Jul 29, 2014

Will city-dwellers actually use a no-cellphones lane on the sidewalk?

Via Textually.org

Sidewalk collisions involving pedestrians engrossed in their electronic devices have become an irritating (and sometimes dangerous) fact of city life. To prevent them, what about just creating a no-cellphones lane on the sidewalk? Would people follow the signs? That’s what a TV crew decided to find out on a Washington, D.C., street Thursday, as part of a behavioral science experiment for a new National Geographic TV series. [via Quartz]

As expected, some pedestrians ignored the chalk markings designating a no-cellphones lane and a lane that warned pedestrians to “walk at your own risk.” Others didn’t even see them because they were too busy staring at their phones. But others stopped, took pictures and posted them, from their phones, of course.

22:51 Posted in Wearable & mobile

Combining Google Glass with consumer-oriented BCI headsets

MindRDR connects Google Glass with a device that monitors brain activity, allowing users to take pictures and share them on Twitter or Facebook.

Once a user has decided to share an image, we analyse their brain data and provide an evaluation of their ability to control the interface with their mind. This information is attached to every shared image.

The current version of MindRDR uses the commercially available Neurosky MindWave Mobile brain monitor to extract core metrics from the mind.

22:38 Posted in Neurotechnology & neuroinformatics, Wearable & mobile

Ekso bionic suit

Ekso is an exoskeleton bionic suit, or "wearable robot", designed to enable individuals with lower-extremity paralysis to stand up and walk over ground with a weight-bearing, four-point reciprocal gait. Walking is achieved by the user’s forward lateral weight shift to initiate a step. Battery-powered motors drive the legs and replace neuromuscular function.

Ekso Bionics http://eksobionics.com/

14:59 Posted in AI & robotics, Cybertherapy, Wearable & mobile

Jul 09, 2014

Experiential Virtual Scenarios With Real-Time Monitoring (Interreality) for the Management of Psychological Stress: A Block Randomized Controlled Trial

The recent convergence between technology and medicine is offering innovative methods and tools for behavioral health care. Among these, an emerging approach is the use of virtual reality (VR) within exposure-based protocols for anxiety disorders, and in particular posttraumatic stress disorder. However, no systematically tested VR protocols are available for the management of psychological stress. Our goal was to evaluate the efficacy of a new technological paradigm, Interreality, for the management and prevention of psychological stress. The main feature of Interreality is a twofold link between the virtual and the real world, achieved through experiential virtual scenarios (fully controlled by the therapist, used to learn coping skills and improve self-efficacy) combined with real-time monitoring and support (identifying critical situations and assessing clinical change) using advanced technologies (virtual worlds, wearable biosensors, and smartphones).

Full text paper available at: http://www.jmir.org/2014/7/e167/

Jun 30, 2014

Never do a Tango with an Eskimo

Apr 29, 2014

Evidence, Enactment, Engagement: The three 'nEEEds' of mental mHealth

In my experience, citizens and public stakeholders are not well informed or educated about mHealth. For example, to many people the idea of using phones to deliver mental health programs still sounds weird.

Yet the number of mental health apps is rapidly growing: a recent survey identified 200 unique mobile tools specifically associated with behavioral health.

These applications now cover a wide array of clinical areas including developmental disorders, cognitive disorders, substance-related disorders, as well as psychotic and mood disorders.

I think that the increasing "applification" of mental health is explained by three potential benefits of this approach:

- First, mobile apps can be integrated into different stages of treatment: from promoting awareness of the disease, to increasing treatment compliance, to preventing relapse.

- Furthermore, mobile tools can be used to monitor behavioural and psychological symptoms in everyday life: self-reported data can be complemented with readings from inbuilt or wearable sensors to fine-tune treatment according to the individual patient’s needs.

- Last but not least, mobile applications can help patients stay on top of current research, facilitating access to evidence-based care. For example, in the EC-funded INTERSTRESS project, we investigated this potential in the assessment and management of psychological stress by developing several mobile applications (including the award-winning Positive Technology app) that help people monitor stress levels “on the go” and learn new relaxation skills.

In short, I believe that mental mHealth has the potential to provide the right care, at the right time, at the right place. However, from my personal experience I have identified three key challenges that must be faced in order to realize the potential of this approach.

I call them the three "nEEEds" of mental mHealth: evidence, engagement, enactment.

- Evidence refers to the need for clinical proof of efficacy or effectiveness, provided through randomised trials.

- Engagement relates to the need to ensure the usability and accessibility of mobile interfaces: this goes beyond reducing use errors that may cause psychological discomfort for the patient, to include creating a compelling and engaging user experience.

- Finally, enactment concerns the need for competent authorities to enact appropriate regulations that keep pace with mHealth technology development.

As a beneficiary of EC-funded grants myself, I recognize that the EC's R&D investments in mHealth across FP6 and FP7 have helped position Europe at the forefront of this revolution. And the return on this investment could be strong: it has been predicted that full exploitation of mHealth solutions could lead to savings of nearly 100 billion EUR in total annual EU healthcare spending in 2017.

I believe that a progressively larger portion of these savings may come from the adoption of mobile solutions in the mental health sector: indeed, in the WHO European Region, mental ill health accounts for almost 20% of the burden of disease.

For this prediction to be fulfilled, however, many barriers must be overcome: the three "nEEEds" of mental mHealth are probably only the start of the list. Hopefully, the Green Paper consultation will help to identify further opportunities and concerns facing mental mHealth, in order to ensure a successful implementation of this approach.

23:51 Posted in Cybertherapy, Physiological Computing, Wearable & mobile

Apr 15, 2014

Android Wear

Via KurzweilAI.net

Google has announced Android Wear, a project that extends Android to wearables, starting with two watches, both due out this summer: Motorola’s Moto 360 and LG’s G Watch.

Android Wear will show you info from the wide variety of Android apps, such as messages, social apps, chats, notifications, health and fitness, music playlists, and videos.

It will also enable Google Now functions: say “OK, Google” to check flight times, send a text, see the weather, view email, get directions and travel times, make a reservation, and so on.

Google says it’s working with several other consumer-electronics manufacturers, including Asus, HTC, and Samsung; chip makers Broadcom, Imagination, Intel, Mediatek and Qualcomm; and fashion brands like the Fossil Group to offer watches powered by Android Wear later this year.

If you’re a developer, there’s a new section on developer.android.com/wear focused on wearables. Starting today, you can download a Developer Preview so you can tailor your existing app notifications for watches powered by Android Wear.

23:07 Posted in Wearable & mobile

Avegant - Glyph Kickstarter - Wearable Retinal Display

Via Mashable

Move over Google Glass and Oculus Rift, there's a new kid on the block: Glyph, a mobile, personal theater.

Glyph looks like a normal headset and operates like one, too. That is, until you move the headband down over your eyes and it becomes a fully functional visor that displays movies, television shows, video games or any other media connected via the attached HDMI cable.

Using Virtual Retinal Display (VRD), a technology that mimics the way we see light, the Glyph projects images directly onto your retina using one million micromirrors in each eyepiece. These micromirrors reflect the images onto the retina, reportedly producing crisp and vivid images.

22:56 Posted in Future interfaces, Telepresence & virtual presence, Virtual worlds, Wearable & mobile

Apr 06, 2014

Stick-on electronic patches for health monitoring

Researchers at John A. Rogers’ lab at the University of Illinois, Urbana-Champaign have incorporated off-the-shelf chips into flexible electronic patches that allow high-quality ECG and EEG monitoring.


23:45 Posted in Physiological Computing, Research tools, Self-Tracking, Wearable & mobile

Mar 02, 2014

Myoelectric controlled avatar helps stop phantom limb pain

Reblogged from Medgadget

People unfortunate enough to lose an arm or a leg often feel pain in their missing limb, an unexplained condition known as phantom limb pain. Researchers at Chalmers University of Technology in Sweden decided to test whether they could fool the brain into believing the limb is still there, and maybe stop the pain.

They attached electrodes to the skin of the remaining part of the amputee's arm to read the myoelectric signals from the muscles beneath. The arm was also tracked in 3D using a marker, so that the data could drive a computer-generated avatar as well as computer games. The amputee moves the avatar's arm as he would move his own if it still existed, while his brain becomes reacquainted with the limb's presence. After repeated sessions, including video games controlled through the same myoelectric interface, the patient in the study experienced a significant reduction in pain after decades of phantom limb pain.
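As a generic illustration of what myoelectric pattern recognition usually involves (explicitly not the Chalmers team's method, which the post does not detail), the Python sketch below reduces each window of EMG samples to one mean-absolute-value (MAV) feature per electrode and assigns the window to the nearest movement centroid learned from calibration data. The movement labels, the two-electrode setup, and all numbers are hypothetical.

```python
# Generic illustration of myoelectric pattern recognition, NOT the Chalmers
# team's algorithm: one mean-absolute-value (MAV) feature per electrode channel,
# classified with a nearest-centroid rule. Labels and data are hypothetical.
import math
from typing import Dict, List

Window = List[List[float]]  # one list of raw EMG samples per electrode channel


def mav(samples: List[float]) -> float:
    """Mean absolute value of one channel over a window: a simple muscle-activity measure."""
    return sum(abs(x) for x in samples) / len(samples)


def features(window: Window) -> List[float]:
    """Feature vector for a window: one MAV value per channel."""
    return [mav(channel) for channel in window]


def train_centroids(examples: Dict[str, List[List[float]]]) -> Dict[str, List[float]]:
    """Average the labelled feature vectors of each movement into a centroid."""
    centroids = {}
    for movement, vectors in examples.items():
        n_features = len(vectors[0])
        centroids[movement] = [
            sum(v[i] for v in vectors) / len(vectors) for i in range(n_features)
        ]
    return centroids


def classify(centroids: Dict[str, List[float]], feature_vector: List[float]) -> str:
    """Predict the movement whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda m: math.dist(centroids[m], feature_vector))


if __name__ == "__main__":
    # Hypothetical calibration feature vectors from two electrodes.
    training = {
        "open hand": [[0.9, 0.1], [0.8, 0.2]],
        "close hand": [[0.1, 0.9], [0.2, 0.8]],
    }
    centroids = train_centroids(training)
    window = [[0.7, -0.9, 0.8], [0.1, -0.1, 0.05]]  # raw samples, one row per electrode
    print(classify(centroids, features(window)))  # prints "open hand"
```

In a setup like the one described, the predicted label would then drive the matching movement of the avatar's arm; real systems often extract several features per channel and use more robust classifiers.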


Study in Frontiers in Neuroscience: Treatment of phantom limb pain (PLP) based on augmented reality and gaming controlled by myoelectric pattern recognition: a case study of a chronic PLP patient…

22:35 Posted in Cybertherapy, Wearable & mobile

Feb 11, 2014

Neurocam

Via KurzweilAI.net

Keio University scientists have developed a “neurocam” — a wearable camera system that detects emotions, based on an analysis of the user’s brainwaves.

The hardware is a combination of Neurosky’s MindWave Mobile and a customized brainwave sensor.

The algorithm is based on measures of “interest” and “like” developed by Professor Mitsukura and the neurowear team.

The user’s interest is quantified on a scale from 0 to 100. When the interest value exceeds 60, the camera automatically records a five-second clip of the scene, tagged with a timestamp and location; clips can be replayed later and shared on Facebook.
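The trigger rule just described is simple enough to sketch. The Python below is a hypothetical illustration, not neurowear's code: it scans time-ordered (timestamp, interest, location) samples and plans a five-second clip whenever interest rises above 60. The sample format, the (latitude, longitude) location tuple, and the rule that a new clip cannot start while another is still recording are all assumptions.

```python
# Hypothetical sketch of the neurocam recording trigger described above.
# From the post: interest is scored 0-100, and a five-second clip is recorded,
# with timestamp and location, when interest exceeds 60.
# ASSUMPTION (not stated in the post): a new clip cannot start while one is recording.
from dataclasses import dataclass
from typing import Iterable, List, Tuple

INTEREST_THRESHOLD = 60
CLIP_SECONDS = 5.0


@dataclass
class Clip:
    start: float                   # timestamp in seconds when recording starts
    end: float                     # start + CLIP_SECONDS
    location: Tuple[float, float]  # hypothetical (latitude, longitude) tag


def plan_clips(samples: Iterable[Tuple[float, float, Tuple[float, float]]]) -> List[Clip]:
    """samples: (timestamp_seconds, interest_0_to_100, location), in time order."""
    clips: List[Clip] = []
    busy_until = float("-inf")
    for t, interest, location in samples:
        if not 0 <= interest <= 100:
            raise ValueError(f"interest out of range: {interest}")
        # Strictly above the threshold, and only while the camera is idle.
        if interest > INTEREST_THRESHOLD and t >= busy_until:
            clips.append(Clip(t, t + CLIP_SECONDS, location))
            busy_until = t + CLIP_SECONDS
    return clips


if __name__ == "__main__":
    here = (35.55, 139.65)  # hypothetical coordinates
    stream = [(0.0, 40, here), (1.0, 65, here), (2.0, 80, here), (6.0, 61, here), (7.0, 60, here)]
    for clip in plan_clips(stream):
        print(clip.start, clip.end)  # prints "1.0 6.0", then "6.0 11.0"
```

In this example the spike at 2.0 s is ignored because a clip is already recording, and the reading of exactly 60 at 7.0 s does not trigger, since the post says the value must exceed 60.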

The researchers plan to make the device smaller, more comfortable, and more fashionable to wear.

19:31 Posted in Emotional computing, Neurotechnology & neuroinformatics, Wearable & mobile