Feb 02, 2014

A low-cost sonification system to assist the blind

Via KurzweilAI.net

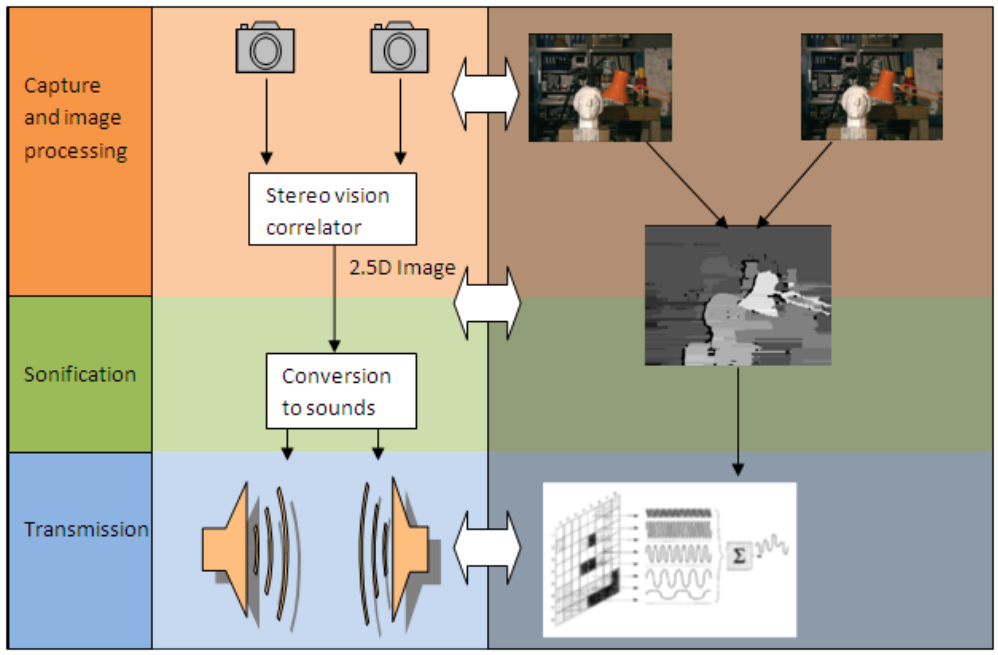

An improved assistive technology system for the blind that uses sonification (visualization using sounds) has been developed by Universidad Carlos III de Madrid (UC3M) researchers, with the goal of replacing costly, bulky current systems.

Called Assistive Technology for Autonomous Displacement (ATAD), the system includes a stereo vision processor that measures the difference between images captured by two cameras placed slightly apart (to obtain depth data) and calculates the distance to each point in the scene.
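With two rectified cameras, depth follows from disparity through the standard pinhole relation Z = f·B/d. The sketch below is a minimal illustration that assumes an idealized, already-rectified camera pair. The focal length and baseline used in the example are hypothetical, and the source does not describe ATAD's actual processing pipeline.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Distance in metres to a scene point, from its stereo disparity.

    Standard pinhole stereo relation Z = f * B / d, assuming rectified cameras:
    f = focal length in pixels, B = distance between the two cameras in metres,
    d = horizontal shift of the point between left and right images, in pixels.
    """
    if disparity_px <= 0:
        # No measurable shift: the point is effectively at infinity or was not matched.
        return float("inf")
    return focal_px * baseline_m / disparity_px


def depth_map(disparities, focal_px: float, baseline_m: float):
    """Apply the relation to every pixel of a disparity map given as a list of rows."""
    return [[depth_from_disparity(d, focal_px, baseline_m) for d in row] for row in disparities]
```

Take a hypothetical rig with a 700 px focal length and cameras 6 cm apart. A 21 px disparity then corresponds to about 2 m. Halving the disparity doubles the estimated distance, which is why depth resolution degrades for far-away obstacles.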

The system then conveys this information to the user through a sound code that indicates the position of, and distance to, the different obstacles, using a small stereo audio amplifier and bone-conduction headphones.
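The article does not specify ATAD's sound code. One plausible way to encode an obstacle in stereo sound is shown below: the obstacle's direction sets the left/right balance (constant-power panning), and its distance sets how fast a tone repeats. This is a hypothetical sketch, and the 5 m range and 1 to 10 beeps per second span are assumed values.

```python
import math


def obstacle_to_sound(azimuth_deg: float, distance_m: float, max_range_m: float = 5.0):
    """Map one obstacle to (left_gain, right_gain, beeps_per_second).

    Hypothetical encoding, not ATAD's published sound code:
    - azimuth_deg in [-90, 90] (negative = left) sets the stereo balance with
      constant-power panning (left**2 + right**2 == 1);
    - nearer obstacles beep faster, rising towards 10 beeps/s at 0 m and
      falling towards 1 beep/s near max_range_m; beyond that range, silence.
    """
    if distance_m >= max_range_m:
        return 0.0, 0.0, 0.0
    pan = max(-90.0, min(90.0, azimuth_deg)) / 90.0       # -1 (left) .. +1 (right)
    theta = (pan + 1.0) * math.pi / 4.0                   # 0 .. pi/2
    left, right = math.cos(theta), math.sin(theta)
    closeness = 1.0 - max(distance_m, 0.0) / max_range_m  # 0 (far) .. 1 (touching)
    return left, right, 1.0 + 9.0 * closeness
```

Constant-power panning keeps perceived loudness roughly steady as an obstacle moves from one side to the other. This leaves loudness free to carry other information if needed.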

19:48 Posted in Neurotechnology & neuroinformatics, Wearable & mobile

Jan 25, 2014

MemoryMirror: First Body-Controlled Smart Mirror

The Intel® Core™ i7-based MemoryMirror takes the clothes shopping experience to a whole different level. Shoppers can try on multiple outfits, then virtually view and compare previous choices on the mirror itself using intuitive hand gestures. Users control all their data and can remain anonymous to the retailer if they so choose. The MemoryMirror uses Intel integrated graphics technology to create avatars of the shopper wearing various clothing. These avatars can be shared with friends to solicit feedback or viewed instantly to make an immediate in-store purchase. Shoppers can also save their looks in a mobile app should they decide to purchase online at a later time.

21:53 Posted in Telepresence & virtual presence, Virtual worlds, Wearable & mobile

Jan 20, 2014

The Future of Gesture Control - Introducing Myo

Thalmic Labs at TEDxToronto

23:17 Posted in Enactive interfaces, Future interfaces, Wearable & mobile

Jan 12, 2014

Wearable Pregnancy Ultrasound

Melody Shiue, an industrial design graduate from the University of New South Wales, is proposing PreVue, a wearable fetal ultrasound system designed to enhance maternal-fetal bonding and to act as a reassurance window. It is an e-textile-based apparatus that uses 4D ultrasound. The latest stretchable display technology is employed on the abdominal region, allowing other family members, especially the father, to connect with the fetus in its context. PreVue not only lets you interact with and comprehend the physical growth of the baby. It also gives you an early understanding of its personality as you see it yawning, rolling, smiling and so on, bringing you closer until the day it finally rests in your arms.

More information at Tuvie

16:25 Posted in Future interfaces, Wearable & mobile

Dec 24, 2013

NeuroOn mask improves sleep and helps manage jet lag

Via Medgadget

A group of Polish engineers is working on a smart sleeping mask that they hope will let people get more out of their resting time. It should also support unusual sleeping schedules, which would particularly benefit people who are often on call. The NeuroOn mask will have an embedded EEG for brain wave monitoring, EMG for detecting muscle motion on the face, and sensors that track whether your pupils are moving, and thus whether you are in REM sleep. The team is currently raising money on Kickstarter, where you can pre-order your own NeuroOn ahead of its release as a final product.

Nov 17, 2013

Wristify: Thermal Comfort via a Wrist Band

A team of MIT students and alumni has developed a new low-cost way to get body temperature just right: Wristify, a wrist cuff that allows individuals to maintain a comfortable body temperature independent of their environment. In essence, the thermoelectric bracelet monitors air and skin temperature and then responds by delivering pulses of heat or cold to the wrist.

The Wristify team recently won first prize and $10,000 in MIT’s Making and Designing Materials Engineering Competition (MADMEC), after months of development and 15 prototypes.
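The article only says that the bracelet senses air and skin temperature and answers with hot or cold pulses. A controller could make that decision with a simple threshold rule, sketched below. The 33 °C skin target, 22 °C comfortable-air value, deadband and pulse lengths are all made-up illustrative parameters, not Wristify's design.

```python
from dataclasses import dataclass


@dataclass
class PulseCommand:
    mode: str          # "heat", "cool" or "off"
    duration_s: float  # how long to drive the thermoelectric element


def choose_pulse(skin_c: float, air_c: float,
                 target_skin_c: float = 33.0, comfort_air_c: float = 22.0,
                 deadband_c: float = 0.5, base_pulse_s: float = 1.0) -> PulseCommand:
    """Decide the next thermal pulse from skin and air temperature (hypothetical rule).

    Skin temperature sets the direction: warmer than target -> cool pulse,
    colder -> heat pulse, within the deadband -> do nothing. If the air is
    pushing the wearer the same way (hot room while skin is hot, cold room
    while skin is cold), the pulse is doubled in length as a crude
    feed-forward term.
    """
    error = skin_c - target_skin_c
    if abs(error) <= deadband_c:
        return PulseCommand("off", 0.0)
    if error > 0:
        mode, air_agrees = "cool", air_c > comfort_air_c
    else:
        mode, air_agrees = "heat", air_c < comfort_air_c
    return PulseCommand(mode, base_pulse_s * (2.0 if air_agrees else 1.0))
```

The deadband keeps the element from toggling constantly around the target. It trades a little comfort for battery life.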

21:41 Posted in Wearable & mobile

Nov 16, 2013

Phonebloks

Phonebloks is a modular smartphone concept created by Dutch designer Dave Hakkens to reduce electronic waste. By attaching individual third-party components (called "bloks") to a main board, a user would create a personalized smartphone. These bloks can be replaced at will if they break or the user wishes to upgrade.

15:24 Posted in Future interfaces, Wearable & mobile

Nov 03, 2013

Neurocam wearable camera reads your brainwaves and records what interests you

Via KurzweilAI.net

The neurocam is the world’s first wearable camera system that automatically records what interests you, based on brainwaves, DigInfo TV reports.

It consists of a headset with a brain-wave sensor and uses the iPhone’s camera to record a 5-second GIF animation. It could also be useful for life-logging.

The algorithm for quantifying brain waves was co-developed by Associate Professor Mitsukura at Keio University.

The project team plans to create an emotional interface.
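Here is one hypothetical way to record "what interests you". A rolling buffer holds the most recent 5 seconds of frames, and the buffer is saved as a clip whenever an interest score crosses a threshold. The score source, threshold and frame rate are assumptions. The article does not say whether the real device keeps footage from before the trigger, and this sketch does not reproduce the Keio brain-wave algorithm.

```python
from collections import deque


class InterestTriggeredRecorder:
    """Keep the last few seconds of frames and save them as a clip when interest spikes.

    interest_score is assumed to be a 0..1 value computed elsewhere from the
    EEG headset (hypothetical; the real scoring method is not shown here).
    """

    def __init__(self, fps: int = 10, clip_seconds: int = 5, threshold: float = 0.6):
        self.frames = deque(maxlen=fps * clip_seconds)  # rolling pre-trigger buffer
        self.threshold = threshold
        self.clips = []          # saved clips, each a list of frames
        self._was_above = False  # remembers the previous state to detect rising edges

    def push(self, frame, interest_score: float) -> None:
        self.frames.append(frame)
        above = interest_score >= self.threshold
        if above and not self._was_above:
            # One clip per "interest episode", not one per frame above threshold.
            self.clips.append(list(self.frames))
        self._was_above = above
```

Saving the buffer rather than starting a fresh recording means the moment that caused the reaction is not missed. The cost is holding a few seconds of video in memory at all times.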

22:59 Posted in Brain-computer interface, Emotional computing, Wearable & mobile

Oct 31, 2013

Mobile EEG and its potential to promote the theory and application of imagery-based motor rehabilitation


Int J Psychophysiol. 2013 Oct 18;

Authors: Kranczioch C, Zich C, Schierholz I, Sterr A

Abstract. Studying the brain in its natural state remains a major challenge for neuroscience. Solving this challenge would not only enable the refinement of cognitive theory, but also provide a better understanding of cognitive function in the type of complex and unpredictable situations that constitute daily life, and which are often disturbed in clinical populations. With mobile EEG, researchers now have access to a tool that can help address these issues. In this paper we present an overview of technical advancements in mobile EEG systems and associated analysis tools, and explore the benefits of this new technology. Using the example of motor imagery (MI) we will examine the translational potential of MI-based neurofeedback training for neurological rehabilitation and applied research.

23:50 Posted in Mental practice & mental simulation, Research tools, Wearable & mobile

Smart glasses that help the blind see

Via New Scientist

They look like snazzy sunglasses, but these computerised specs don't block the sun – they make the world a brighter place for people with partial vision.

These specs do more than bring blurry things into focus. This prototype pair of smart glasses translates visual information into images that blind people can see.

Many people who are registered as blind can perceive some light and motion. The glasses, developed by Stephen Hicks of the University of Oxford, are an attempt to make that residual vision as useful as possible.

They use two cameras, or a camera and an infrared projector, that can detect the distance to nearby objects. They also have a gyroscope, a compass and GPS to help orient the wearer.

The collected information can be translated into a variety of images on the transparent OLED displays, depending on what is most useful to the person sporting the shades. For example, objects can be made clearer against the background, or the distance to obstacles can be indicated by the varying brightness of an image.

Hicks has won the Royal Society's Brian Mercer Award for Innovation for his work on the smart glasses. He plans to use the £50,000 prize money to add object and text recognition to the glasses' abilities.
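The brightness-for-distance option described above amounts to a simple mapping from a depth map to display intensity. The sketch below assumes a linear ramp between a hypothetical near limit (0.5 m, full brightness) and far limit (4 m, dark). The article does not give the glasses' real mapping or display format.

```python
def distance_to_brightness(depth_m, near_m: float = 0.5, far_m: float = 4.0):
    """Map a depth map (list of rows, metres) to 8-bit brightness: nearer = brighter.

    Hypothetical linear mapping: anything at or closer than near_m is shown at
    255, anything at or beyond far_m (including float('inf')) at 0.
    """
    out = []
    for row in depth_m:
        out_row = []
        for z in row:
            z = min(max(z, near_m), far_m)          # clip to the useful range
            level = (far_m - z) / (far_m - near_m)  # 1.0 at near_m .. 0.0 at far_m
            out_row.append(round(255 * level))
        out.append(out_row)
    return out
```

A linear ramp is the simplest choice. A real display might instead use a few discrete brightness steps so that residual vision can tell "very close" from "close" more reliably.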

23:39 Posted in Neurotechnology & neuroinformatics, Wearable & mobile

Signal Processing Turns Regular Headphones Into Pulse Sensors

Via Medgadget

A new signal processing algorithm that enables any pair of earphones to detect your pulse was demonstrated recently at the Healthcare Device Exhibition 2013 in Yokohama, Japan. The technology comes from a joint effort of Bifrostec (Tokyo, Japan) and the Kaiteki Institute. It is built on the premise that the eardrum creates pressure waves with each heartbeat, which can be detected in a perfectly enclosed space. However, earphones typically do not create a perfect seal, which is why everyone in a packed elevator gets the privilege of listening to that guy’s tunes. The new algorithm processes the pressure signal despite the imperfect seal to determine the user’s pulse.
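The source does not describe how Bifrostec's algorithm works. As a generic illustration of recovering a pulse rate from a weak periodic pressure signal, the sketch below applies autocorrelation to an already-sampled trace. The sampling rate, bpm search range, 0.9 peak-acceptance factor and synthetic test signal are all assumptions. Real earphone data would first need filtering to remove motion and audio artefacts.

```python
import math


def estimate_bpm(signal, fs: float, min_bpm: float = 40.0, max_bpm: float = 180.0) -> float:
    """Estimate pulse rate in beats/min from a pressure trace sampled at fs Hz.

    Generic autocorrelation method: a lag (in samples) at which the signal
    closely matches a shifted copy of itself is taken as one heartbeat period.
    The first strong peak is chosen so that multiples of the period are not.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the constant (DC) pressure offset
    min_lag = max(1, int(fs * 60.0 / max_bpm))
    max_lag = min(int(fs * 60.0 / min_bpm), n - 1)
    if min_lag > max_lag:
        raise ValueError("signal too short for the requested bpm range")
    lags = list(range(min_lag, max_lag + 1))
    # Average product of the signal with itself shifted by each lag.
    r = [sum(x[i] * x[i + lag] for i in range(n - lag)) / (n - lag) for lag in lags]
    best = max(r)
    if best <= 0:
        raise ValueError("no periodic component found")
    k = next(i for i, v in enumerate(r) if v >= 0.9 * best)
    while k + 1 < len(r) and r[k + 1] > r[k]:  # climb to the top of that first peak
        k += 1
    return 60.0 * fs / lags[k]


def synthetic_pulse(bpm: float, fs: float, seconds: float, offset: float = 5.0):
    """Test signal: fundamental plus a weaker 2nd harmonic, on a constant offset."""
    f = bpm / 60.0
    return [offset + math.sin(2 * math.pi * f * i / fs) + 0.3 * math.sin(4 * math.pi * f * i / fs)
            for i in range(int(fs * seconds))]
```

Taking the first strong peak, rather than the single highest one, matters. A perfectly periodic signal also correlates well at two or three periods, which would otherwise halve or third the reported rate.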

23:30 Posted in Physiological Computing, Wearable & mobile

Sep 10, 2013

BITalino: Do More!

BITalino is a low-cost toolkit that allows anyone, from students to professional developers, to create projects and applications with physiological sensors. Out of the box, BITalino integrates easy-to-use software and hardware blocks with sensors for electrocardiography (ECG), electromyography (EMG) and electrodermal activity (EDA), plus an accelerometer and an ambient light sensor. Imagination is the limit: each individual block can be snapped off and combined to prototype anything you want. You can also connect other sensors, including your own custom designs.

18:22 Posted in Physiological Computing, Research tools, Self-Tracking, Wearable & mobile

Aug 07, 2013

OpenGlass Project Makes Google Glass Useful for the Visually Impaired

Re-blogged from Medgadget

Google Glass may have been developed to transform the way people see the world around them, but thanks to Dapper Vision’s OpenGlass Project, one doesn’t even need to be able to see to experience the Silicon Valley tech giant’s new spectacles.

Harnessing the power of Google Glass’s built-in camera, the cloud and the “hive-mind”, the project aims to let visually impaired users know what’s in front of them. The system consists of two components. Question-Answer uploads pictures taken by the user to Amazon’s Mechanical Turk and Twitter so that the public can help identify them. Memento takes video from Glass and uses image matching to identify objects from a database created with the help of sighted users. Information about what the Glass wearer “sees” is read aloud to the user via bone-conduction speakers.
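Memento's image-matching step can be pictured as a nearest-neighbour lookup. In the sketch below, each stored object is assumed to be represented by a numeric descriptor vector; how those vectors are computed from video frames is left out. The best match by cosine similarity is returned only if it clears a hypothetical 0.8 threshold. The labels are invented examples, not OpenGlass data.

```python
import math


def cosine(a, b) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def identify(query, database, min_similarity: float = 0.8):
    """Return the label of the most similar stored descriptor, or None if nothing is close.

    database: list of (label, descriptor) pairs, e.g. recorded with sighted users' help.
    """
    best_label, best_sim = None, min_similarity
    for label, desc in database:
        sim = cosine(query, desc)
        if sim >= best_sim:
            best_label, best_sim = label, sim
    return best_label
```

Returning None rather than the closest label keeps the system from reading out a confident-sounding guess for an object nobody has recorded. When a match is found, its label is what would be passed to text-to-speech.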


13:37 Posted in Augmented/mixed reality, Wearable & mobile

Hive-mind solves tasks using Google Glass ant game

Re-blogged from New Scientist

Glass could soon be used for more than just snapping pics of your lunchtime sandwich. A new game will connect Glass wearers to a virtual ant colony vying for prizes by solving real-world problems that vex traditional crowdsourcing efforts.

Crowdsourcing is most famous for collaborative projects like Wikipedia and "games with a purpose" like FoldIt, which turns the calculations involved in protein folding into an online game. All require users to log in to a specific website on their PC.

Now Daniel Estrada of the University of Illinois in Urbana-Champaign and Jonathan Lawhead of Columbia University in New York are seeking to bring crowdsourcing to Google's wearable computer, Glass.

The pair have designed a game called Swarm! that puts a Glass wearer in the role of an ant in a colony. Similar to the pheromone trails laid down by ants, players leave virtual trails on a map as they move about. These behave like real ant trails, fading away with time unless reinforced by other people travelling the same route. Such augmented reality games already exist – Google's Ingress, for one – but in Swarm! the tasks have real-world applications.

Swarm! players seek out virtual resources to benefit their colony, such as food, and must avoid crossing the trails of other colony members. They can also monopolise a resource pool by taking photos of its real-world location.

To gain further resources for their colony, players can carry out real-world tasks. For example, if the developers wanted to create a map of the locations of every power outlet in an airport, they could reward players with virtual food for every photo of a socket they took. The photos and location data recorded by Glass could then be used to generate a map that anyone could use. Such problems can only be solved by people out in the physical world, yet the economic incentives aren't strong enough for, say, the airport owner to provide such a map.

Estrada and Lawhead hope that by turning tasks such as these into games, Swarm! will capture the group intelligence ant colonies exhibit when they find the most efficient paths between food sources and the home nest.
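The fading-trail mechanic described above behaves like pheromone evaporation in ant-colony models. Every time step, each trail loses a fixed fraction of its strength, and each visit adds a deposit. The sketch below is a hypothetical model of that idea on a grid of map cells; the evaporation rate, deposit and cut-off are assumed values, not Swarm!'s actual parameters.

```python
from collections import defaultdict


class TrailMap:
    """Virtual pheromone trails on a grid of map cells (hypothetical model).

    Each time step every trail loses a fraction `evaporation` of its strength;
    a player crossing a cell deposits `deposit` units. Cells that fall below
    `min_strength` are forgotten, so unreinforced trails fade away.
    """

    def __init__(self, evaporation: float = 0.1, deposit: float = 1.0,
                 min_strength: float = 0.05):
        self.evaporation = evaporation
        self.deposit = deposit
        self.min_strength = min_strength
        self.strength = defaultdict(float)  # cell -> current trail strength

    def visit(self, cell) -> None:
        """A player passes through `cell`, reinforcing the trail there."""
        self.strength[cell] += self.deposit

    def step(self) -> None:
        """Advance time by one step: decay every trail and drop faded ones."""
        for cell in list(self.strength):
            self.strength[cell] *= 1.0 - self.evaporation
            if self.strength[cell] < self.min_strength:
                del self.strength[cell]
```

A route walked once decays geometrically and eventually disappears. A route walked every step settles near deposit / evaporation (10 units with these values), so popular paths stay visible.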


13:12 Posted in Augmented/mixed reality, Creativity and computers, ICT and complexity, Wearable & mobile

Jul 30, 2013

How it feels (through Google Glass)

14:12 Posted in Augmented/mixed reality, Wearable & mobile

Jul 23, 2013

A mobile data collection platform for mental health research


22:57 Posted in Pervasive computing, Research tools, Self-Tracking, Wearable & mobile

The Size of Electronic Consumer Devices Affects Our Behavior

Re-blogged from Textually.org

What are our devices doing to us? We already know they’re snuffing out our creativity, but new research suggests they’re also stifling our drive. How so? Because fussing with them for an average of 58 minutes a day leads to bad posture, FastCompany reports.

The body posture inherent in operating everyday gadgets affects not only your back but also your demeanor, reports a new experimental study entitled iPosture: The Size of Electronic Consumer Devices Affects Our Behavior. It turns out that working on a relatively large machine (like a desktop computer) causes users to act more assertively than working on a small one (like an iPad). The poor posture imposed by small devices, Harvard Business School researchers Maarten Bos and Amy Cuddy find, undermines our assertiveness.


22:23 Posted in Research tools, Wearable & mobile

SENSUS Transcutaneous Pain Management System Approved for Use During Sleep

Via Medgadget

NeuroMetrix, based in Waltham, MA, has received FDA clearance for its SENSUS Pain Management System to be used by patients during sleep. This is the first transcutaneous electrical nerve stimulation (TENS) system to receive a sleep indication from the FDA for pain control.

The device is designed for use by diabetics and others with chronic pain in the legs and feet. It’s worn around one or both legs and delivers an electrical current to disrupt pain signals being sent up to the brain.

22:18 Posted in Biofeedback & neurofeedback, Wearable & mobile

Jul 18, 2013

Advanced ‘artificial skin’ senses touch, humidity, and temperature

Artificial skin (credit: Technion)

Technion-Israel Institute of Technology scientists have discovered how to make a new kind of flexible sensor that could one day be integrated into “electronic skin” (e-skin), a covering for prosthetic limbs.


18:14 Posted in Pervasive computing, Research tools, Wearable & mobile

May 06, 2013

An app for the mind

With the rapid adoption of mobile technologies and the proliferation of smartphones, new opportunities are emerging for the delivery of mental health services. Psychologists are starting to realize this potential. A recent survey by Luxton et al. (2011) identified over 200 smartphone apps focused on behavioral health. These apps cover a wide range of disorders, including developmental, cognitive, substance-related, psychotic and mood disorders. They are used in behavioral health for several purposes, the most common of which are health education, assessment, homework and monitoring treatment progress.

For example, T2 MoodTracker is an application that allows users to self-monitor, track and reference their emotional experience over a period of days, weeks and months using a visual analogue rating scale. With this application, patients can self-monitor emotional experiences associated with common deployment-related behavioral health issues like post-traumatic stress, brain injury, life stress, depression and anxiety. Self-monitoring results can be used as a self-help tool or shared with a therapist or health care professional, providing a record of the patient’s emotional experience over a selected time frame.
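The self-monitoring described above boils down to timestamped ratings that can be summarised over days, weeks or months. The following is a hypothetical data model that assumes a 0 to 100 slider per rated feeling. It is not T2 MoodTracker's actual storage format.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import List, Optional


@dataclass
class Rating:
    when: datetime
    scale: str    # which feeling is rated, e.g. "anxiety"
    value: float  # slider position on the visual analogue scale, 0..100


def average_over(ratings: List[Rating], scale: str,
                 start: datetime, end: datetime) -> Optional[float]:
    """Mean rating for one scale in the half-open window [start, end), or None if empty."""
    values = [r.value for r in ratings if r.scale == scale and start <= r.when < end]
    return mean(values) if values else None


def weekly_averages(ratings: List[Rating], scale: str,
                    first_day: datetime, weeks: int) -> List[Optional[float]]:
    """One mean per consecutive 7-day window starting at first_day."""
    return [average_over(ratings, scale,
                         first_day + timedelta(weeks=w), first_day + timedelta(weeks=w + 1))
            for w in range(weeks)]
```

Returning None for an empty week, rather than 0, keeps "no entries" distinct from "no anxiety" when the record is shared with a therapist.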

Measuring objective correlates of subjectively reported emotional states is an important concern in research and clinical applications. Physiological and physical activity data provide mental health professionals with integrative measures that can improve their understanding of patients’ self-reported feelings and emotions.

The combined use of wearable biosensors and smartphones offers unprecedented opportunities to collect, process and transmit real-time body signals to a remote therapist. This approach also allows patients to collect real-time information about their own health conditions and to identify specific trends. Insights gained through this feedback can empower users to engage in managing their own health status, minimizing interaction with other health care actors. One such tool is MyExperience, an open-source mobile platform that combines sensing and self-report to collect both quantitative and qualitative data on user experience and activity.

Other applications are designed to empower users with information for making better decisions, preventing life-style related conditions and preserving/enhancing cognitive performance. For example, BeWell monitors different user activities (sleep, physical activity, social interaction) and provides feedback to promote healthier lifestyle decisions.

Besides applications in mental health and wellbeing, smartphones are increasingly used in psychological research. Geoffrey Miller recently discussed the potential of this approach in a review entitled “The Smartphone Psychology Manifesto”. According to Miller, smartphones can collect large quantities of ecologically valid data more easily and quickly than other available research methodologies. The smartphone is becoming one of the most pervasive devices in our lives. As a result, it provides access to domains of behavioral data that were previously unavailable without either constant observation or reliance on self-reports alone.

For example, the INTERSTRESS project, which I am coordinating, developed PsychLog, a psycho-physiological mobile data collection platform for mental health research. This free, open-source experience sampling platform for Windows Mobile allows researchers to collect self-reported psychological data as well as ECG data via a Bluetooth ECG sensor unit worn by the user. Although PsychLog offers fewer features than more advanced experience sampling platforms, it can easily be configured even by researchers with no programming skills.
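Experience sampling platforms such as PsychLog prompt participants at several moments during the day. A common way to plan such prompts is random sampling within a waking window, with a minimum gap between prompts. The sketch below draws such a schedule by rejection sampling. The window, number of prompts and gap are illustrative assumptions, not PsychLog's configuration options.

```python
import random
from datetime import datetime, timedelta
from typing import List, Optional


def sampling_schedule(day_start: datetime, day_end: datetime, n_prompts: int,
                      min_gap_min: float = 30, seed: Optional[int] = None) -> List[datetime]:
    """Draw n_prompts random prompt times in [day_start, day_end], at least
    min_gap_min minutes apart, by redrawing until the spacing rule holds."""
    rng = random.Random(seed)
    window = (day_end - day_start).total_seconds() / 60.0  # window length in minutes
    if (n_prompts - 1) * min_gap_min > window:
        raise ValueError("window too short for the requested prompts and spacing")
    while True:
        offsets = sorted(rng.uniform(0, window) for _ in range(n_prompts))
        if all(b - a >= min_gap_min for a, b in zip(offsets, offsets[1:])):
            return [day_start + timedelta(minutes=m) for m in offsets]
```

For example, six prompts between 9:00 and 21:00 with a 30-minute minimum gap are accepted on roughly one draw in four. Rejection sampling is simple but slows down sharply as the requested prompts approach the window's capacity. In that case, a scheduler could instead place one prompt at random within each of several fixed blocks.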

In summary, the use of smartphones can have a significant impact on both psychological research and practice. However, there is still limited evidence of the effectiveness of this approach. As for other mHealth applications, few controlled trials have tested the potential of mobile technology interventions in improving mental health care delivery processes. Therefore, further research is needed in order to determine the real cost-effectiveness of mobile cybertherapy applications.

16:18 Posted in Cybertherapy, Self-Tracking, Wearable & mobile