Nov 04, 2007

Tactile Video Displays

Via Medgadget

Researchers at the National Institute of Standards and Technology (NIST) have developed a tactile graphic display to help visually impaired people perceive images.

From the NIST press release

ELIA Life Technology Inc. of New York, N.Y., licensed for commercialization both the tactile graphic display device and the fingertip graphic reader developed by NIST researchers. The former, first introduced as a prototype in 2002, allows a person to feel a succession of images on a reusable surface by raising some 3,600 small pins (actuator points) into a pattern that can be locked in place, read by touch and then reset to display the next graphic in line. Each image (from scanned illustrations, Web pages, electronic books or other sources) is sent electronically to the reader, where special software determines how to create a matching tactile display. (For more information, see "NIST 'Pins' Down Imaging System for the Blind".)
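To make the conversion step a little more concrete, here is a minimal Python sketch of how a grayscale image might be reduced to a raise/lower pattern for a pin array. It assumes the roughly 3,600 pins form a 60 x 60 grid and that dark regions become raised pins; the function name `image_to_pins` and the threshold of 128 are hypothetical, and this is not NIST's actual software.

```python
def image_to_pins(image, rows=60, cols=60, threshold=128):
    """Reduce a grayscale image to a rows x cols grid of pin states.

    image: list of equal-length rows of ints, 0 (black) to 255 (white).
    Returns a list of lists of bools; True means the pin is raised.
    Assumption: a pin is raised when its block of pixels is, on average,
    darker than `threshold`.
    """
    height, width = len(image), len(image[0])
    pins = []
    for r in range(rows):
        # Pixel rows covered by this pin row (at least one row each).
        y0 = r * height // rows
        y1 = max((r + 1) * height // rows, y0 + 1)
        pin_row = []
        for c in range(cols):
            # Pixel columns covered by this pin (at least one column each).
            x0 = c * width // cols
            x1 = max((c + 1) * width // cols, x0 + 1)
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            pin_row.append(sum(block) / len(block) < threshold)
        pins.append(pin_row)
    return pins


# Usage: a 120 x 120 image whose left half is black and right half white.
picture = [[0] * 60 + [255] * 60 for _ in range(120)]
pins = image_to_pins(picture)
raised = sum(sum(row) for row in pins)
print(len(pins) * len(pins[0]), raised)  # 3600 1800
```

Averaging each block before thresholding keeps thin features from vanishing or aliasing badly when a large image is squeezed onto a coarse pin grid.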

An array of about 100 small, very closely spaced (1/10 of a millimeter apart) actuator points set against a user's fingertip is the key to the more recently created "tactile graphic display for localized sensory stimulation." To "view" a computer graphic with this technology, a blind or visually impaired person moves the device-tipped finger across a surface like a computer mouse to scan an image in computer memory. The computer sends a signal to the display device and moves the actuators against the skin to "translate" the pattern, replicating the sensation of the finger moving over the pattern being displayed. With further development, the technology could possibly be used to make fingertip tactile graphics practical for virtual reality systems or give a detailed sense of touch to robotic control (teleoperation) and space suit gloves.
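The fingertip reader works differently: instead of showing the whole picture, it shows only the small patch under the finger and updates it as the finger moves. A minimal Python sketch, assuming the roughly 100 actuators form a 10 x 10 window and that dark pixels raise an actuator (the function name and threshold are hypothetical):

```python
def fingertip_frame(image, x, y, size=10, threshold=128):
    """Return the size x size actuator pattern under a fingertip at (x, y).

    image: grayscale image in memory (list of rows, 0 = black, 255 = white).
    Actuators over dark pixels are raised (True); positions that fall
    outside the image stay lowered (False).
    """
    half = size // 2
    height, width = len(image), len(image[0])
    frame = []
    for dy in range(-half, size - half):
        row = []
        for dx in range(-half, size - half):
            yy, xx = y + dy, x + dx
            inside = 0 <= yy < height and 0 <= xx < width
            row.append(inside and image[yy][xx] < threshold)
        frame.append(row)
    return frame


# A white 50 x 50 image with one black vertical line at x = 25.
line_image = [[0 if col == 25 else 255 for col in range(50)] for _ in range(50)]

# Moving the finger one pixel to the right shifts the felt line one
# actuator column to the left: the pattern "translates" under the skin.
before = fingertip_frame(line_image, 25, 25)
after = fingertip_frame(line_image, 26, 25)
print(before[0].index(True), after[0].index(True))  # 5 4
```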

Press release: NIST Licenses Systems to Help the Blind 'See' Images

18:40 Posted in Future interfaces | Tags: future interfaces

Oct 12, 2007

The Hands-on Computer

Perceptive Pixel’s Interactive Media Wall (aka the Multi-Touch Wall) won the 2007 Breakthrough Award, the annual prize given by Popular Mechanics magazine to "cutting-edge projects and ideas leading to a better world". The Minority Report-style interface supports diverse functions, such as navigating, locating, and manipulating information, all handled through multi-touch gestures.

22:10 Posted in Future interfaces | Tags: future interfaces

Sep 08, 2007

3D motion capture using a webcam

14:46 Posted in Future interfaces | Tags: future interfaces

Aug 03, 2007

Mixed Feelings

From Wired 15.04:

See with your tongue. Navigate with your skin. Fly by the seat of your pants (literally). How researchers can tap the plasticity of the brain to hack our 5 senses — and build a few new ones

Read the full article here

14:01 Posted in Future interfaces | Tags: synesthesia

Jul 17, 2007

Gesture-control for regular TV

Australian engineers Prashan Premaratne and Quang Nguyen have designed a novel gesture-based controller for ordinary TVs.

The controller's built-in camera can recognise seven simple hand gestures and work with up to eight different gadgets around the home. According to the designers:

“Crucially for anyone with small children, pets or gesticulating family members, the software can distinguish between real commands and unintentional gestures.”

Premaratne and Nguyen predict that the system will be available on the market within three years.
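The post does not say how the software separates real commands from stray movements. One common approach, swapped in here purely for illustration and not necessarily what the designers did, is to accept a gesture only after the recognizer has reported the same label for several consecutive camera frames. A minimal Python sketch, where the gesture names and the `hold` length of five frames are hypothetical:

```python
def confirmed_commands(frame_labels, hold=5):
    """Turn per-frame gesture labels into confirmed commands.

    frame_labels: sequence of gesture names (e.g. "palm"), or None when no
    gesture is recognised in a frame. A command is emitted once, when the
    same label has been seen for `hold` consecutive frames; brief or
    flickering gestures never reach that count and are ignored.
    """
    commands = []
    current, run = None, 0
    for label in frame_labels:
        if label is not None and label == current:
            run += 1
        else:
            # A new label (or no gesture) restarts the count.
            current, run = label, (1 if label is not None else 0)
        if current is not None and run == hold:
            commands.append(current)
    return commands


# A quick two-frame wave is ignored; a "palm" held for six frames counts once.
frames = ["wave", "wave", None, "palm", "palm", "palm", "palm", "palm", "palm"]
print(confirmed_commands(frames))  # ['palm']
```

The trade-off is latency: a longer `hold` rejects more accidental gestures but makes the TV respond more slowly.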

00:13 Posted in Future interfaces | Tags: future interfaces

Delicate Boundaries

Re-blogged from We Make Money Not Art

Delicate Boundaries, a work by Chris Sugrue, uses human touch to dissolve the barrier of the computer screen. Using the body as a means of exchange, the system explores the subtle boundaries that exist between foreign systems and what it might mean to cross them. Lifelike digital animations swarm out of their virtual confinement onto the skin of a hand or arm when it makes contact with a computer screen, creating an imaginative world where our bodies are a landscape for digital life to explore.

Video.

00:03 Posted in Cyberart, Future interfaces | Tags: future interfaces

Jul 14, 2007

The EyesWeb Project

From InfoMus Lab (Laboratorio di Informatica Musicale), Genova, Italy:

The EyesWeb research project aims at exploring and developing models of interaction by extending music language toward gesture and visual languages, with a particular focus on the understanding of affect and expressive content in gesture. For example, in EyesWeb we aim at developing methods able to distinguish the different expressive content of two instances of the same movement pattern, e.g., two performances of the same dance fragment. Our research addresses the fields of KANSEI Information Processing and of analysis and synthesis of expressiveness in movement. More.

The EyesWeb open platform (free download) was originally conceived to support research on multimodal expressive interfaces and multimedia interactive systems. EyesWeb has also been widely employed for designing and developing real-time dance, music, and multimedia applications. It supports the user in experimenting with computational models of non-verbal expressive communication and in mapping gestures from different modalities (e.g., human full-body movement, music) onto multimedia output (e.g., sound, music, visual media). It allows fast development and experimentation cycles for interactive performance set-ups by including a visual programming language that maps, at different levels, movement and audio into integrated music, visual, and mobile scenery.

EyesWeb has been designed with a special focus on the analysis and processing of expressive gesture in movement, MIDI, audio, and music signals. It was the basic platform of the EU-IST Project MEGA and has been employed in many artistic performances and interactive installations. More.

16:15 Posted in Future interfaces | Tags: cybermusic

Jun 20, 2007

Prometeus - The Media Revolution

A thought-provoking video about the media revolution.

00:00 Posted in Future interfaces

May 02, 2007

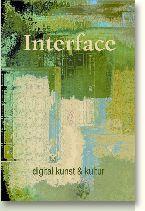

The Aesthetic Interface

The Aesthetic Interface :: 9-13 May 2007 :: Aarhus University, Denmark.

The interface is the primary cultural form of the digital age. Here the invisible technological dimensions of the computer are given form in order to meet human perception and agency. This encounter is enacted through aesthetic forms stemming not only from functional domains and tools, but increasingly also from aesthetic traditions, the old media and new media aesthetics. This interplay takes place in software interfaces, where aesthetic and cultural perspectives are gaining ground, in the digital arts, and in our general technological culture; keywords range from experience-oriented design and creative software to software studies, software art, new media, digital arts, techno culture and digital activism.

This conference will focus on how the encounter of the functional and the representational in the interface shapes contemporary art, aesthetics and culture. What are the dimensions of the aesthetic interface, what are the potentials, clashes and breakdowns? Which kinds of criticism, aesthetic praxes and forms of action are possible and necessary?

The conference is accompanied by an exhibition and workshops.

Christian Ulrik Andersen (DK): 'Writerly gaming' - social impact games

Inke Arns (DE): Transparency and Politics. On Spaces of the Political beyond the Visible, or: How transparency came to be the lead paradigm of the 21st century.

Morten Breinbjerg (DK): Music automata: the creative machine or how music and compositional practices are modelled in software

Christophe Bruno (F): Collective hallucination and capitalism 2.0

Geoff Cox (UK): Means-End of Software

Florian Cramer (DE/NL): What is Interface Aesthetics?

Matthew Fuller (UK): The Computation of Space

Lone Koefoed Hansen (DK): The interface at the skin

Erkki Huhtamo (USA/Fin): Multiple Screens - Intercultural Approaches to Screen Practice(s)

Jacob Lillemose (DK): Interfacing the Interfaces of Free Software. X-devian: The New Technologies to the People System

Henrik Kaare Nielsen (DK): The Interface and the Public Sphere

Søren Pold (DK): Interface Perception

Bodil Marie Thomsen (DK): The Haptic Interface

Jacob Wamberg (DK): Interface/Interlace, Or Is Telepresence Teleological?

Organised by: The Aesthetics of Interface Culture, Digital Aesthetics Research Center, TEKNE, Aarhus Kunstbygning, The Doctoral School in Arts and Aesthetics.

Supported by: The Danish Research Council for the Humanities, The Aarhus University Research Foundation, The Doctoral School in Arts and Aesthetics, Aarhus University's Research Focus on the Knowledge Society, Region Midtjylland, Aarhus Kommune. The exhibition is supported by: Region Midtjylland, Århus Kommunes kulturpulje, Kunststyrelsen, the Spanish Embassy, Egetæpper.

23:44 Posted in Future interfaces | Tags: future interface

Apr 01, 2007

Brain-controlled devices and games

Via pasta & vinegar

An article in The Economist about brain-controlled devices and games.

From the article:

At the moment, EEG's uses are mostly medical. Though the output of the electrodes is a set of crude brain waves, enough is now known about the healthy patterns of these waves for changes in them to be used to diagnose unhealthy abnormalities. Yet, because parts of a person's grey matter exhibit increased electric activity when they respond to stimuli or prepare for movements, there has always been the lingering hope that EEG might also manifest someone's thoughts in a machine-readable form that could be used for everyday purposes.

To realise that hope means solving two problems—one of hardware and one of software. The hardware problem is that existing EEG requires a helmet with as many as 120 electrodes in it, and that these electrodes have to be affixed to the scalp with a gel. The software problem is that many different types of brain waves have to be interpreted simultaneously and instantly. That is no mean computing task.

20:53 Posted in Future interfaces | Tags: brain-computer interface

Mar 10, 2007

Connecting Your Brain to the Game

Via KurzweilAI.net

The startup company Emotiv Systems has developed a wearable EEG system that allows players to interact mentally with video games by controlling the on-screen action.

From Technology Review

Emotiv's system has three different applications. One is designed to sense facial expressions such as winks, grimaces, and smiles and transfer them, in real time, to an avatar. This could be useful in virtual-world games, such as Second Life, in which it takes a fair amount of training to learn how to express emotions and actions through a keyboard. Another application detects two emotional states, such as excitement and calm. Emotiv's chief product officer, Randy Breen, says that these unconscious cues could be used to modify a game's soundtrack or to affect the way that virtual characters interact with a player. The third set of software can detect a handful of conscious intentions that can be used to push, pull, rotate, and lift objects in a virtual world.

20:12 Posted in Future interfaces | Tags: neuroinformatics

Feb 01, 2007

FlickrFling

FlickrFling is an open source project for people who wish to explore, in an innovative and rather surprising way, the different data feeds that form the building blocks of today’s virtual world. If you point the application at, say, the CNN news feed, you will receive the latest news in real time, rendered in pictures: for each incoming word, the application chooses an image from Flickr’s database tagged with a corresponding keyword.
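The word-to-picture step can be sketched in a few lines of Python. This is a hypothetical illustration, not FlickrFling's code: a local dictionary of tags stands in for Flickr's tag search (no real Flickr API is called), and the sketch simply picks the first matching image, whereas the real application makes its own choice.

```python
import re


def words_to_images(text, tag_index):
    """Pair each word of an incoming feed item with an image tagged with it.

    tag_index: dict mapping a lowercase tag to a list of image URLs. It is
    a local stand-in for a photo-sharing tag search; no real API is called.
    Words with no tagged image are skipped.
    """
    pairs = []
    for word in re.findall(r"[a-z0-9']+", text.lower()):
        images = tag_index.get(word)
        if images:
            pairs.append((word, images[0]))  # simplest choice: first match
    return pairs


# Hypothetical tag index and headline, for illustration only.
index = {
    "storm": ["https://example.com/storm.jpg"],
    "city": ["https://example.com/city.jpg", "https://example.com/skyline.jpg"],
}
print(words_to_images("Storm hits the city", index))
# [('storm', 'https://example.com/storm.jpg'), ('city', 'https://example.com/city.jpg')]
```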

22:53 Posted in Future interfaces | Tags: social computing

Jan 22, 2007

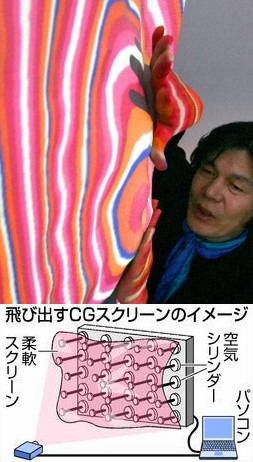

Gemotion screen shows video in living 3D

From Pink Tentacle

Gemotion is a soft, ‘living’ display that bulges and collapses in sync with the graphics on the screen, creating visuals that literally pop out at the viewer.

Yoichiro Kawaguchi, a well-known computer graphics artist and University of Tokyo professor, created Gemotion by arranging 72 air cylinders behind a flexible, 100 x 60 cm (39 x 24 inch) screen. As video is projected onto the screen, image data is relayed to the cylinders, which then push and pull on the screen accordingly.
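One way to picture "image data is relayed to the cylinders" is to average each video frame over a coarse grid, one cell per cylinder. The Python sketch below assumes the 72 cylinders sit in a 12 x 6 grid and that brighter regions push the screen further out; the actual layout and mapping used in Gemotion are not described in the post, and `cylinder_targets` is a hypothetical name.

```python
def cylinder_targets(frame, rows=6, cols=12):
    """Average a grayscale video frame over a rows x cols grid of cylinders.

    frame: list of rows of ints, 0 (black) to 255 (white).
    Returns extension targets in [0.0, 1.0]; here brighter regions push
    the screen further out (an assumption, not Gemotion's documented rule).
    """
    height, width = len(frame), len(frame[0])
    targets = []
    for r in range(rows):
        y0 = r * height // rows
        y1 = max((r + 1) * height // rows, y0 + 1)
        row = []
        for c in range(cols):
            x0 = c * width // cols
            x1 = max((c + 1) * width // cols, x0 + 1)
            block = [frame[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block) / 255)
        targets.append(row)
    return targets


# A 60 x 120 frame: bright top half, dark bottom half.
frame = [[255] * 120 for _ in range(30)] + [[0] * 120 for _ in range(30)]
targets = cylinder_targets(frame)
print(sum(len(row) for row in targets), targets[0][0], targets[5][0])  # 72 1.0 0.0
```

In a live system this would run once per video frame, with the resulting targets sent to the air cylinders.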

“If used with games, TV or cinema, the screen could give images an element of power never seen before. It could lead to completely new forms of media,” says Kawaguchi.

The Gemotion screen will be on display from January 21 to February 4 as part of a media art exhibit (called Nihon no hyogen-ryoku) at the National Art Center, Tokyo, which recently opened in Roppongi.

22:13 Posted in Future interfaces | Tags: future interfaces

Jan 10, 2007

Finger Touching Wearable Mobile Device

Re-blogged from Textually.org (via Yanko Design)

A wearable mobile device for enhanced chatting, by designer Sunman Kwo.

"A new wearable device that anyone can communicate with that is easier and lighter in mobile circumstances, corresponding to the 3.5G and 4G communication standards. The human hand is the most basic communication method.

For easier and simpler controls, it uses the instinctive "finger joint" input method. Excluding the thumb, the finger joints make up twelve buttons laid out like the cell phone's 3x4 keypad, with "the knuckle button" likely being the most popular input method."
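The arithmetic behind the design is simple: four fingers with three joint segments each give exactly twelve buttons, the same count as a 3x4 phone keypad. The Python sketch below shows one possible mapping; the assignment of fingers to rows and joints to columns is a hypothetical choice, not taken from the designer's description.

```python
# Standard 3x4 phone keypad, top row first.
KEYPAD = [
    ["1", "2", "3"],
    ["4", "5", "6"],
    ["7", "8", "9"],
    ["*", "0", "#"],
]

# Hypothetical assignment: each non-thumb finger is one keypad row,
# and its three joint segments (tip to base) are the three columns.
FINGERS = ["index", "middle", "ring", "little"]
JOINTS = ["tip", "middle", "base"]


def joint_to_key(finger, joint):
    """Return the keypad symbol for a thumb tap on one finger joint."""
    return KEYPAD[FINGERS.index(finger)][JOINTS.index(joint)]


# Spelling a number by tapping joints with the thumb.
taps = [("index", "tip"), ("little", "middle"), ("ring", "base")]
print("".join(joint_to_key(f, j) for f, j in taps))  # 109
```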

22:21 Posted in Future interfaces, Wearable & mobile | Tags: mobile phones

Dec 14, 2006

reacTable

23:54 Posted in Future interfaces | Tags: future interfaces

Dec 06, 2006

Pocket Projectors

Via KurzweilAI.net

The Microvision system, composed of semiconductor lasers and a tiny mirror, will be small enough to integrate projection technology into a phone or an iPod.

18:39 Posted in Future interfaces | Tags: future interfaces

Nov 07, 2006

The future of music experience

Check out this YouTube video showing the reacTable, an amazing musical instrument with a tangible interface.

23:00 Posted in Future interfaces | Tags: future interfaces

Nov 05, 2006

Neural internet: web surfing with brain potentials

Neural internet: web surfing with brain potentials for the completely paralyzed.

Neurorehabil Neural Repair. 2006 Dec;20(4):508-15

Authors: Karim AA, Hinterberger T, Richter J, Mellinger J, Neumann N, Flor H, Kübler A, Birbaumer N

Neural Internet is a new technological advancement in brain-computer interface research, which enables locked-in patients to operate a Web browser directly with their brain potentials. Neural Internet was successfully tested with a locked-in patient diagnosed with amyotrophic lateral sclerosis rendering him the first paralyzed person to surf the Internet solely by regulating his electrical brain activity. The functioning of Neural Internet and its clinical implications for motor-impaired patients are highlighted.

21:53 Posted in Brain-computer interface, Cybertherapy, Future interfaces | Tags: brain-computer interface

Oct 30, 2006

Throwable game-controllers

Re-blogged from New Scientist Tech

"Just what the doctor ordered? A new breed of throwable games controllers could turn computer gaming into a healthy pastime, reckons one Californian inventor. His "tossable peripherals" aim to get lazy console gamers up off the couch and out into the fresh air.

Each controller resembles a normal throwable object, like a beach ball, a football or a Frisbee. But they also connect via WiFi to a games console, like the PlayStation Portable. And each also contains an accelerometer capable of detecting speed and impact, an altimeter, a timer and a GPS receiver.

The connected console can then orchestrate a game of catch, awarding points for a good catch or deducting them if the peripheral is dropped hard on the ground. Or perhaps the challenge could be to see who can throw the object furthest, highest or fastest, with the connected computer keeping track of different competitors' scores.

Hardcore gamers, who cannot bear to be separated from a computer screen, could wear a head-mounted display that shows scores and other information. The peripheral can also emit bleeps when it has been still for too long, to help the owner locate it in the long grass."
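The scoring and "find me" behaviours described above could look something like this minimal Python sketch. The impact threshold of 8 g and the 60-second idle timeout are hypothetical values, not figures from the patent application, and how a clean catch is detected is left to the caller.

```python
def score_event(caught, peak_impact_g, hard_drop_g=8.0):
    """Score one throw from accelerometer readings.

    caught: whether the throw ended in a clean catch (detected elsewhere).
    peak_impact_g: largest acceleration at the end of the throw, in g.
    hard_drop_g: hypothetical threshold for a "hard" landing.
    """
    if caught:
        return 1
    if peak_impact_g >= hard_drop_g:
        return -1  # penalty for slamming the peripheral into the ground
    return 0


def should_beep(last_motion_s, now_s, timeout_s=60.0):
    """Beep to help find the peripheral once it has been still too long."""
    return now_s - last_motion_s >= timeout_s


# Two catches and one hard drop: 1 + 1 - 1 = 1 point.
throws = [(True, 3.0), (True, 2.5), (False, 12.0)]
print(sum(score_event(c, g) for c, g in throws))  # 1
print(should_beep(last_motion_s=0.0, now_s=90.0))  # True
```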

Read the full throwable game controller patent application

15:30 Posted in Future interfaces | Tags: future interfaces

Oct 26, 2006

DNA-based switch will allow interfacing organisms with computers

Re-blogged from KurzweilAI.net

Researchers at the University of Portsmouth have developed an electronic switch based on DNA, a world-first bionanotechnology breakthrough that provides the foundation for the interface between living organisms and the computer world...

Read the full story here

19:37 Posted in Future interfaces | Tags: future interfaces