Aug 04, 2006

The state of the art in Assisted Cognition

The article entitled "Assistive technology for cognitive rehabilitation: State of the art", by LoPresti et al. provides a comprehensive review of Assisted Cognition, a research field that aims to develop and assess technological tools for individuals with either acquired impairments or developmental disorders.

The full-text of this article is available here

Authors: Edmund Frank LoPresti; Alex Mihailidis; Ned Kirsch

Neuropsychological Rehabilitation, Volume 14, Numbers 1-2/March-May 2004, pp. 5-39(35)

Abstract: For close to 20 years, clinicians and researchers have been developing and assessing technological interventions for individuals with either acquired impairments or developmental disorders. This paper offers a comprehensive review of literature in that field, which we refer to collectively as assistive technology for cognition (ATC). ATC interventions address a range of functional activities requiring cognitive skills as diverse as complex attention, executive reasoning, prospective memory, self-monitoring for either the enhancement or inhibition of specific behaviours and sequential processing. ATC interventions have also been developed to address the needs of individuals with information processing impairments that may affect visual, auditory and language ability, or the understanding of social cues. The literature reviewed indicates that ATC interventions can increase the efficiency of traditional rehabilitation practices by enhancing a person's ability to engage in therapeutic tasks independently and by broadening the range of contexts in which those tasks can be exercised. More importantly, for many types of impairments, ATC interventions represent entirely new methods of treatment that can reinforce a person's residual intrinsic abilities, provide alternative means by which activities can be completed or provide extrinsic supports so that functional activities can be performed that might otherwise not be possible. Although the major focus of research in this field will continue to be the development of new ATC interventions, over the coming years it will also be critical for researchers, clinicians, and developers to examine the multi-system factors that affect usability over time, generalisability across home and community settings, and the impact of sustained, patterned technological interventions on recovery of function.

19:05 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: assisted cognition

TransVision06

The TransVision 2006 annual conference of the World Transhumanist Association, Helsinki 17-19 August 2006, organized by the WTA and the Finnish Transhumanist Association, will be open to remote visitors in the virtual reality world of Second Life.

TransVision06, August 17-19: University of Helsinki, Finland, Europe

This year the theme of the conference will be Emerging Technologies of Human Enhancement. We'll be looking at recent and ongoing technological developments and discussing associated ethical and philosophical questions.

We will hold a mixed reality event between the Helsinki conference hall and Second Life:

The Second Life event will take place in the uvvy island in SL. To attend, use the Second Life map in the client, look for region uvvy and teleport.

The real time video stream from Helsinki will be displayed in SL.

Some presentations will also be displayed in SL in Power Point -like format.

Some PCs running the SL client will be available in the conference hall in Helsinki for SL users (at least 3 plus myself (gp)).

Some SL users will be simultaneously present in both worlds who can relay questions from the remote SL audience to speakers.

The SL event will be projected on a screen in Helsinki.

There will be a single text chat space for Second Life users and IRC chat users. So those whose computers are too slow or for some other reason don't want to use Second Life can use a combination of IRC and the video feed to interact with the conference participants in both Helsinki and Second Life.

See also:

http://community.livejournal.com/transvision06/

http://community.livejournal.com/transvision06/1346.html

12:35 Posted in Brain training & cognitive enhancement, Positive Technology events | Permalink | Comments (0) | Tags: Transhumanism

Aug 03, 2006

Forehead Retina System

Via Technovelgy

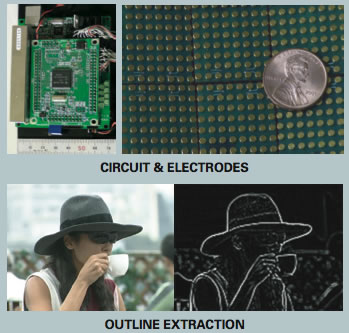

Researchers at the University of Tokyo, in collaboration with EyePlusPlus, Inc., have developed a system that uses tactile sensations in the forehead as a "substitute retina" to enable visually impaired people to "see" the outlines of objects.

The Forehead Retina System (FRS) uses a special headband to selectively stimulate different mechanoreceptors in forehead skin to allow visually impaired people to perceive a picture of what lies in front of them.

The system has been presented at the Emerging Technology session of Siggraph 2006. From the conference website:

Although electrical stimulation has a long scientific history, it has not been used for practical purposes because stable stimulation was difficult to achieve, and mechanical stimulation provided much better spatial resolution. This project shows that stable control of electrical stimulation is possible by using very short pulses, and anodic stimulation can provide spatial resolution equal to mechanical stimulation. This result was presented at SIGGRAPH 2003 as SmartTouch, a visual-to-tactile conversion system for skin on the finger using electrical stimulation. Because the Forehead Retina System shows that stable stimulation on the "forehead skin" is also quite possible, it leads to a new application of electrical stimulation.

Goals

According to a 2003 World Health Organization report, up to 45 million people are totally blind, while 135 million live with low vision. However, there is no standard visual substitution system that can be conveniently used in daily life. The goal of this project is to provide a cheap, lightweight, yet fully functional system that provides rich, dynamic 2D information to the blind.

Innovations

The big difference between finger skin and forehead skin is the thickness. While finger skin has a thick horny layer of more than 0.7 mm, the forehead skin is much thinner (less than 0.02mm). Therefore, if the electrode directly contacts the skin, concentrated electrical potential causes unnecessary nerve stimulation and severe pain. The Forehead Retina System uses an ionic gel sheet with the same thickness and electrical impedance as the horny layer of finger skin. When the gel sheet is placed between the electrode and the forehead, stable sensation is assured.

Compared to current portable electronic devices, most of the proposed "portable" welfare devices are not portable in reality. They are bulky and heavy, and they have a limited operation time. By using electrical stimulation, the Forehead Retina System partially solves these problems. Nevertheless, driving 512 electrodes with more than 300 volts is quite a difficult task, and it normally requires a large circuit space. The system uses a high-voltage switching integrated circuit, which is normally used to drive micro-machines such as digital micro-mirror devices. With fast switching, current pulses are allocated to appropriate electrodes. This approach enables a very large volume of stimulation and system portability at the same time.

Vision

This project demonstrates only the static capabilities of the display. Other topics pertaining to the display include tactile recognition using head motion (active touch), gray-scale expression using frequency fluctuation, and change in sensation after long-time use due to sweat. These will be the subjects of future studies.

The current system uses only basic image processing to convert the visual image to tactile sensation. Further image processing, including motion analysis, pattern and color recognition, and depth perception is likely to become necessary in the near future.

It is important to note that although many useful algorithms have been proposed in the field of computer vision, they can not always be used unconditionally. The processing must be performed in real time, and the system must be small and efficient for portability. Therefore, rather than incorporating elegant but expensive algorithms, the combination of bare minimum image processing and training is practical. The system is now being tested with the visually impaired to determine the optimum balance that good human interfaces have always achieved.

18:14 Posted in Brain training & cognitive enhancement | Permalink | Comments (0)

Jul 28, 2006

Retina projector to help blind people

From New Scientist

Partially blind people can now read using a machine that projects images directly onto their retinal cells.

The Retinal Imaging Machine Vision System (RIMVS) can also be used to explore virtual buildings, allowing people to familiarise themselves with new places.

The device, developed by Elizabeth Goldring, a poetry professor at the Massachusetts Institute of Technology, who is herself partially blind, is designed for people who suffer vision loss due to obstructions such as haemorrhages or diseases that erode the cells on the retina.

The user looks through a viewfinder and the images are focused directly onto the retina. The person can guide the light to find the areas that still work best. The machine uses LED light and costs just $4000.

14:20 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: cognitive prosthetics

Jul 24, 2006

Design for Our Future Selves awards 2006

Re-blogged from World of Psychology (original post by Sandra Kiume)

The Design for Our Future Selves awards 2006 from the Royal College of Art offered seven awards for ‘An architecture, design or communication project which addresses a social issue or engages with a particular social group in order to improve independence, mobility, health or working life.’

Christopher Peacock won the Snowdon Award for Disability Projects and the Help the Aged Award for Independent Living with his invention handSteady. It’s an innovative device enabling people experiencing tremor (involuntary shaking caused by Parkinson’s disease, side effects from some medications and other conditions) to stabilize objects as they hold them.

Sohui Won, a finalist in Interaction Design, created psychological tools for treatment of loneliness in a project titled Weird Objects - Objects for autophobics and for all of us who experience loneliness and autophobia (fear of being alone).

Among the creations:

‘Communication with Myself – Talk to Myself’. Objects were designed to help autophobics better understand their problem. ‘Talk to Myself’ Mask allows users to literally, talk to themselves. ‘Not’ Removal Machine is a device that removes the word ‘not’ from speech allowing people to hear the positive version.

‘Communication with Environment – Talk to Trees’. Two animated objects were designed to help connect people to the environment, so they wouldn’t feel alone: ‘Eyeballs’ is a device that follows people all the time, wherever they go. ‘Whispering Machine’ transfers the sound of movement into whispering and laughter.

22:04 Posted in Brain training & cognitive enhancement, Creativity and computers | Permalink | Comments (0)

Jul 18, 2006

TMS can improve subitizing ability

Re-blogged from Omnibrain

A joint venture of the Australian National University and the University of Sydney investigated whether repetitive transcranial magnetic stimulation, TMS, can improve a healthy person's ability to guess accurately the number of elements in a scene, the London Telegraph reported.

00:31 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: cognitive prosthetics

Second Geoethical Nanotechnology workshop

Re-blogged from KurzweilAI.net

The Terasem Movement announced today that its Second Geoethical Nanotechnology workshop will be held July 20, 2006 in Lincoln, Vermont. The public is invited to participate via conference call. The workshop will explore the ethics of neuronanotechnology and future mind-machine interfaces, including preservation of consciousness, implications for a future in which human and digital species merge, and dispersion of consciousness to the cosmos, featuring leading scientists and other experts in these areas.

The workshop proceedings are open to the public via real-time conference call and will be archived online for free public access. The public is invited to call a toll-free conference-call dial-in line from 9:00 a.m. - 6:00 p.m. ET. Callers from the continental US and Canada can dial 1-800-967-7135; other countries: (00+1) 719-457-2626.

Each workshop presentation is designed for a 15-20 minute delivery, followed by a 20 minute formal question and answer period, during which time questions from the worldwide audience will be invited. Presentations will also be available on the workshop's website

00:05 Posted in Brain training & cognitive enhancement, Brain-computer interface, Neurotechnology & neuroinformatics | Permalink | Comments (0) | Tags: neurotechnology

Novel BCI device will allow people to search through images faster

Via KurzweilAI.net

Researchers at Columbia University are combining the processing power of the human brain with computer vision to develop a novel device that will allow people to search through images ten times faster than they can on their own.

The "cortically coupled computer vision system," known as C3 Vision, is the brainchild of professor Paul Sajda, director of the Laboratory for Intelligent Imaging and Neural Computing at Columbia University. He received a one-year, $758,000 grant from Darpa for the project in late 2005.

The brain emits a signal as soon as it sees something interesting, and that "aha" signal can be detected by an electroencephalogram, or EEG cap. While users sift through streaming images or video footage, the technology tags the images that elicit a signal, and ranks them in order of the strength of the neural signatures. Afterwards, the user can examine only the information that their brains identified as important, instead of wading through thousands of images.
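The tag-and-rank step described above can be pictured with a minimal sketch. It is only an illustration, not the Columbia group's method: the per-image score, the threshold, and every name in it (`TaggedImage`, `rank_by_signal`, the image ids) are hypothetical, and it assumes some upstream EEG analysis has already reduced each image to a single number.

```python
from typing import NamedTuple


class TaggedImage(NamedTuple):
    image_id: str
    signal_strength: float  # hypothetical per-image EEG response score


def rank_by_signal(scores: dict[str, float], threshold: float) -> list[TaggedImage]:
    """Keep images whose score meets the threshold, strongest first."""
    tagged = [TaggedImage(i, s) for i, s in scores.items() if s >= threshold]
    return sorted(tagged, key=lambda t: t.signal_strength, reverse=True)


# Made-up scores for four images; the values are illustrative only.
scores = {"img_001": 0.12, "img_002": 0.87, "img_003": 0.55, "img_004": 0.05}
shortlist = rank_by_signal(scores, threshold=0.5)
print([t.image_id for t in shortlist])
```

With these made-up scores, only the two images above the threshold remain for the user to review, ordered from the strongest response down.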

Read the full story on Wired

00:00 Posted in Brain training & cognitive enhancement, Brain-computer interface | Permalink | Comments (0) | Tags: brain-computer interface

Jul 17, 2006

Video games can improve performance in vision tasks

Three years ago, C. Shawn Green and Daphne Bavelier of the University of Rochester conducted a study in which they found that avid video game players were better at several different visual tasks compared to non-gamers ("Action Video Game Modifies Visual Attention," Nature, 2003). In particular, the study showed that video game players had increased visual attention capacity on a flanker distractor task, as well as improved ability to subitize (subitizing is the ability to enumerate a small array of objects without overtly counting each item).

The same authors have now completed a follow-up study that has been released in the current issue of Cognition. The new experiment's findings suggest that the data previously interpreted as supporting an increase in subitizing may actually reflect the deployment of a serial counting strategy on the part of the video-game players.

23:35 Posted in Brain training & cognitive enhancement | Permalink | Comments (0)

Jul 08, 2006

Cognitive Computing symposium at IBM

Via Neurodudes

Recently, the Almaden Research Center, part of IBM Research, invited some provocative speakers for a discussion on the topic of “Cognitive Computing”.

Powerpoint presentations and videos of the event are available online.

From the synopsis:

The 2006 Almaden Institute will focus on the theme of “Cognitive Computing” and will examine scientific and technological issues around the quest to understand how the human brain works. We will examine approaches to understanding cognition that unify neurological, biological, psychological, mathematical, computational, and information-theoretic insights. We focus on the search for global, top-down theories of cognition that are consistent with known bottom-up, neurobiological facts and serve to explain a broad range of observed cognitive phenomena. The ultimate goal is to understand how and when can we mechanize cognition.

Confirmed speakers include Toby Berger (Cornell), Gerald Edelman (The Neurosciences Institute), Joaquin Fuster (UCLA), Jeff Hawkins (Palm/Numenta), Robert Hecht-Nielsen (UCSD), Christof Koch (CalTech), Henry Markram (EPFL/BlueBrain), V. S. Ramachandran (UCSD), John Searle (UC Berkeley) and Leslie Valiant (Harvard). Confirmed panelists include: James Albus (NIST), Theodore Berger (USC), Kwabena Boahen (Stanford), Ralph Linsker (IBM), and Jerry Swartz (The Swartz Foundation).

20:38 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: cognitive computing

Jul 04, 2006

Blind Reader

Via KurzweilAI.net (press release)

The National Federation of the Blind (NFB) unveiled Monday a groundbreaking new device, the Kurzweil-National Federation of the Blind Reader.

The portable Reader, developed by the National Federation of the Blind and renowned inventor Ray Kurzweil, enables users to take pictures of and read most printed materials at the click of a button. Users merely hold "the camera that talks" over print -- a letter, bills, a restaurant menu, an airline ticket, a business card, or an office memo --- to hear the contents of the printed document played back in clear synthetic speech.

Combining a state-of-the-art digital camera with a powerful personal data assistant, the Reader puts the best available character-recognition software together with text-to-speech conversion technology in a single handheld device.

"I've worked on reading machines for the blind and with the National Federation of the Blind for over thirty years, and this has been the most rewarding experience I've had as an inventor," said Ray Kurzweil, who was the chief developer of the first omni-font optical character-recognition technology, the first print-to-speech reading machine for the blind, the first CCD flatbed scanner, the first text-to-speech synthesizer, and the first commercially marketed, large-vocabulary speech recognition engine.

"I have always said that the most exciting aspect of being an inventor is to experience the leap from dry formulas on a blackboard to actual improvements in people's lives, and I've had the reward of that experience with this project.

"This is basically a software invention," he explained. "In addition to squeezing omni-font optical character recognition and text-to-speech synthesis into a pocket sized computer, we had to develop intelligent image enhancement software that could clean up the images of print received from a digital camera. Unlike the images produced by a flatbed scanner, images from a digital camera are subject to rotation, tilt, curvature, uneven illumination and other distortions. The image enhancement software we developed automatically corrects for these problems.

"Because this is a software based technology, our users will be able to benefit from the future improvements we make. In the future, we envision the reader being able to identify real-world objects, and even people's faces. So, for example, the product may tell you that there is a cat in front of you, a lamp to your left, and sitting to your right is your ex-wife."

00:05 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: cognitive prosthetic

Jun 18, 2006

Artificial hippocampus to help Alzheimer's patients

Via Medgadget

According to journalist Jennifer Matthews (News14Carolina), neuroscientist Theodore Berger has developed the "first artificial hippocampus", which should help people suffering from Alzheimer's disease to form new memories.

"There's no reason why we can't think in terms of artificial brain parts in the same way we can think in terms of artificial eyes and artificial ears," said Theodore Berger, who does research at the University of Southern California.

Berger believes this new technology will help not only Alzheimer's disease patients, but also individuals suffering from other CNS diseases such as epilepsy, Parkinson's disease or stroke. With this new technology, the brain would also gain help in information processing: a computer chip will reroute the information, bypassing damaged area(s) of the hippocampus.

18:26 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: neuroinformatics

May 10, 2006

Enhanced humans

From New Scientist

People with enhanced senses, superhuman bodies and sharpened minds are already walking among us. Are you ready for your upgrade? They're here and walking among us: people with technologically enhanced senses, superhuman bodies and artificially sharpened minds. The first humans to reach a happy, healthy 150th birthday may already have been born. And that's just the start of it. Are you ready for your upgrade, asks Graham Lawton.

IT IS 2050, and Peter Schwartz is deciding what to do with the rest of his life. He has already had two successful careers and he wants another one before he dies, which he expects to happen in around 50 years. By then he'll be about 150, which isn't bad for a baby boomer, but he expects his son, now 60, to live a lot longer than that.

The world that Schwartz lives in is radically different from the one he grew up in. The industrial and information age has passed into history, overtaken by a revolution in bioscience that began around

Link to the complete article

22:29 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: Positive Technology

May 03, 2006

Warriors of the future will 'taste' battlefield

Via CNN

PENSACOLA, Florida (AP) -- In their quest to create the super warrior of the future, some military researchers aren't focusing on organs like muscles or hearts. They're looking at tongues.

By routing signals from helmet-mounted cameras, sonar and other equipment through the tongue to the brain, they hope to give elite soldiers superhuman senses similar to owls, snakes and fish.

Researchers at the Florida Institute for Human and Machine Cognition envision their work giving Army Rangers 360-degree unobstructed vision at night and allowing Navy SEALs to sense sonar in their heads while maintaining normal vision underwater -- turning sci-fi into reality.

The device, known as "Brain Port," was pioneered more than 30 years ago by Dr. Paul Bach-y-Rita, a University of Wisconsin neuroscientist. Bach-y-Rita began routing images from a camera through electrodes taped to people's backs and later discovered the tongue was a superior transmitter...

Continue to read the full article

14:58 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: Positive Technology

Apr 22, 2006

Neuromarketing: Brain Fitness Concept Challenged

13:07 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: Positive Technology

Apr 21, 2006

Intelligent Medical Implants Reports Artificial Vision Breakthrough

"The provoked visual perceptions were pleasant, according to the patients, and this was the first time they had seen something in many years-- in one case, several decades. Understandably, they reacted emotionally to their visual experience."

Hans-Juergen Tiedtke

Chief Executive Officer

IIP-Technologies GmbH

IIP-Technologies GmbH, on behalf of its parent company Intelligent Medical Implants AG ("IMI") (www.intmedimplants.com), announced today that a limited clinical study related to its ongoing Early Human Trial has demonstrated that IMI's patented, first-generation Learning Retinal Implant(TM) enabled patients to see light - as well as simple patterns - via a wireless transmission of data and energy. This is the first time in the history of the development of artificial vision that completely wireless transmission of data and energy into an implant in the eye of long-time blind persons has resulted in pattern recognition.

The Learning Retinal Implant has been successfully implanted in four patients for a duration of up to 13 weeks to date (the first implantation occurred in late-November 2005). Subsequent clinical testing of the IMI device with these patients commenced on schedule beginning in January 2006 at the University of Hamburg (Germany) Medical School under principal investigator Prof. Gisbert Richard, Professor of Ophthalmology. Each of the implantations has been "extremely well-tolerated" and fixation of the implant has been "stable, with no inflammatory reactions," according to Prof. Richard.

"Each of these blind persons had no visual perception at all, yet upon wireless stimulation of the retina via the Learning Retinal Implant, they were able to 'see' something," said Hans-Juergen Tiedtke, CEO of IIP-Technologies, a subsidiary of IMI. "One patient, for example, a 65-year-old female from Marienberg, Germany, has not had sight for more than a half century. From early childhood she has suffered from RP, meaning that she has not seen normally for more than 60 years. Nevertheless, in her first pattern recognition test, she described continuous objects such as a half circle. There is no doubt that this result is extremely positive, given that she has had no sight for almost her entire life, yet was still able to immediately receive a visual perception from electrical stimulation.

"While further clinical testing is needed and planned, we are truly delighted by these early results. It is our expectation that, in the not-too-distant future, our Learning Retinal Implant System, along with rehabilitation, may allow patients to recognize objects by identifying their size, as well as their position, movements and shapes. In other words, a blind person, using our Learning Retinal Implant System, is expected to be able to move independently in an unfamiliar environment--thus enabling him or her to lead an autonomous life. Indeed, development of a wireless visual prosthesis that could be implanted permanently with good results is the 'Holy Grail' of artificial vision," added Mr. Tiedtke.

IMI's initial clinical-indication focus is blind persons with Retinitis Pigmentosa ('RP'), one of the two most common causes of vision loss in persons over the age of 50 by hereditary degenerative retinal diseases. RP is considered irreversible, and no treatment or cure is known to date. Several million people are affected worldwide.

13:30 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: Positive Technology

Apr 14, 2006

Emotional Social Intelligence Prosthetic

Via Wired

Wired reports that MIT researchers are developing a wearable computer with a computational model of "mind-reading".

The prosthetic device should be able to infer affective-cognitive mental states from head and facial displays of people in real time, and communicate these inferences to the wearer via sound and/or tactile feedback.

According to MIT researchers, the system could help people afflicted by autism (who lack the ability to ascertain others' emotional status) in doing the "mind reading". A broader motivation of the project is to explore the role of technology in promoting social-emotional intelligence of people and to understand more about how social-emotional skills interact with learning.

A prototype of ESIP was unveiled at the Body Sensor Networks 2006 international workshop at MIT's Media Lab last week. The video cameras captured facial expressions and head movements, then fed the information to a desktop computer that analyzed the data and gave real-time estimates of the individuals' mental states, in the form of color-coded graphs.

19:20 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: Positive Technology

Mar 27, 2006

Wired: brain-training game

Can a new generation of mind-training games actually make you smarter?

Commentary by Clive Thompson (Wired)

A while ago, the science writer Steven Johnson was looking at an old IQ test known as the "Raven Progressive Matrices." Developed in the 1930s, it shows you a set of geometric shapes and challenges you to figure out the next one in the series. It's supposed to determine your ability to do abstract reasoning, but as Johnson looked at the little cubic Raven figures, he was struck by something: They looked like Tetris.

A light bulb went off. If Tetris looked precisely like an IQ test, then maybe playing Tetris would help you do better at intelligence tests. Johnson spun this conceit into his brilliant book of last year, Everything Bad Is Good For You, in which he argued that video games actually make gamers smarter. With their byzantine key commands, obtuse rule-sets and dynamic simulations of everything from water physics to social networks, Johnson argued, video games require so much cognitive activity that they turn us into Baby Einsteins -- not dull robots.

I loved the book, but it made me wonder: If games can inadvertently train your brain, why doesn't someone make a game that does so intentionally?

I should have patented the idea. Next month, Nintendo is releasing Brain Age, a DS game based on the research of the Japanese neuroscientist Ryuta Kawashima.

Read the full article

18:19 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: Positive Technology

Mar 26, 2006

Tango!

Via Engadget

A company named AbleNet is busting out an interesting new handheld device called Tango! which does the "AAC" thing (Augmentative and Alternative Communication - new one on us) to help kids learn English, or for those that are hearing or speech impaired, allowing for the easy creation of sentences. You can use predetermined phrases by punching through a few sub-categories, or make your own with the device's intuitive interface. The Tango! also allows the creation of your own items; the built-in camera can capture a new image to serve as an icon, and then an adult can record the correct phrase for the item, even with a simulated child's voice. The device can expand its functionality via USB, CompactFlash, and SD, with the option to use a keyboard, or even work over a cellphone down the line. Sadly the unit runs for a steep $6899, but hopefully AbleNet can get some quantity orders in and cut that down a bit.

20:40 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: Positive Technology

Mar 08, 2006

Electronic Memory Aids for Elderly Persons

Electronic Memory Aids for Community-Dwelling Elderly Persons: Attitudes, Preferences, and Potential Utilization

Cohen-Mansfield, Jiska, Creedon, Michael A., Malone, Thomas B., Kirkpatrick III, Mark J., Dutra, Lisa A., Herman, Randy Perse. The Journal of Applied Gerontology, Vol. 24 No. 1, February 2005 3-20.

This article focuses on the attitudes of community-dwelling elderly persons toward the use of electronic memory aids. Questionnaire data from 100 elderly volunteers indicate that more than one half were interested in an electronic memory device for at least one purpose. Those who said that they would use the device had higher levels of education, used more household electronic devices, were more likely to have someone available to help them use a device, and had more health problems than those who preferred to not use it. Most would use a memory aid to monitor medications and remember appointments, followed by remembering addresses and phone numbers. The expected use, design, preferred methods of instruction, and concerns about the device varied. Study results suggest the need to develop devices with different degrees of flexibility and complexity. Future studies should evaluate training methods to use such technology.

14:33 Posted in Brain training & cognitive enhancement | Permalink | Comments (0) | Tags: Positive Technology