Oct 07, 2006

GameScenes

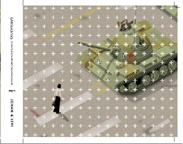

GameScenes. Art in the Age of Videogames is the first volume entirely dedicated to Game Art. Edited by Matteo Bittanti and Domenico Quaranta, GameScenes provides a detailed overview of the emerging field of Game Art, examining the complex interaction and intersection of art and videogames.

Video and computer game technologies have opened up new possibilities for artistic creation, distribution, and appreciation. In addition to projects that might conventionally be described as Internet Art, Digital Art or New Media Art, there is now a wide spectrum of work by practitioners that crosses the boundaries between various disciplines and practices. The common denominator is that all these practitioners use digital games as their tools or source of inspiration to make art. They are called Game Artists.

GameScenes explores the rapidly expanding world of Game Art in the works of over 30 international artists. Included are several milestones in this field, as well as some lesser known works. In addition to the editors' critical texts, the book contains contributions from a variety of international scholars that illustrate, explain, and contextualize the various artifacts.

M. Bittanti, D. Quaranta (editors), GameScenes. Art in the Age of Videogames, Milan, Johan & Levi 2006. Hardcover, 454 pages, 25 x 25 cm, 200+ hi-res illustrations, available from October 2006.

17:26 Posted in Cyberart | Tags: cyberart

Song and speech

Song and speech: brain regions involved with perception and covert production.

Neuroimage. 2006 Jul 1;31(3):1327-42

Authors: Callan DE, Tsytsarev V, Hanakawa T, Callan AM, Katsuhara M, Fukuyama H, Turner R

This 3-T fMRI study investigates brain regions similarly and differentially involved with listening to and covert production of singing relative to speech. Given the greater use of auditory-motor self-monitoring and imagery with respect to consonance in singing, brain regions involved with these processes are predicted to be differentially active for singing more than for speech. The stimuli consisted of six Japanese songs. A block design was employed in which the subject's tasks were to listen passively to singing of the song lyrics, listen passively to speaking of the song lyrics, covertly sing the visually presented song lyrics, covertly speak the visually presented song lyrics, and rest. The conjunction of passive listening and covert production tasks used in this study allows general neural processes underlying both perception and production to be discerned that are not exclusively a result of stimulus-induced auditory processing or of low-level articulatory motor control. Brain regions involved with both perception and production for singing as well as speech were found to include the left planum temporale/superior temporal parietal region, as well as left and right premotor cortex, the lateral aspect of the VI lobule of posterior cerebellum, anterior superior temporal gyrus, and planum polare. Greater activity for the singing over the speech condition for both the listening and covert production tasks was found in the right planum temporale (PT). Greater activity in brain regions involved with consonance (orbitofrontal cortex in the listening task, subcallosal cingulate in the covert production task) was also present for singing over speech. The results are consistent with the PT mediating representational transformation across auditory and motor domains in response to consonance for singing over that of speech.
Hemispheric laterality was assessed by paired t tests between active voxels in the contrast of interest and the left-right flipped contrast of interest, calculated from images normalized to the left-right reflected template. Consistent with some hypotheses regarding hemispheric specialization, differential laterality for speech over singing (both covert production and listening tasks) occurs in the left temporal lobe, whereas differential laterality for singing over speech (listening task only) occurs in the right temporal lobe.
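The laterality test boils down to a paired comparison: each subject's contrast value at a voxel is paired with the value at the same coordinate in that subject's left-right flipped image. Here is a toy sketch of that arithmetic in Python. It assumes each subject's contrast is a 2D slice stored as a list of rows, with columns running from one hemisphere to the other. The function names are hypothetical, and the study itself flipped images by normalizing them to a left-right reflected template in a full fMRI pipeline, which this does not reproduce.

```python
import math
from statistics import mean, stdev


def flip_left_right(image):
    """Mirror a 2D slice (list of rows) along the left-right (column) axis."""
    return [list(reversed(row)) for row in image]


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


def laterality_t(subject_slices, row, col):
    """Pair each subject's value at (row, col) with the value at the same
    coordinate in that subject's flipped image (i.e. the mirror location).
    A positive t means more activity at (row, col) than at its mirror."""
    original = [s[row][col] for s in subject_slices]
    flipped = [flip_left_right(s)[row][col] for s in subject_slices]
    return paired_t(original, flipped)
```

In a real analysis this comparison would be run only over voxels that are active in the contrast of interest, with the usual correction for multiple comparisons.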

17:18 Posted in Mental practice & mental simulation | Tags: mental practice

Oct 06, 2006

DietMate Weight Loss Computer

Via Mindware Forum

DietMate is a hand-held computer that provides a program of weight loss, cholesterol reduction, and hypertension control. DietMate is made by Personal Improvement Computer Systems (PICS), which also makes two other tiny computerized mindgadgets: SleepKey Insomnia Treatment Hand-held Computer and the QuitKey Smoking Cessation Hand-Held Computer.

From the PICS website:

DietMate provides a sophisticated, yet easy to use, nutrition and exercise program that is tailored to each user's nutritional requirements, food preferences, and habits. By providing hundreds of nutritionally balanced menus which can be customized as desired, DietMate picks up where calorie counters leave off.

DietMate also tracks calories, fat, saturated fat, cholesterol and sodium. It provides daily nutritional targets, charts progress and even creates a shopping list. DietMate has been proven effective in both weight and cholesterol reduction, in clinical studies funded by the National Heart, Lung, and Blood Institute.

10:05 Posted in Cybertherapy, Persuasive technology | Tags: persuasive technology, cybertherapy, hand-held computer

fMRI-compatible rehabilitation hand device

Background: Functional magnetic resonance imaging (fMRI) has been widely used in studying human brain functions and neurorehabilitation. In order to develop complex and well-controlled fMRI paradigms, interfaces that can precisely control and measure output force and kinematics of the movements in human subjects are needed. Optimized state-of-the-art fMRI methods, combined with magnetic resonance (MR) compatible robotic devices for rehabilitation, can assist therapists to quantify, monitor, and improve physical rehabilitation. To achieve this goal, robotic or mechatronic devices with actuators and sensors need to be introduced into an MR environment. Common standard mechanical parts cannot be used in an MR environment, and MR compatibility has been a tough hurdle for device developers.

Methods: This paper presents the design, fabrication and preliminary testing of a novel, one-degree-of-freedom, MR-compatible, computer-controlled, variable-resistance hand device that may be used in brain MR imaging during hand grip rehabilitation. We named the device MR_CHIROD (Magnetic Resonance Compatible Smart Hand Interfaced Rehabilitation Device). A novel feature of the device is the use of Electro-Rheological Fluids (ERFs) to achieve tunable and controllable resistive force generation. ERFs are fluids that experience dramatic changes in rheological properties, such as viscosity or yield stress, in the presence of an electric field. The device consists of four major subsystems: (a) an ERF-based resistive element; (b) a gearbox; (c) two handles; and (d) two sensors (an optical encoder and a force sensor) to measure patient-induced motion and force. The smart hand device is designed to resist up to 50% of the maximum level of gripping force of a human hand and to be controlled in real time.

Results: Laboratory tests of the device indicate that it was able to meet its design objective to resist up to approximately 50% of the maximum handgrip force. The detailed compatibility tests demonstrated that the MR environment affected neither the ERF properties nor the performance of the sensors, and that introducing the MR_CHIROD into the MR scanner caused no significant degradation of MR images.

Conclusions: The MR compatible hand device was built to aid in the study of brain function during generation of controllable and tunable force during handgrip exercising. The device was shown to be MR compatible. To the best of our knowledge, this is the first system that uses ERFs in an MR environment.

09:50 Posted in AI & robotics, Research tools | Tags: robotics, cybertherapy

Oct 05, 2006

First Teleportation Between Light and Matter

From SCIAM

Scientific American online reports on a physics experiment conducted by Eugene Polzik and his colleagues at the Niels Bohr Institute in Copenhagen that recalls the "Beam-me-up, Scotty" technology of Star Trek:

At long last researchers have teleported the information stored in a beam of light into a cloud of atoms, which is about as close to getting beamed up by Scotty as we're likely to come in the foreseeable future. More practically, the demonstration is key to eventually harnessing quantum effects for hyperpowerful computing or ultrasecure encryption systems. Quantum computers or cryptography networks would take advantage of entanglement, in which two distant particles share a complementary quantum state. In some conceptions of these devices, quantum states that act as units of information would have to be transferred from one group of atoms to another in the form of light. Because measuring any quantum state destroys it, that information cannot simply be measured and copied. Researchers have long known that this obstacle can be finessed by a process called teleportation, but they had only demonstrated this method between light beams or between atoms...

Read the full story

23:31 Posted in Telepresence & virtual presence | Tags: telepresence

VR for teaching airway management in trauma

New equipment and techniques for airway management in trauma.

Curr Opin Anaesthesiol. 2001 Apr;14(2):197-209

Authors: Smith CE, Dejoy SJ

A patent, unobstructed airway is fundamental in the care of the trauma patient, and is most often obtained by placing a cuffed tube in the trachea. The presence of shock, respiratory distress, a full stomach, maxillofacial trauma, neck hematoma, laryngeal disruption, cervical spine instability, and head injury all combine to increase tracheal intubation difficulty in the trauma patient. Complications resulting from intubation difficulties include brain injury, aspiration, trauma to the airway, and death. The use of devices such as the gum-elastic bougie, McCoy laryngoscope, flexible and rigid fiberscopes, intubating laryngeal mask, light wand, and techniques such as rapid-sequence intubation, manual in-line axial stabilization, retrograde intubation, and cricothyroidotomy, enhance the ability to obtain a definitive airway safely. The management of the failed airway includes calling for assistance, optimal two-person bag-mask ventilation, and the use of the laryngeal mask airway, Combitube, or surgical airway. The simulation of airway management using realistic simulator tools (e.g. full-scale simulators, virtual reality airway simulators) is a promising modality for teaching physicians and advanced life support personnel emergency airway management skills.

23:09 Posted in Virtual worlds | Tags: virtual reality, virtual training

It is still a watch, however

The MBW-100 watch connects via Bluetooth 2.0 to selected Sony Ericsson handsets (including the K790 and K610 models). The stainless-steel, 6.6-ounce watch has analog dials and an OLED screen, and it displays caller ID information. It also lets you control a variety of phone functions, such as call handling (answering, rejecting, or muting calls) and music playback (playing, pausing, and skipping tracks).

22:54 Posted in Wearable & mobile | Tags: wearable, mobile

Teliris Launches VirtuaLive with HSL's Thoughts and Analysis

Teliris, a company that develops telepresence solutions, has announced its 4th generation offering. From the press release:

The VirtuaLive(TM) enhanced technology provides the most natural and intimate virtual meeting environment on the market, and is available in a broad set of room offerings designed to meet the specific needs of its customers.

Building on Teliris' third generation GlobalTable(TM) telepresence solutions, VirtuaLive(TM) provides enhanced quality video and broadband audio, realistically replicating an in-person meeting experience by capturing and transmitting the most subtle visual gestures and auditory cues.

"All future Teliris solutions will fall under the VirtuaLive(TM) umbrella of offerings," said Marc Trachtenberg, Teliris CEO. "With such an advanced technology platform and range of solutions, companies can select the immersive experience that best fits their business environment and goals."

VirtuaLive's(TM) next generation of Virtual Vectoring(TM) is at the center of the new offerings. It provides users with unparalleled eye-to-eye contact from site-to-site in multipoint meetings with various numbers of participants within each room. No other vendor offering can match the natural experience created by advanced technology in such diverse environments.

22:42 Posted in Telepresence & virtual presence | Tags: presence, social presence, telepresence

Social behavior and norms in virtual environments are comparable to those in the physical world

Nick Yee and colleagues at Stanford University have investigated whether social behavior and norms in virtual environments are comparable to those in the physical world. To this end, they collected data from avatars in Second Life to explore whether social norms of gender, interpersonal distance (IPD), and eye gaze transfer into virtual environments even though the modality of movement is entirely different. "Results showed that established findings of IPD and eye gaze transfer into virtual environments: 1) male-male dyads have larger IPDs than female-female dyads, 2) male-male dyads maintain less eye contact than female-female dyads, and 3) decreases in IPD are compensated with gaze avoidance." According to Yee and colleagues, these findings suggest that social interactions in online virtual environments are governed by the same social norms as social interactions in the physical world.

Yee, N., Bailenson, J.N. & Urbanek, M. (2006). The unbearable likeness of being digital: The persistence of nonverbal social norms in online virtual environments. CyberPsychology & Behavior, in press.
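Interpersonal distance in a virtual world is simply the distance between two avatars' positions. The sketch below shows how avatar coordinates could be grouped by dyad type and averaged for the kind of male-male versus female-female comparison Yee and colleagues report. The function names and the (gender_a, gender_b, pos_a, pos_b) record format are assumptions for illustration, not the study's actual data format or code.

```python
import math
from collections import defaultdict
from statistics import mean


def ipd(pos_a, pos_b):
    """Interpersonal distance: Euclidean distance between two avatar positions."""
    return math.dist(pos_a, pos_b)


def mean_ipd_by_dyad(observations):
    """observations: iterable of (gender_a, gender_b, pos_a, pos_b) tuples,
    where positions are coordinate tuples such as (x, y, z).
    Returns the mean IPD for each dyad type, keyed by an order-independent
    label such as 'female-female', 'female-male' or 'male-male'."""
    by_type = defaultdict(list)
    for g_a, g_b, p_a, p_b in observations:
        dyad = "-".join(sorted((g_a, g_b)))  # ('male', 'female') -> 'female-male'
        by_type[dyad].append(ipd(p_a, p_b))
    return {dyad: mean(values) for dyad, values in by_type.items()}
```

Sorting the two genders before joining them means a male-female pair and a female-male pair land in the same bucket.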

22:00 Posted in Telepresence & virtual presence | Tags: presence, social presence, telepresence

Oct 04, 2006

The neural basis of narrative imagery

The neural basis of narrative imagery: emotion and action.

Prog Brain Res. 2006;156:93-103

Authors: Sabatinelli D, Lang PJ, Bradley MM, Flaisch T

It has been proposed that narrative emotional imagery activates an associative network of stimulus, semantic, and response (procedural) information. In previous research, predicted response components have been demonstrated through psychophysiological methods in the peripheral nervous system. Here we investigate central nervous system concomitants of pleasant, neutral, and unpleasant narrative imagery with functional magnetic resonance imaging. Subjects were presented with brief narrative scripts over headphones, and then imagined themselves engaged in the described events. During script perception, auditory association cortex showed enhanced activation during affectively arousing (pleasant and unpleasant), relative to neutral imagery. Structures involved in language processing (left middle frontal gyrus) and spatial navigation (retrosplenium) were also active during script presentation. At the onset of narrative imagery, supplementary motor area, lateral cerebellum, and left inferior frontal gyrus were initiated, showing enhanced signal change during affectively arousing (pleasant and unpleasant), relative to neutral scripts. These data are consistent with a bioinformational model of emotion that considers response mobilization as the measurable output of narrative imagery.

22:43 Posted in Mental practice & mental simulation | Tags: mental practice

Science & Consciousness Review back to life

Science & Consciousness Review, the online webzine/journal for the review of the scientific study of consciousness, is back online after a crash several months ago.

22:40 Posted in Positive Technology events | Tags: science and consciousness

Affective communication in the metaverse

Via IEET

Have a look at this thought-provoking article by Russell Blackford on affective communication in mediated environments:

One of the main conclusions I’ve been coming to in my research on the moral issues surrounding emerging technologies is the danger that they will be used in ways that undermine affective communication between human beings - something on which our ability to bond into societies and show moment-by-moment sympathy for each other depends. Anthropological and neurological studies have increasingly confirmed that human beings have a repertoire of communication by facial expression, voice tone, and body language that is largely cross-cultural, and which surely evolved as we evolved as social animals.

The importance of this affective repertoire can be seen in the frequent complaints in internet forums that, “I misunderstood because I couldn’t hear your tone of voice or see the expression on your face.” The internet has evolved emoticons as a partial solution to the problem, but flame wars still break out over observations that would lead to nothing like such violent verbal responses if those involved were discussing the same matters face to face, or even on the telephone. I almost never encounter truly angry exchanges in real life, though I may be a bit sheltered, of course, but I see them on the internet all the time. Partly, it seems to be that people genuinely misunderstand where others are coming from with the restricted affective cues available. Partly, however, it seems that people are more prepared to lash out hurtfully in circumstances where they are not held in check by the angry or shocked looks and the raised voices they would encounter if they acted in the same way in real life.

This is one reason to be slightly wary of the internet. It’s not a reason to ban the internet, which produces all sorts of extraordinary utilitarian benefits. Indeed, even the internet’s constraint on affective communication may have advantages - it may free up shy people to say things that they would be too afraid to say in real life.

Read the full article

22:30 Posted in Future interfaces | Tags: affective computing

Oct 03, 2006

Netflix Prize

Netflix, an online movie rental service, is offering $1 million to the first person who can improve by 10% the accuracy of its movie recommendations, which are based on users' personal preferences. From the website:

The Netflix Prize seeks to substantially improve the accuracy of predictions about how much someone is going to love a movie based on their movie preferences. Improve it enough and you win one (or more) Prizes. Winning the Netflix Prize improves our ability to connect people to the movies they love.
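Netflix scores entries by root mean squared error (RMSE) on held-out ratings, and the 10% target is measured against the RMSE of its own Cinematch recommender. Here is a minimal sketch of that arithmetic. The function names are illustrative and are not part of any official Netflix Prize tooling.

```python
import math


def rmse(predicted, actual):
    """Root mean squared error between predicted and actual star ratings."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("need two non-empty rating lists of equal length")
    squared = sum((p - a) ** 2 for p, a in zip(predicted, actual))
    return math.sqrt(squared / len(actual))


def improvement_pct(baseline_rmse, new_rmse):
    """Percentage reduction in RMSE relative to a baseline system."""
    return 100.0 * (baseline_rmse - new_rmse) / baseline_rmse


def meets_target(baseline_rmse, new_rmse, target_pct=10.0):
    """True if new_rmse beats the baseline by at least target_pct percent.
    A tiny tolerance absorbs floating-point error at exactly the threshold."""
    return improvement_pct(baseline_rmse, new_rmse) >= target_pct - 1e-9
```

Because the target is relative, a system that cuts RMSE from 1.0 to 0.9 would just qualify. Lower RMSE means predictions closer to the ratings people actually gave.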

12:55 Posted in Research institutions & funding opportunities | Tags: research awards

Oct 02, 2006

Web Journals Take On Peer Review

LOS ANGELES - Scientists frustrated by the iron grip that academic journals hold over their research can now pursue another path to fame by taking their research straight to the public online.

Instead of having a group of hand-picked scholars review research in secret before publication, a growing number of internet-based journals are publishing studies with little or no scrutiny by the authors' peers. It's then up to rank-and-file researchers to debate the value of the work in cyberspace.

The web journals are threatening to turn the traditional peer-review system on its head. Peer review for decades has been the established way to pick apart research before it's made public.

Next month, the San Francisco-based nonprofit Public Library of Science will launch its first open peer-reviewed journal called PLoS ONE, focusing on science and medicine. Like its sister publications, it will make research articles available for free online by charging authors to publish.

Read the full story

17:03 Posted in Research tools | Tags: research tools

Japan to invest US$17.4 million in robotics research

Japan’s Ministry of Economy, Trade and Industry (METI) will invest over 2 billion yen (US$17.4 million) to support the development of intelligent robots that can make their own decisions in the workplace. The objective of METI’s robot budget is to support the development of key artificial intelligence technologies for robots over the next 5 years, with the goal of bringing intelligent robots to market by 2015.

11:00 Posted in AI & robotics, Research institutions & funding opportunities | Tags: artificial intelligence, robotics

Oct 01, 2006

Color of My Sound

Color of My Sound is an Internet-based application that allows users to assign colors to specific sounds. The project is inspired by synesthesia, the phenomenon in which the senses mix.

In Color of My Sound (CMS), users choose a sound category. After listening to a sound, they choose the color to which they are most strongly drawn. Finally, they can see how others voted for that particular sound.
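The voting loop described above maps naturally onto a per-sound tally. Here is a hypothetical sketch. The class and method names are made up, and nothing is known about how the real site stores or aggregates votes.

```python
from collections import Counter, defaultdict


class ColorVotes:
    """Hypothetical tally of which color listeners associate with each sound."""

    def __init__(self):
        # sound id -> Counter mapping color name to number of votes
        self._votes = defaultdict(Counter)

    def vote(self, sound_id, color):
        """Record one listener's color choice for a sound."""
        self._votes[sound_id][color] += 1

    def distribution(self, sound_id):
        """Share of votes per color for one sound, most popular first.
        Returns an empty list for a sound nobody has voted on yet."""
        counts = self._votes.get(sound_id, Counter())
        total = sum(counts.values())
        if total == 0:
            return []
        return [(color, n / total) for color, n in counts.most_common()]
```

Showing shares rather than raw counts lets a new voter compare their choice with everyone else's, whatever the number of votes.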

The Color of My Sound's original prototype recently won a Silver Summit Creative Award and is up for a 2006 Webby in the NetArt category.

See also Music Animation Machine and WolframTones.

14:30 Posted in Emotional computing | Tags: emotional computing

SCACS

Re-blogged from information aesthetics

SCACS is a "Social Context-Aware Communication System" that collects information on social networks (e.g., academic co-authorship networks) and visualizes it on wearable interfaces to facilitate face-to-face communication among people in physical environments. RFID sensors detect the identity of specific people (e.g., authors) nearby, and a wearable computer transforms the complex social network graphs into treemaps, which are then shown as augmented reality on a wearable interface (or head-mounted display).
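SCACS's own layout code isn't described here, so the sketch below uses the classic slice-and-dice treemap algorithm as a stand-in. Each node's rectangle is divided into strips proportional to its children's weights, alternating between vertical and horizontal cuts at each level. The sketch assumes the social network has already been reduced to a tree, for instance a nearby author, their co-authors, and a weight such as the number of co-authored papers. That reduction and the Node class are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    weight: float = 0.0  # used only for leaves
    children: list = field(default_factory=list)

    def total(self):
        """Leaf weight, or the summed weight of all descendants."""
        if not self.children:
            return self.weight
        return sum(c.total() for c in self.children)


def slice_and_dice(node, x, y, w, h, depth=0, out=None):
    """Return (name, x, y, width, height) rectangles for node and its subtree.
    Children get strips proportional to their total weight: split along the
    x axis at even depths and along the y axis at odd depths."""
    if out is None:
        out = []
    out.append((node.name, x, y, w, h))
    total = node.total()
    if not node.children or total == 0:
        return out
    offset = 0.0  # fraction of the parent rectangle already used
    for child in node.children:
        share = child.total() / total
        if depth % 2 == 0:
            slice_and_dice(child, x + offset * w, y, share * w, h, depth + 1, out)
        else:
            slice_and_dice(child, x, y + offset * h, w, share * h, depth + 1, out)
        offset += share
    return out
```

Slice-and-dice is easy to follow but can produce long, thin rectangles. Small displays such as head-mounted ones often favor squarified layouts for that reason.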

link: aist-nara.ac.jp (pdf)

12:51 Posted in Augmented/mixed reality, Wearable & mobile | Tags: pervasive technology, augmented reality, mobile computing

HiResolution Bionic Ear System

Medgadget reports that Boston Scientific has received FDA approval for its Harmony™ HiResolution® Bionic Ear System, a cochlear implant designed for severely deaf patients. From the press release: Developed by the Company's Neuromodulation Group, the Harmony System delivers 120 spectral bands, 5 to 10 times more than competing systems, helping to significantly increase hearing potential and quality of life for the severe-to-profoundly deaf.

12:06 Posted in Brain training & cognitive enhancement | Tags: assisted cognition, bionics