Jul 30, 2013

How it feels (through Google Glass)

14:12 Posted in Augmented/mixed reality, Wearable & mobile | Permalink | Comments (0)

Jul 23, 2013

A mobile data collection platform for mental health research

22:57 Posted in Pervasive computing, Research tools, Self-Tracking, Wearable & mobile | Permalink | Comments (0)

Augmented Reality - Projection Mapping

22:50 Posted in Augmented/mixed reality, Blue sky, Cyberart | Permalink | Comments (0)

The beginning of infinity

A little old video but still inspiring...

THE BEGINNING OF INFINITY from Jason Silva on Vimeo.

22:46 Posted in Blue sky | Permalink | Comments (0)

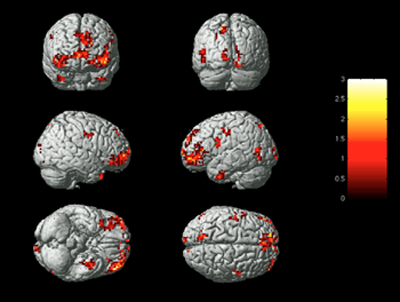

Neural Reorganization Accompanying Upper Limb Motor Rehabilitation from Stroke with Virtual Reality-Based Gesture Therapy

Neural Reorganization Accompanying Upper Limb Motor Rehabilitation from Stroke with Virtual Reality-Based Gesture Therapy.

Top Stroke Rehabil. 2013 May-June 1;20(3):197-209

Authors: Orihuela-Espina F, Fernández Del Castillo I, Palafox L, Pasaye E, Sánchez-Villavicencio I, Leder R, Franco JH, Sucar LE

Background: Gesture Therapy is an upper limb virtual reality rehabilitation-based therapy for stroke survivors. It promotes motor rehabilitation by challenging patients with simple computer games representative of daily activities for self-support. This therapy has demonstrated clinical value, but the underlying functional neural reorganization changes associated with this therapy that are responsible for the behavioral improvements are not yet known. Objective: We sought to quantify the occurrence of neural reorganization strategies that underlie motor improvements as they occur during the practice of Gesture Therapy and to identify those strategies linked to a better prognosis. Methods: Functional magnetic resonance imaging (fMRI) neuroscans were longitudinally collected at 4 time points during Gesture Therapy administration to 8 patients. Behavioral improvements were monitored using the Fugl-Meyer scale and Motricity Index. Activation loci were anatomically labelled and translated to reorganization strategies. Strategies are quantified by counting the number of active clusters in brain regions tied to them. Results: All patients demonstrated significant behavioral improvements (P < .05). Contralesional activation of the unaffected motor cortex, cerebellar recruitment, and compensatory prefrontal cortex activation were the most prominent strategies evoked. A strong and significant correlation between motor dexterity upon commencing therapy and total recruited activity was found (r² = 0.80; P < .05), and overall brain activity during therapy was inversely related to normalized behavioral improvements (r² = 0.64; P < .05). Conclusions: Prefrontal cortex and cerebellar activity are the driving forces of the recovery associated with Gesture Therapy. The relation between behavioral and brain changes suggests that those with stronger impairment benefit the most from this paradigm.

22:32 | Permalink | Comments (0)

Illusory ownership of a virtual child body causes overestimation of object sizes and implicit attitude changes

Illusory ownership of a virtual child body causes overestimation of object sizes and implicit attitude changes.

Proc Natl Acad Sci USA. 2013 Jul 15;

Authors: Banakou D, Groten R, Slater M

Abstract. An illusory sensation of ownership over a surrogate limb or whole body can be induced through specific forms of multisensory stimulation, such as synchronous visuotactile tapping on the hidden real and visible rubber hand in the rubber hand illusion. Such methods have been used to induce ownership over a manikin and a virtual body that substitute the real body, as seen from first-person perspective, through a head-mounted display. However, the perceptual and behavioral consequences of such transformed body ownership have hardly been explored. In Exp. 1, immersive virtual reality was used to embody 30 adults as a 4-y-old child (condition C), and as an adult body scaled to the same height as the child (condition A), experienced from the first-person perspective, and with virtual and real body movements synchronized. The result was a strong body-ownership illusion equally for C and A. Moreover there was an overestimation of the sizes of objects compared with a nonembodied baseline, which was significantly greater for C compared with A. An implicit association test showed that C resulted in significantly faster reaction times for the classification of self with child-like compared with adult-like attributes. Exp. 2 with an additional 16 participants extinguished the ownership illusion by using visuomotor asynchrony, with all else equal. The size-estimation and implicit association test differences between C and A were also extinguished. We conclude that there are perceptual and probably behavioral correlates of body-ownership illusions that occur as a function of the type of body in which embodiment occurs.

22:28 Posted in Research tools, Telepresence & virtual presence, Virtual worlds | Permalink | Comments (0)

The Size of Electronic Consumer Devices Affects Our Behavior

Re-blogged from Textually.org

What are our devices doing to us? We already know they're snuffing our creativity - but new research suggests they're also stifling our drive. How so? Because fussing with them on average 58 minutes a day leads to bad posture, FastCompany reports.

The body posture inherent in operating everyday gadgets affects not only your back, but your demeanor, reports a new experimental study entitled iPosture: The Size of Electronic Consumer Devices Affects Our Behavior. It turns out that working on a relatively large machine (like a desktop computer) causes users to act more assertively than working on a small one (like an iPad). That poor posture, Harvard Business School researchers Maarten Bos and Amy Cuddy find, undermines our assertiveness.

Read more.

22:23 Posted in Research tools, Wearable & mobile | Permalink | Comments (0)

SENSUS Transcutaneous Pain Management System Approved for Use During Sleep

Via Medgadget

NeuroMetrix, out of Waltham, MA, received FDA clearance for its SENSUS Pain Management System to be used by patients during sleep. This is the first transcutaneous electrical nerve stimulation system to receive a sleep indication from the FDA for pain control.

The device is designed for use by diabetics and others with chronic pain in the legs and feet. It’s worn around one or both legs and delivers an electrical current to disrupt pain signals being sent up to the brain.

22:18 Posted in Biofeedback & neurofeedback, Wearable & mobile | Permalink | Comments (0)

Digital Assistance for Sign-Language Users

Via MedGadget

From Microsoft:

"In this project—which is being shown during the DemoFest portion of Faculty Summit 2013, which brings more than 400 academic researchers to Microsoft headquarters to share insight into impactful research—the hand tracking leads to a process of 3-D motion-trajectory alignment and matching for individual words in sign language. The words are generated via hand tracking by the Kinect for Windows software and then normalized, and matching scores are computed to identify the most relevant candidates when a signed word is analyzed.

The algorithm for this 3-D trajectory matching, in turn, has enabled the construction of a system for sign-language recognition and translation, consisting of two modes. The first, Translation Mode, translates sign language into text or speech. The technology currently supports American sign language but has potential for all varieties of sign language."
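The normalize-then-score idea in the quote can be illustrated with a rough sketch. This is not Microsoft's algorithm: the resampling step, the centroid-and-scale normalization, the distance-based score, and the template words `hello` and `thanks` are all assumptions made up for illustration.

```python
import math

def resample(trajectory, n_points=16):
    """Linearly resample a list of (x, y, z) points to n_points samples."""
    last = len(trajectory) - 1
    out = []
    for i in range(n_points):
        t = i * last / (n_points - 1)
        lo = int(math.floor(t))
        hi = min(lo + 1, last)
        frac = t - lo
        out.append(tuple(a + frac * (b - a)
                         for a, b in zip(trajectory[lo], trajectory[hi])))
    return out

def normalize(trajectory):
    """Center on the centroid and scale so the farthest point is at distance 1."""
    n = len(trajectory)
    center = [sum(p[k] for p in trajectory) / n for k in range(3)]
    centered = [tuple(p[k] - center[k] for k in range(3)) for p in trajectory]
    scale = max(math.hypot(*p) for p in centered) or 1.0
    return [tuple(c / scale for c in p) for p in centered]

def matching_score(a, b):
    """Higher is better: negative mean point-to-point distance after normalization."""
    a, b = normalize(resample(a)), normalize(resample(b))
    return -sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)

def rank_candidates(query, templates):
    """Return template words ordered from best to worst match."""
    return sorted(templates,
                  key=lambda word: matching_score(query, templates[word]),
                  reverse=True)

# Hypothetical templates: a half-circle and a straight diagonal stroke.
templates = {
    "hello": [(math.cos(t / 10 * math.pi), math.sin(t / 10 * math.pi), 0.0)
              for t in range(11)],
    "thanks": [(t / 10, 0.0, t / 10) for t in range(11)],
}
# The query is the "hello" stroke, signed larger and in a different place.
query = [(2 * x + 5, 2 * y - 1, 2 * z + 3) for x, y, z in templates["hello"]]
candidates = rank_candidates(query, templates)
```

Because the normalization removes position and size, the shifted and enlarged query still ranks its own template first.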

12:39 Posted in Cognitive Informatics | Permalink | Comments (0)

Jul 18, 2013

How to see with your ears

Via: KurzweilAI.net

A participant wearing camera glasses and listening to the soundscape (credit: Alastair Haigh/Frontiers in Psychology)

A device that trains the brain to turn sounds into images could be used as an alternative to invasive treatment for blind and partially-sighted people, researchers at the University of Bath have found.

“The vOICe” is a visual-to-auditory sensory substitution device that encodes images taken by a camera worn by the user into “soundscapes” from which experienced users can extract information about their surroundings.

It helps blind people use sounds to build an image in their minds of the things around them.

A research team, led by Dr Michael Proulx, from the University’s Department of Psychology, looked at how blindfolded sighted participants would do on an eye test using the device.

Read full story

18:17 Posted in Brain training & cognitive enhancement, Neurotechnology & neuroinformatics | Permalink | Comments (0)

Advanced ‘artificial skin’ senses touch, humidity, and temperature

Artificial skin (credit: Technion)

Technion-Israel Institute of Technology scientists have discovered how to make a new kind of flexible sensor that one day could be integrated into “electronic skin” (e-skin), a covering for prosthetic limbs.

Read full story

18:14 Posted in Pervasive computing, Research tools, Wearable & mobile | Permalink | Comments (0)

US Army avatar role-play Experiment #3 now open for public registration

Military Open Simulator Enterprise Strategy (MOSES) is secure virtual world software designed to evaluate the ability of OpenSimulator to provide independent access to a persistent, virtual world. MOSES is a research project of the United States Army Simulation and Training Technology Center (STTC). STTC’s Virtual World Strategic Applications team uses OpenSimulator to add capability and flexibility to virtual training scenarios.

18:12 Posted in Research tools, Telepresence & virtual presence | Permalink | Comments (0)

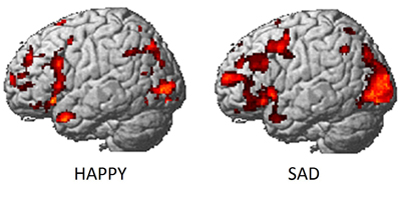

Identifying human emotions based on brain activity

For the first time, scientists at Carnegie Mellon University have identified which emotion a person is experiencing based on brain activity.

The study, published in the June 19 issue of PLOS ONE, combines functional magnetic resonance imaging (fMRI) and machine learning to measure brain signals to accurately read emotions in individuals. Led by researchers in CMU’s Dietrich College of Humanities and Social Sciences, the findings illustrate how the brain categorizes feelings, giving researchers the first reliable process to analyze emotions. Until now, research on emotions has long been stymied by the lack of reliable methods to evaluate them, mostly because people are often reluctant to honestly report their feelings. Further complicating matters is that many emotional responses may not be consciously experienced.

Identifying emotions based on neural activity builds on previous discoveries by CMU’s Marcel Just and Tom M. Mitchell, which used similar techniques to create a computational model that identifies individuals’ thoughts of concrete objects, often dubbed “mind reading.”

“This research introduces a new method with potential to identify emotions without relying on people’s ability to self-report,” said Karim Kassam, assistant professor of social and decision sciences and lead author of the study. “It could be used to assess an individual’s emotional response to almost any kind of stimulus, for example, a flag, a brand name or a political candidate.”

One challenge for the research team was to find a way to repeatedly and reliably evoke different emotional states from the participants. Traditional approaches, such as showing subjects emotion-inducing film clips, would likely have been unsuccessful because the impact of film clips diminishes with repeated display. The researchers solved the problem by recruiting actors from CMU’s School of Drama.

“Our big breakthrough was my colleague Karim Kassam’s idea of testing actors, who are experienced at cycling through emotional states. We were fortunate, in that respect, that CMU has a superb drama school,” said George Loewenstein, the Herbert A. Simon University Professor of Economics and Psychology.

For the study, 10 actors were scanned at CMU’s Scientific Imaging & Brain Research Center while viewing the words of nine emotions: anger, disgust, envy, fear, happiness, lust, pride, sadness and shame. While inside the fMRI scanner, the actors were instructed to enter each of these emotional states multiple times, in random order.

Another challenge was to ensure that the technique was measuring emotions per se, and not the act of trying to induce an emotion in oneself. To meet this challenge, a second phase of the study presented participants with neutral and disgusting photos that they had not seen before. The computer model, constructed by using statistical information to analyze the fMRI activation patterns gathered for 18 emotional words, had learned the emotion patterns from self-induced emotions. It was able to correctly identify the emotional content of photos being viewed using the brain activity of the viewers.

To identify emotions within the brain, the researchers first used the participants’ neural activation patterns in early scans to identify the emotions experienced by the same participants in later scans. The computer model achieved a rank accuracy of 0.84. Rank accuracy refers to the percentile rank of the correct emotion in an ordered list of the computer model guesses; random guessing would result in a rank accuracy of 0.50.
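The rank accuracy measure described above can be sketched in a few lines. This assumes the normalized-rank form (1.0 when the correct emotion is listed first, 0.0 when it is listed last); the function name and the example ordering of guesses are illustrative and not taken from the study.

```python
def rank_accuracy(ranked_guesses, correct):
    """Normalized rank of the correct label in a best-first list of guesses."""
    position = ranked_guesses.index(correct)  # 0 means the top guess
    return 1.0 - position / (len(ranked_guesses) - 1)

# Hypothetical model output for one scan, best guess first.
guesses = ["fear", "disgust", "anger", "shame", "sadness",
           "envy", "pride", "lust", "happiness"]

score = rank_accuracy(guesses, "disgust")  # second of nine guesses: 0.875

# Averaged over every possible position of the correct label, the score is 0.5,
# which is the chance level quoted in the article.
chance = sum(rank_accuracy(guesses, e) for e in guesses) / len(guesses)
```

Under this definition a model that always places the correct emotion in the top half of its list scores above 0.5 even when its first guess is wrong, which is why the article reports top-guess percentages separately.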

Next, the team took the machine learning analysis of the self-induced emotions to guess which emotion the subjects were experiencing when they were exposed to the disgusting photographs. The computer model achieved a rank accuracy of 0.91. With nine emotions to choose from, the model listed disgust as the most likely emotion 60 percent of the time and as one of its top two guesses 80 percent of the time.

Finally, they applied machine learning analysis of neural activation patterns from all but one of the participants to predict the emotions experienced by the hold-out participant. This answers an important question: If we took a new individual, put them in the scanner and exposed them to an emotional stimulus, how accurately could we identify their emotional reaction? Here, the model achieved a rank accuracy of 0.71, once again well above the chance guessing level of 0.50.
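The hold-out procedure in this paragraph is a leave-one-participant-out evaluation. Below is a minimal sketch on synthetic data; the nearest-centroid classifier is a stand-in chosen for brevity (the article does not say which classifier was used), and the feature vectors, participant ids, and three-emotion label set are invented for the example.

```python
import math
import random

def centroid(rows):
    """Element-wise mean of a list of equal-length feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]

def leave_one_participant_out(samples):
    """samples: list of (participant_id, emotion, feature_vector).

    For each participant, train on everyone else and return that
    participant's mean rank accuracy (1.0 = correct emotion always ranked first).
    """
    emotions = sorted({emotion for _, emotion, _ in samples})
    scores = {}
    for held_out in sorted({pid for pid, _, _ in samples}):
        train = [s for s in samples if s[0] != held_out]
        test = [s for s in samples if s[0] == held_out]
        # Stand-in model: one mean activation pattern per emotion.
        centroids = {e: centroid([x for _, label, x in train if label == e])
                     for e in emotions}
        accuracies = []
        for _, true_emotion, x in test:
            ranked = sorted(emotions, key=lambda e: math.dist(x, centroids[e]))
            position = ranked.index(true_emotion)
            accuracies.append(1.0 - position / (len(emotions) - 1))
        scores[held_out] = sum(accuracies) / len(accuracies)
    return scores

# Synthetic data: each emotion has a shared prototype pattern plus per-scan noise.
random.seed(0)
prototypes = {e: [random.gauss(0, 5) for _ in range(10)]
              for e in ("anger", "disgust", "happiness")}
samples = [(pid, emotion, [v + random.gauss(0, 0.5) for v in proto])
           for pid in range(4)
           for emotion, proto in prototypes.items()
           for _ in range(3)]

scores = leave_one_participant_out(samples)
```

Each entry in `scores` is computed without the model ever seeing that participant's scans, which is what makes the result an estimate of performance on a new individual.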

“Despite manifest differences between people’s psychology, different people tend to neurally encode emotions in remarkably similar ways,” noted Amanda Markey, a graduate student in the Department of Social and Decision Sciences.

A surprising finding from the research was that almost equivalent accuracy levels could be achieved even when the computer model made use of activation patterns in only one of a number of different subsections of the human brain.

“This suggests that emotion signatures aren’t limited to specific brain regions, such as the amygdala, but produce characteristic patterns throughout a number of brain regions,” said Vladimir Cherkassky, senior research programmer in the Psychology Department.

The research team also found that while on average the model ranked the correct emotion highest among its guesses, it was best at identifying happiness and least accurate in identifying envy. It rarely confused positive and negative emotions, suggesting that these have distinct neural signatures. And, it was least likely to misidentify lust as any other emotion, suggesting that lust produces a pattern of neural activity that is distinct from all other emotional experiences.

Just, the D.O. Hebb University Professor of Psychology, director of the university’s Center for Cognitive Brain Imaging and leading neuroscientist, explained, “We found that three main organizing factors underpinned the emotion neural signatures, namely the positive or negative valence of the emotion, its intensity — mild or strong, and its sociality — involvement or non-involvement of another person. This is how emotions are organized in the brain.”

In the future, the researchers plan to apply this new identification method to a number of challenging problems in emotion research, including identifying emotions that individuals are actively attempting to suppress and multiple emotions experienced simultaneously, such as the combination of joy and envy one might experience upon hearing about a friend’s good fortune.

12:30 Posted in Emotional computing, Research tools | Permalink | Comments (0)