May 26, 2016

From User Experience (UX) to Transformative User Experience (T-UX)

In 1999, Joseph Pine and James Gilmore wrote a seminal book titled “The Experience Economy” (Harvard Business School Press, Boston, MA) that theorized the shift from a service-based economy to an experience-based economy.

According to these authors, in the new experience economy the goal of the purchase is no longer to own a product (be it a good or service), but to use it in order to enjoy a compelling experience. An experience, thus, is a wholly new type of offering: in contrast to commodities, goods and services, it is designed to be as personal and memorable as possible. Just as in a theatrical performance, companies stage meaningful events to engage customers in a memorable and personal way, by offering activities that provide engaging and rewarding experiences.

Indeed, if one looks back at the past ten years, the concept of experience has become more central to several fields, including tourism, architecture, and – perhaps more relevant for this column – to human-computer interaction, with the rise of “User Experience” (UX).

The concept of UX was introduced by Donald Norman in a 1995 article published in the CHI proceedings (D. Norman, J. Miller, A. Henderson: What You See, Some of What's in the Future, And How We Go About Doing It: HI at Apple Computer. Proceedings of CHI 1995, Denver, Colorado, USA). Norman argued that focusing exclusively on usability attributes (i.e. ease of use, efficacy, effectiveness) when designing an interactive product is not enough; one should take into account the whole experience of the user with the system, including users’ emotional and contextual needs. Since then, the UX concept has assumed increasing importance in HCI. As McCarthy and Wright emphasized in their book “Technology as Experience” (MIT Press, 2004):

“In order to do justice to the wide range of influences that technology has in our lives, we should try to interpret the relationship between people and technology in terms of the felt life and the felt or emotional quality of action and interaction.” (p. 12).

However, according to Pine and Gilmore, experience may not be the last step of what they call the “Progression of Economic Value”. They speculated further into the future, identifying the “Transformation Economy” as the likely next phase. In their view, while experiences are essentially memorable events that stimulate the senses and emotions, transformations go much further: they are the result of a series of experiences staged by companies to guide customers in learning, taking action and eventually achieving their aspirations and goals.

In Pine and Gilmore’s terms, an aspirant is the individual who seeks advice for personal change (e.g. a better figure, a new career, and so forth), while the provider of this change (a dietitian, a university) is an elicitor. The elicitor guides the aspirant through a series of experiences that are designed with a specific purpose and goals. According to Pine and Gilmore, the main difference between an experience and a transformation is that the latter occurs when an experience is customized:

“When you customize an experience to make it just right for an individual - providing exactly what he needs right now - you cannot help changing that individual. When you customize an experience, you automatically turn it into a transformation, which companies create on top of experiences (recall that phrase: “a life-transforming experience”), just as they create experiences on top of services and so forth” (p. 244).

A further key difference between experiences and transformations concerns their effects: because an experience is inherently personal, no two people can have the same one. Likewise, no individual can undergo the same transformation twice: the second time it is attempted, the individual would no longer be the same person (pp. 254-255).

But what will be the impact of this upcoming “transformation economy” on how people relate to technology? If in the experience economy the buzzword is “User Experience”, in the next stage the new buzzword might be “User Transformation”.

Indeed, we can see some initial signs of this shift. For example, FitBit and similar self-tracking gadgets are starting to offer personalized advice to foster enduring changes in users’ lifestyles; another example comes from the fields of ambient intelligence and domotics, where there is an increasing focus on designing systems that are able to learn from the user’s behaviour (e.g. by tracking the movements of an elderly person at home) to provide context-aware adaptive services (e.g. sending an alert when the user is at risk of falling).

But the most important ICT step towards the transformation economy is likely to come with the introduction of next-generation immersive virtual reality systems. Since these new systems are based on mobile devices (an example is the recent partnership between Oculus and Samsung), they are able to deliver VR experiences that incorporate information on the external/internal context of the user (e.g. time, location, temperature, mood, etc.) by using the sensors embedded in the mobile phone.

By personalizing the immersive experience with context-based information, it might be possible to induce higher levels of involvement and presence in the virtual environment. In the case of cyber-therapeutic applications, this could translate into the development of more effective, transformative virtual healing experiences.

Furthermore, the emergence of "symbiotic technologies", such as neuroprosthetic devices and neuro-biofeedback, is enabling a direct connection between the computer and the brain. Increasingly, these neural interfaces are moving from the biomedical domain to become consumer products. But unlike existing digital experiential products, symbiotic technologies have the potential to transform basic human experiences more radically.

Brain-computer interfaces, immersive virtual reality and augmented reality and their various combinations will allow users to create “personalized alterations” of experience. Just as nowadays we can download and install a number of “plug-ins”, i.e. apps to personalize our experience with hardware and software products, so very soon we may download and install new “extensions of the self”, or “experiential plug-ins” which will provide us with a number of options for altering/replacing/simulating our sensorial, emotional and cognitive processes.

Such mediated recombinations of human experience will result from the application of existing neuro-technologies in completely new domains. Although virtual reality and brain-computer interfaces were originally developed for applications in specific domains (e.g. military simulations, neurorehabilitation, etc.), today the use of these technologies has been extended to other fields of application, ranging from entertainment to education.

In the field of biology, Stephen Jay Gould and Elizabeth Vrba (Paleobiology, 8, 4-15, 1982) defined “exaptation” as the process in which a feature acquires a function for which it was not originally shaped by natural selection. Likewise, the exaptation of neurotechnologies to the digital consumer market may lead to the rise of a novel “neuro-experience economy”, in which technology-mediated transformation of experience is the main product.

Just as a Genetically-Modified Organism (GMO) is an organism whose genetic material is altered using genetic-engineering techniques, so we could define a Technologically-Modified Experience (ETM) as a re-engineered experience resulting from the artificial manipulation of the neurobiological bases of sensorial, affective, and cognitive processes.

Clearly, the emergence of the transformative neuro-experience economy will not happen in weeks or months but rather in years. It will take some time before people find brain-computer devices on the shelves of electronics stores: most of these tools are still in the pre-commercial phase at best, and some are found only in laboratories.

Nevertheless, the mere possibility that such a scenario will sooner or later come to pass raises important questions that should be addressed before symbiotic technologies enter our lives: does technological alteration of human experience threaten the autonomy of individuals, or the authenticity of their lives? How can we help individuals decide which transformations are good or bad for them?

Answering these important questions will require the collaboration of many disciplines, including philosophy, computer ethics and, of course, cyberpsychology.

Feb 09, 2014

Nick Bostrom: The intelligence explosion hypothesis

Via IEET

Nick Bostrom is a Swedish philosopher at the University of Oxford, known for his work on existential risk and the anthropic principle, covered in books such as Global Catastrophic Risks, Anthropic Bias and Human Enhancement. He holds a PhD from the London School of Economics. He is currently the director of both the Future of Humanity Institute and the Programme on the Impacts of Future Technology, part of the Oxford Martin School at Oxford University.

22:31 Posted in Blue sky, Ethics of technology

Aug 07, 2013

Phubbing: the war against anti-social phone use

Via Textually.org

Don't you just hate it when someone snubs you by looking at their phone instead of paying attention? The Stop Phubbing campaign group certainly does. The Guardian reports.

In a list of "Disturbing Phubbing Stats" on their website, of note:

-- If phubbing were a plague it would decimate six Chinas

-- 97% of people claim their food tasted worse while being a victim of phubbing

-- 92% of repeat phubbers go on to become politicians

So it's really just a joke site? Well, a joke site with a serious message about our growing estrangement from our fellow human beings. But mostly a joke site, yes.

Read full article.

13:43 Posted in Ethics of technology

Sep 03, 2012

Therapy in Virtual Environments - Clinical and Ethical Issues

Telemed J E Health. 2012 Jul 23;

Authors: Yellowlees PM, Holloway KM, Parish MB

Abstract. Background: As virtual reality and computer-assisted therapy strategies are increasingly implemented for the treatment of psychological disorders, ethical standards and guidelines must be considered. This study determined a set of ethical and legal guidelines for treatment of post-traumatic stress disorder (PTSD)/traumatic brain injury (TBI) in a virtual environment incorporating the rights of an individual who is represented by an avatar. Materials and Methods: A comprehensive literature review was undertaken. An example of a case study of therapy in Second Life (a popular online virtual world developed by Linden Labs) was described. Results: Ethical and legal considerations regarding psychiatric treatment of PTSD/TBI in a virtual environment were examined. The following issues were described and discussed: authentication of providers and patients, informed consent, patient confidentiality, patient well-being, clinician competence (licensing and credentialing), training of providers, insurance for providers, the therapeutic environment, and emergencies. Ethical and legal guidelines relevant to these issues in a virtual environment were proposed. Conclusions: Ethical and legal issues in virtual environments are similar to those that occur in the in-person world. Individuals represented by an avatar have the rights equivalent to the individual and should be treated as such.

20:04 Posted in Cybertherapy, Ethics of technology, Virtual worlds

Jul 14, 2012

Does technology affect happiness?

Via The New York Times

As young people spend more time on computers, smartphones and other devices, researchers are asking how all that screen time and multitasking affects children’s and teenagers’ ability to focus and learn — even drive cars.

A study from Stanford University, published Wednesday, wrestles with a new question: How is technology affecting their happiness and emotional development?

Read the full article here

http://bits.blogs.nytimes.com/2012/01/25/does-technology-affect-happiness/

18:51 Posted in Ethics of technology, Positive Technology events

Mar 11, 2012

Augmenting cognition: old concept, new tools

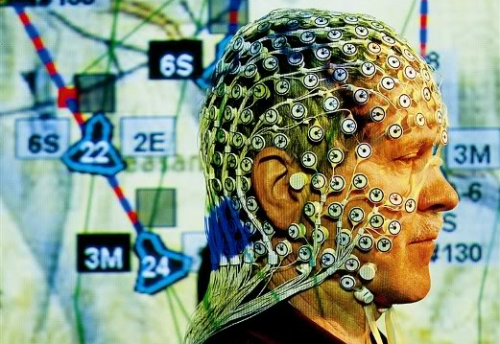

The increasing miniaturization and computing power of information technology devices allow new ways of interaction between human brains and computers, progressively blurring the boundaries between man and machine. An example is provided by brain-computer interface systems, which allow users to use their brain to control the behavior of a computer or of an external device such as a robotic arm (in this latter case, we speak of “neuroprosthetics”).

The idea of using information technologies to augment cognition, however, is not new: it dates back to the 1950s and 1960s. One of the first to write about this concept was the British psychiatrist William Ross Ashby.

In his Introduction to Cybernetics (1956), he described intelligence as the “power of appropriate selection,” which could be amplified by means of technologies in the same way that physical power is amplified. A second major conceptual contribution towards the development of cognitive augmentation was provided a few years later by computer scientist and Internet pioneer Joseph Licklider, in a paper entitled Man-Computer Symbiosis (1960).

In this article, Licklider envisions the development of computer technologies that will enable users “to think in interaction with a computer in the same way that you think with a colleague whose competence supplements your own.” According to his vision, the rise of computer networks would make it possible to connect millions of human minds within a “'thinking center' that will incorporate the functions of present-day libraries together with anticipated advances in information storage and retrieval.” This view represented a departure from the prevailing Artificial Intelligence approach of that time: instead of creating an artificial brain, Licklider focused on the possibility of developing new forms of interaction between humans and information technologies, with the aim of extending human intelligence.

A similar view was proposed in the same years by another computer visionary, Douglas Engelbart, in his famous 1962 report entitled Augmenting Human Intellect: A Conceptual Framework.

In this report, Engelbart defines the goal of intelligence augmentation as “increasing the capability of a man to approach a complex problem situation, to gain comprehension to suit his particular needs, and to derive solutions to problems. Increased capability in this respect is taken to mean a mixture of the following: more-rapid comprehension, better comprehension, the possibility of gaining a useful degree of comprehension in a situation that previously was too complex, speedier solutions, better solutions, and the possibility of finding solutions to problems that before seemed insoluble (…) We do not speak of isolated clever tricks that help in particular situations. We refer to a way of life in an integrated domain where hunches, cut-and-try, intangibles, and the human ‘feel for a situation’ usefully co-exist with powerful concepts, streamlined terminology and notation, sophisticated methods, and high-powered electronic aids.”

These “electronic aids” nowadays include all kinds of hardware and software computing devices used, for example, to store information in external memories, to process complex data, to perform routine tasks and to support decision making. However, today the concept of cognitive augmentation is not limited to the amplification of human intellectual abilities through external hardware. As recently noted by Nick Bostrom and Anders Sandberg (Sci Eng Ethics 15:311–341, 2009), “What is new is the growing interest in creating intimate links between the external systems and the human user through better interaction. The software becomes less an external tool and more of a mediating ‘‘exoself’’. This can be achieved through mediation, embedding the human within an augmenting ‘‘shell’’ such as wearable computers (…) or virtual reality, or through smart environments in which objects are given extended capabilities” (p. 320).

At the forefront of this trend is neurotechnology, an emerging research and development field which includes technologies that are specifically designed with the aim of improving brain function. Examples of neurotechnologies include brain training games such as BrainAge and programs like Fast ForWord, but also neurodevices used to monitor or regulate brain activity, such as deep brain stimulators (DBS), and smart prosthetics for the replacement of impaired sensory systems (e.g. cochlear or retinal implants).

Clearly, the vision of neurotechnology is not free of issues. The more powerful and sophisticated these technologies become, the more attention should be devoted to understanding the socio-economic, legal and ethical implications of their applications in various fields, from medicine to neuromarketing.

May 21, 2011

You are not a gadget

Recently, I came across an intriguing book that brings a new, thought-provoking perspective on how the Internet is shaping our lives and culture. The title of the book is You Are Not a Gadget: A Manifesto and the author is Jaron Lanier, a computer scientist and musician who is best known for his pioneering work in the field of virtual reality.

The leitmotiv of the book can be summarized in a single question: are new technologies really playing an empowering role, by increasing people’s creativity, control, and freedom? As can be expected from the title, the author’s answer is more negative than positive. To construct his argument, Lanier starts from the observation that the evolution of computing is not as free of constraints as one might assume.

As a key example, the author describes the evolution of MIDI, a protocol for composing and playing music on computers. This format emerged in the early 1980s and was immediately recognized as an empowering tool for musicians. However, as more and more people adopted it, it became a rigid standard that limited the expressive potential of artists because, as Lanier points out, it ‘‘could only describe the tile mosaic world of the keyboardist, not the watercolor world of the violin.’’ For the author, this lock-in effect can be seen in other fields of information technology. For example, certain features that were included in the early versions of the UNIX operating system are now deeply embedded in the software and cannot be modified, even if they are considered obsolete or inappropriate. Once an approach becomes standard, it tends to inhibit other solutions, thereby limiting the potential for creativity.

Lanier goes on to demystify some of today’s most popular Internet buzzwords, such as ‘‘Web 2.0,’’ ‘‘Open Culture,’’ ‘‘Mash-Ups,’’ and ‘‘Wisdom of Crowds.’’ He maintains that these trendy notions are ultimately pointing to a new form of ‘‘digital collectivism,’’ which, rather than encouraging individual inventiveness, promotes mediocrity and homogenization. By allowing everyone to offer up their opinions and ideas, the social web is melting into an indistinct pool of information, a vast gray zone where it is increasingly difficult to find quality or meaningful content. This observation leads the author to the counterintuitive conclusion that the introduction of boundaries is sometimes useful (if not necessary) to achieve originality and excellence.

Another issue raised by Lanier concerns the risk of de-humanization and de-individualization associated with online social networks. He describes the early Web as a space full of ‘‘flavours and colours’’ where each Web site was different from the others and contributed to the diversity of the Internet landscape. But with the advent of Facebook and other similar sites, this richness was lost because people started creating their personal web pages using predefined templates. On the one hand, this formalism has allowed anyone to create, publish, and share content online easily (blog, video, music, etc.). On the other hand, it has reduced the potential for individuals to express their uniqueness.

Lanier reminds us of the importance of putting the human being, and not the machine, at the center of concerns for technology and innovation. For this goal to be achieved, it is not enough to develop usable and accessible tools; it is also necessary to emphasize the uniqueness of experience. This humanist faith leads the author to criticize the idea of technological Singularity, popularized by recognized experts such as Ray Kurzweil, Vernor Vinge, and Kevin Kelly. This concept holds that the exponential increase in computing power and communication networks, combined with the rapid advances in the fields of artificial intelligence and robotics, may lead to the emergence of a super-intelligent organism (the ‘‘Singularity’’), which could eventually develop intentional agency and subordinate the human race. Lanier’s opposition to this idea is based on the conviction that the ‘‘human factor’’ will continue to play an essential role in the evolution of technology. The author believes that computers will never be able to replace the uniqueness of humans nor replicate the complexity of their experience. Further, he considers the concept of technological Singularity culturally dangerous because it reinforces the idea of an inevitable superiority of machines over humans: ‘‘People degrade themselves in order to make machines seem smart all the time,’’ writes Lanier.

However, Lanier genuinely admires the potential of the Internet and new technologies. This is why he calls for a new ‘‘technological humanism’’ able to counter the overarching vision of digital collectivism and empower creative self-expression. As a key illustration, the author describes the unique combination of idealism, technical skills, and imaginative talent that, in the 1980s, led a small group of programmers to conceive the vision of virtual reality. This powerful new paradigm in human–computer interaction inspired in the following decades a number of innovative applications in industry, education, and medicine.

Apart from the nostalgic remembrances of the heroic times of Silicon Valley and the sophisticated overtone of some terms (e.g., ‘‘numinous neoteny’’), the book written by Lanier conveys a clear message and deserves the attention of all who are interested in the relationship between humans and technology. The idea that technological innovation should be informed by human values and experience is not new, but Lanier brings it out vividly, in detail and with a number of persuasive examples.

More to explore

- Jaron Lanier’s homepage: The official website of Jaron Lanier, whose old-fashioned style recaptures the freshness and simplicity of the early Internet. The website features biographical information about the author and includes links to many of Lanier’s articles and commentaries on a range of technology-related topics.

- Kurzweil Accelerating Intelligence: Launched in 2001, Kurzweil Accelerating Intelligence explores the forecasts and insights on accelerating change described in Ray Kurzweil’s books, with updates about breakthroughs in science and technology.

- Singularity University: Singularity University is an interdisciplinary university founded by Ray Kurzweil and other renowned experts in technology with the support of a number of sponsors (including Google), whose mission is “to stimulate groundbreaking, disruptive thinking and solutions aimed at solving some of the planet’s most pressing challenges”. Singularity University is based at the NASA Ames campus in Silicon Valley.

- Humanity+: Humanity+ is a non-profit organization dedicated to “the ethical use of technology to extend human capabilities and transcend the legacy of the human condition”. The mission of the organization is to support discussion and public awareness about emerging technologies, as well as to propose solutions for potential problems related to these technologies. The website includes plenty of resources about transhumanism topics and news about upcoming seminars and conferences.

13:30 Posted in Ethics of technology, Social Media, Technology & spirituality, Virtual worlds

Jul 10, 2009

Neuroscience and the military: ethical implications of war neurotechnologies

Super soldiers equipped with neural implants, suits that contain biosensors, and thought scans of detainees may become reality sooner than you think.

In this video taken from the show "Conversations from Penn State", Jonathan Moreno discusses the ethical implications of the applications of neuroscience in modern warfare.

Moreno is the David and Lyn Silfen professor and professor of medical ethics and the history and sociology of science at the University of Pennsylvania and was formerly the director of the Center for Ethics at the University of Virginia. He has served as a senior staff member for two presidential commissions and is an elected member of the Institute of Medicine of the National Academies.

12:13 Posted in Ethics of technology, Neurotechnology & neuroinformatics, Wearable & mobile | Tags: ethics, neuroethics, war, biosensors, neurotechnology