Jan 07, 2007

Imaging Place SL: The U.S./Mexico Border

Re-blogged from Networked Performance

Imaging Place SL: The U.S./Mexico Border by John (Craig) Freeman: Jan 5 - Feb 23, 2007: Ars Virtua: Gallery 2: Opening 7 - 9pm SLT (Pacific Time) Friday January 5, 2007. Go there

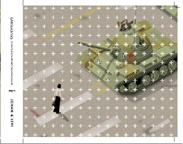

"Imaging Place," is a place-based, virtual reality art project. It takes the form of a user navigated, interactive computer program that combines panoramic photography, digital video, and three-dimensional technologies to investigate and document situations where the forces of globalization are impacting the lives of individuals in local communities. The goal of the project is to develop the technologies, the methodology and the content for truly immersive and navigable narrative, based in real places. For the past several months, Freeman has been implementing the "Imaging Place" project in Second Life.

When a denizen of Second Life first arrives at an Imaging Place SL Scene he, she or it sees on the ground a large black and white satellite photograph of the full disk of the Earth. An avatar can then walk over the Earth to a thin red line which leads to an adjacent higher-level platform made of a high-resolution aerial photograph of a specific location from around the world. Mapped to the aerial images are networks of nodes constructed of primitive spherical geometry with panoramic photographs texture-mapped to the interior.

The avatar can walk to the center of one of these nodes and use a first person perspective to view the image, giving the user the sensation of being immersed in the location. Streaming audio is localized to individual nodes providing narrative content for the scene. This content includes stories told by people who appear in the images, theory and ambient sound. When the avatar returns to the Earth platform, several rotating ENTER signs provide teleports to other "Imaging Place" scenes located at other places within the world of Second Life. In "Imaging Place SL: The U.S./Mexico Border," Freeman explores the issues, politics and personal memories of this contested space.
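To make the panoramic nodes described above more concrete: a panorama wrapped around the inside of a sphere is commonly stored as an equirectangular image, and each viewing direction from the sphere's center corresponds to one texture coordinate. The following is a minimal sketch of that lookup. The equirectangular layout, the axis convention and the function name `direction_to_uv` are assumptions made for illustration; nothing here is taken from Freeman's Second Life implementation.

```python
import math

def direction_to_uv(x, y, z):
    """Map a view direction from the sphere's center to (u, v) in [0, 1].

    Assumes an equirectangular panorama with the y axis pointing up:
    u wraps around the horizon, v runs from the top (0) to the bottom (1).
    """
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / length, y / length, z / length
    y = max(-1.0, min(1.0, y))            # guard asin against rounding error
    longitude = math.atan2(x, z)          # -pi .. pi around the horizon
    latitude = math.asin(y)               # -pi/2 .. pi/2 above the horizon
    u = longitude / (2 * math.pi) + 0.5
    v = 0.5 - latitude / math.pi
    return u, v

# Looking straight ahead lands in the middle of the panorama.
print(direction_to_uv(0.0, 0.0, 1.0))
```

An avatar standing at the center of a node and turning its camera is, in effect, sweeping this direction across the image, which is why the viewpoint feels surrounded by the photographed place.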

LIVE PERFORMANCE by Second Front: Friday, January 05, 2007 - 7 PM PST. Second Front is the first dedicated performance art group in Second Life. To officially open JC Fremont's Installation at Ars Virtua, Second Front will be creating a realtime interpretive and site-specific performance based on JC Fremont's theme 'Borders' to complement "Imaging Place SL: The U.S./Mexico Border."

23:32 Posted in Cyberart, Virtual worlds | Permalink | Comments (0) | Tags: second life

Dec 30, 2006

Mind and brain artwork

via mind hacks

Have a look at this wonderful collection of mind and brain artwork, collected by the author of the Italian website PsicoCafé.

Link to PsicoCafé image gallery.

Link to PsicoCafé (Italian)

12:55 Posted in Cyberart | Permalink | Comments (0) | Tags: cyberart

Dec 23, 2006

Brain mouse

via omnibrain

If you are still looking for an Xmas gift, what about this Brain mouse designed by Pat Says Now?

17:50 Posted in Cyberart | Permalink | Comments (0) | Tags: cyberart

Nov 10, 2006

BrainWaves

A great catch by the always-interesting NeuroFuture:

BrainWaves is a musical performance by cultured cortical cells interfacing with multielectrode arrays. Eight electrodes recorded neural patterns that were filtered to eight speakers after being sonified by robotic and human interpretation. Sound patterns followed neural spikes and waveforms, and also extended to video, with live visualizations of the music and neural patterns in front of a mesmerized audience. See a two minute video here (still image below). Teams from two research labs designed and engineered the project; read more from collaborator Gil Weinberg.

17:05 Posted in Cyberart, Neurotechnology & neuroinformatics | Permalink | Comments (0) | Tags: neuroinformatics, cyberart

Nov 09, 2006

DNART

via LiveScience (thanks to Johnatan Loroni, bioinformatics researcher)

Paul Rothemund, a researcher at Caltech, has developed a new technique for weaving DNA strands into any desired two-dimensional shape or figure, which he calls "DNA origami." According to Rothemund, the technology could one day be used to construct tiny chemical factories or molecular electronics by attaching proteins and inorganic components to DNA circuit boards.

From the press release:

"The construction of custom DNA origami is so simple that the method should make it much easier for scientists from diverse fields to create and study the complex nanostructures they might want," Rothemund explains.

"A physicist, for example, might attach nano-sized semiconductor 'quantum dots' in a pattern that creates a quantum computer. A biologist might use DNA origami to take proteins which normally occur separately in nature, and organize them into a multi-enzyme factory that hands a chemical product from one enzyme machine to the next in the manner of an assembly line."

Reporting in the March 16th issue of Nature, Rothemund describes how long single strands of DNA can be folded back and forth, tracing a mazelike path, to form a scaffold that fills up the outline of any desired shape. To hold the scaffold in place, 200 or more DNA strands are designed to bind the scaffold and staple it together.

Each of the short DNA strands can act something like a pixel in a computer image, resulting in a shape that can bear a complex pattern, such as words or images. The resulting shapes and patterns are each about 100 nanometers in diameter, or about a thousand times smaller than the diameter of a human hair. The dots themselves are six nanometers in diameter. While the folding of DNA into shapes that have nothing to do with the molecule's genetic information is not a new idea, Rothemund's efforts provide a general way to quickly and easily create any shape. In the last year, Rothemund has created half a dozen shapes, including a square, a triangle, a five-pointed star, and a smiley face, each one several times more complex than any previously constructed DNA objects. "At this point, high-school students could use the design program to create whatever shape they desired," he says.

Once a shape has been created, adding a pattern to it is particularly easy, taking just a couple of hours for any desired pattern. As a demonstration, Rothemund has spelled out the letters "DNA," and has drawn a rough picture of a double helix, as well as a map of the western hemisphere in which one nanometer represents 200 kilometers.
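The scale figures quoted in the press release can be checked with a little arithmetic. The sketch below uses only the numbers given in the text (shapes about 100 nanometers across, a map scale of one nanometer per 200 kilometers, and "a thousand times smaller" than a hair); the variable names are illustrative.

```python
shape_width_nm = 100   # "about 100 nanometers in diameter" (from the text)
km_per_nm = 200        # map scale quoted for the western hemisphere map

# Real-world distance covered by a map as wide as one origami shape.
map_span_km = shape_width_nm * km_per_nm

# "A thousand times smaller than a human hair" implies this hair width.
implied_hair_width_nm = shape_width_nm * 1000
implied_hair_width_um = implied_hair_width_nm / 1000

print(map_span_km)             # 20000
print(implied_hair_width_um)   # 100.0
```

A span of 20,000 km is roughly half of the Earth's equatorial circumference, which is consistent with a map of one hemisphere, and 100 micrometers is a plausible width for a human hair.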

Link to Live Science report on DNA art

16:34 Posted in Cyberart | Permalink | Comments (0) | Tags: cyberart

Nov 08, 2006

Ars Virtua Artist-in-Residence (AVAIR)

Re-blogged from Networked performance

Ars Virtua Artist-in-Residence (AVAIR): Call for Proposals: Deadline November 21, 2006: Ars Virtua Gallery and New Media Center in Second Life is soliciting proposals for its artist-in-residence program. The deadline for submissions is November 21, 2006. Established and emerging artists will work within the 3d rendered environment of Second Life. Each 11-week residency will culminate in an exhibition and a community-based event. Residents will also receive a $400 stipend, training and mentorship.

Ars Virtua Artist-in-Residence (AVAIR) is an extended performance that examines what it means to reside in a place that has no physical location.

Ars Virtua presents artists with a radical alternative to "real life" galleries: 1) Since it does not physically exist artists are not limited by physics, material budgets, building codes or landlords. Their only constraints are social conventions and (malleable-extensible) software. 2) The gallery is accessible 24 hours a day to a potentially infinite number of people in every part of the world simultaneously. 3) Because of the ever evolving, flexible nature of Second Life the "audience" is a far less predictable variable than one might find in a Real Life gallery. Residents will be encouraged to explore, experiment with and challenge traditional conventions of art making and distribution, value and the art market, artist and audience, space and place.

Application Process: Artists are encouraged to log in to Second Life and create an avatar BEFORE applying. Download the application requirements here: http://arsvirtua.com/residence. Finalists will be contacted for an interview. Interviews will take place from November 28-30.

23:13 Posted in Cyberart, Virtual worlds | Permalink | Comments (0) | Tags: cyberart, virtual worlds

Body as musical instrument

Dance and music go together. Intuitively, we know they have common elements, and while we cannot even begin to understand what they are or how they so perfectly complement one another, it is clear that they are both an expression of something deep and fundamental within all human beings. Both express things that words cannot - beyond intellect, they are perhaps two of the fundamental building blocks of human expression, common to the souls of all people. Which is why when we saw this machine which links the two, we knew there was something special brewing.

The GypsyMIDI is a unique instrument for motion-capture midi control - a machine that enables a human being to become a musical instrument - well, a musical instrument controller to be exact, or a bunch of other things depending on your imagination.
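As a rough illustration of what "motion-capture MIDI control" involves, the sketch below scales a captured joint angle to a 7-bit MIDI controller value and packs it into a standard Control Change message. This is not the GypsyMIDI's own software: the function names, the 0 to 180 degree range and the choice of controller number are all assumptions made for the example.

```python
def angle_to_cc(angle_deg, min_deg=0.0, max_deg=180.0):
    """Scale a joint angle to a 7-bit MIDI controller value (0-127).

    The angle range is a hypothetical calibration, not a device setting.
    """
    if max_deg <= min_deg:
        raise ValueError("max_deg must be greater than min_deg")
    clamped = max(min_deg, min(max_deg, angle_deg))
    fraction = (clamped - min_deg) / (max_deg - min_deg)
    return round(fraction * 127)

def control_change(channel, controller, value):
    """Build the three bytes of a MIDI Control Change message."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= controller <= 127 or not 0 <= value <= 127:
        raise ValueError("controller and value must be 0-127")
    # Status byte 0xB0 marks Control Change; the low nibble is the channel.
    return bytes([0xB0 | channel, controller, value])

# Example: an elbow bent to 45 degrees drives controller 1 on channel 0.
message = control_change(0, 1, angle_to_cc(45.0))
print(message.hex())
```

Any synthesizer or sequencer that accepts Control Change messages could then treat the performer's arm as a continuous controller, which is the sense in which the body becomes "a musical instrument controller".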

Read the full post on NP

23:05 Posted in Cyberart | Permalink | Comments (0) | Tags: creativity and computers

Nov 07, 2006

[meme.garden]

re-blogged from Networked Performance

[meme.garden] by Mary Flanagan, Daniel Howe, Chris Egert, Junming Mei, and Kay Chang: [meme.garden] is an Internet service that blends software art and search tool to visualize participants' interests in prevalent streams of information, encouraging browsing and interaction between users in real time, through time. Utilizing the WordNet lexical reference system from Princeton University, [meme.garden] introduces concepts of temporality, space, and empathy into a network-oriented search tool. Participants search for words which expand contextually through the use of a lexical database. English nouns, verbs, adjectives and adverbs are organized into floating synonym "seeds," each representing one underlying lexical concept. When participants "plant" their interests, each becomes a tree that "grows" over time. Each organism's leaves are linked to related streaming RSS feeds, and by interacting with their own and other participants' trees, participants create a contextual timescape in which interests can be seen growing and changing within an environment that endures.

The [meme.garden] software was created by an eclectic team of artists and scientists: Mary Flanagan, Daniel Howe, Chris Egert, Junming Mei, and Kay Chang.

23:10 Posted in Cyberart | Permalink | Comments (0) | Tags: cyberart

Nov 01, 2006

Synthecology

Re-blogged from Networked Performance

Synthecology combines the possibilities of tele-immersive collaboration with a new architecture for virtual reality sound immersion to create an environment where musicians from all locations can interactively perform and create sonic environments.

Compose, sculpt, and improvise with other musicians and artists in an ephemeral garden of sonic lifeforms. Synthecology invites visitors in this digitally fertile space to create a musical sculpture of synthesized tones and sound samples provided by web inhabitants. Upon entering the garden, each participant can pluck contributed sounds from the air and plant them, wander the garden playing their own improvisation or collaborate with other participants to create/author a new composition.

As each new 'seed' is planted and grown, sculpted and played, this garden becomes both a musical instrument and a composition to be shared with the rest of the network. Every inhabitant creates, not just as an individual composer shaping their own themes, but as a collaborator in real time who is able to improvise new soundscapes in the garden by cooperating with other avatars from diverse geographical locations.

Virtual participants are fully immersed in the garden landscape through the use of passive stereoscopic technology and spatialized audio to create a networked tele-immersive environment where all inhabitants can collaborate, socialize and play. Guests from across the globe are similarly embodied as avatars throughout this environment, each experiencing the audio and visual presence of the others.

Continue to read the full post here

23:51 Posted in Cyberart | Permalink | Comments (0) | Tags: cyberart, creativity and computers

Oct 31, 2006

ENGAGE: Interaction, Art and Audience Experience

from Rhizome.org

26-28 November 2006

University of Technology, Sydney

Reduced fee early registration deadline approaching: 8 November 2006.

ENGAGE is an international symposium positioning audience experience at the heart of our understanding of interactive art. Papers will be presented by leading artists, curators and theorists exploring key issues in audience-based interactive art research.

Further information on keynote speakers, presenters, registration and contact information is available at: http://www.creativityandcognition.com/engage06/

ENGAGE is the 3rd annual symposium organised by the Creativity and Cognition Studios at the University of Technology, Sydney. Sponsorship is care of the Australasian CRC for Interaction Design (ACID), creating new forms of human interaction with emerging content technology; and the Australian Network for Art and Technology (ANAT), Australia's peak network and advocacy body for media arts.

17:47 Posted in Creativity and computers, Cyberart | Permalink | Comments (0) | Tags: cyberart

Oct 26, 2006

EvoMUSART 2007

From Networked Performance

EvoMUSART 2007: 5th European Workshop on Evolutionary Music and Art: 11-13 April, 2007, Valencia, Spain: EVOSTAR: EVOMUSART: "ArtEscapes: Variations of Life in the Media Arts"

The use of biologically inspired techniques for the development of artistic systems is a recent, exciting and significant area of research. There is a growing interest in the application of these techniques in fields such as: visual art and music generation, analysis, and interpretation; sound synthesis; architecture; video; and design.

EvoMUSART 2007 is the fifth workshop of the EvoNet working group on Evolutionary Music and Art. Following the success of previous events and the growth of interest in the field, the main goal of EvoMUSART 2007 is to bring together researchers who are using biologically inspired techniques for artistic tasks, providing the opportunity to promote, present and discuss ongoing work in the area.

The workshop will be held from 11-13 April, 2007 in Valencia, Spain, as part of the Evo* event.

The event includes the exhibition "ArtEscapes: Variations of Life in the Media Arts", giving an opportunity for the presentation of evolutionary art and music. The submission of art works for the exhibition session is independent from the submission of papers.

Accepted papers will be presented orally at the workshop and included in the EvoWorkshops proceedings, published by Springer Verlag in the Lecture Notes in Computer Science series.

Further information can be found on the following pages:

Evo*2007: http://www.evostar.org

EvoMUSART2007: http://evonet.lri.fr/TikiWiki/tiki-index.php?page=EvoMUSART

21:39 Posted in Creativity and computers, Cyberart | Permalink | Comments (0) | Tags: cyberart, creativity and computers

Brain Waves Drawing

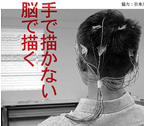

Brain Waves Drawing: Live Performance by Hideki Nakazawa: Nov 4-5, 2006 at Fuchu Art Museum, Tokyo. Supported by Nihon Kohden.

Not to Draw by Hand. To Draw by Brain: Artists usually draw pictures by hand with brushes or pencils. However, the activities of brains must be more important and essential than the ones of hands at the moment of creating art. Therefore, I decided to draw pictures with electrodes being set on my head through controlling the activities of my own brain. The curved lines so-called "brain waves" in medicine must be the "drawings" in the world of fine art, directly drawn by my brain without using hands.

21:34 Posted in Brain-computer interface, Cyberart | Permalink | Comments (0) | Tags: brain-computer interface, cyberart

Oct 07, 2006

Gamescenes

GameScenes. Art in the Age of Videogames is the first volume entirely dedicated to Game Art. Edited by Matteo Bittanti and Domenico Quaranta, GameScenes provides a detailed overview of the emerging field of Game Art, examining the complex interaction and intersection of art and videogames.

Video and computer game technologies have opened up new possibilities for artistic creation, distribution, and appreciation. In addition to projects that might conventionally be described as Internet Art, Digital Art or New Media Art, there is now a wide spectrum of work by practitioners that crosses the boundaries between various disciplines and practices. The common denominator is that all these practitioners use digital games as their tools or source of inspiration to make art. They are called Game Artists.

GameScenes explores the rapidly expanding world of Game Art in the works of over 30 international artists. Included are several milestones in this field, as well as some lesser known works. In addition to the editors' critical texts, the book contains contributions from a variety of international scholars that illustrate, explain, and contextualize the various artifacts.

M. Bittanti, D. Quaranta (editors), GameScenes. Art in the Age of Videogames, Milan, Johan & Levi 2006. Hardcover, 454 pages, 25 x 25 cm, 200+ hi-res illustrations, available from October 2006.

17:26 Posted in Cyberart | Permalink | Comments (0) | Tags: cyberart

Aug 08, 2006

The Travels of Mariko Horo

Re-blogged from Networked Performance

The Travels of Mariko Horo by Tamiko Thiel: Sometime between the 12th and the 22nd centuries Mariko Hōrō, Mariko the Wanderer, journeys westward from Japan in search of the Buddhist Paradise floating in the Western Seas. She does find Paradise, but finds also a chilling, darker side to the West, an island where lost souls are held in an eternal Limbo. She encapsulates her impressions of the places she visits in a series of 3D virtual worlds and invites you to see the West through her eyes.

The Travels of Mariko is an interactive 3D virtual reality installation. The image is generated in real time on a fast gaming PC and projected on a large 9 x 12 screen to produce an immersive experience. Users move their viewpoint through the virtual environment with a joystick or similar navigational input device. Mariko is a fictitious character Thiel invented to incorporate the viewpoint for the project - users will never actually see Mariko, except perhaps in a mirror. In essence they will be Mariko, seeing the exotic and mysterious Occident through her eyes and experiences.

The virtual environment is sensitive to their presence, changing around them as a result of their movements and actions: An empty church fills with saints who vanish into the heavens. A basilica transports the user directly into the Western Cosmos, where angels sing the praises of the Goddess of Compassion. A pavilion takes users deep into the underwater realm of the Heavenly King. A plain wooden chapel leads into a Limbo of constant torment.

Music for Mariko Horo is embedded in the piece itself, localized to specific places within the 3D world. The composer Ping Jin, Professor of Music at SUNY/New Paltz, studied music both in his native China and in the USA. Ping describes the music as "creating a sonic dimension for Mariko's meditation on the mythic West. Created from both sampled and computer generated sounds, there are fusions and juxtapositions of Eastern and Western sounds to enhance the scene and mood of each section."

World premiere:

July 29 - November 26, 2006: "Edge Conditions" show, part of the Pacific Rim Theme of ISEA2006 Symposium/ZeroOne San Jose Festival at the San Jose Museum of Art, USA.

14:13 Posted in Cyberart, Virtual worlds | Permalink | Comments (0) | Tags: cyberart, virtual reality

Aug 03, 2006

empathic painting

Via New Scientist

A team of computer scientists (Maria Shugrina and Margrit Betke from Boston University, US, and John Collomosse from Bath University, UK) has created a video display system (the "Empathic Painting") that tracks the expressions of onlookers and metamorphoses to match their emotions:

"For example, if the viewer looks angry it will apply a red hue and blurring. If, on the other hand, they have a cheerful expression, it will increase the brightness and colour of the image." (New Scientist)

See a video of the empathic painting (3.4 MB .avi, required codec).
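The mapping New Scientist describes, from a detected expression to an image adjustment, can be sketched in a few lines. This is only an illustration of the idea, not the researchers' rendering method: the emotion labels, the scaling factors and the function names are assumptions, and the blurring step mentioned in the quote is left out for brevity.

```python
def adjust_pixel(rgb, emotion):
    """Return an (r, g, b) pixel shifted to reflect a detected emotion."""
    r, g, b = rgb
    if emotion == "angry":
        # Push the hue toward red: boost red, damp green and blue.
        r, g, b = r * 1.3, g * 0.7, b * 0.7
    elif emotion == "cheerful":
        # Brighten every channel.
        r, g, b = r * 1.2, g * 1.2, b * 1.2
    # Any other label leaves the pixel unchanged.
    return tuple(min(255, max(0, round(c))) for c in (r, g, b))

def adjust_image(pixels, emotion):
    """Apply adjust_pixel to every pixel of an image given as rows of tuples."""
    return [[adjust_pixel(p, emotion) for p in row] for row in pixels]

# A tiny 1 x 2 "image" re-rendered for an angry viewer.
print(adjust_image([[(100, 100, 100), (200, 50, 50)]], "angry"))
```

In a live installation the `emotion` argument would come from a facial-expression tracker running on a camera feed, and the adjustment would be applied again whenever the detected expression changes.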

20:50 Posted in Cyberart, Emotional computing | Permalink | Comments (0) | Tags: emotional computing

Aug 01, 2006

Freq2

From Networked Performance (via Pixelsumo)

Freq2, by Squidsoup, uses your whole body to control the precise nature of a sound - a form of musical instrument. The mechanism used is to trace the outline of a person's shadow, using a webcam, and transform this line into an audible sound. Any sound can be described as a waveform - essentially a line - and so these lines can be derived from one's shadow. What you see is literally what you hear, as the drawn wave is immediately audible as a realtime dynamic drone.

Freq2 adds to this experience by adding a temporal component to the mix; a sonic composition in which to frame the instrument. The visuals, an abstract 3-dimensional landscape, extrude in realtime into the distance, leaving a trail of the interactions that have occurred. This 'memory' of what has gone before is reflected in the sounds, with long loops echoing past interactions. The sounds, all generated in realtime from the live waveform, have also been built into more of a compositional soundscape, with the waveform being played at a range of pitches and with a rhythmic component.
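The idea that a traced outline can be heard directly as a waveform can be sketched as a simple wavetable: the outline heights become one cycle of the wave, and that cycle is looped at a chosen pitch. This is a minimal sketch under stated assumptions, not Squidsoup's implementation; the function names, the sample rate and the use of linear interpolation are choices made for the example.

```python
def outline_to_wavetable(outline_heights):
    """Turn a row of outline heights into one waveform cycle in [-1, 1]."""
    if len(outline_heights) < 2:
        raise ValueError("need at least two outline points")
    mean = sum(outline_heights) / len(outline_heights)
    centered = [h - mean for h in outline_heights]   # remove the DC offset
    peak = max(abs(c) for c in centered)
    if peak == 0:
        return [0.0] * len(centered)                 # flat outline: silence
    return [c / peak for c in centered]

def render_drone(wavetable, frequency_hz, sample_rate=44100, seconds=1.0):
    """Loop the wavetable at the given pitch using linear interpolation."""
    n = len(wavetable)
    step = frequency_hz * n / sample_rate   # table positions per output sample
    phase = 0.0
    samples = []
    for _ in range(int(sample_rate * seconds)):
        i = int(phase)
        frac = phase - i
        a = wavetable[i % n]
        b = wavetable[(i + 1) % n]
        samples.append(a + (b - a) * frac)
        phase = (phase + step) % n
    return samples

# A hypothetical shadow outline (pixel heights read left to right).
table = outline_to_wavetable([0, 5, 10, 5])
drone = render_drone(table, frequency_hz=110.0, seconds=0.5)
print(len(drone))
```

Calling `render_drone` with the same table at several frequencies gives the "range of pitches" the description mentions, and regenerating the table from each new camera frame is what would make the sound follow the body in real time.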

Watch video

11:39 Posted in Cyberart | Permalink | Comments (0) | Tags: cyberart

Oribotics

via textually.org

Oribotics - by Matthew Gardiner - is the fusion of origami and technology, specifically 'bot' technology, such as robots, or intelligent computer agents known as bots. The system was designed to create an intimate connection between the audience and the bots; a cross between gardening, messaging a friend, and commanding a robot. It was developed during an Australian Network for Art and Technology (ANAT) artists lab, was built with Processing as an authoring tool, and was connected to a mobile phone via a USB cable.

"Ori-botics is a joining of two complex fields of study. Ori comes from the Japanese verb Oru literally meaning 'to fold'. Origami, the Japanese word for paper folding, comes from the same root. Oribotics is origami that is controlled by robot technology; paper that will fold and unfold on command. An Oribot by definition is a folding bot. By this definition, anything that uses robotics/botics and folding together is an oribot. This includes some industrial applications already in existence, such as Miura's folds taken to space, and also includes my two latest works, Orimattic and Oribotics."

11:38 Posted in AI & robotics, Cyberart | Permalink | Comments (0) | Tags: cyberart

Jul 27, 2006

Mouthpiece

Re-blogged from WMMNA

The Mouthpiece has been designed to help strangers who find it difficult to express their feelings or opinions face to face. A small LCD monitor and loudspeakers cover the mouth of the wearer like a gag. The equipment replaces the real act of speech with pre-recorded, edited and electronically perfected statements, questions, answers, stories, etc.

21:28 Posted in Cyberart, Emotional computing | Permalink | Comments (0)

The Endless Forest

12:34 Posted in Cyberart, Emotional computing | Permalink | Comments (0) | Tags: emotional computing

Jul 25, 2006

Interactive Dance Technology

Within the field of interactive dance technology, a number of projects have experimented with dancers producing music in real time from their body movements, as opposed to following the music. In MusicViaMotion (2000), for example, dance movements are captured with a video camera and mapped to sound synthesis in real time. In MIT Media Lab's Expressive Footwear project (1998) and Katherine Moriwaki's Music Shoes (2000), the dancers wear sports shoes and Chinese slippers, respectively, equipped with a range of sensors. In Alfred Desio's Zapped Taps, sensors are also used, this time on tap shoes. In all these projects, the sensed movements actuate and modulate artificial sounds.

22:16 Posted in Cyberart | Permalink | Comments (0) | Tags: cyberart