Jan 02, 2017

Mind-controlled toys: The next generation of Christmas presents?

Source: University of Warwick

The next generation of toys could be controlled by the power of the mind, thanks to research by the University of Warwick.

In research led by Professor Christopher James, Director of Warwick Engineering in Biomedicine at the School of Engineering, technology has been developed that allows electronic devices to be activated using electrical impulses from brain waves, by connecting our thoughts to computerised systems. Some of the most popular toys on children's lists to Santa - such as remote-controlled cars and helicopters, toy robots and Scalextric racing sets - could all be controlled via a headset, using 'the power of thought'.

This could be based on levels of concentration - thinking of your favourite colour or stroking your dog, for example. Instead of a hand-held controller, a headset is used to create a brain-computer interface - a communication link between the human brain and the computerised device.

Sensors in the headset measure the electrical impulses from the brain at various frequencies - each frequency can be somewhat controlled, under special circumstances. This activity is then processed by a computer, amplified and fed into the electrical circuit of the electronic toy. Professor James comments on the future potential for this technology: "Whilst brain-computer interfaces already exist - there are already a few gaming headsets on the market - their functionality has been quite limited.

"New research is making the headsets now read cleaner and stronger signals than ever before - this means stronger links to the toy, game or action, thus making it a very immersive experience. The exciting bit is what comes next - how long before we start unlocking the front door or answering the phone through brain-computer interfaces?"
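The signal chain described in this post (measure activity at different frequencies, process it on a computer, then drive the toy) can be illustrated with a minimal Python sketch. Everything specific here is an assumption made for illustration and is not taken from the Warwick work: the 128 Hz sample rate, the 13-30 Hz band, the threshold rule, and the function names `band_power` and `toy_should_run` are all hypothetical, and the "headset readings" are synthetic sine waves.

```python
import cmath
import math


def band_power(samples, sample_rate_hz, low_hz, high_hz):
    """Mean spectral power of `samples` between low_hz and high_hz (naive DFT)."""
    n = len(samples)
    powers = []
    for k in range(1, n // 2):
        freq = k * sample_rate_hz / n
        if low_hz <= freq <= high_hz:
            # k-th DFT coefficient, computed directly from its definition.
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(samples)
            )
            powers.append(abs(coeff) ** 2 / n)
    return sum(powers) / len(powers) if powers else 0.0


def toy_should_run(samples, sample_rate_hz, threshold):
    """Hypothetical rule: switch the toy on while 13-30 Hz power exceeds a threshold."""
    return band_power(samples, sample_rate_hz, 13.0, 30.0) > threshold


# Synthetic one-second signals standing in for headset readings (assumption).
RATE = 128
in_band = [math.sin(2 * math.pi * 20 * t / RATE) for t in range(RATE)]   # 20 Hz tone
out_of_band = [math.sin(2 * math.pi * 10 * t / RATE) for t in range(RATE)]  # 10 Hz tone

print(toy_should_run(in_band, RATE, threshold=1.0))
print(toy_should_run(out_of_band, RATE, threshold=1.0))
```

A real headset would need filtering, artifact rejection and per-user calibration; the sketch only shows the idea of turning power in one frequency band into an on/off command.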

Jun 21, 2016

New book on Human Computer Confluence - FREE PDF!

Two pieces of good news for Positive Technology followers.

1) Our new book on Human Computer Confluence is out!

2) It can be downloaded for free here

Human-computer confluence refers to an invisible, implicit, embodied or even implanted interaction between humans and system components. New classes of user interfaces are emerging that make use of several sensors and are able to adapt their physical properties to the current situational context of users.

A key aspect of human-computer confluence is its potential for transforming human experience in the sense of bending, breaking and blending the barriers between the real, the virtual and the augmented, to allow users to experience their body and their world in new ways. Research on Presence, Embodiment and Brain-Computer Interface is already exploring these boundaries and asking questions such as: Can we seamlessly move between the virtual and the real? Can we assimilate fundamentally new senses through confluence?

The aim of this book is to explore the boundaries and intersections of the multidisciplinary field of HCC and discuss its potential applications in different domains, including healthcare, education, training and even arts.

DOWNLOAD THE FULL BOOK HERE AS OPEN ACCESS

Please cite as follows:

Andrea Gaggioli, Alois Ferscha, Giuseppe Riva, Stephen Dunne, Isabell Viaud-Delmon (2016). Human computer confluence: transforming human experience through symbiotic technologies. Warsaw: De Gruyter. ISBN 9783110471120.

09:53 Posted in AI & robotics, Augmented/mixed reality, Biofeedback & neurofeedback, Blue sky, Brain training & cognitive enhancement, Brain-computer interface, Cognitive Informatics, Cyberart, Cybertherapy, Emotional computing, Enactive interfaces, Future interfaces, ICT and complexity, Neurotechnology & neuroinformatics, Positive Technology events, Research tools, Self-Tracking, Serious games, Technology & spirituality, Telepresence & virtual presence, Virtual worlds, Wearable & mobile | Permalink

May 26, 2016

From User Experience (UX) to Transformative User Experience (T-UX)

In 1999, Joseph Pine and James Gilmore wrote a seminal book titled “The Experience Economy” (Harvard Business School Press, Boston, MA) that theorized the shift from a service-based economy to an experience-based economy.

According to these authors, in the new experience economy the goal of the purchase is no longer to own a product (be it a good or service), but to use it in order to enjoy a compelling experience. An experience, thus, is a whole new type of offer: in contrast to commodities, goods and services, it is designed to be as personal and memorable as possible. Just as in a theatrical performance, companies stage meaningful events to engage customers in a memorable and personal way, by offering activities that provide engaging and rewarding experiences.

Indeed, if one looks back at the past ten years, the concept of experience has become more central to several fields, including tourism, architecture, and – perhaps more relevant for this column – to human-computer interaction, with the rise of “User Experience” (UX).

The concept of UX was introduced by Donald Norman in a 1995 article published in the CHI proceedings (D. Norman, J. Miller, A. Henderson: What You See, Some of What's in the Future, And How We Go About Doing It: HI at Apple Computer. Proceedings of CHI 1995, Denver, Colorado, USA). Norman argued that focusing exclusively on usability attributes (i.e. ease of use, efficacy, effectiveness) when designing an interactive product is not enough; one should take into account the whole experience of the user with the system, including users’ emotional and contextual needs. Since then, the UX concept has assumed an increasing importance in HCI. As McCarthy and Wright emphasized in their book “Technology as Experience” (MIT Press, 2004):

“In order to do justice to the wide range of influences that technology has in our lives, we should try to interpret the relationship between people and technology in terms of the felt life and the felt or emotional quality of action and interaction.” (p. 12).

However, according to Pine and Gilmore, experience may not be the last step of what they call the “Progression of Economic Value”. They speculated further into the future, identifying the “Transformation Economy” as the likely next phase. In their view, while experiences are essentially memorable events which stimulate the sensorial and emotional levels, transformations go much further in that they are the result of a series of experiences staged by companies to guide customers in learning, taking action and eventually achieving their aspirations and goals.

In Pine and Gilmore's terms, an aspirant is the individual who seeks advice for personal change (e.g. a better figure, a new career, and so forth), while the provider of this change (a dietitian, a university) is an elicitor. The elicitor guides the aspirant through a series of experiences which are designed with a certain purpose and goals. According to Pine and Gilmore, the main difference between an experience and a transformation is that the latter occurs when an experience is customized:

“When you customize an experience to make it just right for an individual - providing exactly what he needs right now - you cannot help changing that individual. When you customize an experience, you automatically turn it into a transformation, which companies create on top of experiences (recall that phrase: “a life-transforming experience”), just as they create experiences on top of services and so forth” (p. 244).

A further key difference between experiences and transformations concerns their effects: because an experience is inherently personal, no two people can have the same one. Likewise, no individual can undergo the same transformation twice: the second time it’s attempted, the individual would no longer be the same person (pp. 254-255).

But what will be the impact of this upcoming “transformation economy” on how people relate to technology? If in the experience economy the buzzword is “User Experience”, in the next stage the new buzzword might be “User Transformation”.

Indeed, we can see some initial signs of this shift. For example, FitBit and similar self-tracking gadgets are starting to offer personalized advice to foster enduring changes in users’ lifestyle; another example is from the fields of ambient intelligence and domotics, where there is an increasing focus on designing systems that are able to learn from the user’s behaviour (e.g. by tracking the movement of an elderly person in his home) to provide context-aware adaptive services (e.g. sending an alert when the user is at risk of falling).

But the most important ICT step towards the transformation economy is likely to come with the introduction of next-generation immersive virtual reality systems. Since these new systems are based on mobile devices (an example is the recent partnership between Oculus and Samsung), they are able to deliver VR experiences that incorporate information on the external/internal context of the user (e.g. time, location, temperature, mood etc) by using the sensors embedded in the mobile phone.

By personalizing the immersive experience with context-based information, it might be possible to induce higher levels of involvement and presence in the virtual environment. In the case of cyber-therapeutic applications, this could translate into the development of more effective, transformative virtual healing experiences.

Furthermore, the emergence of "symbiotic technologies", such as neuroprosthetic devices and neuro-biofeedback, is enabling a direct connection between the computer and the brain. Increasingly, these neural interfaces are moving from the biomedical domain to become consumer products. But unlike existing digital experiential products, symbiotic technologies have the potential to transform more radically basic human experiences.

Brain-computer interfaces, immersive virtual reality and augmented reality and their various combinations will allow users to create “personalized alterations” of experience. Just as nowadays we can download and install a number of “plug-ins”, i.e. apps to personalize our experience with hardware and software products, so very soon we may download and install new “extensions of the self”, or “experiential plug-ins” which will provide us with a number of options for altering/replacing/simulating our sensorial, emotional and cognitive processes.

Such mediated recombinations of human experience will result from the application of existing neuro-technologies in completely new domains. Although virtual reality and brain-computer interfaces were originally developed for applications in specific domains (e.g. military simulations, neurorehabilitation, etc), today the use of these technologies has been extended to other fields of application, ranging from entertainment to education.

In the field of biology, Stephen Jay Gould and Elizabeth Vrba (Paleobiology, 8, 4-15, 1982) defined “exaptation” as the process in which a feature acquires a function that was not acquired through natural selection. Likewise, the exaptation of neurotechnologies to the digital consumer market may lead to the rise of a novel “neuro-experience economy”, in which technology-mediated transformation of experience is the main product.

Just as a Genetically-Modified Organism (GMO) is an organism whose genetic material is altered using genetic-engineering techniques, so we could define a Technologically-Modified Experience (ETM) as a re-engineered experience resulting from the artificial manipulation of the neurobiological bases of sensorial, affective, and cognitive processes.

Clearly, the emergence of the transformative neuro-experience economy will not happen in weeks or months but rather in years. It will take some time before people find brain-computer devices on the shelves of electronics stores: most of these tools are still in the pre-commercial phase at best, and some are found only in laboratories.

Nevertheless, the mere possibility that such a scenario will sooner or later come to pass raises important questions that should be addressed before symbiotic technologies enter our lives: does technological alteration of human experience threaten the autonomy of individuals, or the authenticity of their lives? How can we help individuals decide which transformations are good or bad for them?

Answering these important questions will require the collaboration of many disciplines, including philosophy, computer ethics and, of course, cyberpsychology.

Oct 06, 2014

Bionic Vision Australia’s Bionic Eye Gives New Sight to People Blinded by Retinitis Pigmentosa

Via Medgadget

Bionic Vision Australia, a collaboration of researchers working on a bionic eye, has announced that its prototype implant has completed a two-year trial in patients with advanced retinitis pigmentosa. Three patients with profound vision loss received 24-channel suprachoroidal electrode implants that caused no noticeable serious side effects. Moreover, though this was not formally part of the study, the patients were able to see more light and to distinguish shapes that were invisible to them prior to implantation. The newly gained vision allowed them to improve how they navigated around objects and how well they were able to spot items on a tabletop.

The next step is to try out the latest 44-channel device in a clinical trial slated for next year and then move on to a 98-channel system that is currently in development.

“This study is critically important to the continuation of our research efforts and the results exceeded all our expectations,” Professor Mark Hargreaves, Chair of the BVA board, said in a statement. “We have demonstrated clearly that our suprachoroidal implants are safe to insert surgically and cause no adverse events once in place. Significantly, we have also been able to observe that our device prototype was able to evoke meaningful visual perception in patients with profound visual loss.”

Here’s one of the study participants using the bionic eye:

First direct brain-to-brain communication between human subjects

Via KurzweilAI.net

An international team of neuroscientists and robotics engineers has demonstrated the first direct remote brain-to-brain communication between two humans located 5,000 miles apart and communicating via the Internet, as reported in a paper recently published in PLOS ONE (open access).

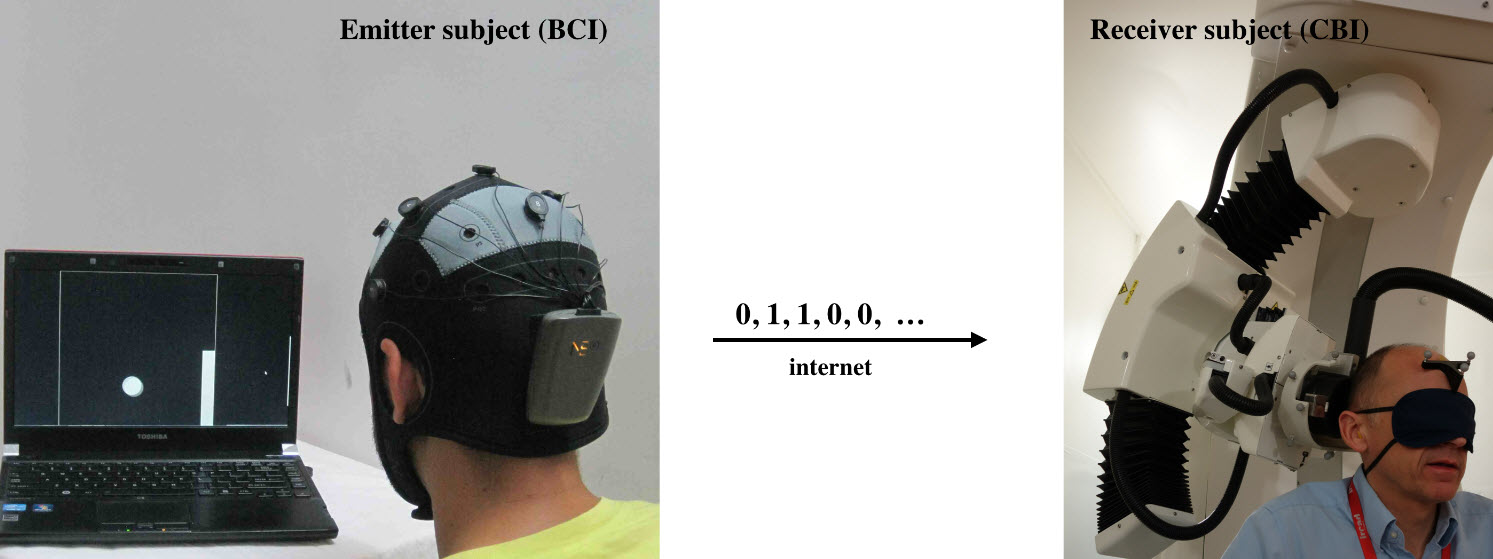

Emitter and receiver subjects with non-invasive devices supporting, respectively, a brain-computer interface (BCI), based on EEG changes, driven by motor imagery (left) and a computer-brain interface (CBI) based on the reception of phosphenes elicited by neuro-navigated TMS (right) (credit: Carles Grau et al./PLoS ONE)

In India, researchers encoded two words (“hola” and “ciao”) as binary strings and presented them as a series of cues on a computer monitor. They recorded the subject’s EEG signals as the subject was instructed to think about moving his feet (binary 0) or hands (binary 1). They then sent the recorded series of binary values in an email message to researchers in France, 5,000 miles away.

There, the binary strings were converted into a series of transcranial magnetic stimulation (TMS) pulses applied to a hotspot location in the right visual occipital cortex that either produced a phosphene (perceived flash of light) or not.
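The encoding chain described above (word, then binary string, then a feet/hands imagery cue on the emitter side and a phosphene/no-phosphene pulse on the receiver side) can be sketched in Python. This is only an illustration under stated assumptions: the post does not say how letters were mapped to bits, so the 8-bit ASCII encoding is an assumption, as is the choice that bit 1 corresponds to a phosphene; the names `word_to_bits`, `bits_to_word`, `IMAGERY_CUE` and `PHOSPHENE` are hypothetical.

```python
def word_to_bits(word):
    """Encode a word as a flat list of bits, 8 bits per ASCII character (assumption)."""
    bits = []
    for byte in word.encode("ascii"):
        # Most significant bit first.
        bits.extend((byte >> shift) & 1 for shift in range(7, -1, -1))
    return bits


def bits_to_word(bits):
    """Inverse of word_to_bits: regroup bits into bytes and decode them."""
    chars = []
    for i in range(0, len(bits), 8):
        value = 0
        for bit in bits[i:i + 8]:
            value = (value << 1) | bit
        chars.append(chr(value))
    return "".join(chars)


# Emitter side, as described in the post: feet imagery = 0, hands imagery = 1.
IMAGERY_CUE = {0: "feet", 1: "hands"}
# Receiver side: which bit elicits the phosphene is an assumption here.
PHOSPHENE = {0: False, 1: True}

bits = word_to_bits("hola")
cues = [IMAGERY_CUE[b] for b in bits]      # what the emitter is asked to imagine
flashes = [PHOSPHENE[b] for b in bits]     # what the receiver would perceive
received = [1 if seen else 0 for seen in flashes]
print(bits_to_word(received))
```

The round trip only succeeds if every bit is decoded correctly at both ends; the sketch does not model the classification errors that a real EEG or TMS stage would introduce.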

“We wanted to find out if one could communicate directly between two people by reading out the brain activity from one person and injecting brain activity into the second person, and do so across great physical distances by leveraging existing communication pathways,” explains coauthor Alvaro Pascual-Leone, MD, PhD, Director of the Berenson-Allen Center for Noninvasive Brain Stimulation at Beth Israel Deaconess Medical Center (BIDMC) and Professor of Neurology at Harvard Medical School.

A team of researchers from Starlab Barcelona, Spain and Axilum Robotics, Strasbourg, France conducted the experiment. A second similar experiment was conducted between individuals in Spain and France.

“We believe these experiments represent an important first step in exploring the feasibility of complementing or bypassing traditional language-based or other motor/PNS mediated means in interpersonal communication,” the researchers say in the paper.

“Although certainly limited in nature (e.g., the bit rates achieved in our experiments were modest even by current BCI (brain-computer interface) standards, mostly due to the dynamics of the precise CBI (computer-brain interface) implementation), these initial results suggest new research directions, including the non-invasive direct transmission of emotions and feelings or the possibility of sense synthesis in humans — that is, the direct interface of arbitrary sensors with the human brain using brain stimulation, as previously demonstrated in animals with invasive methods.

Brain-to-brain (B2B) communication system overview. On the left, the BCI subsystem is shown schematically, including electrodes over the motor cortex and the EEG amplifier/transmitter wireless box in the cap. Motor imagery of the feet codes the bit value 0, of the hands codes bit value 1. On the right, the CBI system is illustrated, highlighting the role of coil orientation for encoding the two bit values. Communication between the BCI and CBI components is mediated by the Internet. (Credit: Carles Grau et al./PLoS ONE)

“The proposed technology could be extended to support a bi-directional dialogue between two or more mind/brains (namely, by the integration of EEG and TMS systems in each subject). In addition, we speculate that future research could explore the use of closed mind-loops in which information associated to voluntary activity from a brain area or network is captured and, after adequate external processing, used to control other brain elements in the same subject. This approach could lead to conscious synthetically mediated modulation of phenomena best detected subjectively by the subject, including emotions, pain and psychotic, depressive or obsessive-compulsive thoughts.

“Finally, we anticipate that computers in the not-so-distant future will interact directly with the human brain in a fluent manner, supporting both computer- and brain-to-brain communication routinely. The widespread use of human brain-to-brain technologically mediated communication will create novel possibilities for human interrelation with broad social implications that will require new ethical and legislative responses.”

This work was partly supported by the EU FP7 FET Open HIVE project, the Starlab Kolmogorov project, and the Neurology Department of the Hospital de Bellvitge.

Aug 03, 2014

Detecting awareness in patients with disorders of consciousness using a hybrid brain-computer interface

Detecting awareness in patients with disorders of consciousness using a hybrid brain-computer interface.

J Neural Eng. 2014 Aug 1;11(5):056007

Authors: Pan J, Xie Q, He Y, Wang F, Di H, Laureys S, Yu R, Li Y

Abstract.

Objective. The bedside detection of potential awareness in patients with disorders of consciousness (DOC) currently relies only on behavioral observations and tests; however, the misdiagnosis rates in this patient group are historically relatively high. In this study, we proposed a visual hybrid brain-computer interface (BCI) combining P300 and steady-state visual evoked potential (SSVEP) responses to detect awareness in severely brain injured patients.

Approach. Four healthy subjects, seven DOC patients who were in a vegetative state (VS, n = 4) or minimally conscious state (MCS, n = 3), and one locked-in syndrome (LIS) patient attempted a command-following experiment. In each experimental trial, two photos were presented to each patient; one was the patient's own photo, and the other photo was unfamiliar. The patients were instructed to focus on their own or the unfamiliar photos. The BCI system determined which photo the patient focused on with both P300 and SSVEP detections.

Main results. Four healthy subjects, one of the 4 VS, one of the 3 MCS, and the LIS patient were able to selectively attend to their own or the unfamiliar photos (classification accuracy, 66-100%). Two additional patients (one VS and one MCS) failed to attend the unfamiliar photo (50-52%) but achieved significant accuracies for their own photo (64-68%). All other patients failed to show any significant response to commands (46-55%).

Significance. Through the hybrid BCI system, command following was detected in four healthy subjects, two of 7 DOC patients, and one LIS patient. We suggest that the hybrid BCI system could be used as a supportive bedside tool to detect awareness in patients with DOC.
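With two photos per trial, a patient who is not following commands would be expected to score around 50%, which is why accuracies such as 46-55% are reported as non-significant. One common way to check whether an accuracy is above chance is a one-sided binomial test, sketched below in Python. This is not necessarily the test the authors used, and the trial counts in the example are hypothetical, since the abstract does not give them.

```python
from math import comb


def p_value_above_chance(correct, trials, chance=0.5):
    """One-sided binomial p-value: P(X >= correct) if the subject were guessing."""
    return sum(
        comb(trials, k) * chance ** k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )


# Hypothetical example: 50 trials per subject (the abstract does not state this).
for correct in (26, 33):
    p = p_value_above_chance(correct, 50)
    verdict = "above chance" if p < 0.05 else "not distinguishable from chance"
    print(f"{correct}/50 correct ({correct / 50:.0%}): p = {p:.3f}, {verdict}")
```

The same accuracy can be significant or not depending on the number of trials, so the percentages in the abstract cannot be re-evaluated without the actual trial counts.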

22:34 Posted in Brain-computer interface, Cybertherapy, Research tools | Permalink | Comments (0)

Apr 15, 2014

Brain-computer interfaces: a powerful tool for scientific inquiry

Brain-computer interfaces: a powerful tool for scientific inquiry.

Curr Opin Neurobiol. 2014 Apr;25C:70-75

Authors: Wander JD, Rao RP

Abstract. Brain-computer interfaces (BCIs) are devices that record from the nervous system, provide input directly to the nervous system, or do both. Sensory BCIs such as cochlear implants have already had notable clinical success and motor BCIs have shown great promise for helping patients with severe motor deficits. Clinical and engineering outcomes aside, BCIs can also be tremendously powerful tools for scientific inquiry into the workings of the nervous system. They allow researchers to inject and record information at various stages of the system, permitting investigation of the brain in vivo and facilitating the reverse engineering of brain function. Most notably, BCIs are emerging as a novel experimental tool for investigating the tremendous adaptive capacity of the nervous system.

23:14 Posted in Brain-computer interface | Permalink | Comments (0)

A Hybrid Brain Computer Interface System Based on the Neurophysiological Protocol and Brain-actuated Switch for Wheelchair Control

A Hybrid Brain Computer Interface System Based on the Neurophysiological Protocol and Brain-actuated Switch for Wheelchair Control.

J Neurosci Methods. 2014 Apr 5;

Authors: Cao L, Li J, Ji H, Jiang C

BACKGROUND: Brain Computer Interfaces (BCIs) are developed to translate brain waves into machine instructions for external devices control. Recently, hybrid BCI systems are proposed for the multi-degree control of a real wheelchair to improve the systematical efficiency of traditional BCIs. However, it is difficult for existing hybrid BCIs to implement the multi-dimensional control in one command cycle.

NEW METHOD: This paper proposes a novel hybrid BCI system that combines motor imagery (MI)-based bio-signals and steady-state visual evoked potentials (SSVEPs) to control the speed and direction of a real wheelchair synchronously. Furthermore, a hybrid modalities-based switch is firstly designed to turn on/off the control system of the wheelchair.

RESULTS: Two experiments were performed to assess the proposed BCI system. One was implemented for training and the other one conducted a wheelchair control task in the real environment. All subjects completed these tasks successfully and no collisions occurred in the real wheelchair control experiment.

COMPARISON WITH EXISTING METHOD(S): The protocol of our BCI gave much more control commands than those of previous MI and SSVEP-based BCIs. Comparing with other BCI wheelchair systems, the superiority reflected by the index of path length optimality ratio validated the high efficiency of our control strategy.

CONCLUSIONS: The results validated the efficiency of our hybrid BCI system to control the direction and speed of a real wheelchair as well as the reliability of hybrid signals-based switch control.

23:03 Posted in Brain-computer interface, Neurotechnology & neuroinformatics | Permalink | Comments (0)

Mar 02, 2014

3D Thought controlled environment via Interaxon

In this demo video, artist Alex McLeod shows an environment he designed for Interaxon to use at CES in 2011 (interaxon.ca/CES#).

The glasses display the scene in 3D, and attached sensors read the user's brain states, which control elements of the scene.

3D Thought controlled environment via Interaxon from Alex McLeod on Vimeo.

Dec 24, 2013

Speaking and cognitive distractions during EEG-based brain control of a virtual neuroprosthesis-arm

Speaking and cognitive distractions during EEG-based brain control of a virtual neuroprosthesis-arm.

J Neuroeng Rehabil. 2013 Dec 21;10(1):116

Authors: Foldes ST, Taylor DM

BACKGROUND: Brain-computer interface (BCI) systems have been developed to provide paralyzed individuals the ability to command the movements of an assistive device using only their brain activity. BCI systems are typically tested in a controlled laboratory environment where the user is focused solely on the brain-control task. However, for practical use in everyday life people must be able to use their brain-controlled device while mentally engaged with the cognitive responsibilities of daily activities and while compensating for any inherent dynamics of the device itself. BCIs that use electroencephalography (EEG) for movement control are often assumed to require significant mental effort, thus preventing users from thinking about anything else while using their BCI. This study tested the impact of cognitive load as well as speaking on the ability to use an EEG-based BCI.

FINDINGS: Six participants controlled the two-dimensional (2D) movements of a simulated neuroprosthesis-arm under three different levels of cognitive distraction. The two higher cognitive load conditions also required simultaneously speaking during BCI use. On average, movement performance declined during higher levels of cognitive distraction, but only by a limited amount. Movement completion time increased by 7.2%, the percentage of targets successfully acquired declined by 11%, and path efficiency declined by 8.6%. Only the decline in percentage of targets acquired and path efficiency were statistically significant (p < 0.05).

CONCLUSION: People who have relatively good movement control of an EEG-based BCI may be able to speak and perform other cognitively engaging activities with only a minor drop in BCI-control performance.
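The abstract does not define "path efficiency". A common definition for 2D cursor or arm movements, assumed here for illustration only, is the straight-line distance from start to target divided by the distance actually travelled, so that 1.0 means a perfectly direct movement. A minimal Python sketch, with hypothetical function names and made-up trajectories:

```python
import math


def path_length(points):
    """Total distance travelled along a sequence of (x, y) points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def path_efficiency(points):
    """Straight-line start-to-end distance divided by distance actually travelled."""
    travelled = path_length(points)
    if travelled == 0:
        return 1.0  # no movement: treat as trivially direct
    return math.dist(points[0], points[-1]) / travelled


# Made-up trajectories toward a target at (3, 4).
direct = [(0, 0), (3, 4)]
detour = [(0, 0), (3, 0), (3, 4)]

print(path_efficiency(direct))
print(path_efficiency(detour))
```

Under this definition a detour lowers the score toward zero, which matches the direction of the effect reported in the findings, although the study's exact metric may differ.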

21:03 Posted in Brain-computer interface, Neurotechnology & neuroinformatics | Permalink | Comments (0)

Dec 08, 2013

How to use mind-controlled robots in manufacturing, medicine

via KurzweilAI

University at Buffalo researchers are developing brain-computer interface (BCI) devices to mentally control robots.

“The technology has practical applications that we’re only beginning to explore,” said Thenkurussi “Kesh” Kesavadas, PhD, UB professor of mechanical and aerospace engineering and director of UB’s Virtual Reality Laboratory. “For example, it could help paraplegic patients to control assistive devices, or it could help factory workers perform advanced manufacturing tasks.”

Most BCI research has involved expensive, invasive BCI devices that are inserted into the brain, and used mostly to help disabled people.

UB research relies on a relatively inexpensive ($750), non-invasive external device (Emotiv EPOC). It reads EEG brain activity with 14 sensors and transmits the signal wirelessly to a computer, which then sends signals to the robot to control its movements.

Kesavadas recently demonstrated the technology with Pramod Chembrammel, a doctoral student in his lab. Chembrammel trained with the instrument for a few days, then used the device to control a robotic arm.

He used the arm to insert a wood peg into a hole and rotate the peg. “It was incredible to see the robot respond to my thoughts,” Chembrammel said. “It wasn’t even that difficult to learn how to use the device.”

The video (below) shows that a simple set of instructions can be combined to execute more complex robotic actions, Kesavadas said. Such robots could be used by factory workers to perform hands-free assembly of products, or carry out tasks like drilling or welding.

The potential advantage, Kesavadas said, is that BCI-controlled devices could reduce the tedium of performing repetitious tasks and improve worker safety and productivity. The devices can also leverage the worker’s decision-making skills, such as identifying a faulty part in an automated assembly line.

23:10 Posted in AI & robotics, Brain-computer interface | Permalink | Comments (0)

Nov 29, 2013

Real-time Neurofeedback Using Functional MRI Could Improve Down-Regulation of Amygdala Activity During Emotional Stimulation

Real-time Neurofeedback Using Functional MRI Could Improve Down-Regulation of Amygdala Activity During Emotional Stimulation: A Proof-of-Concept Study.

Brain Topogr. 2013 Nov 16;

Authors: Brühl AB, Scherpiet S, Sulzer J, Stämpfli P, Seifritz E, Herwig U

Abstract. The amygdala is a central target of emotion regulation. It is overactive and dysregulated in affective and anxiety disorders and amygdala activity normalizes with successful therapy of the symptoms. However, a considerable percentage of patients do not reach remission within acceptable duration of treatment. The amygdala could therefore represent a promising target for real-time functional magnetic resonance imaging (rtfMRI) neurofeedback. rtfMRI neurofeedback directly improves the voluntary regulation of localized brain activity. At present, most rtfMRI neurofeedback studies have trained participants to increase activity of a target, i.e. up-regulation. However, in the case of the amygdala, down-regulation is supposedly more clinically relevant. Therefore, we developed a task that trained participants to down-regulate activity of the right amygdala while being confronted with amygdala stimulation, i.e. negative emotional faces. The activity in the functionally-defined region was used as online visual feedback in six healthy subjects instructed to minimize this signal using reality checking as emotion regulation strategy. Over a period of four training sessions, participants significantly increased down-regulation of the right amygdala compared to a passive viewing condition to control for habituation effects. This result supports the concept of using rtfMRI neurofeedback training to control brain activity during relevant stimulation, specifically in the case of emotion, and has implications towards clinical treatment of emotional disorders.

00:06 Posted in Brain-computer interface | Permalink | Comments (0)

Nov 28, 2013

Effect of mindfulness meditation on brain-computer interface performance

Effect of mindfulness meditation on brain-computer interface performance.

Conscious Cogn. 2013 Nov 22;23C:12-21

Authors: Tan LF, Dienes Z, Jansari A, Goh SY

Abstract. Electroencephalogram based Brain-Computer Interfaces (BCIs) enable stroke and motor neuron disease patients to communicate and control devices. Mindfulness meditation has been claimed to enhance metacognitive regulation. The current study explores whether mindfulness meditation training can thus improve the performance of BCI users. To eliminate the possibility of expectation of improvement influencing the results, we introduced a music training condition. A norming study found that both meditation and music interventions elicited clear expectations for improvement on the BCI task, with the strength of expectation being closely matched. In the main 12-week intervention study, seventy-six healthy volunteers were randomly assigned to three groups: a meditation training group; a music training group; and a no-treatment control group. The mindfulness meditation training group obtained a significantly higher BCI accuracy compared to both the music training and no-treatment control groups after the intervention, indicating effects of meditation above and beyond expectancy effects.

23:40 Posted in Brain-computer interface, Mental practice & mental simulation | Permalink | Comments (0)

Nov 16, 2013

Monkeys Control Avatar’s Arms Through Brain-Machine Interface

Via Medgadget

Researchers at Duke University have reported in the journal Science Translational Medicine that they were able to train monkeys to control two virtual limbs through a brain-computer interface (BCI). The rhesus monkeys initially used joysticks to become comfortable moving the avatar’s arms, but later the brain-computer interfaces implanted in their brains were activated to allow the monkeys to drive the avatar using only their minds. Two years ago the same team was able to train monkeys to control one arm, but the complexity of controlling two arms required the development of a new algorithm for reading and filtering the signals. Moreover, the monkey brains themselves showed great adaptation to the training with the BCI, building new neural pathways to help improve how the monkeys moved the virtual arms. As the authors of the study note in the abstract, “These findings should help in the design of more sophisticated BMIs capable of enabling bimanual motor control in human patients.”

15:56 Posted in Brain-computer interface, Telepresence & virtual presence, Virtual worlds | Permalink | Comments (0)

Nov 03, 2013

Neurocam wearable camera reads your brainwaves and records what interests you

Via KurzweilAI.net

The neurocam is the world’s first wearable camera system that automatically records what interests you, based on brainwaves, DigInfo TV reports.

It consists of a headset with a brain-wave sensor and uses the iPhone’s camera to record a 5-second GIF animation. It could also be useful for life-logging.

The algorithm for quantifying brain waves was co-developed by Associate Professor Mitsukura at Keio University.

The project team plans to create an emotional interface.

22:59 Posted in Brain-computer interface, Emotional computing, Wearable & mobile | Permalink | Comments (0)

Aug 07, 2013

Pupil responses allow communication in locked-in syndrome patients

Pupil responses allow communication in locked-in syndrome patients.

Josef Stoll et al., Current Biology, Volume 23, Issue 15, R647-R648, 5 August 2013

For patients with severe motor disabilities, a robust means of communication is a crucial factor for their well-being. We report here that pupil size measured by a bedside camera can be used to communicate with patients with locked-in syndrome. With the same protocol we demonstrate command-following for a patient in a minimally conscious state, suggesting its potential as a diagnostic tool for patients whose state of consciousness is in question. Importantly, neither training nor individual adjustment of our system’s decoding parameters were required for successful decoding of patients’ responses.

Paper full text PDF

Image credit: Flickr user Beth77

13:25 Posted in Brain-computer interface, Neurotechnology & neuroinformatics | Permalink | Comments (0)

May 26, 2013

A Hybrid Brain-Computer Interface-Based Mail Client

A Hybrid Brain-Computer Interface-Based Mail Client.

Comput Math Methods Med. 2013;2013:750934

Authors: Yu T, Li Y, Long J, Li F

Abstract. Brain-computer interface-based communication plays an important role in brain-computer interface (BCI) applications; electronic mail is one of the most common communication tools. In this study, we propose a hybrid BCI-based mail client that implements electronic mail communication by means of real-time classification of multimodal features extracted from scalp electroencephalography (EEG). With this BCI mail client, users can receive, read, write, and attach files to their mail. Using a BCI mouse that utilizes hybrid brain signals, that is, motor imagery and P300 potential, the user can select and activate the function keys and links on the mail client graphical user interface (GUI). An adaptive P300 speller is employed for text input. The system has been tested with 6 subjects, and the experimental results validate the efficacy of the proposed method.

19:33 Posted in Brain-computer interface | Permalink | Comments (0)

Mar 03, 2013

Brain-to-brain communication between rats achieved

From Duke Medicine News and Communications

Researchers at Duke University Medical Center in the US report in the February 28, 2013 issue of Scientific Reports the successful wiring together of sensory areas in the brains of two rats. The result of the experiment is that one rat will respond to the experiences to which the other is exposed.

The results of these projects suggest the future potential for linking multiple brains to form what the research team is calling an "organic computer," which could allow sharing of motor and sensory information among groups of animals.

"Our previous studies with brain-machine interfaces had convinced us that the rat brain was much more plastic than we had previously thought," said Miguel Nicolelis, M.D., PhD, lead author of the publication and professor of neurobiology at Duke University School of Medicine. "In those experiments, the rat brain was able to adapt easily to accept input from devices outside the body and even learn how to process invisible infrared light generated by an artificial sensor. So, the question we asked was, ‘if the brain could assimilate signals from artificial sensors, could it also assimilate information input from sensors from a different body?’"

To test this hypothesis, the researchers first trained pairs of rats to solve a simple problem: to press the correct lever when an indicator light above the lever switched on, which rewarded the rats with a sip of water. They next connected the two animals' brains via arrays of microelectrodes inserted into the area of the cortex that processes motor information.

One of the two rodents was designated as the "encoder" animal. This animal received a visual cue that showed it which lever to press in exchange for a water reward. Once this “encoder” rat pressed the right lever, a sample of its brain activity that coded its behavioral decision was translated into a pattern of electrical stimulation that was delivered directly into the brain of the second rat, known as the "decoder" animal.

The decoder rat had the same types of levers in its chamber, but it did not receive any visual cue indicating which lever it should press to obtain a reward. Therefore, to press the correct lever and receive the reward it craved, the decoder rat would have to rely on the cue transmitted from the encoder via the brain-to-brain interface.

The researchers then conducted trials to determine how well the decoder animal could decipher the brain input from the encoder rat to choose the correct lever. The decoder rat ultimately achieved a maximum success rate of about 70 percent, only slightly below the possible maximum success rate of 78 percent that the researchers had theorized was achievable based on success rates of sending signals directly to the decoder rat’s brain.

Importantly, the communication provided by this brain-to-brain interface was two-way. For instance, the encoder rat did not receive a full reward if the decoder rat made a wrong choice. The result of this peculiar contingency, said Nicolelis, led to the establishment of a "behavioral collaboration" between the pair of rats.

"We saw that when the decoder rat committed an error, the encoder basically changed both its brain function and behavior to make it easier for its partner to get it right," Nicolelis said. "The encoder improved the signal-to-noise ratio of its brain activity that represented the decision, so the signal became cleaner and easier to detect. And it made a quicker, cleaner decision to choose the correct lever to press. Invariably, when the encoder made those adaptations, the decoder got the right decision more often, so they both got a better reward."

In a second set of experiments, the researchers trained pairs of rats to distinguish between a narrow or wide opening using their whiskers. If the opening was narrow, they were taught to nose-poke a water port on the left side of the chamber to receive a reward; for a wide opening, they had to poke a port on the right side.

The researchers then divided the rats into encoders and decoders. The decoders were trained to associate stimulation pulses with the left reward poke as the correct choice, and an absence of pulses with the right reward poke as correct. During trials in which the encoder detected the opening width and transmitted the choice to the decoder, the decoder had a success rate of about 65 percent, significantly above chance.
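Whether a rate such as 65 percent counts as "significantly above chance" depends on the number of trials, which this summary does not report. A minimal sketch of the check, assuming a two-choice task with a 50 percent chance level and a hypothetical 100 trials; the function name `binomial_tail` and all trial counts are illustrative and not taken from the study:

```python
from math import comb


def binomial_tail(successes: int, trials: int) -> float:
    """Probability of at least `successes` correct choices in `trials`
    two-choice trials if the animal were purely guessing (chance = 0.5)."""
    favourable = sum(comb(trials, k) for k in range(successes, trials + 1))
    return favourable / 2 ** trials


# Hypothetical numbers: 65 correct out of 100 trials.
# The real trial count is not given in the text above.
p_value = binomial_tail(65, 100)
print(f"P(at least 65 of 100 correct by guessing) = {p_value:.4f}")
```

The same 65 percent rate over only 20 trials (13 of 20) gives a tail probability of about 0.13, which would not pass a conventional 0.05 threshold, so the claim rests on the decoder having been tested over many trials.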

To test the transmission limits of the brain-to-brain communication, the researchers placed an encoder rat in Brazil, at the Edmond and Lily Safra International Institute of Neuroscience of Natal (ELS-IINN), and transmitted its brain signals over the Internet to a decoder rat in Durham, N.C. They found that the two rats could still work together on the tactile discrimination task.

"So, even though the animals were on different continents, with the resulting noisy transmission and signal delays, they could still communicate," said Miguel Pais-Vieira, PhD, a postdoctoral fellow and first author of the study. "This tells us that it could be possible to create a workable network of animal brains distributed in many different locations."

Nicolelis added, "These experiments demonstrated the ability to establish a sophisticated, direct communication linkage between rat brains, and that the decoder brain is working as a pattern-recognition device. So basically, we are creating an organic computer that solves a puzzle."

"But in this case, we are not inputting instructions, but rather only a signal that represents a decision made by the encoder, which is transmitted to the decoder’s brain which has to figure out how to solve the puzzle. So, we are creating a single central nervous system made up of two rat brains," said Nicolelis. He pointed out that, in theory, such a system is not limited to a pair of brains, but instead could include a network of brains, or “brain-net.” Researchers at Duke and at the ELS-IINN are now working on experiments to link multiple animals cooperatively to solve more complex behavioral tasks.

"We cannot predict what kinds of emergent properties would appear when animals begin interacting as part of a brain-net. In theory, you could imagine that a combination of brains could provide solutions that individual brains cannot achieve by themselves," continued Nicolelis. Such a connection might even mean that one animal would incorporate another's sense of "self," he said.

"In fact, our studies of the sensory cortex of the decoder rats in these experiments showed that the decoder's brain began to represent in its tactile cortex not only its own whiskers, but the encoder rat's whiskers, too. We detected cortical neurons that responded to both sets of whiskers, which means that the rat created a second representation of a second body on top of its own." Basic studies of such adaptations could lead to a new field that Nicolelis calls the "neurophysiology of social interaction."

Such complex experiments will be enabled by the laboratory's ability to record brain signals from almost 2,000 brain cells at once. The researchers hope to record the electrical activity produced simultaneously by 10,000 to 30,000 cortical neurons in the next five years.

Such massive brain recordings will enable more precise control of motor neuroprostheses—such as those being developed by the Walk Again Project—to restore motor control to paralyzed people, Nicolelis said.

More to explore:

Pais-Vieira, M. et al. Sci. Rep. 3, 1319 (2013).

14:20 Posted in Brain-computer interface, Future interfaces | Permalink | Comments (0)

Jul 14, 2012

Robot avatar body controlled by thought alone

Via: New Scientist

For the first time, a person lying in an fMRI machine has controlled a robot hundreds of kilometers away using thought alone.

"The ultimate goal is to create a surrogate, like in Avatar, although that’s a long way off yet," says Abderrahmane Kheddar, director of the joint robotics laboratory at the National Institute of Advanced Industrial Science and Technology in Tsukuba, Japan.

Teleoperated robots, those that can be remotely controlled by a human, have been around for decades. Kheddar and his colleagues are going a step further. “True embodiment goes far beyond classical telepresence, by making you feel that the thing you are embodying is part of you,” says Kheddar. “This is the feeling we want to reach.”

To attempt this feat, researchers with the international Virtual Embodiment and Robotic Re-embodiment project used fMRI to scan the brain of university student Tirosh Shapira as he imagined moving different parts of his body. He attempted to direct a virtual avatar by thinking of moving his left or right hand or his legs.

The scanner measures changes in blood flow to the brain’s primary motor cortex; from these measurements the team built an algorithm that could distinguish between the imagined movements. The commands were then sent via an internet connection to a small robot at the Béziers Technology Institute in France.

The set-up allowed Shapira to control the robot in near real time with his thoughts, while a camera on the robot’s head allowed him to see from the robot’s perspective. When he thought of moving his left or right hand, the robot moved 30 degrees to the left or right. Imagining moving his legs made the robot walk forward.
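The mapping from imagined movement to robot action described above can be sketched as a small lookup. This is an illustration only: the names `RobotState`, `apply_imagery`, and the imagery labels are hypothetical, the sign convention for heading is an assumption, and the fMRI classifier that would produce the labels is not modelled.

```python
from dataclasses import dataclass

# From the article: imagining a left or right hand movement turns the robot 30 degrees.
TURN_STEP_DEGREES = 30


@dataclass(frozen=True)
class RobotState:
    heading_degrees: int = 0  # assumed convention: positive means turned to the right
    steps_forward: int = 0


def apply_imagery(state: RobotState, imagery: str) -> RobotState:
    """Return the robot state after one decoded imagery label (hypothetical labels)."""
    if imagery == "left_hand":
        return RobotState(state.heading_degrees - TURN_STEP_DEGREES, state.steps_forward)
    if imagery == "right_hand":
        return RobotState(state.heading_degrees + TURN_STEP_DEGREES, state.steps_forward)
    if imagery == "legs":
        return RobotState(state.heading_degrees, state.steps_forward + 1)
    raise ValueError(f"unknown imagery label: {imagery!r}")


# Example: two left turns, one step forward, one right turn.
state = RobotState()
for label in ["left_hand", "left_hand", "legs", "right_hand"]:
    state = apply_imagery(state, label)
print(state)
```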

Read further at: http://www.newscientist.com/article/mg21528725.900-robot-avatar-body-controlled-by-thought-alone.html

18:51 Posted in Brain-computer interface, Future interfaces | Permalink | Comments (0)

Jul 05, 2012

A Real-Time fMRI-Based Spelling Device Immediately Enabling Robust Motor-Independent Communication

A Real-Time fMRI-Based Spelling Device Immediately Enabling Robust Motor-Independent Communication.

Curr Biol. 2012 Jun 27

Authors: Sorger B, Reithler J, Dahmen B, Goebel R

Human communication entirely depends on the functional integrity of the neuromuscular system. This is devastatingly illustrated in clinical conditions such as the so-called locked-in syndrome (LIS) [1], in which severely motor-disabled patients become incapable of communicating naturally while being fully conscious and awake. For the last 20 years, research on motor-independent communication has focused on developing brain-computer interfaces (BCIs) implementing neuroelectric signals for communication (e.g., [2-7]), and BCIs based on electroencephalography (EEG) have already been applied successfully to concerned patients [8-11]. However, not all patients achieve proficiency in EEG-based BCI control [12]. Thus, more recently, hemodynamic brain signals have also been explored for BCI purposes [13-16]. Here, we introduce the first spelling device based on fMRI. By exploiting spatiotemporal characteristics of hemodynamic responses, evoked by performing differently timed mental imagery tasks, our novel letter encoding technique allows translating any freely chosen answer (letter-by-letter) into reliable and differentiable single-trial fMRI signals. Most importantly, automated letter decoding in real time enables back-and-forth communication within a single scanning session. Because the suggested spelling device requires only little effort and pretraining, it is immediately operational and possesses high potential for clinical applications, both in terms of diagnostics and establishing short-term communication with nonresponsive and severely motor-impaired patients.
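The abstract does not spell out the letter encoding. One way a scheme based on "differently timed mental imagery tasks" could work is to give each character a unique combination of task, onset delay, and duration. The sketch below assumes three tasks, three delays, and three durations, which yields 27 codes (26 letters plus a space); the task names and timing values are hypothetical choices for illustration, not parameters confirmed by the text above.

```python
from itertools import product
from string import ascii_uppercase

# Assumed design for illustration only; the abstract gives none of these values.
TASKS = ("motor_imagery", "mental_calculation", "inner_speech")
ONSET_DELAYS_S = (0, 10, 20)
DURATIONS_S = (10, 20, 30)

CHARACTERS = " " + ascii_uppercase  # 27 characters: space plus A-Z

# Each character maps to one (task, onset delay, duration) triple.
ENCODE = dict(zip(CHARACTERS, product(TASKS, ONSET_DELAYS_S, DURATIONS_S)))
DECODE = {code: char for char, code in ENCODE.items()}


def encode(text: str) -> list:
    """Turn text into the sequence of imagery instructions a user would perform."""
    return [ENCODE[char] for char in text.upper()]


def decode(codes: list) -> str:
    """Recover text from a sequence of classified (task, delay, duration) triples."""
    return "".join(DECODE[code] for code in codes)


print(encode("hi"))
print(decode(encode("yes no")))
```

In a real system the decoding step would have to classify the task, onset, and duration from noisy single-trial fMRI signals; here the triples are passed through unchanged to show only the codebook idea.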

23:24 Posted in Brain-computer interface, Cybertherapy, Neurotechnology & neuroinformatics | Permalink | Comments (0)