Oct 18, 2014

New Technique Helps Diagnose Consciousness in Locked-in Patients

Via Medgadget

Brain networks in two behaviourally similar vegetative patients (left and middle), one of whom imagined playing tennis (middle panel), alongside a healthy adult (right panel). Credit: Srivas Chennu

People locked into a vegetative state due to disease or injury are a major mystery for medical science. Some may be fully unconscious, while others remain aware of what’s going on around them but can’t speak or move to show it. Now scientists at Cambridge have reported in the journal PLOS Computational Biology on a new technique that can help identify locked-in people who can still hear and retain their consciousness.

Some details from the study abstract:

We devised a novel topographical metric, termed modular span, which showed that the alpha network modules in patients were also spatially circumscribed, lacking the structured long-distance interactions commonly observed in the healthy controls. Importantly however, these differences between graph-theoretic metrics were partially reversed in delta and theta band networks, which were also significantly more similar to each other in patients than controls. Going further, we found that metrics of alpha network efficiency also correlated with the degree of behavioural awareness. Intriguingly, some patients in behaviourally unresponsive vegetative states who demonstrated evidence of covert awareness with functional neuroimaging stood out from this trend: they had alpha networks that were remarkably well preserved and similar to those observed in the controls. Taken together, our findings inform current understanding of disorders of consciousness by highlighting the distinctive brain networks that characterise them. In the significant minority of vegetative patients who follow commands in neuroimaging tests, they point to putative network mechanisms that could support cognitive function and consciousness despite profound behavioural impairment.
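To give a rough feel for what a "network efficiency" metric measures, here is a minimal sketch of global efficiency, one common graph-theoretic definition: the average of the inverse shortest-path length over all pairs of nodes. The abstract does not say which efficiency measure was used, so this choice is an assumption, and the two four-node graphs are toy examples, not data from the study.

```python
from collections import deque

def shortest_path_lengths(adj, source):
    """Breadth-first search: hop counts from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbour in adj[node]:
            if neighbour not in dist:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return dist

def global_efficiency(adj):
    """Mean of 1/d(i, j) over ordered pairs i != j; unreachable pairs contribute 0."""
    n = len(adj)
    if n < 2:
        return 0.0
    total = 0.0
    for source in adj:
        for target, d in shortest_path_lengths(adj, source).items():
            if target != source:
                total += 1.0 / d
    return total / (n * (n - 1))

# Toy networks (hypothetical): a chain with only local links,
# and the same chain closed into a ring by one extra link between its ends.
chain = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}

print(round(global_efficiency(chain), 3))
print(round(global_efficiency(ring), 3))
```

In this toy case the ring scores higher than the chain, because the extra link shortens the path between the two ends. That is the intuition behind reading higher efficiency as better-integrated long-distance interaction.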

Study in PLOS Computational Biology: Spectral Signatures of Reorganised Brain Networks in Disorders of Consciousness

Oct 17, 2014

Leia Display System - promo video HD short version

www.leiadisplay.com

www.facebook.com/LeiaDisplaySystem

21:11 Posted in Telepresence & virtual presence

Oct 16, 2014

TIME: Fear, Misinformation, and Social Media Complicate Ebola Fight

From TIME

Based on Facebook and Twitter chatter, it can seem like Ebola is everywhere. Following the first diagnosis of an Ebola case in the United States on Sept. 30, mentions of the virus on Twitter leapt from about 100 per minute to more than 6,000. Cautious health officials have tested potential cases in Newark, Miami Beach and Washington D.C., sparking more worry. Though the patients all tested negative, some people are still tweeting as if the disease is running rampant in these cities. In Iowa the Department of Public Health was forced to issue a statement dispelling social media rumors that Ebola had arrived in the state. Meanwhile there has been a constant stream of posts saying that Ebola can be spread through the air, water, or food, which are all inaccurate claims.

Research scientists who study how we communicate on social networks have a name for these people: the “infected.”

11:05 Posted in ICT and complexity, Social Media

Oct 12, 2014

MIT Robotic Cheetah

MIT researchers have developed an algorithm for bounding that they've successfully implemented in a robotic cheetah. (Learn more: http://mitsha.re/1uHoltW)

I am not that impressed by the result though.

22:20 Posted in AI & robotics

New Material May Help Us To Breathe Underwater

Scientists in Denmark announced they have developed a substance that absorbs, stores and releases huge amounts of oxygen.

The substance is so effective that just a few grains are capable of storing enough oxygen for a single human breath while a bucket full of the new material could capture an entire room of O2.

With the new material there are hopes those requiring medical oxygen might soon be freed from carrying bulky tanks, while SCUBA divers might also be able to use the material to absorb oxygen from water, allowing them to stay submerged for significantly longer.

The substance was developed by tinkering with the molecular structure of cobalt, a chemical element that, when found in meteoric iron, resembles a silver-gray metal.

Read More: University of Southern Denmark

21:59 Posted in Blue sky, Research tools

New Scientist on new virtual reality headset Oculus Rift

From New Scientist

The latest prototype of virtual reality headset Oculus Rift allows you to step right into the movies you watch and the games you play

AN OLD man sits across the fire from me, telling a story. An inky dome of star-flecked sky arcs overhead as his words mingle with the crackling of the flames. I am entranced.

This isn't really happening, but it feels as if it is. This is a program called Storyteller – Fireside Tales that runs on the latest version of the Oculus Rift headset, unveiled last month. The audiobook software harnesses the headset's virtual reality capabilities to deepen immersion in the story. (See also "Plot bots")

Fireside Tales is just one example of a new kind of entertainment that delivers convincing true-to-life experiences. Soon films will get a similar treatment.

Movie company 8i, based in Wellington, New Zealand, plans to make films specifically for Oculus Rift. These will be more immersive than just mimicking a real screen in virtual reality because viewers will be able to step inside and explore the movie while they are watching it.

"We are able to synthesise photorealistic views in real-time from positions and directions that were not directly captured," says Eugene d'Eon, chief scientist at 8i. "[Viewers] can not only look around a recorded experience, but also walk or fly. You can re-watch something you love from many different perspectives."

The latest generation of games for Oculus Rift are more innovative, too. Black Hat Oculus is a two-player, cooperative game designed by Mark Sullivan and Adalberto Garza, both graduates of MIT's Game Lab. One headset is for the spy, sneaking through guarded buildings on missions where detection means death. The other player is the overseer, with a God-like view of the world, warning the spy of hidden traps, guards and passageways.

Deep immersion is now possible because the latest Oculus Rift prototype – known as Crescent Bay – finally delivers full positional tracking. This means the images that you see in the headset move in sync with your own movements.

This is the key to unlocking the potential of virtual reality, says Hannes Kaufmann at the Technical University of Vienna in Austria. The headset's high-definition display and wraparound field of view are nice additions, he says, but they aren't essential.

The next step, says Kaufmann, is to allow people to see their own virtual limbs, not just empty space, in the places where their brain expects them to be. That's why Beijing-based motion capture company Perception, which raised more than $500,000 on Kickstarter in September, is working on a full body suit that gathers body pose estimation and gives haptic feedback – a sense of touch – to the wearer. Software like Fireside Tales will then be able to take your body position into account.

In the future, humans will be able to direct live virtual experiences themselves, says Kaufmann. "Imagine you're meeting an alien in the virtual reality, and you want to shake hands. You could have a real person go in there and shake hands with you, but for you only the alien is present."

Oculus, which was bought by Facebook in July for $2 billion, has not yet announced when the headset will be available to buy.

This article appeared in print under the headline "Deep and meaningful"

21:48 Posted in Virtual worlds, Wearable & mobile

Oct 06, 2014

Is the metaverse still alive?

In the last decade, online virtual worlds such as Second Life and the like have become enormously popular. Since their appearance on the technology landscape, many analysts have regarded shared 3D virtual spaces as a disruptive innovation that would render the Web itself obsolete.

This high expectation attracted significant investments from large corporations such as IBM, which started building their virtual spaces and offices in the metaverse. Then, when it became clear that these promises would not be kept, disillusionment set in and virtual worlds started losing their edge. However, this is not a new phenomenon in high-tech: it happens over and over again.

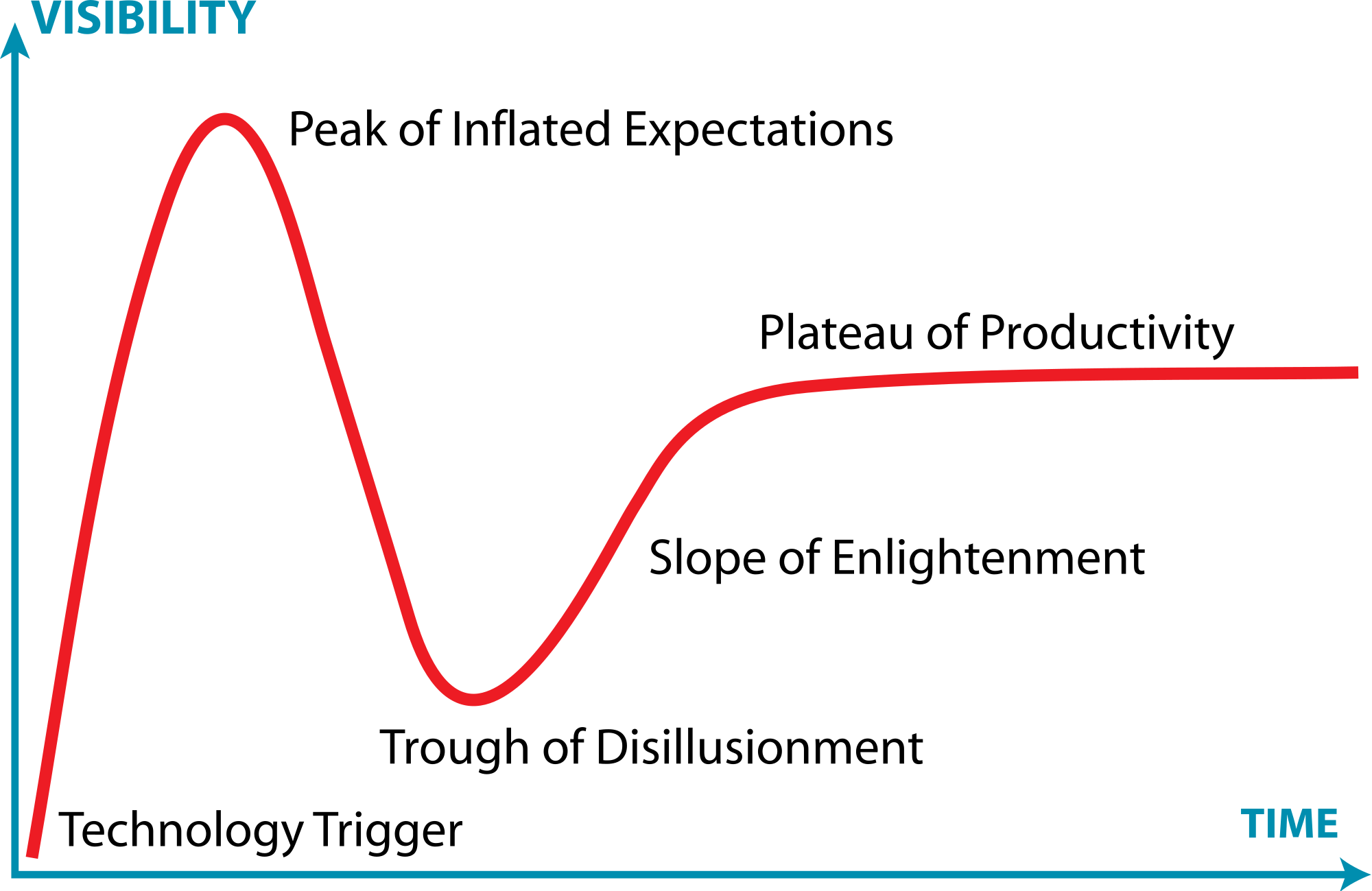

The US consulting company Gartner has developed a very popular model to describe this effect, called the “Hype Cycle”. The Hype Cycle provides a graphic representation of the maturity and adoption of technologies and applications.

It consists of five phases, which show how emerging technologies will evolve.

In the first, “technology trigger” phase, a new technology is launched which attracts the interest of media. This is followed by the “peak of inflated expectations”, characterized by a proliferation of positive articles and comments, which generate excessive expectations among users and stakeholders.

In the next, “trough of disillusionment” phase, these exaggerated expectations are not fulfilled, resulting in a growing number of negative comments generally followed by a progressive indifference.

In the “slope of enlightenment” the technology's potential for further applications becomes more broadly understood and an increasing number of companies start using it.

In the final, “plateau of productivity” stage, the emerging technology establishes itself as an effective tool and mainstream adoption takes off.

So what stage of the hype cycle are virtual worlds in now?

After the 2006-2007 peak, metaverses entered the downward phase of the hype cycle, progressively losing media interest, investments and users. Many high-tech analysts still consider this decline an irreversible process.

However, the negative outlook that drove shared virtual worlds into the trough of disillusionment may soon be reversed. This is thanks to the new interest in virtual reality raised by the Oculus Rift (recently acquired by Facebook for $2 billion), Sony’s Project Morpheus and similar immersive displays, which are still at the takeoff stage in the hype cycle.

Oculus Rift's chief scientist Michael Abrash makes no secret of the fact that his main ambition has always been to build a metaverse such as the one described in Neal Stephenson's 1992 cyberpunk novel Snow Crash. As he writes on the Oculus blog:

"Sometime in 1993 or 1994, I read Snow Crash and for the first time thought something like the Metaverse might be possible in my lifetime."

Furthermore, despite the negative comments and disappointed expectations, the metaverse keeps attracting new users: an infographic released for its 10th anniversary on June 23rd, 2013 reported that Second Life had over 1 million monthly visitors from around the world, more than 400,000 new accounts per month, and 36 million registered users.

So will Michael Abrash’s metaverse dream come true? Even if one looks into the crystal ball of the hype cycle, the answer is not easily found.

22:36 Posted in Blue sky, Serious games, Telepresence & virtual presence, Virtual worlds

Bionic Vision Australia’s Bionic Eye Gives New Sight to People Blinded by Retinitis Pigmentosa

Via Medgadget

Bionic Vision Australia, a collaboration of researchers working on a bionic eye, has announced that its prototype implant has completed a two-year trial in patients with advanced retinitis pigmentosa. Three patients with profound vision loss received 24-channel suprachoroidal electrode implants that caused no noticeable serious side effects. Moreover, though this was not formally part of the study, the patients were able to see more light and able to distinguish shapes that were invisible to them prior to implantation. The newly gained vision allowed them to improve how they navigated around objects and how well they were able to spot items on a tabletop.

The next step is to try out the latest 44-channel device in a clinical trial slated for next year and then move on to a 98-channel system that is currently in development.

“This study is critically important to the continuation of our research efforts and the results exceeded all our expectations,” Professor Mark Hargreaves, Chair of the BVA board, said in a statement. “We have demonstrated clearly that our suprachoroidal implants are safe to insert surgically and cause no adverse events once in place. Significantly, we have also been able to observe that our device prototype was able to evoke meaningful visual perception in patients with profound visual loss.”

Here’s one of the study participants using the bionic eye:

First direct brain-to-brain communication between human subjects

Via KurzweilAI.net

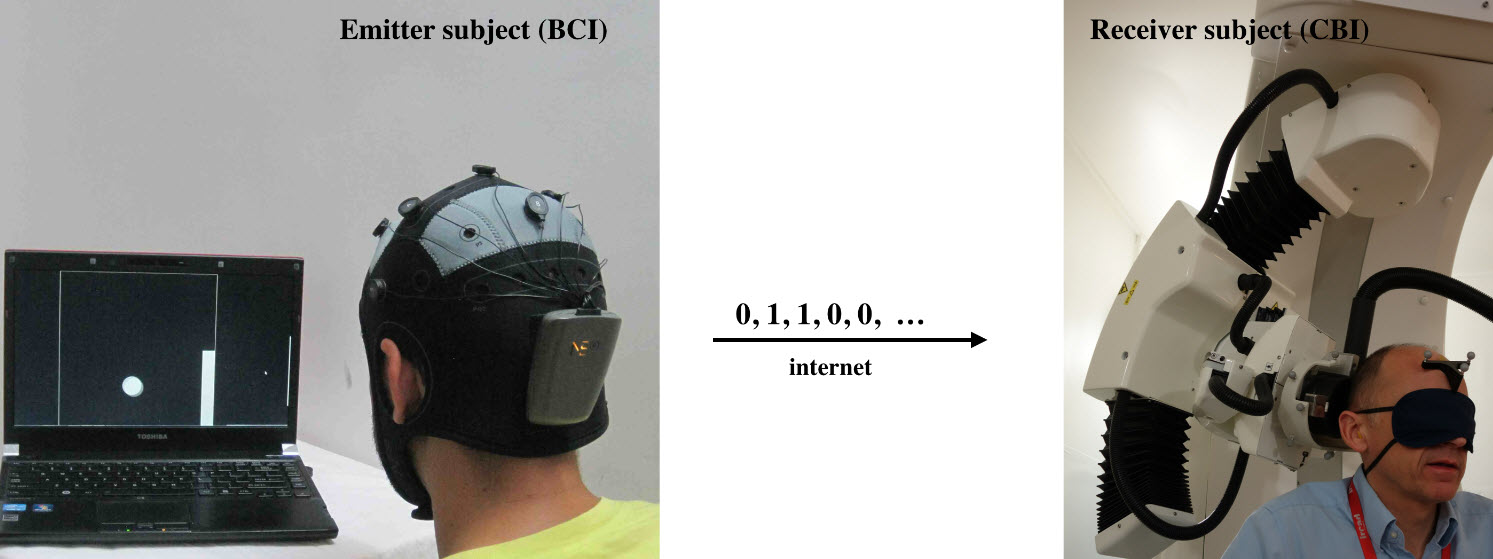

An international team of neuroscientists and robotics engineers has demonstrated the first direct remote brain-to-brain communication between two humans located 5,000 miles away from each other and communicating via the Internet, as reported in a paper recently published in PLOS ONE (open access).

Emitter and receiver subjects with non-invasive devices supporting, respectively, a brain-computer interface (BCI), based on EEG changes, driven by motor imagery (left) and a computer-brain interface (CBI) based on the reception of phosphenes elicited by neuro-navigated TMS (right) (credit: Carles Grau et al./PLoS ONE)

In India, researchers encoded two words (“hola” and “ciao”) as binary strings and presented them as a series of cues on a computer monitor. They recorded the subject’s EEG signals as the subject was instructed to think about moving his feet (binary 0) or hands (binary 1). They then sent the recorded series of binary values in an email message to researchers in France, 5,000 miles away.

There, the binary strings were converted into a series of transcranial magnetic stimulation (TMS) pulses applied to a hotspot location in the right visual occipital cortex that either produced a phosphene (perceived flash of light) or not.
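The encode, transmit and decode chain described above can be sketched in a few lines. This is an illustration only: the post does not say how letters were mapped to bits, so the 5-bit letter code below (a=0 ... z=25) is a hypothetical stand-in, as is the assumption that a perceived phosphene stands for 1 and its absence for 0. Only the feet = 0 / hands = 1 mapping comes from the text.

```python
# Hypothetical 5-bit letter code (a=0 ... z=25); the real study's encoding is not given here.
BITS_PER_LETTER = 5

def encode_word(word: str) -> str:
    """Turn a lowercase a-z word into a binary string, 5 bits per letter."""
    return "".join(format(ord(ch) - ord("a"), f"0{BITS_PER_LETTER}b") for ch in word)

def imagery_cues(bits: str) -> list[str]:
    """Emitter side: map each bit to a motor-imagery cue (0 = feet, 1 = hands)."""
    return ["hands" if b == "1" else "feet" for b in bits]

def decode_phosphenes(seen: list[bool]) -> str:
    """Receiver side: assume phosphene perceived = 1, no phosphene = 0, then rebuild letters."""
    bits = "".join("1" if s else "0" for s in seen)
    chunks = [bits[i:i + BITS_PER_LETTER] for i in range(0, len(bits), BITS_PER_LETTER)]
    return "".join(chr(int(chunk, 2) + ord("a")) for chunk in chunks)

bits = encode_word("hola")
cues = imagery_cues(bits)
# Simulate an error-free channel: every 1 yields a phosphene, every 0 yields none.
received = decode_phosphenes([b == "1" for b in bits])

print(bits)
print(cues[:5])
print(received)
```

A real link would be noisy on both ends (EEG classification and phosphene reporting), so some form of redundancy or error checking would be needed on top of a plain code like this one.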

“We wanted to find out if one could communicate directly between two people by reading out the brain activity from one person and injecting brain activity into the second person, and do so across great physical distances by leveraging existing communication pathways,” explains coauthor Alvaro Pascual-Leone, MD, PhD, Director of the Berenson-Allen Center for Noninvasive Brain Stimulation at Beth Israel Deaconess Medical Center (BIDMC) and Professor of Neurology at Harvard Medical School.

A team of researchers from Starlab Barcelona, Spain and Axilum Robotics, Strasbourg, France conducted the experiment. A second similar experiment was conducted between individuals in Spain and France.

“We believe these experiments represent an important first step in exploring the feasibility of complementing or bypassing traditional language-based or other motor/PNS mediated means in interpersonal communication,” the researchers say in the paper.

“Although certainly limited in nature (e.g., the bit rates achieved in our experiments were modest even by current BCI (brain-computer interface) standards, mostly due to the dynamics of the precise CBI (computer-brain interface) implementation), these initial results suggest new research directions, including the non-invasive direct transmission of emotions and feelings or the possibility of sense synthesis in humans — that is, the direct interface of arbitrary sensors with the human brain using brain stimulation, as previously demonstrated in animals with invasive methods.

Brain-to-brain (B2B) communication system overview. On the left, the BCI subsystem is shown schematically, including electrodes over the motor cortex and the EEG amplifier/transmitter wireless box in the cap. Motor imagery of the feet codes the bit value 0, of the hands codes bit value 1. On the right, the CBI system is illustrated, highlighting the role of coil orientation for encoding the two bit values. Communication between the BCI and CBI components is mediated by the Internet. (Credit: Carles Grau et al./PLoS ONE)

“The proposed technology could be extended to support a bi-directional dialogue between two or more mind/brains (namely, by the integration of EEG and TMS systems in each subject). In addition, we speculate that future research could explore the use of closed mind-loops in which information associated to voluntary activity from a brain area or network is captured and, after adequate external processing, used to control other brain elements in the same subject. This approach could lead to conscious synthetically mediated modulation of phenomena best detected subjectively by the subject, including emotions, pain and psychotic, depressive or obsessive-compulsive thoughts.

“Finally, we anticipate that computers in the not-so-distant future will interact directly with the human brain in a fluent manner, supporting both computer- and brain-to-brain communication routinely. The widespread use of human brain-to-brain technologically mediated communication will create novel possibilities for human interrelation with broad social implications that will require new ethical and legislative responses.”

This work was partly supported by the EU FP7 FET Open HIVE project, the Starlab Kolmogorov project, and the Neurology Department of the Hospital de Bellvitge.

Google Glass can now display captions for hard-of-hearing users

Georgia Institute of Technology researchers have created a speech-to-text Android app for Google Glass that displays captions for hard-of-hearing persons when someone is talking to them in person.

“This system allows wearers like me to focus on the speaker’s lips and facial gestures,” said School of Interactive Computing Professor Jim Foley.

“If hard-of-hearing people understand the speech, the conversation can continue immediately without waiting for the caption. However, if I miss a word, I can glance at the transcription, get the word or two I need and get back into the conversation.”

Captioning on Glass displays captions for the hard-of-hearing (credit: Georgia Tech)

The “Captioning on Glass” app is now available to install from MyGlass. More information here.

Foley and the students are working with the Association of Late Deafened Adults in Atlanta to improve the program. An iPhone app is planned.